Distributed computing is a foundational element of most modern-day computing. It paved the way for processing to be shared across multiple computers and, nowadays, within the cloud. Most technology companies, including IBM, Google, Amazon, and Facebook, use distributed computing to provide highly scalable and reliable computing power for their systems and services. Yet Bill Gates did not invent distributed computing, nor did Steve Jobs. In fact, it was pioneered in the mid-1970s by an unsung hero of computer science, Leslie Lamport. Now aged 73, Leslie Lamport has been recognized with this year’s Turing Award.

From Technology Review:

This year’s winner of the Turing Award—often referred to as the Nobel Prize of computing—was announced today as Leslie Lamport, a computer scientist whose research made possible the development of the large, networked computer systems that power, among other things, today’s cloud and Web services. The Association for Computing Machinery grants the award annually, with an associated prize of $250,000.

Lamport, now 73 and a researcher with Microsoft, was recognized for a series of major breakthroughs that began in the 1970s. He devised algorithms that make it possible for software to function reliably even if it is running on a collection of independent computers or components that suffer from delays in communication or sometimes fail altogether.

That work, within a field now known as distributed computing, remains crucial to the sprawling data centers used by Internet giants, and is also involved in coördinating the multiple cores of modern processors in computers and mobile devices. Lamport talked to MIT Technology Review’s Tom Simonite about why his ideas have lasted.

Why is distributed computing important?

Distribution is not something that you just do, saying “Let’s distribute things.” The question is, “How do you get it to behave coherently?”

My Byzantine Generals work [on making software fault-tolerant, in 1980] came about because I went to SRI and had a contract to build a reliable prototype computer for flying airplanes for NASA. That used multiple computers that could fail, and so there you have a distributed system. Today there are computers in Palo Alto and Beijing and other places, and we want to use them together, so we build distributed systems. Computers with multiple processors inside are also distributed systems.

We no longer use computers like those you worked with in the 1970s and ’80s. Why have your distributed-computing algorithms survived?

Some areas have had enormous changes, but the aspect of things I was looking at, the fundamental notions of synchronization, are the same.

Running multiple processes on a single computer is very different from a set of different computers talking over a relatively slow network, for example. [But] when you’re trying to reason mathematically about their correctness, there’s no fundamental difference between the two systems.

I [developed] Paxos [in 1989] because people at DEC [Digital Equipment Corporation] were building a distributed file system. The Paxos algorithm is very widely used now. Look inside of Bing or Google or Amazon—where they’ve got rooms full of computers, they’ll probably be running an instance of Paxos.
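Lamport mentions Paxos only in passing. For readers curious about what it actually guarantees, here is a minimal single-decree sketch in Python. It is not Lamport's own presentation or any production implementation. It assumes in-memory acceptors, synchronous calls and no lost messages, and the names `Acceptor`, `propose` and the `up` flag (used to simulate a crashed machine) are hypothetical. The idea it shows is that once a majority has accepted a value, any later proposer with a higher ballot must discover and re-propose that same value, so the decision never changes even when some machines fail.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Acceptor:
    """One Paxos acceptor, kept in memory for illustration (hypothetical)."""
    promised: int = -1         # highest ballot this acceptor has promised
    accepted_ballot: int = -1  # ballot of the last value it accepted
    accepted_value: Any = None # last value it accepted, None if none yet
    up: bool = True            # False simulates a crashed acceptor

    def prepare(self, ballot: int):
        """Phase 1b: promise to ignore lower ballots; report any prior acceptance."""
        if not self.up or ballot <= self.promised:
            return None
        self.promised = ballot
        return (self.accepted_ballot, self.accepted_value)

    def accept(self, ballot: int, value: Any) -> bool:
        """Phase 2b: accept unless a higher ballot has already been promised."""
        if not self.up or ballot < self.promised:
            return False
        self.promised = ballot
        self.accepted_ballot = ballot
        self.accepted_value = value
        return True


def propose(acceptors, ballot: int, value: Any) -> Optional[Any]:
    """Run one proposer round; return the chosen value, or None if no majority."""
    quorum = len(acceptors) // 2 + 1
    # Phase 1a: ask every acceptor to promise this ballot.
    promises = [p for p in (a.prepare(ballot) for a in acceptors) if p is not None]
    if len(promises) < quorum:
        return None
    # If some acceptor already accepted a value, adopt the one with the highest ballot.
    prior_ballot, prior_value = max(promises, key=lambda p: p[0])
    if prior_ballot >= 0:
        value = prior_value
    # Phase 2a: ask acceptors to accept the (possibly adopted) value.
    acks = sum(a.accept(ballot, value) for a in acceptors)
    return value if acks >= quorum else None


if __name__ == "__main__":
    cluster = [Acceptor() for _ in range(5)]
    print(propose(cluster, ballot=1, value="A"))  # prints A
    cluster[0].up = cluster[1].up = False         # two of five machines fail
    print(propose(cluster, ballot=2, value="B"))  # prints A: the choice survives
```

Real deployments (Multi-Paxos, as used for replicated logs) add leader election, durable storage of acceptor state and retries with fresh ballots; all of that is omitted from this sketch.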

More recently, you have worked on ways to improve how software is built. What’s wrong with how it’s done now?

People seem to equate programming with coding, and that’s a problem. Before you code, you should understand what you’re doing. If you don’t write down what you’re doing, you don’t know whether you understand it, and you probably don’t if the first thing you write down is code. If you’re trying to build a bridge or house without a blueprint—what we call a specification—it’s not going to be very pretty or reliable. That’s how most code is written. Every time you’ve cursed your computer, you’re cursing someone who wrote a program without thinking about it in advance.

There’s something about the culture of software that has impeded the use of specification. We have a wonderful way of describing things precisely that’s been developed over the last couple of millennia, called mathematics. I think that’s what we should be using as a way of thinking about what we build.

Read the entire story here.

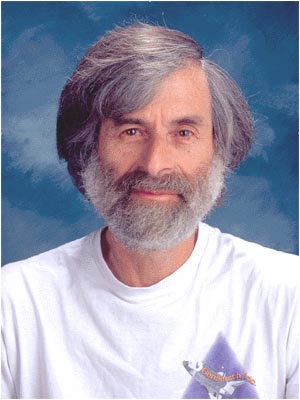

Image: Leslie Lamport, 2005. Courtesy of Wikipedia.

Computer hardware reached (or plummeted to, depending upon your viewpoint) commodity status a while ago. And, of course, some operating system platforms, software and applications have recently followed suit: think Platform as a Service (PaaS) and Software as a Service (SaaS). So it should come as no surprise to see new services arise that try to match supply and demand, and profit in the process. Welcome to the “cloud brokerage”.

More from theSource