The first number, 13.6 billion, is the age in years of the oldest known star in the cosmos. It was discovered recently by astronomers at the Australian National University’s Mount Stromlo SkyMapper Observatory. The star is located in our Milky Way galaxy about 6,000 light-years away. A little closer to home, in Kentucky at the aptly named Creation Museum, the Synchronological Chart places the beginning of time and all things at 4004 BCE.

Interestingly enough, both Australia and Kentucky should not exist according to the flat earth myth, or the widespread pre-Columbus view of a world with an edge at the visible horizon. Yet the evolution versus creationism debate continues unabated. The chasm between the two camps remains a mere 13.6 billion years, give or take a handful of millennia. But over time, those who subscribe to reason and the scientific method are likely to prevail: an apt example of survival of the most adaptable at work.

Hitch, we still miss you!

From Ars Technica:

In 1878, the American scholar and minister Sebastian Adams put the final touches on the third edition of his grandest project: a massive Synchronological Chart that covers nothing less than the entire history of the world in parallel, with the deeds of kings and kingdoms running along together in rows over 25 horizontal feet of paper. When the chart reaches 1500 BCE, its level of detail becomes impressive; at 400 CE it becomes eyebrow-raising; at 1300 CE it enters the realm of the wondrous. No wonder, then, that in their 2013 book Cartographies of Time: A History of the Timeline, authors Daniel Rosenberg and Anthony Grafton call Adams’ chart “nineteenth-century America’s surpassing achievement in complexity and synthetic power… a great work of outsider thinking.”

The chart is also the last thing that visitors to Kentucky’s Creation Museum see before stepping into the gift shop, where full-sized replicas can be purchased for $40.

That’s because, in the world described by the museum, Adams’ chart is more than a historical curio; it remains an accurate timeline of world history. Time is said to have begun in 4004 BCE with the creation of Adam, who went on to live for 930 more years. In 2348 BCE, the Earth was reshaped by a worldwide flood, which created the Grand Canyon and most of the fossil record even as Noah rode out the deluge in an 81,000-ton wooden ark. Pagan practices at the eight-story-high Tower of Babel eventually led God to cause a “confusion of tongues” in 2247 BCE, which is why we speak so many different languages today.

Adams notes on the second panel of the chart that “all the history of man, before the flood, extant, or known to us, is found in the first six chapters of Genesis.”

Ken Ham agrees. Ham, CEO of Answers in Genesis (AIG), has become perhaps the foremost living young Earth creationist in the world. He has authored more books and articles than seems humanly possible and has built AIG into a creationist powerhouse. He also made national headlines when the slickly modern Creation Museum opened in 2007.

He has also been looking for the opportunity to debate a prominent supporter of evolution.

And so it was that, as a severe snow and sleet emergency settled over the Cincinnati region, 900 people climbed into cars and wound their way out toward the airport to enter the gates of the Creation Museum. They did not come for the petting zoo, the zip line, or the seasonal camel rides, nor to see the animatronic Noah chortle to himself about just how easy it had really been to get dinosaurs inside his ark. They did not come to see The Men in White, a 22-minute movie that plays in the museum’s halls in which a young woman named Wendy sees that what she’s been taught about evolution “doesn’t make sense” and is then visited by two angels who help her understand the truth of six-day special creation. They did not come to see the exhibits explaining how all animals had, before the Fall of humanity into sin, been vegetarians.

They came to see Ken Ham debate TV presenter Bill Nye the Science Guy—an old-school creation v. evolution throwdown for the PowerPoint age. Even before it began, the debate had been good for both men. Traffic to AIG’s website soared by 80 percent, Nye appeared on CNN, tickets sold out in two minutes, and post-debate interviews were lined up with Piers Morgan Live and MSNBC.

While plenty of Ham supporters filled the parking lot, so did people in bow ties and “Bill Nye is my Homeboy” T-shirts. They all followed the stamped dinosaur tracks to the museum’s entrance, where a pack of AIG staffers wearing custom debate T-shirts stood ready to usher them into “Discovery Hall.”

Security at the Creation Museum is always tight; the museum’s security force is made up of sworn (but privately funded) Kentucky peace officers who carry guns, wear flat-brimmed state trooper-style hats, and operate their own K-9 unit. For the debate, Nye and Ham had agreed to more stringent measures. Visitors passed through metal detectors complete with secondary wand screenings, packages were prohibited in the debate hall itself, and the outer gates were closed 15 minutes before the debate began.

Inside the hall, packed with bodies and the blaze of high-wattage lights, the temperature soared. The empty stage looked—as everything at the museum does—professionally designed, with four huge video screens, custom debate banners, and a pair of lecterns sporting Mac laptops. Twenty different video crews had set up cameras in the hall, and 70 media organizations had registered to attend. More than 10,000 churches were hosting local debate parties. As AIG technical staffers made final preparations, one checked the YouTube-hosted livestream—242,000 people had already tuned in before start time.

An AIG official took the stage eight minutes before start time. “We know there are people who disagree with each other in this room,” he said. “No cheering or—please—any disruptive behavior.”

At 6:59pm, the music stopped and the hall fell silent but for the suddenly prominent thrumming of the air conditioning. For half a minute, the anticipation was electric, all eyes fixed on the stage, and then the countdown clock ticked over to 7:00pm and the proceedings snapped to life. Nye, wearing his traditional bow tie, took the stage from the left; Ham appeared from the right. The two shook hands in the center to sustained applause, and CNN’s Tom Foreman took up his moderating duties.

Ham had won the coin toss backstage and so stepped to his lectern to deliver brief opening remarks. “Creation is the only viable model of historical science confirmed by observational science in today’s modern scientific era,” he declared, blasting modern textbooks for “imposing the religion of atheism” on students.

“We’re teaching people to think critically!” he said. “It’s the creationists who should be teaching the kids out there.”

And we were off.

Two kinds of science

Digging in the fossil fields of Colorado or North Dakota, scientists regularly uncover the bones of ancient creatures. No one doubts the existence of the bones themselves; they lie on the ground for anyone to observe or weigh or photograph. But in which animal did the bones originate? How long ago did that animal live? What did it look like? One of Ham’s favorite lines is that the past “doesn’t come with tags”—so the prehistory of a stegosaurus thigh bone has to be interpreted by scientists, who use their positions in the present to reconstruct the past.

For mainstream scientists, this is simply an obvious statement of our existential position. Until a real-life Dr. Emmett “Doc” Brown finds a way to power a DeLorean with a 1.21 gigawatt flux capacitor in order to shoot someone back through time to observe the flaring-forth of the Universe, the formation of the Earth, or the origins of life, the prehistoric past can’t be known except by interpretation. Indeed, this isn’t true only of prehistory; as Nye tried to emphasize, forensic scientists routinely use what they know of nature’s laws to reconstruct past events like murders.

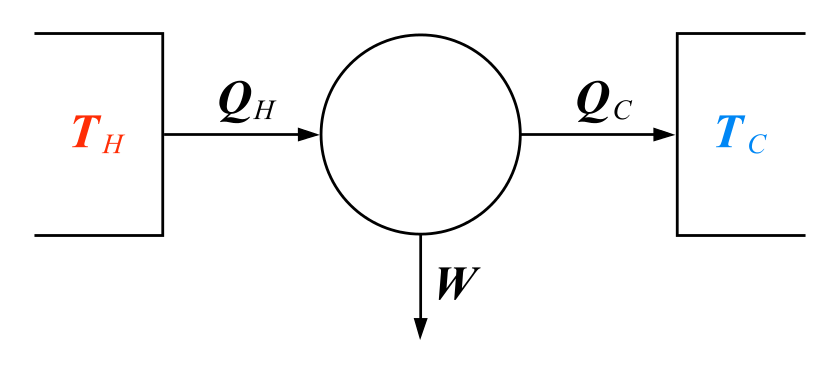

For Ham, though, science is broken into two categories, “observational” and “historical,” and only observational science is trustworthy. In the initial 30-minute presentation of his position, Ham hammered the point home.

“You don’t observe the past directly,” he said. “You weren’t there.”

Ham spoke with the polish of a man who has covered this ground a hundred times before, has heard every objection, and has a smooth answer ready for each one.

When Bill Nye talks about evolution, Ham said, that’s “Bill Nye the Historical Science Guy” speaking—with “historical” being a pejorative term.

In Ham’s world, only changes that we can observe directly are the proper domain of science. Thus, when confronted with the issue of speciation, Ham readily admits that contemporary lab experiments on fast-breeding creatures like mosquitoes can produce new species. But he says that’s simply “micro-evolution” below the family level. He doesn’t believe that scientists can observe “macro-evolution,” such as the alteration of a lobe-finned fish into a tiger over millions of years.

Because they can’t see historical events unfold, scientists must rely on reconstructions of the past. Those might be accurate, but they simply rely on too many “assumptions” for Ham to trust them. When confronted during the debate with evidence from ancient trees that have more rings than there are years on the Adams Synchronological Chart, Ham simply shrugged.

“We didn’t see those layers laid down,” he said.

To him, the calculus of “one ring, one year” is merely an assumption when it comes to the past—an assumption possibly altered by cataclysmic events such as Noah’s flood.

In other words, “historical science” is dubious; we should defer instead to the “observational” account of someone who witnessed all past events: God, said to have left humanity an eyewitness account of the world’s creation in the book of Genesis. All historical reconstructions should thus comport with this more accurate observational account.

Mainstream scientists don’t recognize this divide between observational and historical ways of knowing (much as they reject Ham’s distinction between “micro” and “macro” evolution). Dinosaur bones may not come with tags, but neither does observed contemporary reality—think of a doctor presented with a set of patient symptoms, who then has to interpret what she sees in order to arrive at a diagnosis.

Given that the distinction between two kinds of science provides Ham’s key reason for accepting the “eyewitness account” of Genesis as a starting point, it was unsurprising to see Nye take generous whacks at the idea. You can’t observe the past? “That’s what we do in astronomy,” said Nye in his opening presentation. Since light takes time to get here, “All we can do in astronomy is look at the past. By the way, you’re looking at the past right now.”

Those in the present can study the past with confidence, Nye said, because natural laws are generally constant and can be used to extrapolate into the past.

“This idea that you can separate the natural laws of the past from the natural laws you have now is at the heart of our disagreement,” Nye said. “For lack of a better word, it’s magical. I’ve appreciated magic since I was a kid, but it’s not what we want in mainstream science.”

How do scientists know that these natural laws are correctly understood in all their complexity and interplay? What operates as a check on their reconstructions? That’s where the predictive power of evolutionary models becomes crucial, Nye said. Those models of the past should generate predictions which can then be verified—or disproved—through observations in the present.

Read the entire article here.

Hot on the heels of recent successes by the Texas State Board of Education (SBOE) in revising history and science curricula, legislators in Missouri are planning to redefine commonly accepted scientific principles. Much like the situation in Texas, the Missouri House is seeking to mandate that intelligent design be taught alongside evolution, in equal measure, in all the state’s schools. But in a bid to take the lead in reversing thousands of years of scientific progress, Missouri plans to redefine the actual scientific framework. So, if you can’t make “intelligent design” fit the principles of accepted science, then just change the principles themselves: first up, change the meanings of the terms “scientific hypothesis” and “scientific theory”.

British voters may recall Screaming Lord Sutch, 3rd Earl of Harrow, of the Official Monster Raving Loony Party, who ran in over 40 parliamentary elections during the 1980s and 90s. He never won, but garnered a respectable number of votes and many fans (he was also a musician).

From Smithsonian:

A fascinating article by Nick Lane, a leading researcher into the origins of life. Lane is a Research Fellow at University College London.

It takes no expert neuroscientist, anthropologist or evolutionary biologist to recognize that human evolution has probably stalled. After all, one only needs to observe our obsession with reality TV. Yes, evolution screeched to a halt around 1999, when reality TV hit critical mass in the mainstream public consciousness. So, what of evolution?

In the early 19th century Noah Webster set about redefining written English. His aim was to standardize the spoken word in the fledgling nation and to distinguish American from British usage. In his own words, “as an independent nation, our honor requires us to have a system of our own, in language as well as government.”

In the early 19th century Noah Webster set about re-defining written English. His aim was to standardize the spoken word in the fledgling nation and to distinguish American from British usage. In his own words, “as an independent nation, our honor requires us to have a system of our own, in language as well as government.”