Billions of people have access to the Internet. Whether a significant proportion of them do anything productive with this tremendous resource is open to debate: many prefer only to post pictures of their breakfasts or themselves, or to watch the last minute’s viral video hit.

Despite all these humans clogging up the Tubes of the Internets, most traffic along the information superhighway is in fact not even human. Over 60 percent of all activity comes from computer systems, such as web crawlers, botnets and, increasingly, industrial control systems, ranging from security and monitoring devices to in-home devices such as your thermostat, refrigerator, smart TV, smart toilet and toaster. So, soon Google will know what you eat and when, and your fridge will tell you what you should eat (or not) based on what it knows of your body mass index (BMI) from your bathroom scales.

Jokes aside, the Internet of Things (IoT) promises to herald an even more significant information revolution over the coming decades as all our devices and machines, from home to farm to factory, are connected and inter-connected.

From Ars Technica:

If you believe what the likes of LG and Samsung have been promoting this week at CES, everything will soon be smart. We’ll be able to send messages to our washing machines, run apps on our fridges, and have TVs as powerful as computers. It may be too late to resist this movement, with smart TVs already firmly entrenched in the mid-to-high end market, but resist it we should. That’s because the “Internet of things” stands a really good chance of turning into the “Internet of unmaintained, insecure, and dangerously hackable things.”

These devices will inevitably be abandoned by their manufacturers, and the result will be lots of “smart” functionality—fridges that know what we buy and when, TVs that know what shows we watch—all connected to the Internet 24/7, all completely insecure.

While the value of smart watches or washing machines isn’t entirely clear, at least some smart devices—I think most notably phones and TVs—make sense. The utility of the smartphone, an Internet-connected computer that fits in your pocket, is obvious. The growth of streaming media services means that your antenna or cable box are no longer the sole source of televisual programming, so TVs that can directly use these streaming services similarly have some appeal.

But these smart features make the devices substantially more complex. Your smart TV is not really a TV so much as an all-in-one computer that runs Android, WebOS, or some custom operating system of the manufacturer’s invention. And where once it was purely a device for receiving data over a coax cable, it’s now equipped with bidirectional networking interfaces, exposing the Internet to the TV and the TV to the Internet.

The result is a whole lot of exposure to security problems. Even if we assume that these devices ship with no known flaws—a questionable assumption in and of itself if SOHO routers are anything to judge by—a few months or years down the line, that will no longer be the case. Flaws and insecurities will be uncovered, and the software components of these smart devices will need to be updated to address those problems. They’ll need these updates for the lifetime of the device, too. Old software is routinely vulnerable to newly discovered flaws, so there’s no point in any reasonable timeframe at which it’s OK to stop updating the software.

In addition to security, there’s also a question of utility. Netflix and Hulu may be hot today, but that may not be the case in five years’ time. New services will arrive; old ones will die out. Even if the service lineup remains the same, its underlying technology is unlikely to be static. In the future, Netflix, for example, might want to deprecate old APIs and replace them with new ones; Netflix apps will need to be updated to accommodate the changes. I can envision changes such as replacing the H.264 codec with H.265 (for reduced bandwidth and/or improved picture quality), which would similarly require updated software.

To remain useful, app platforms need up-to-date apps. As such, for your smart device to remain safe, secure, and valuable, it needs a lifetime of software fixes and updates.

A history of non-existent updates

Herein lies the problem, because if there’s one thing that companies like Samsung have demonstrated in the past, it’s a total unwillingness to provide a lifetime of software fixes and updates. Even smartphones, which are generally assumed to have a two-year lifecycle (with replacements driven by cheap or “free” contract-subsidized pricing), rarely receive updates for the full two years (Apple’s iPhone being the one notable exception).

A typical smartphone bought today will remain useful and usable for at least three years, but its system software support will tend to dry up after just 18 months.

This isn’t surprising, of course. Samsung doesn’t make any money from making your two-year-old phone better. Samsung makes its money when you buy a new Samsung phone. Improving the old phones with software updates would cost money, and that tends to limit sales of new phones. For Samsung, it’s lose-lose.

Our fridges, cars, and TVs are not even on a two-year replacement cycle. Even if you do replace your TV after it’s a couple years old, you probably won’t throw the old one away. It will just migrate from the living room to the master bedroom, and then from the master bedroom to the kids’ room. Likewise, it’s rare that a three-year-old car is simply consigned to the scrap heap. It’s given away or sold off for a second, third, or fourth “life” as someone else’s primary vehicle. Your fridge and washing machine will probably be kept until they blow up or you move houses.

These are all durable goods, kept for the long term without any equivalent to the smartphone carrier subsidy to promote premature replacement. If they’re going to be smart, software-powered devices, they’re going to need software lifecycles that are appropriate to their longevity.

That costs money, it requires a commitment to providing support, and it does little or nothing to promote sales of the latest and greatest devices. In the software world, there are companies that provide this level of support—the Microsofts and IBMs of the world—but it tends to be restricted to companies that have at least one eye on the enterprise market. In the consumer space, you’re doing well if you’re getting updates and support five years down the line. Consumer software fixes a decade later are rare, especially if there’s no system of subscriptions or other recurring payments to monetize the updates.

Of course, the companies building all these products have the perfect solution. Just replace all our stuff every 18-24 months. Fridge no longer getting updated? Not a problem. Just chuck out the still perfectly good fridge you have and buy a new one. This is, after all, the model that they already depend on for smartphones. Of course, it’s not really appropriate even to smartphones (a mid/high-end phone bought today will be just fine in three years), much less to stuff that will work well for 10 years.

These devices will be abandoned by their manufacturers, and it’s inevitable that they are abandoned long before they cease to be useful.

Superficially, this might seem to be no big deal. Sure, your TV might be insecure, but your NAT router will probably provide adequate protection, and while it wouldn’t be tremendously surprising to find that it has some passwords for online services or other personal information on it, TVs are sufficiently diverse that people are unlikely to expend too much effort targeting specific models.

Read the entire story here.

Image: A classically styled chrome two-slot automatic electric toaster. Courtesy of Wikipedia.

Advances in quantum physics and in the associated realm of quantum information promise to revolutionize computing. Imagine a computer several trillion times faster than present-day supercomputers. Well, that’s where we are heading.

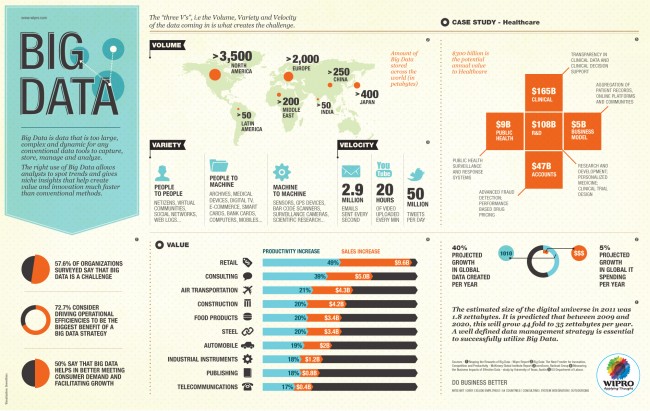

We excerpt an interview with big data pioneer and computer scientist, Alex Pentland, via the Edge. Pentland is a leading thinker in computational social science and currently directs the Human Dynamics Laboratory at MIT.

For the first time scientists have built a computer software model of an entire organism from its molecular building blocks. This allows the model to predict previously unobserved cellular biological processes and behaviors. While the organism in question is a simple bacterium, this represents another huge advance in computational biology.

The practical science behind quantum computers continues to make exciting progress. Quantum computers promise, in theory, immense gains in power and speed through the use of atomic scale parallel processing.

One wonders what the world would look like today had Alan Turing been criminally prosecuted and jailed by the British government for his homosexuality before the Second World War, rather than in 1952. Would the British have been able to break German Naval ciphers encoded by their Enigma machine? Would the German Navy have prevailed, and would the Nazis have gone on to conquer the British Isles?


Last week on October 8, 2011, Dennis Ritchie passed away. Most of the mainstream media failed to report his death; after all, he was never quite as flamboyant as another technology darling, Steve Jobs. However, his contributions to the worlds of technology and computer science should certainly place him in the same club.
