It does indeed appear that a computer armed with Google’s experimental AI (artificial intelligence) software just beat a grandmaster of the strategy board game Go. The game was devised in ancient China — it’s been around for several millennia. Go is commonly held to be substantially more difficult than chess to master, to which I can personally attest.

So, does this mean that the human race is next in line for a defeat at the hands of an uber-intelligent AI? Well, not really, not yet anyway.

But I side with the prominent scientists and entrepreneurs, including Stephen Hawking, Bill Gates and Elon Musk, who warn of the long-term existential peril to humanity from unfettered AI. In the meantime, check out how AlphaGo from Google’s DeepMind unit set about thrashing a human.

From Wired:

An artificially intelligent Google machine just beat a human grandmaster at the game of Go, the 2,500-year-old contest of strategy and intellect that’s exponentially more complex than the game of chess. And Nick Bostrom isn’t exactly impressed.

Bostrom is the Swedish-born Oxford philosophy professor who rose to prominence on the back of his recent bestseller Superintelligence: Paths, Dangers, Strategies, a book that explores the benefits of AI, but also argues that a truly intelligent computer could hasten the extinction of humanity. It’s not that he discounts the power of Google’s Go-playing machine. He just argues that it isn’t necessarily a huge leap forward. The technologies behind Google’s system, Bostrom points out, have been steadily improving for years, including much-discussed AI techniques such as deep learning and reinforcement learning. Google beating a Go grandmaster is just part of a much bigger arc. It started long ago, and it will continue for years to come.

“There has been, and there is, a lot of progress in state-of-the-art artificial intelligence,” Bostrom says. “[Google’s] underlying technology is very much continuous with what has been under development for the last several years.”

But if you look at this another way, it’s exactly why Google’s triumph is so exciting—and perhaps a little frightening. Even Bostrom says it’s a good excuse to stop and take a look at how far this technology has come and where it’s going. Researchers once thought AI would struggle to crack Go for at least another decade. Now, it’s headed to places that once seemed unreachable. Or, at least, there are many people—with much power and money at their disposal—who are intent on reaching those places.

…

Building a Brain

Google’s AI system, known as AlphaGo, was developed at DeepMind, the AI research house that Google acquired for $400 million in early 2014. DeepMind specializes in both deep learning and reinforcement learning, technologies that allow machines to learn largely on their own.

…

Using what are called neural networks (networks of hardware and software that approximate the web of neurons in the human brain), deep learning is what drives the remarkably effective image search tool built into Google Photos, not to mention the face recognition service on Facebook, the language translation tool built into Microsoft’s Skype, and the system that identifies porn on Twitter. If you feed millions of game moves into a deep neural net, you can teach it to play a video game.

…

Reinforcement learning takes things a step further. Once you’ve built a neural net that’s pretty good at playing a game, you can match it against itself. As two versions of this neural net play thousands of games against each other, the system tracks which moves yield the highest reward—that is, the highest score—and in this way, it learns to play the game at an even higher level.
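
To make the self-play idea concrete, here is a minimal sketch (not part of the Wired piece, and not DeepMind's code). It assumes a far simpler game than Go: a pile of sticks from which players alternately take one to three, with whoever takes the last stick winning. It also swaps the neural net for a plain lookup table, and uses a basic Q-learning style update rather than anything AlphaGo is known to use. The names `train_self_play`, `greedy_move` and `legal_moves` are made up for illustration.

```python
import random

MAX_TAKE = 3  # assumed toy rule: take 1 to 3 sticks; taking the last stick wins


def legal_moves(pile):
    """Moves available to the player facing `pile` sticks."""
    return range(1, min(MAX_TAKE, pile) + 1)


def greedy_move(q, pile):
    """Pick the move with the highest learned value (unseen moves count as 0)."""
    return max(legal_moves(pile), key=lambda move: q.get((pile, move), 0.0))


def train_self_play(max_pile=12, games=20000, epsilon=0.3, step=0.5, seed=0):
    """Learn move values by letting one table play both sides of every game."""
    rng = random.Random(seed)
    q = {}  # (pile, move) -> estimated reward for the player making the move
    for _ in range(games):
        pile = rng.randint(1, max_pile)  # random starts so every pile gets practice
        while pile > 0:
            # Mostly play the best known move, sometimes explore a random one.
            if rng.random() < epsilon:
                move = rng.choice(list(legal_moves(pile)))
            else:
                move = greedy_move(q, pile)
            remaining = pile - move
            if remaining == 0:
                target = 1.0  # taking the last stick wins: reward +1
            else:
                # Otherwise the opponent moves next; what is good for them
                # is bad for us, so flip the sign of their best known value.
                target = -max(q.get((remaining, m), 0.0)
                              for m in legal_moves(remaining))
            old = q.get((pile, move), 0.0)
            q[(pile, move)] = old + step * (target - old)
            pile = remaining  # the "other version" of the player moves next
    return q


if __name__ == "__main__":
    table = train_self_play()
    for pile in (5, 6, 7, 10):
        print(pile, "sticks -> take", greedy_move(table, pile))
```

In this toy game the known winning strategy is to leave your opponent a multiple of four sticks, and after enough self-play the table should settle on exactly that, without ever being told the rule.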

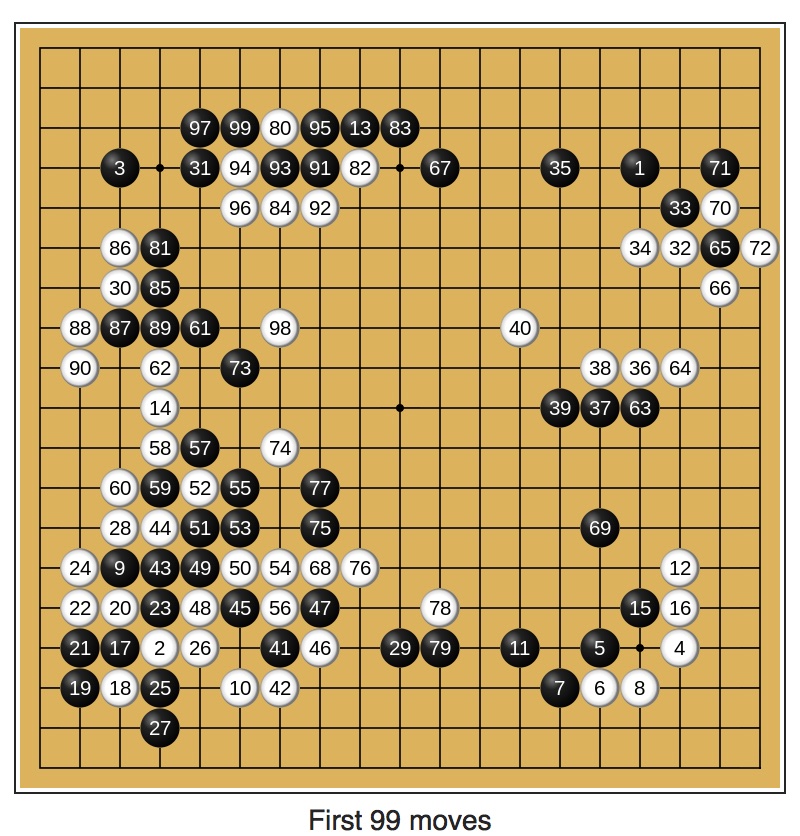

AlphaGo uses all this. And then some. Hassabis [Demis Hassabis, DeepMind co-founder] and his team added a second level of “deep reinforcement learning” that looks ahead to the long-term results of each move. And they lean on traditional AI techniques that have driven Go-playing AI in the past, including the Monte Carlo tree search method, which basically plays out a huge number of scenarios to their eventual conclusions. Drawing from techniques both new and old, they built a system capable of beating a top professional player. In October, AlphaGo played a closed-door match against the reigning three-time European Go champion, which was only revealed to the public on Wednesday morning. The match spanned five games, and AlphaGo won all five.
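
The "plays out a huge number of scenarios" idea can be sketched in a few lines. What follows is only the simplest relative of Monte Carlo tree search, sometimes called flat Monte Carlo: it tries each candidate move, finishes the game many times with purely random play, and keeps the move that won most often. Full tree search also grows a tree and steers playouts toward promising branches, which is omitted here. The game is an assumed toy (take one to three sticks, last stick wins), not Go, and the function names are hypothetical.

```python
import random

MAX_TAKE = 3  # assumed toy rule: take 1 to 3 sticks; taking the last stick wins


def legal_moves(pile):
    """Moves available to the player facing `pile` sticks."""
    return list(range(1, min(MAX_TAKE, pile) + 1))


def random_playout(pile, rng):
    """Finish the game with random moves; True if the player to move wins."""
    mover_is_first = True
    while True:
        pile -= rng.choice(legal_moves(pile))
        if pile == 0:
            return mover_is_first  # whoever just moved took the last stick
        mover_is_first = not mover_is_first


def estimate_win_rates(pile, playouts=2000, seed=0):
    """Estimate our win rate for each move by sampling random continuations."""
    rng = random.Random(seed)
    rates = {}
    for move in legal_moves(pile):
        remaining = pile - move
        if remaining == 0:
            rates[move] = 1.0  # this move takes the last stick and wins outright
            continue
        # After our move the opponent is the player to move, so every
        # playout the mover wins counts as a loss for us.
        losses = sum(random_playout(remaining, rng) for _ in range(playouts))
        rates[move] = 1.0 - losses / playouts
    return rates


def best_move(pile, playouts=2000, seed=0):
    """Choose the move with the highest estimated win rate."""
    rates = estimate_win_rates(pile, playouts, seed)
    return max(rates, key=rates.get)


if __name__ == "__main__":
    for pile in (5, 6, 7):
        print(pile, "sticks:", estimate_win_rates(pile), "-> take", best_move(pile))
```

Random playouts are a crude judge of a position, which is why a system like the one described pairs them with learned networks that suggest which moves are worth exploring in the first place.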

Read the entire story here.

Image: Korean couple, in traditional dress, play Go; photograph dated between 1910 and 1920. Courtesy: Frank and Frances Carpenter Collection. Public Domain.