History reminds us of those critical events that pose threats to us on various levels: to our well-being at a narrow level and to the foundations of our democracies at a much broader level. And most of these existential threats seem to come from the outside: wars, terrorism, ethnic cleansing.

But it’s not quite that simple: the biggest threats come not from external sources of evil, but from within us. Perhaps the two most significant are our apathy and paranoia. Taken together they erode our duty to protect our democracy, and hand over ever-increasing power to those who claim to protect us. Thus, before the Nazi machine enslaved huge portions of Europe, the citizens of Germany allowed it to gain power; before Al-Qaeda and ISIS and their terrorist lookalikes gained notoriety, local conditions allowed these groups to flourish. We are all complicit in our inaction, driven by indifference or fear, or both.

Two timely events serve to remind us of the huge costs and consequences of our inaction from apathy and paranoia: one from the not-too-distant past, the other a portent of our future. First, it is Victory in Europe (VE) Day, the anniversary of the Allied win in WWII, on May 8, 1945. Many millions perished through the brutal policies of the Nazi ideology and its instrument, the Wehrmacht, and millions more subsequently perished in the fight to restore moral order. Much of Europe first ignored the growing threat of the national socialists. As the threat grew, Europe continued to contemplate appeasement. Only later, as the true scale of the atrocities became apparent, did leaders realize that the threat needed to be tackled head-on.

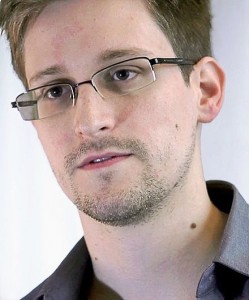

Second, a federal appeals court in the United States ruled on May 7, 2015 that the National Security Agency’s collection of millions of phone records is illegal. This serves to remind us of the threat that our own governments pose to our fundamental freedoms under the promise of continued comfort and security. For those who truly care about the fragility of democracy this is a momentous and rightful ruling. It is all the more remarkable that since the calamitous events of September 11, 2001, few have challenged this governmental overreach into our private lives: our phone calls, our movements, our internet surfing habits, our credit card history. We have seen few public demonstrations and all too little ongoing debate. Indeed, only through the recent revelations by Edward Snowden did the debate even enter the media cycle. And the debate is only just beginning.

Both of these events show that only we, the people who are fortunate enough to live within a democracy, can choose a path that strengthens our governmental institutions and balances these against our fundamental rights. By corollary we can choose a path that weakens our institutions too. One path requires engagement and action against those who use fear to make us conform. The other path, often easier, requires that we do nothing, accept the status quo, curl up in the comfort of our cocoons and give in to fear.

So this is why the appeals court ruling is so important. While only three in number, the judges have established that our government has been acting illegally, yet supposedly on our behalf. While the judges did not terminate the unlawful program, they pointedly asked the US Congress to debate and then define laws that would be narrower and less at odds with citizens’ constitutional rights. So, the courts have done us all a great favor. One can only hope that this opens the eyes, ears and mouths of the apathetic and fearful so that they continuously demand fair and considered action from their elected representatives. Only then can we begin to make inroads against the real and insidious threats to our democracy: our apathy and our fear. And perhaps, also, Mr. Snowden can take a small helping of solace.

From the Guardian:

The US court of appeals has ruled that the bulk collection of telephone metadata is unlawful, in a landmark decision that clears the way for a full legal challenge against the National Security Agency.

A panel of three federal judges for the second circuit overturned an earlier ruling that the controversial surveillance practice first revealed to the US public by NSA whistleblower Edward Snowden in 2013 could not be subject to judicial review.

But the judges also waded into the charged and ongoing debate over the reauthorization of a key Patriot Act provision currently before US legislators. That provision, which the appeals court ruled the NSA program surpassed, will expire on 1 June amid gridlock in Washington on what to do about it.

The judges opted not to end the domestic bulk collection while Congress decides its fate, calling judicial inaction “a lesser intrusion” on privacy than at the time the case was initially argued.

“In light of the asserted national security interests at stake, we deem it prudent to pause to allow an opportunity for debate in Congress that may (or may not) profoundly alter the legal landscape,” the judges ruled.

But they also sent a tacit warning to Senator Mitch McConnell, the Republican leader in the Senate who is pushing to re-authorize the provision, known as Section 215, without modification: “There will be time then to address appellants’ constitutional issues.”

“We hold that the text of section 215 cannot bear the weight the government asks us to assign to it, and that it does not authorize the telephone metadata program,” concluded their judgment.

“Such a monumental shift in our approach to combating terrorism requires a clearer signal from Congress than a recycling of oft-used language long held in similar contexts to mean something far narrower,” the judges added.

“We conclude that to allow the government to collect phone records only because they may become relevant to a possible authorized investigation in the future fails even the permissive ‘relevance’ test.

“We agree with appellants that the government’s argument is ‘irreconcilable with the statute’s plain text’.”

Image: Edward Snowden. Courtesy of Wikipedia.