The practical science behind quantum computers continues to make exciting progress. Quantum computers promise, in theory, immense gains in power and speed through the use of atomic-scale parallel processing.

[div class=attrib]From the Observer:[end-div]

The reality of the universe in which we live is an outrage to common sense. Over the past 100 years, scientists have been forced to abandon a theory in which the stuff of the universe constitutes a single, concrete reality in exchange for one in which a single particle can be in two (or more) places at the same time. This is the universe as revealed by the laws of quantum physics and it is a model we are forced to accept – we have been battered into it by the weight of the scientific evidence. Without it, we would not have discovered and exploited the tiny switches present in their billions on every microchip, in every mobile phone and computer around the world. The modern world is built using quantum physics: through its technological applications in medicine, global communications and scientific computing it has shaped the world in which we live.

Although modern computing relies on the fidelity of quantum physics, the action of those tiny switches remains firmly in the domain of everyday logic. Each switch can be either “on” or “off”, and computer programs are implemented by controlling the flow of electricity through a network of wires and switches: the electricity flows through closed switches and is blocked by open switches. The result is a plethora of extremely useful devices that process information in a fantastic variety of ways.

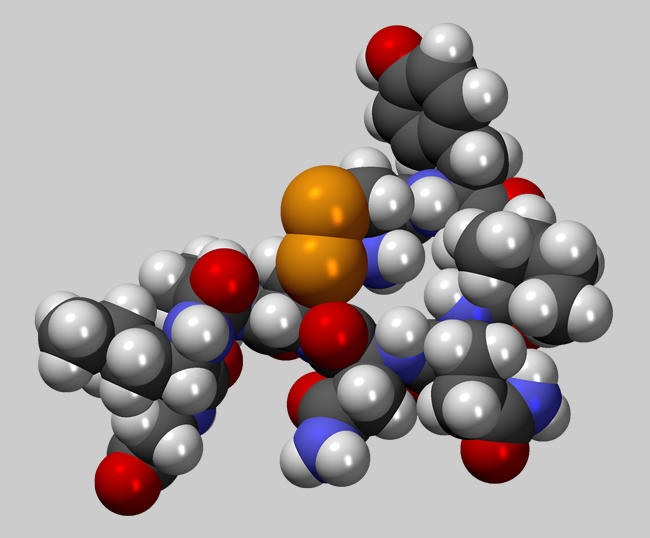

Modern “classical” computers seem to have almost limitless potential – there is so much we can do with them. But there is an awful lot we cannot do with them too. There are problems in science that are of tremendous importance but which we have no hope of solving, not ever, using classical computers. The trouble is that some problems require so much information processing that there simply aren’t enough atoms in the universe to build a switch-based computer to solve them. This isn’t an esoteric matter of mere academic interest – classical computers can’t ever hope to model the behaviour of some systems that contain even just a few tens of atoms. This is a serious obstacle to those who are trying to understand the way molecules behave or how certain materials work – without the possibility to build computer models they are hampered in their efforts. One example is the field of high-temperature superconductivity. Certain materials are able to conduct electricity “for free” at surprisingly high temperatures (still pretty cold, though, at well below -100 degrees Celsius). The trouble is, nobody really knows how they work and that seriously hinders any attempt to make a commercially viable technology. The difficulty in simulating physical systems of this type arises whenever quantum effects are playing an important role and that is the clue we need to identify a possible way to make progress.

It was American physicist Richard Feynman who, in 1981, first recognised that nature evidently does not need to employ vast computing resources to manufacture complicated quantum systems. That means if we can mimic nature then we might be able to simulate these systems without the prohibitive computational cost. Simulating nature is already done every day in science labs around the world – simulations allow scientists to play around in ways that cannot be realised in an experiment, either because the experiment would be too difficult or expensive or even impossible. Feynman’s insight was that simulations that inherently include quantum physics from the outset have the potential to tackle those otherwise impossible problems.

Quantum simulations have, in the past year, really taken off. The ability to delicately manipulate and measure systems containing just a few atoms is a requirement of any attempt at quantum simulation and it is thanks to recent technical advances that this is now becoming possible. Most recently, in an article published in the journal Nature last week, physicists from the US, Australia and South Africa have teamed up to build a device capable of simulating a particular type of magnetism that is of interest to those who are studying high-temperature superconductivity. Their simulator is esoteric. It is a small pancake-like layer less than 1 millimetre across made from 300 beryllium atoms that is delicately disturbed using laser beams… and it paves the way for future studies into quantum magnetism that will be impossible using a classical computer.

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image: A crystal of beryllium ions confined by a large magnetic field at the US National Institute of Standards and Technology’s quantum simulator. The outermost electron of each ion is a quantum bit (qubit), and here they are fluorescing blue, which indicates they are all in the same state. Photograph courtesy of Britton/NIST, Observer.[end-div]

An insightful opinion on the benefits and perils of nanotechnology from essayist and naturalist, Diane Ackerman.

May the Fourth was Star Wars Day. Why? Say, “May the Fourth” slowly while pretending to lisp slightly, and you’ll understand. Appropriately, Matt Cresswen over at the Guardian took this day to review the growing Jedi religion in the UK.

Google has been variously praised and derided for its corporate mantra, “Don’t Be Evil”. For those who like to believe that Google has good intentions, recent events strain that belief. The company was found to have been snooping on and collecting data from personal Wi-Fi routers. Is this a case of a lone wolf or a corporate strategy?

[div class=attrib]From Scientific American:[end-div]

A small, but growing, idea in theoretical physics and cosmology is that spacetime may be emergent. That is, spacetime emerges from something much more fundamental, in much the same way that our perception of temperature emerges from the motion and characteristics of underlying particles.

Peter Ludlow, professor of philosophy at Northwestern University, has authored a number of fascinating articles on the philosophy of language and linguistics. Here he discusses his view of language as a dynamic, living organism. Literalists take note.

Alfred Hitchcock was a pioneer of modern cinema. His finely crafted movies introduced audiences to new levels of suspense, sexuality and violence. His work raised cinema to the level of great art.

Some online videos and stories are seen by tens or hundreds of millions, yet others never see the light of day. Advertisers and reality star wannabes search daily for the secret sauce that determines the huge success of one internet meme over many others. However, much to the frustration of the many agents of the “next big thing”, several fascinating new studies point to nothing more than simple randomness.

[div class=attrib]From Slate:[end-div]

[div class=attrib]From the Wall Street Journal:[end-div]

The tension between science, religion and politics that began several millennia ago continues unabated.

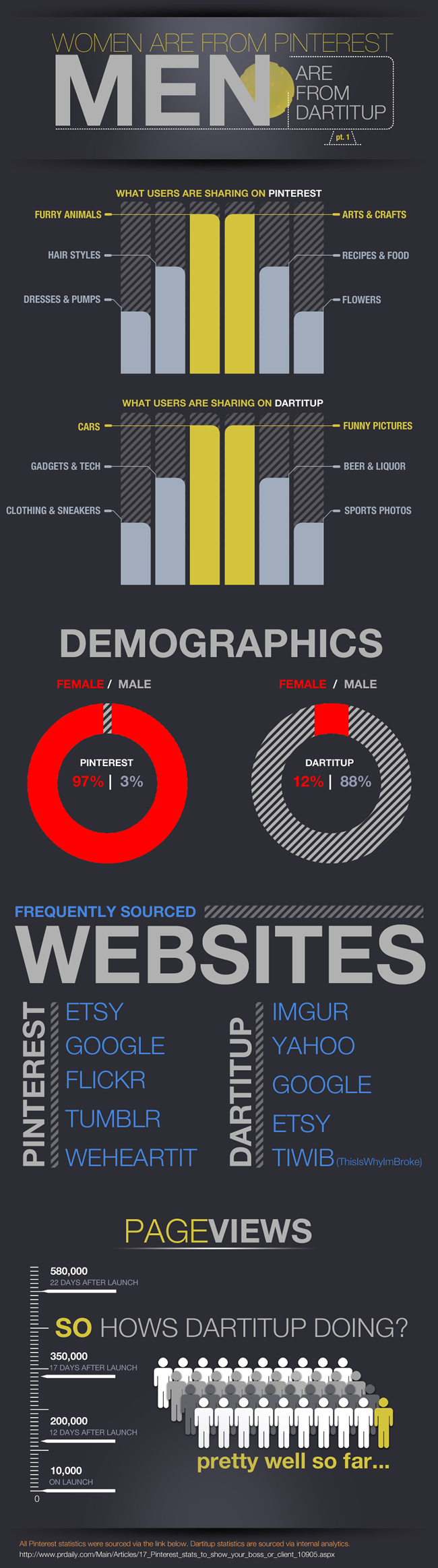

The old maxim used to go something like, “you are what you eat”. Well, in the early 21st century it has been usurped by, “you are what you share online (knowingly or not)”.