[div class=attrib]From The New York Times:[end-div]

Robert Rauschenberg, the irrepressibly prolific American artist who time and again reshaped art in the 20th century, died on Monday night at his home on Captiva Island, Fla. He was 82.

The cause was heart failure, said Arne Glimcher, chairman of PaceWildenstein, the Manhattan gallery that represents Mr. Rauschenberg.

Mr. Rauschenberg’s work gave new meaning to sculpture. “Canyon,” for instance, consisted of a stuffed bald eagle attached to a canvas. “Monogram” was a stuffed goat girdled by a tire atop a painted panel. “Bed” entailed a quilt, sheet and pillow, slathered with paint, as if soaked in blood, framed on the wall. All became icons of postwar modernism.

A painter, photographer, printmaker, choreographer, onstage performer, set designer and, in later years, even a composer, Mr. Rauschenberg defied the traditional idea that an artist stick to one medium or style. He pushed, prodded and sometimes reconceived all the mediums in which he worked.

Building on the legacies of Marcel Duchamp, Kurt Schwitters, Joseph Cornell and others, he helped obscure the lines between painting and sculpture, painting and photography, photography and printmaking, sculpture and photography, sculpture and dance, sculpture and technology, technology and performance art — not to mention between art and life.

Mr. Rauschenberg was also instrumental in pushing American art onward from Abstract Expressionism, the dominant movement when he emerged, during the early 1950s. He became a transformative link between artists like Jackson Pollock and Willem de Kooning and those who came next, artists identified with Pop, Conceptualism, Happenings, Process Art and other new kinds of art in which he played a signal role.

No American artist, Jasper Johns once said, invented more than Mr. Rauschenberg. Mr. Johns, John Cage, Merce Cunningham and Mr. Rauschenberg, without sharing exactly the same point of view, collectively defined this new era of experimentation in American culture.

Apropos of Mr. Rauschenberg, Cage once said, “Beauty is now underfoot wherever we take the trouble to look.” Cage meant that people had come to see, through Mr. Rauschenberg’s efforts, not just that anything, including junk on the street, could be the stuff of art (this wasn’t itself new), but that it could be the stuff of an art aspiring to be beautiful — that there was a potential poetics even in consumer glut, which Mr. Rauschenberg celebrated.

“I really feel sorry for people who think things like soap dishes or mirrors or Coke bottles are ugly,” he once said, “because they’re surrounded by things like that all day long, and it must make them miserable.”

The remark reflected the optimism and generosity of spirit that Mr. Rauschenberg became known for. His work was likened to a St. Bernard: uninhibited and mostly good-natured. He could be the same way in person. When he became rich, he gave millions of dollars to charities for women, children, medical research, other artists and Democratic politicians.

A brash, garrulous, hard-drinking, open-faced Southerner, he had a charm and peculiar Delphic felicity with language that masked a complex personality and an equally multilayered emotional approach to art, which evolved as his stature did. Having begun by making quirky, small-scale assemblages out of junk he found on the street in downtown Manhattan, he spent increasing time in his later years, after he had become successful and famous, on vast international, ambassadorial-like projects and collaborations.

Conceived in his immense studio on the island of Captiva, off southwest Florida, these projects were of enormous size and ambition; for many years he worked on one that grew literally to exceed the length of its title, “The 1/4 Mile or 2 Furlong Piece.” They generally did not live up to his earlier achievements. Even so, he maintained an equanimity toward the results. Protean productivity went along with risk, he felt, and risk sometimes meant failure.

The process — an improvisatory, counterintuitive way of doing things — was always what mattered most to him. “Screwing things up is a virtue,” he said when he was 74. “Being correct is never the point. I have an almost fanatically correct assistant, and by the time she re-spells my words and corrects my punctuation, I can’t read what I wrote. Being right can stop all the momentum of a very interesting idea.”

This attitude also inclined him, as the painter Jack Tworkov once said, “to see beyond what others have decided should be the limits of art.”

He “keeps asking the question — and it’s a terrific question philosophically, whether or not the results are great art,” Mr. Tworkov said, “and his asking it has influenced a whole generation of artists.”

A Wry, Respectful Departure

That generation was the one that broke from Pollock and company. Mr. Rauschenberg maintained a deep but mischievous respect for Abstract Expressionist heroes like de Kooning and Barnett Newman. Famously, he once painstakingly erased a drawing by de Kooning, an act both of destruction and devotion. Critics regarded the all-black paintings and all-red paintings he made in the early 1950s as spoofs of de Kooning and Pollock. The paintings had roiling, bubbled surfaces made from scraps of newspapers embedded in paint.

But these were just as much homages as they were parodies. De Kooning, himself a parodist, had incorporated bits of newspapers in pictures, and Pollock stuck cigarette butts to canvases.

Mr. Rauschenberg’s “Automobile Tire Print,” from the early 1950s — resulting from Cage’s driving an inked tire of a Model A Ford over 20 sheets of white paper — poked fun at Newman’s famous “zip” paintings.

At the same time, Mr. Rauschenberg was expanding on Newman’s art. The tire print transformed Newman’s zip — an abstract line against a monochrome backdrop with spiritual pretensions — into an artifact of everyday culture, which for Mr. Rauschenberg had its own transcendent dimension.

Mr. Rauschenberg frequently alluded to cars and spaceships, even incorporating real tires and bicycles into his art. This partly reflected his own restless, peripatetic imagination. The idea of movement was logically extended when he took up dance and performance.

There was, beneath this, a darkness to many of his works, notwithstanding their irreverence. “Bed” (1955) was gothic. The all-black paintings were solemn and shuttered. The red paintings looked charred, with strips of fabric akin to bandages, from which paint dripped like blood. “Interview” (1955), which resembled a cabinet or closet with a door, enclosing photos of bullfighters, a pinup, a Michelangelo nude, a fork and a softball, suggested some black-humored encoded erotic message.

There were many other images of downtrodden and lonely people, rapt in thought; pictures of ancient frescoes, out of focus as if half remembered; photographs of forlorn, neglected sites; bits and pieces of faraway places conveying a kind of nostalgia or remoteness. In bringing these things together, the art implied consolation.

Mr. Rauschenberg, who knew that not everybody found it easy to grasp the open-endedness of his work, once described to the writer Calvin Tomkins an encounter with a woman who had reacted skeptically to “Monogram” (1955-59) and “Bed” in his 1963 retrospective at the Jewish Museum, one of the events that secured Mr. Rauschenberg’s reputation: “To her, all my decisions seemed absolutely arbitrary — as though I could just as well have selected anything at all — and therefore there was no meaning, and that made it ugly.

[div class=attrib]More from theSource here.[end-div]

[div class=attrib]From Discover:[end-div]

The earthquake in central Italy last week zeroed in on the beautiful medieval hill town of L’Aquila. It claimed the lives of 294 young and old, injured several thousand more, and made tens of thousands homeless. This is a heart-wrenching human tragedy. It’s also a cultural one. The quake razed centuries of L’Aquila’s historical buildings, broke the foundations of many of the town’s churches and public spaces, destroyed countless cultural artifacts, and forever buried much of the town’s irreplaceable art under tons of twisted iron and fractured stone.

[div class=attrib]From Discover:[end-div]

Graham Fleming sits down at an L-shaped lab bench, occupying a footprint about the size of two parking spaces. Alongside him, a couple of off-the-shelf lasers spit out pulses of light just millionths of a billionth of a second long. After snaking through a jagged path of mirrors and lenses, these minuscule flashes disappear into a smoky black box containing proteins from green sulfur bacteria, which ordinarily obtain their energy and nourishment from the sun. Inside the black box, optics manufactured to billionths-of-a-meter precision detect something extraordinary: Within the bacterial proteins, dancing electrons make seemingly impossible leaps and appear to inhabit multiple places at once.

[div class=attrib]By Andrew Sullivan for the Atlantic[end-div]

[div class=attrib]From Eurozine:[end-div]

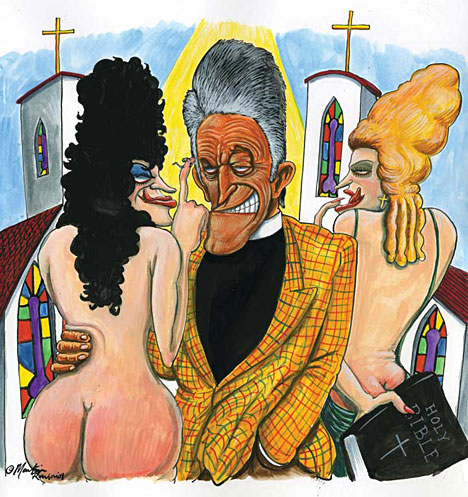

Robert Rauschenberg
