Americans love their apocalypses. So, should demise come at the hands of a natural catastrophe, hastened by human (in)action, or should it come courtesy of an engineered biological or nuclear disaster? You choose. Isn’t this so much fun, thinking about absolute extinction?

Ira Chernus, Professor of Religious Studies at the University of Colorado at Boulder, brings us a much-needed scholarly account of our love affairs with all things apocalyptic. But our fascination with Armageddon, often driven by hope, does nothing to resolve the ultimate conundrum: regardless of the type of ending, it is unlikely that Bruce Willis will feature in it.

From TomDispatch / Salon:

Wherever we Americans look, the threat of apocalypse stares back at us.

Two clouds of genuine doom still darken our world: nuclear extermination and environmental extinction. If they got the urgent action they deserve, they would be at the top of our political priority list.

But they have a hard time holding our attention, crowded out as they are by a host of new perils also labeled “apocalyptic”: mounting federal debt, the government’s plan to take away our guns, corporate control of the Internet, the Comcast-Time Warner mergerocalypse, Beijing’s pollution airpocalypse, the American snowpocalypse, not to speak of earthquakes and plagues. The list of topics, thrown at us with abandon from the political right, left, and center, just keeps growing.

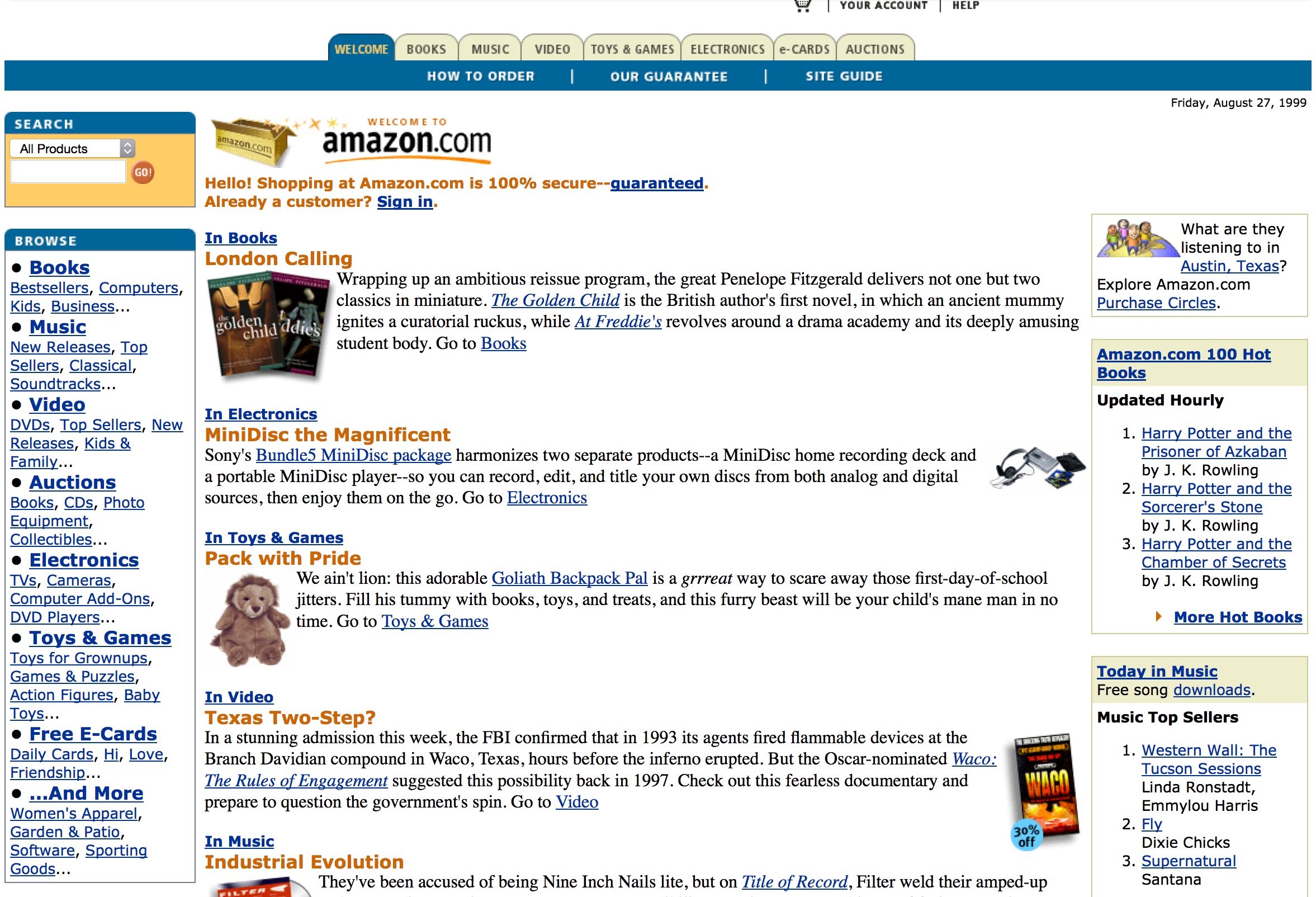

Then there’s the world of arts and entertainment where selling the apocalypse turns out to be a rewarding enterprise. Check out the website “Romantically Apocalyptic,” Slash’s album “Apocalyptic Love,” or the history-lite documentary “Viking Apocalypse” for starters. These days, mathematicians even have an “apocalyptic number.”

Yes, the A-word is now everywhere, and most of the time it no longer means “the end of everything,” but “the end of anything.” Living a life so saturated with apocalypses undoubtedly takes a toll, though it’s a subject we seldom talk about.

So let’s lift the lid off the A-word, take a peek inside, and examine how it affects our everyday lives. Since it’s not exactly a pretty sight, it’s easy enough to forget that the idea of the apocalypse has been a container for hope as well as fear. Maybe even now we’ll find some hope inside if we look hard enough.

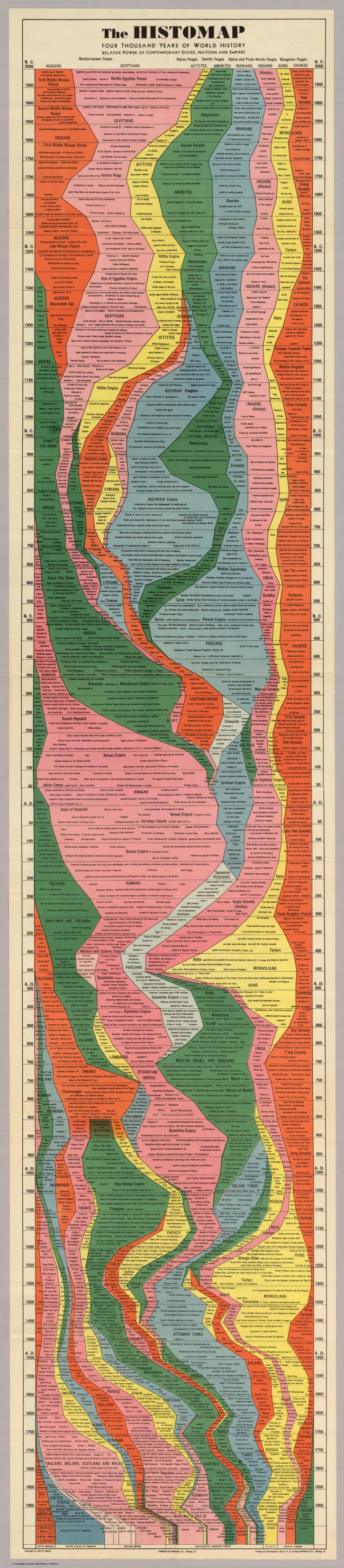

A Brief History of Apocalypse

Apocalyptic stories have been around at least since biblical times, if not earlier. They show up in many religions, always with the same basic plot: the end is at hand; the cosmic struggle between good and evil (or God and the Devil, as the New Testament has it) is about to culminate in catastrophic chaos, mass extermination, and the end of the world as we know it.

That, however, is only Act I, wherein we wipe out the past and leave a blank cosmic slate in preparation for Act II: a new, infinitely better, perhaps even perfect world that will arise from the ashes of our present one. It’s often forgotten that religious apocalypses, for all their scenes of destruction, are ultimately stories of hope; and indeed, they have brought it to millions who had to believe in a better world a-comin’, because they could see nothing hopeful in this world of pain and sorrow.

That traditional religious kind of apocalypse has also been part and parcel of American political life since, in Common Sense, Tom Paine urged the colonies to revolt by promising, “We have it in our power to begin the world over again.”

When World War II, itself now sometimes called an apocalypse, ushered in the nuclear age, it brought a radical transformation to the idea. Just as novelist Kurt Vonnegut lamented that the threat of nuclear war had robbed us of “plain old death” (each of us dying individually, mourned by those who survived us), the theologically educated lamented the fate of religion’s plain old apocalypse.

After this country’s “victory weapon” obliterated two Japanese cities in August 1945, most Americans sighed with relief that World War II was finally over. Few, however, believed that a permanently better world would arise from the radioactive ashes of that war. In the 1950s, even as the good times rolled economically, America’s nuclear fear created something historically new and ominous — a thoroughly secular image of the apocalypse. That’s the one you’ll get first if you type “define apocalypse” into Google’s search engine: “the complete final destruction of the world.” In other words, one big “whoosh” and then… nothing. Total annihilation. The End.

Apocalypse as utter extinction was a new idea. Surprisingly soon, though, most Americans were (to adapt the famous phrase of filmmaker Stanley Kubrick) learning how to stop worrying and get used to the threat of “the big whoosh.” With the end of the Cold War, concern over a world-ending global nuclear exchange essentially evaporated, even if the nuclear arsenals of that era were left ominously in place.

Meanwhile, another kind of apocalypse was gradually arising: environmental destruction so complete that it, too, would spell the end of all life.

This would prove to be brand new in a different way. It is, as Todd Gitlin has so aptly termed it, history’s first “slow-motion apocalypse.” Climate change, as it came to be called, had been creeping up on us “in fits and starts,” largely unnoticed, for two centuries. Since it was so different from what Gitlin calls a “suddenly surging Genesis-style flood” or the familiar “attack out of the blue,” it presented a baffling challenge. After all, the word apocalypse had been around for a couple of thousand years or more without ever being associated in any meaningful way with the word gradual.

The eminent historian of religions Mircea Eliade once speculated that people could grasp nuclear apocalypse because it resembled Act I in humanity’s huge stock of apocalypse myths, where the end comes in a blinding instant — even if Act II wasn’t going to follow. This mythic heritage, he suggested, remains lodged in everyone’s unconscious, and so feels familiar.

But in a half-century of studying the world’s myths, past and present, he had never found a single one that depicted the end of the world coming slowly. This means we have no unconscious imaginings to pair it with, nor any cultural tropes or traditions that would help us in our struggle to grasp it.

That makes it so much harder for most of us even to imagine an environmentally caused end to life. The very category of “apocalypse” doesn’t seem to apply. Without those apocalyptic images and fears to motivate us, a sense of the urgent action needed to avert such a slowly emerging global catastrophe lessens.

All of that (plus of course the power of the interests arrayed against regulating the fossil fuel industry) might be reason enough to explain the widespread passivity that puts the environmental peril so far down on the American political agenda. But as Dr. Seuss would have said, that is not all! Oh no, that is not all.

Apocalypses Everywhere

When you do that Google search on apocalypse, you’ll also get the most fashionable current meaning of the word: “Any event involving destruction on an awesome scale; [for example] ‘a stock market apocalypse.’” Welcome to the age of apocalypses everywhere.

With so many constantly crying apocalyptic wolf or selling apocalyptic thrills, it’s much harder now to distinguish between genuine threats of extinction and the cheap imitations. The urgency, indeed the very meaning, of apocalypse continues to be watered down in such a way that the word stands in danger of becoming virtually meaningless. As a result, we find ourselves living in an era that constantly reflects premonitions of doom, yet teaches us to look away from the genuine threats of world-ending catastrophe.

Oh, America still worries about the Bomb — but only when it’s in the hands of some “bad” nation. Once that meant Iraq (even if that country, under Saddam Hussein, never had a bomb and in 2003, when the Bush administration invaded, didn’t even have a bomb program). Now, it means Iran — another country without a bomb or any known plan to build one, but with the apocalyptic stare focused on it as if it already had an arsenal of such weapons — and North Korea.

These days, in fact, it’s easy enough to pin the label “apocalyptic peril” on just about any country one loathes, even while ignoring friends, allies, and oneself. We’re used to new apocalyptic threats emerging at a moment’s notice, with little (or no) scrutiny of whether the A-word really applies.

What’s more, the Cold War era fixed a simple equation in American public discourse: bad nation + nuclear weapon = our total destruction. So it’s easy to buy the platitude that Iran must never get a nuclear weapon or it’s curtains. That leaves little pressure on top policymakers and pundits to explain exactly how a few nuclear weapons held by Iran could actually harm Americans.

Meanwhile, there’s little attention paid to the world’s largest nuclear arsenal, right here in the U.S. Indeed, America’s nukes are quite literally impossible to see, hidden as they are underground, under the seas, and under the wraps of “top secret” restrictions. Who’s going to worry about what can’t be seen when so many dangers termed “apocalyptic” seem to be in plain sight?

Environmental perils are among them: melting glaciers and open-water Arctic seas, smog-blinded Chinese cities, increasingly powerful storms, and prolonged droughts. Yet most of the time such perils seem far away and like someone else’s troubles. Even when dangers in nature come close, they generally don’t fit the images in our apocalyptic imagination. Not surprisingly, then, voices proclaiming the inconvenient truth of a slowly emerging apocalypse get lost in the cacophony of apocalypses everywhere. Just one more set of boys crying wolf and so remarkably easy to deny or stir up doubt about.

Death in Life

Why does American culture use the A-word so promiscuously? Perhaps we’ve been living so long under a cloud of doom that every danger now readily takes on the same lethal hue.

Psychiatrist Robert Lifton predicted such a state years ago when he suggested that the nuclear age had put us all in the grips of what he called “psychic numbing” or “death in life.” We can no longer assume that we’ll die Vonnegut’s plain old death and be remembered as part of an endless chain of life. Lifton’s research showed that the link between death and life had become, as he put it, a “broken connection.”

As a result, he speculated, our minds stop trying to find the vitalizing images necessary for any healthy life. Every effort to form new mental images only conjures up more fear that the chain of life itself is coming to a dead end. Ultimately, we are left with nothing but “apathy, withdrawal, depression, despair.”

If that’s the deepest psychic lens through which we see the world, however unconsciously, it’s easy to understand why anything and everything can look like more evidence that The End is at hand. No wonder we have a generation of American youth and young adults who take a world filled with apocalyptic images for granted.

Think of it as, in some grim way, a testament to human resiliency. They are learning how to live with the only reality they’ve ever known (and with all the irony we’re capable of, others are learning how to sell them cultural products based on that reality). Naturally, they assume it’s the only reality possible. It’s no surprise that “The Walking Dead,” a zombie apocalypse series, is their favorite TV show, since it reveals (and revels in?) what one TV critic called the “secret life of the post-apocalyptic American teenager.”

Perhaps the only thing that should genuinely surprise us is how many of those young people still manage to break through psychic numbing in search of some way to make a difference in the world.

Yet even in the political process for change, apocalypses are everywhere. Regardless of the issue, the message is typically some version of “Stop this catastrophe now or we’re doomed!” (An example: Stop the Keystone XL pipeline or it’s “game over”!) A better future is often implied between the lines, but seldom gets much attention because it’s ever harder to imagine such a future, much less believe in it.

No matter how righteous the cause, however, such a single-minded focus on danger and doom subtly reinforces the message of our era of apocalypses everywhere: abandon all hope, ye who live here and now.

Read the entire article here.

Image: Armageddon movie poster. Courtesy of Touchstone Pictures.
