Important new research suggests that traumatic memories can be rewritten. Timing is critical.

From Technology Review:

It was a Saturday night at the New York Psychoanalytic Institute, and the second-floor auditorium held an odd mix of gray-haired, cerebral Upper East Side types and young, scruffy downtown grad students in black denim. Up on the stage, neuroscientist Daniela Schiller, a riveting figure with her long, straight hair and impossibly erect posture, paused briefly from what she was doing to deliver a mini-lecture about memory.

She explained how recent research, including her own, has shown that memories are not unchanging physical traces in the brain. Instead, they are malleable constructs that may be rebuilt every time they are recalled. The research suggests, she said, that doctors (and psychotherapists) might be able to use this knowledge to help patients block the fearful emotions they experience when recalling a traumatic event, converting chronic sources of debilitating anxiety into benign trips down memory lane.

And then Schiller went back to what she had been doing, which was providing a slamming, rhythmic beat on drums and backup vocals for the Amygdaloids, a rock band composed of New York City neuroscientists. During their performance at the institute’s second annual “Heavy Mental Variety Show,” the band blasted out a selection of its greatest hits, including songs about cognition (“Theory of My Mind”), memory (“A Trace”), and psychopathology (“Brainstorm”).

“Just give me a pill,” Schiller crooned at one point, during the chorus of a song called “Memory Pill.” “Wash away my memories …”

The irony is that if research by Schiller and others holds up, you may not even need a pill to strip a memory of its power to frighten or oppress you.

Schiller, 40, has been in the vanguard of a dramatic reassessment of how human memory works at the most fundamental level. Her current lab group at Mount Sinai School of Medicine, her former colleagues at New York University, and a growing army of like-minded researchers have marshaled a pile of data to argue that we can alter the emotional impact of a memory by adding new information to it or recalling it in a different context. This hypothesis challenges 100 years of neuroscience and overturns cultural touchstones from Marcel Proust to best-selling memoirs. It changes how we think about the permanence of memory and identity, and it suggests radical nonpharmacological approaches to treating pathologies like post-traumatic stress disorder, other fear-based anxiety disorders, and even addictive behaviors.

In a landmark 2010 paper in Nature, Schiller (then a postdoc at New York University) and her NYU colleagues, including Joseph E. LeDoux and Elizabeth A. Phelps, published the results of human experiments indicating that memories are reshaped and rewritten every time we recall an event. And, the research suggested, if mitigating information about a traumatic or unhappy event is introduced within a narrow window of opportunity after its recall—during the few hours it takes for the brain to rebuild the memory in the biological brick and mortar of molecules—the emotional experience of the memory can essentially be rewritten.

“When you affect emotional memory, you don’t affect the content,” Schiller explains. “You still remember perfectly. You just don’t have the emotional memory.”

Fear training

The idea that memories are constantly being rewritten is not entirely new. Experimental evidence to this effect dates back at least to the 1960s. But mainstream researchers tended to ignore the findings for decades because they contradicted the prevailing scientific theory about how memory works.

That view began to dominate the science of memory at the beginning of the 20th century. In 1900, two German scientists, Georg Elias Müller and Alfons Pilzecker, conducted a series of human experiments at the University of Göttingen. Their results suggested that memories were fragile at the moment of formation but were strengthened, or consolidated, over time; once consolidated, these memories remained essentially static, permanently stored in the brain like a file in a cabinet from which they could be retrieved when the urge arose.

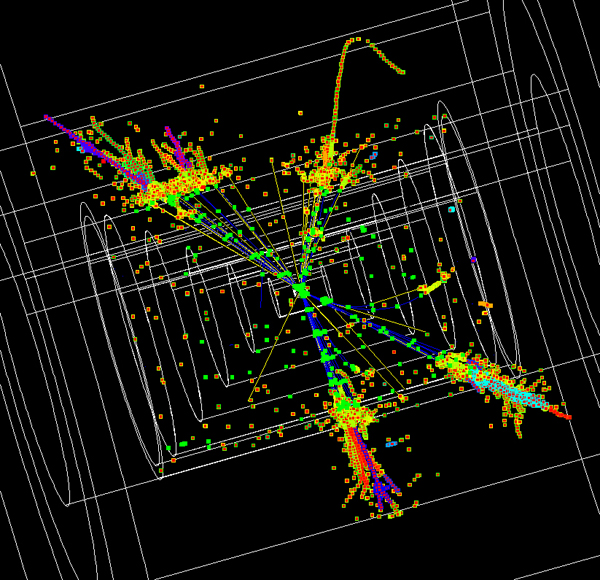

It took decades of painstaking research for neuroscientists to tease apart a basic mechanism of memory to explain how consolidation occurred at the level of neurons and proteins: an experience entered the neural landscape of the brain through the senses, was initially “encoded” in a central brain apparatus known as the hippocampus, and then migrated—by means of biochemical and electrical signals—to other precincts of the brain for storage. A famous chapter in this story was the case of “H.M.,” a young man whose hippocampus was removed during surgery in 1953 to treat debilitating epileptic seizures; although physiologically healthy for the remainder of his life (he died in 2008), H.M. was never again able to create new long-term memories, other than to learn new motor skills.

Subsequent research also made clear that there is no single thing called memory but, rather, different types of memory that achieve different biological purposes using different neural pathways. “Episodic” memory refers to the recollection of specific past events; “procedural” memory refers to the ability to remember specific motor skills like riding a bicycle or throwing a ball; fear memory, a particularly powerful form of emotional memory, refers to the immediate sense of distress that comes from recalling a physically or emotionally dangerous experience. Whatever the memory, however, the theory of consolidation argued that it was an unchanging neural trace of an earlier event, fixed in long-term storage. Whenever you retrieved the memory, whether it was triggered by an unpleasant emotional association or by the seductive taste of a madeleine, you essentially fetched a timeless narrative of an earlier event. Humans, in this view, were the sum total of their fixed memories. As recently as 2000 in Science, in a review article titled “Memory—A Century of Consolidation,” James L. McGaugh, a leading neuroscientist at the University of California, Irvine, celebrated the consolidation hypothesis for the way that it “still guides” fundamental research into the biological process of long-term memory.

As it turns out, Proust wasn’t much of a neuroscientist, and consolidation theory couldn’t explain everything about memory. This became apparent during decades of research into what is known as fear training.

Schiller gave me a crash course in fear training one afternoon in her Mount Sinai lab. One of her postdocs, Dorothee Bentz, strapped an electrode onto my right wrist in order to deliver a mild but annoying shock. She also attached sensors to several fingers on my left hand to record my galvanic skin response, a measure of physiological arousal and fear. Then I watched a series of images—blue and purple cylinders—flash by on a computer screen. It quickly became apparent that the blue cylinders often (but not always) preceded a shock, and my skin conductivity readings reflected what I’d learned. Every time I saw a blue cylinder, I became anxious in anticipation of a shock. The “learning” took no more than a couple of minutes, and Schiller pronounced my little bumps of anticipatory anxiety, charted in real time on a nearby monitor, a classic response of fear training. “It’s exactly the same as in the rats,” she said.

In the 1960s and 1970s, several research groups used this kind of fear memory in rats to detect cracks in the theory of memory consolidation. In 1968, for example, Donald J. Lewis of Rutgers University led a study showing that you could make the rats lose the fear associated with a memory if you gave them a strong electroconvulsive shock right after they were induced to retrieve that memory; the shock produced an amnesia about the previously learned fear. Giving a shock to animals that had not retrieved the memory, in contrast, did not cause amnesia. In other words, a strong shock timed to occur immediately after a memory was retrieved seemed to have a unique capacity to disrupt the memory itself and allow it to be reconsolidated in a new way. Follow-up work in the 1980s confirmed some of these observations, but they lay so far outside mainstream thinking that they barely received notice.

Moment of silence

At the time, Schiller was oblivious to these developments. A self-described skateboarding “science geek,” she grew up in Rishon LeZion, Israel’s fourth-largest city, on the coastal plain a few miles southeast of Tel Aviv. She was the youngest of four children of a mother from Morocco and a “culturally Polish” father from Ukraine—“a typical Israeli melting pot,” she says. As a tall, fair-skinned teenager with European features, she recalls feeling estranged from other neighborhood kids because she looked so German.

Schiller remembers exactly when her curiosity about the nature of human memory began. She was in the sixth grade, and it was the annual Holocaust Memorial Day in Israel. For a school project, she asked her father about his memories as a Holocaust survivor, and he shrugged off her questions. She was especially puzzled by her father’s behavior at 11 a.m., when a simultaneous eruption of sirens throughout Israel signals the start of a national moment of silence. While everyone else in the country stood up to honor the victims of genocide, he stubbornly remained seated at the kitchen table as the sirens blared, drinking his coffee and reading the newspaper.

“The Germans did something to my dad, but I don’t know what because he never talks about it,” Schiller told a packed audience in 2010 at The Moth, a storytelling event.

During her compulsory service in the Israeli army, she organized scientific and educational conferences, which led to studies in psychology and philosophy at Tel Aviv University; during that same period, she procured a set of drums and formed her own Hebrew rock band, the Rebellion Movement. Schiller went on to receive a PhD in psychobiology from Tel Aviv University in 2004. That same year, she recalls, she saw the movie Eternal Sunshine of the Spotless Mind, in which a young man undergoes treatment with a drug that erases all memories of a former girlfriend and their painful breakup. Schiller heard (mistakenly, it turns out) that the premise of the movie had been based on research conducted by Joe LeDoux, and she eventually applied to NYU for a postdoctoral fellowship.

In science as in memory, timing is everything. Schiller arrived in New York just in time for the second coming of memory reconsolidation in neuroscience.

Altering the story

The table had been set for Schiller’s work on memory modification in 2000, when Karim Nader, a postdoc in LeDoux’s lab, suggested an experiment testing the effect of a drug on the formation of fear memories in rats. LeDoux told Nader in no uncertain terms that he thought the idea was a waste of time and money. Nader did the experiment anyway. It ended up getting published in Nature and sparked a burst of renewed scientific interest in memory reconsolidation (see “Manipulating Memory,” May/June 2009).

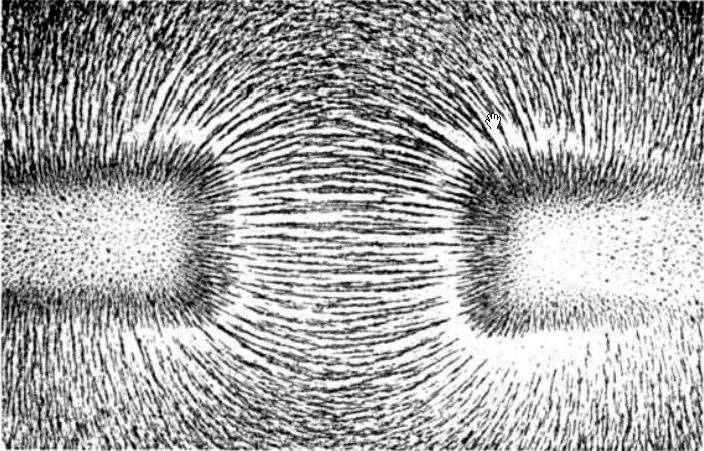

The rats had undergone classic fear training—in an unpleasant twist on Pavlovian conditioning, they had learned to associate an auditory tone with an electric shock. But right after the animals retrieved the fearsome memory (the researchers knew they had done so because they froze when they heard the tone), Nader injected a drug that blocked protein synthesis directly into their amygdala, the part of the brain where fear memories are believed to be stored. Surprisingly, that appeared to pave over the fearful association. The rats no longer froze in fear of the shock when they heard the sound cue.

Decades of research had established that long-term memory consolidation requires the synthesis of proteins in the brain’s memory pathways, but no one knew that protein synthesis was required after the retrieval of a memory as well—which implied that the memory was being consolidated then, too. Nader’s experiments also showed that blocking protein synthesis prevented the animals from recalling the fearsome memory only if they received the drug at the right time, shortly after they were reminded of the fearsome event. If Nader waited six hours before giving the drug, it had no effect and the original memory remained intact. This was a big biochemical clue that at least some forms of memories essentially had to be neurally rewritten every time they were recalled.

When Schiller arrived at NYU in 2005, she was asked by Elizabeth Phelps, who was spearheading memory research in humans, to extend Nader’s findings and test the potential of a drug to block fear memories. The drug used in the rodent experiment was much too toxic for human use, but a class of antianxiety drugs known as beta-adrenergic antagonists (or, in common parlance, “beta blockers”) had potential; among these drugs was propranolol, which had previously been approved by the FDA for the treatment of panic attacks and stage fright. Schiller immediately set out to test the effect of propranolol on memory in humans, but she never actually performed the experiment because of prolonged delays in getting institutional approval for what was then a pioneering form of human experimentation. “It took four years to get approval,” she recalls, “and then two months later, they took away the approval again. My entire postdoc was spent waiting for this experiment to be approved.” (“It still hasn’t been approved!” she adds.)

While waiting for the approval that never came, Schiller began to work on a side project that turned out to be even more interesting. It grew out of an offhand conversation with a colleague about some anomalous data described at a meeting of LeDoux’s lab: a group of rats “didn’t behave as they were supposed to” in a fear experiment, Schiller says.

The data suggested that a fear memory could be disrupted in animals even without the use of a drug that blocked protein synthesis. Schiller used the kernel of this idea to design a set of fear experiments in humans, while Marie-H. Monfils, a member of the LeDoux lab, simultaneously pursued a parallel line of experimentation in rats. In the human experiments, volunteers were shown a blue square on a computer screen and then given a shock. Once the blue square was associated with an impending shock, the fear memory was in place. Schiller went on to show that if she repeated the sequence that produced the fear memory the following day but broke the association within a narrow window of time—that is, showed the blue square without delivering the shock—this new information was incorporated into the memory.

Here, too, the timing was crucial. If the blue square that wasn’t followed by a shock was shown within 10 minutes of the initial memory recall, the human subjects reconsolidated the memory without fear. If it happened six hours later, the initial fear memory persisted. Put another way, intervening during the brief window when the brain was rewriting its memory offered a chance to revise the initial memory itself while diminishing the emotion (fear) that came with it. By mastering the timing, the NYU group had essentially created a scenario in which humans could rewrite a fearsome memory and give it an unfrightening ending. And this new ending was robust: when Schiller and her colleagues called their subjects back into the lab a year later, they were able to show that the fear associated with the memory was still blocked.

The study, published in Nature in 2010, made clear that reconsolidation of memory didn’t occur only in rats.

Read the entire article here.