Hot on the heels of the recent research finding that the Mediterranean diet improves heart health comes news that chocoholics the world over have been anxiously awaiting: chocolate improves brain function.

Researchers have found that chocolate rich in compounds known as flavanols can improve cognitive function. Now, before you rush out the door to the local grocery store to buy a mountain of Mars bars (perhaps not coincidentally, Mars, Inc., partly funded the study), Godiva pralines, Cadbury Flakes or a slab of Dove, take note that not all chocolate is created equal. Flavanols are found in the highest concentrations in raw cocoa. In fact, the process of making most chocolate, including the dark kind, removes or destroys most of the flavanols. Perhaps the silver lining here is that to replicate the dose of flavanols found to have a positive effect on brain function, you would have to eat 10 to 20 bars of chocolate per day for eight weeks. This may be good news for your brain, but not your waistline!

[div class=attrib]From Scientific American:[end-div]

It’s news chocolate lovers have been craving: raw cocoa may be packed with brain-boosting compounds. Researchers at the University of L’Aquila in Italy, with scientists from Mars, Inc., and their colleagues published findings last September that suggest cognitive function in the elderly is improved by ingesting high levels of natural compounds found in cocoa called flavanols. The study included 90 individuals with mild cognitive impairment, a precursor to Alzheimer’s disease. Subjects who drank a cocoa beverage containing either moderate or high levels of flavanols daily for eight weeks demonstrated greater cognitive function than those who consumed low levels of flavanols on three separate tests that measured factors that included verbal fluency, visual searching and attention.

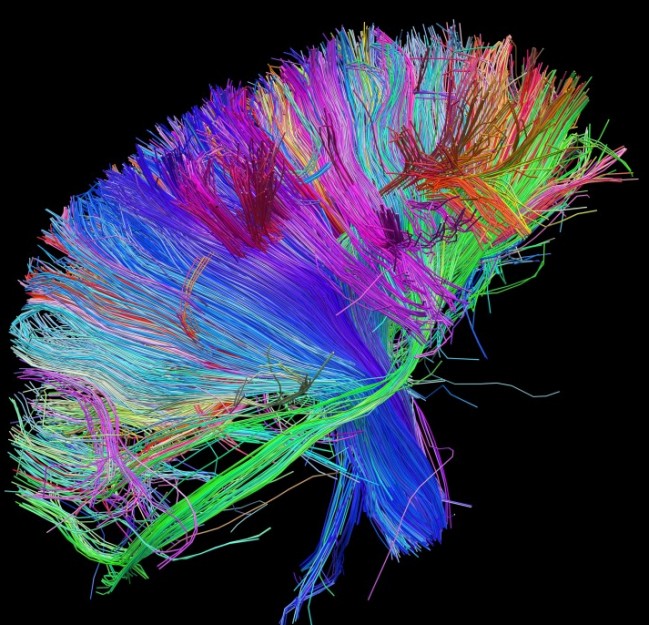

Exactly how cocoa causes these changes is still unknown, but emerging research points to one flavanol in particular: (-)-epicatechin, pronounced “minus epicatechin.” Its name signifies its structure, differentiating it from other catechins, organic compounds highly abundant in cocoa and present in apples, wine and tea. The graph below shows how (-)-epicatechin fits into the world of brain-altering food molecules. Other studies suggest that the compound supports increased circulation and the growth of blood vessels, which could explain improvements in cognition, because better blood flow would bring the brain more oxygen and improve its function.

Animal research has already demonstrated how pure (-)-epicatechin enhances memory. Findings published last October in the Journal of Experimental Biology note that snails can remember a trained task—such as holding their breath in deoxygenated water—for more than a day when given (-)-epicatechin but for less than three hours without the flavanol. Salk Institute neuroscientist Fred Gage and his colleagues found previously that (-)-epicatechin improves spatial memory and increases vasculature in mice. “It’s amazing that a single dietary change could have such profound effects on behavior,” Gage says. If further research confirms the compound’s cognitive effects, flavanol supplements—or raw cocoa beans—could be just what the doctor ordered.

So, Can We Binge on Chocolate Now?

Nope, sorry. A food’s origin, processing, storage and preparation can each alter its chemical composition. As a result, it is nearly impossible to predict which flavanols—and how many—remain in your bonbon or cup of tea. Tragically for chocoholics, most methods of processing cocoa remove many of the flavanols found in the raw plant. Even dark chocolate, touted as the “healthy” option, can be treated such that the cocoa darkens while flavanols are stripped.

Researchers are only beginning to establish standards for measuring flavanol content in chocolate. A typical one and a half ounce chocolate bar might contain about 50 milligrams of flavanols, which means you would need to consume 10 to 20 bars daily to approach the flavanol levels used in the University of L’Aquila study. At that point, the sugars and fats in these sweet confections would probably outweigh any possible brain benefits. Mars Botanical nutritionist and toxicologist Catherine Kwik-Uribe, an author on the University of L’Aquila study, says, “There’s now even more reasons to enjoy tea, apples and chocolate. But diversity and variety in your diet remain key.”
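The bar-count arithmetic in the excerpt can be checked with a quick back-of-the-envelope sketch. It uses only the figures quoted above (about 50 milligrams of flavanols per 1.5-ounce bar, 10 to 20 bars a day); the excerpt does not state the study's actual doses, so the daily totals printed here are merely what those figures imply, and the function and constant names are illustrative.

```python
# Figures quoted in the excerpt; everything else is an illustrative assumption.
FLAVANOLS_PER_BAR_MG = 50   # "about 50 milligrams" per typical bar
BAR_WEIGHT_OZ = 1.5         # "one and a half ounce chocolate bar"
BARS_PER_DAY = (10, 20)     # "10 to 20 bars daily"


def implied_daily_flavanols_mg(bars, mg_per_bar=FLAVANOLS_PER_BAR_MG):
    """Flavanol intake implied by eating `bars` typical bars in a day."""
    return bars * mg_per_bar


def daily_chocolate_oz(bars, oz_per_bar=BAR_WEIGHT_OZ):
    """Total weight of chocolate eaten per day, in ounces."""
    return bars * oz_per_bar


low_mg, high_mg = (implied_daily_flavanols_mg(b) for b in BARS_PER_DAY)
low_oz, high_oz = (daily_chocolate_oz(b) for b in BARS_PER_DAY)

print(f"Implied flavanol intake: {low_mg} to {high_mg} mg per day")
print(f"Chocolate required: {low_oz} to {high_oz} oz per day")
```

In other words, the quoted numbers imply a daily intake somewhere between half a gram and a gram of flavanols, which would take roughly one to two pounds of chocolate a day.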

[div class=attrib]Image courtesy of Google Search.[end-div]

Hot on the heels of recent successes by the Texas State Board of Education (SBOE) in revising history and science curricula, legislators in Missouri are planning to redefine commonly accepted scientific principles. Much like the situation in Texas, the Missouri House is mandating that intelligent design be taught alongside evolution, in equal measure, in all the state’s schools. But, in a bid to take the lead in reversing thousands of years of scientific progress, Missouri plans to redefine the scientific framework itself. So, if you can’t make “intelligent design” fit the principles of accepted science, just change the principles themselves. First up: change the meanings of the terms “scientific hypothesis” and “scientific theory”.

The illustrious Vaccinia virus may well have an Act Two in its future.

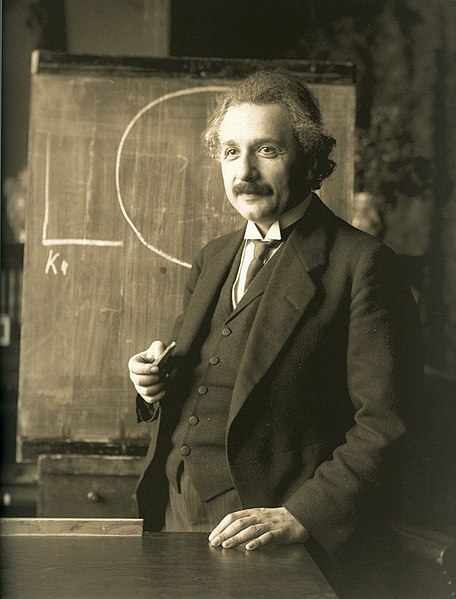

There is a certain school of thought that asserts that scientific genius is a thing of the past. After all, we haven’t seen the recent emergence of pivotal talents such as Galileo, Newton, Darwin or Einstein. Is it possible that fundamentally new ways of looking at our world, such as a new mathematics or a new physics, are no longer possible?

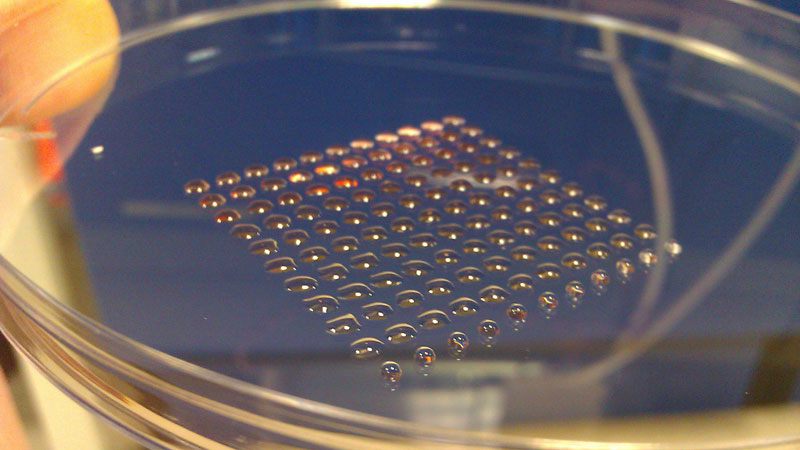

The most fundamental innovation tends to happen at the intersection of disciplines. So, what do you get if you cross 3-D printing technology with embryonic stem cell research? Well, you get a device that can print lines of cells with similar functions, such as heart muscle or kidney cells. Welcome to the new world of biofabrication. The science fiction future seems to be drawing ever closer.

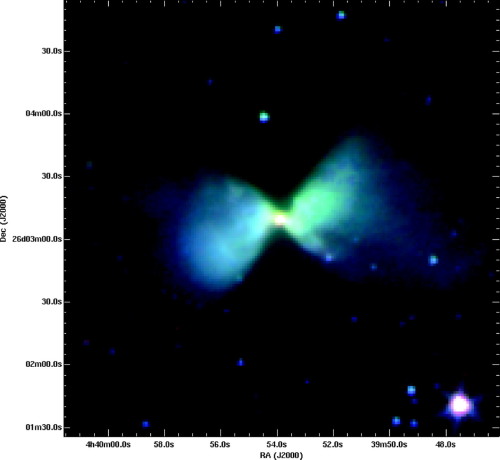

Cosmologists theorized the need for dark matter to account for hidden mass in our universe. Yet, as the name implies, it is proving rather hard to find. Now astronomers believe they see hints of it in ancient galactic collisions.

Having missed the recent apocalypse said to have been predicted by the Mayans, the next possible end of the world is set for 2036. This time it’s courtesy of the aptly named asteroid Apophis.

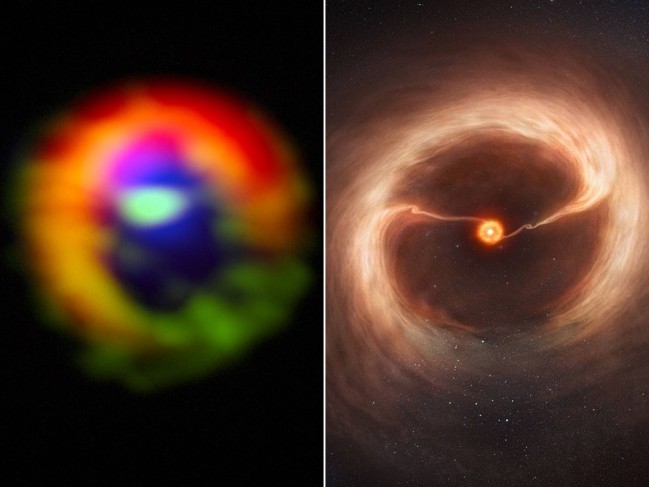

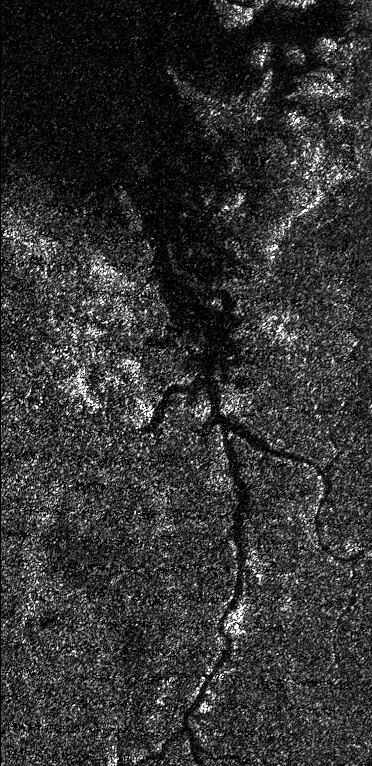

A diminutive stellar blob some 450 light years away seems to be a young star giving birth to a planetary system much like our very own Solar System. The developing protostar and its surrounding gas cloud are being tracked by astronomers at the National Radio Astronomy Observatory in Charlottesville, Virginia. Stellar and planetary evolution in action.

The little space probe that could, Voyager 1, is close to leaving our solar system and entering the relative void of interstellar space. As it does so, from a distance of around 18.4 billion kilometers (today), it continues to send back signals of what it finds. And the surprises continue.