Some innovative research shows that we are generally more inclined to cheat others if we are clad in counterfeit designer clothing or carrying faux accessories.

[div class=attrib]From Scientific American:[end-div]

Let me tell you the story of my debut into the world of fashion. When Jennifer Wideman Green (a friend of mine from graduate school) ended up living in New York City, she met a number of people in the fashion industry. Through her I met Freeda Fawal-Farah, who worked for Harper’s Bazaar. A few months later Freeda invited me to give a talk at the magazine, and because it was such an atypical crowd for me, I agreed.

I found myself on a stage before an auditorium full of fashion mavens. Each woman was like an exhibit in a museum: her jewelry, her makeup, and, of course, her stunning shoes. I talked about how people make decisions, how we compare prices when we are trying to figure out how much something is worth, how we compare ourselves to others, and so on. They laughed when I hoped they would, asked thoughtful questions, and offered plenty of their own interesting ideas. When I finished the talk, Valerie Salembier, the publisher of Harper’s Bazaar, came onstage, hugged and thanked me—and gave me a stylish black Prada overnight bag.

I headed downtown to my next meeting. I had some time to kill, so I decided to take a walk. As I wandered, I couldn’t help thinking about my big black leather bag with its large Prada logo. I debated with myself: should I carry my new bag with the logo facing outward? That way, other people could see and admire it (or maybe just wonder how someone wearing jeans and red sneakers could possibly have procured it). Or should I carry it with the logo facing toward me, so that no one could recognize that it was a Prada? I decided on the latter and turned the bag around.

Even though I was pretty sure that with the logo hidden no one realized it was a Prada bag, and despite the fact that I don’t think of myself as someone who cares about fashion, something felt different to me. I was continuously aware of the brand on the bag. I was wearing Prada! And it made me feel different; I stood a little straighter and walked with a bit more swagger. I wondered what would happen if I wore Ferrari underwear. Would I feel more invigorated? More confident? More agile? Faster?

I continued walking and passed through Chinatown, which was bustling with activity. Not far away, I spotted an attractive young couple in their twenties taking in the scene. A Chinese man approached them. “Handbags, handbags!” he called, tilting his head to indicate the direction of his small shop. After a moment or two, the woman asked the Chinese man, “You have Prada?”

The vendor nodded. I watched as she conferred with her partner. He smiled at her, and they followed the man to his stand.

The Prada they were referring to, of course, was not actually Prada. Nor were the $5 “designer” sunglasses on display in his stand really Dolce & Gabbana. And the Armani perfumes displayed over by the street food stands? Fakes too.

From Ermine to Armani

Going back a way, ancient Roman law included a set of regulations called sumptuary laws, which filtered down through the centuries into the laws of nearly all European nations. Among other things, the laws dictated who could wear what, according to their station and class. For example, in Renaissance England, only the nobility could wear certain kinds of fur, fabrics, laces, decorative beading per square foot, and so on, while those in the gentry could wear decisively less appealing clothing. (The poorest were generally excluded from the law, as there was little point in regulating musty burlap, wool, and hair shirts.) People who “dressed above their station” were silently, but directly, lying to those around them. And those who broke the law were often hit with fines and other punishments.

What may seem to be an absurd degree of obsessive compulsion on the part of the upper crust was in reality an effort to ensure that people were what they signaled themselves to be; the system was designed to eliminate disorder and confusion. Although our current sartorial class system is not as rigid as it was in the past, the desire to signal success and individuality is as strong today as ever.

When thinking about my experience with the Prada bag, I wondered whether there were other psychological forces related to fakes that go beyond external signaling. There I was in Chinatown holding my real Prada bag, watching the woman emerge from the shop holding her fake one. Despite the fact that I had neither picked out nor paid for mine, it felt to me that there was a substantial difference between the way I related to my bag and the way she related to hers.

More generally, I started wondering about the relationship between what we wear and how we behave, and it made me think about a concept that social scientists call self-signaling. The basic idea behind self-signaling is that despite what we tend to think, we don’t have a very clear notion of who we are. We generally believe that we have a privileged view of our own preferences and character, but in reality we don’t know ourselves that well (and definitely not as well as we think we do). Instead, we observe ourselves in the same way we observe and judge the actions of other people, inferring who we are and what we like from our actions.

For example, imagine that you see a beggar on the street. Rather than ignoring him or giving him money, you decide to buy him a sandwich. The action in itself does not define who you are, your morality, or your character, but you interpret the deed as evidence of your compassionate and charitable character. Now, armed with this “new” information, you start believing more intensely in your own benevolence. That’s self-signaling at work.

The same principle could also apply to fashion accessories. Carrying a real Prada bag—even if no one else knows it is real—could make us think and act a little differently than if we were carrying a counterfeit one. Which brings us to the questions: Does wearing counterfeit products somehow make us feel less legitimate? Is it possible that accessorizing with fakes might affect us in unexpected and negative ways?

Calling All Chloés

I decided to call Freeda and tell her about my recent interest in high fashion. During our conversation, Freeda promised to convince a fashion designer to lend me some items to use in some experiments. A few weeks later, I received a package from the Chloé label containing twenty handbags and twenty pairs of sunglasses. The statement accompanying the package told me that the handbags were estimated to be worth around $40,000 and the sunglasses around $7,000. (The rumor about this shipment quickly traveled around Duke, and I became popular among the fashion-minded crowd.)

With those hot commodities in hand, Francesca Gino, Mike Norton (both professors at Harvard University), and I set about testing whether participants who wore fake products would feel and behave differently from those wearing authentic ones. If our participants felt that wearing fakes would broadcast (even to themselves) a less honorable self-image, we wondered whether they might start thinking of themselves as somewhat less honest. And with this tainted self-concept in mind, would they be more likely to continue down the road of dishonesty?

Using the lure of Chloé accessories, we enlisted many female MBA students for our experiment. We assigned each woman to one of three conditions: authentic, fake or no information. In the authentic condition, we told participants that they would be donning real Chloé designer sunglasses. In the fake condition, we told them that they would be wearing counterfeit sunglasses that looked identical to those made by Chloé (in actuality all the products we used were the real McCoy). Finally, in the no-information condition, we didn’t say anything about the authenticity of the sunglasses.

Once the women donned their sunglasses, we directed them to the hallway, where we asked them to look at different posters and out the windows so that they could later evaluate the quality and experience of looking through their sunglasses. Soon after, we called them into another room for another task.

In this task, the participants were given 20 sets of 12 numbers (3.42, 7.32 and so on), and they were asked to find in each set the two numbers that add up to 10. They had five minutes to solve as many as possible and were paid for each correct answer. We set up the test so that the women could cheat—report that they solved more sets than they did (after shredding their worksheet and all the evidence)—while allowing us to figure out who cheated and by how much (by rigging the shredders so that they only cut the sides of the paper).

Over the years we carried out many versions of this experiment, and we repeatedly found that a lot of people cheated by a few questions. This experiment was no different in this regard, but what was particularly interesting was the effect of wearing counterfeits. While “only” 30 percent of the participants in the authentic condition reported solving more matrices than they actually had, 74 percent of those in the fake condition reported solving more matrices than they actually had. These results gave rise to another interesting question. Did the presumed fakeness of the product make the women cheat more than they naturally would? Or did the genuine Chloé label make them behave more honestly than they would otherwise?

This is why we also had a no-information condition, in which we didn’t mention anything about whether the sunglasses were real or fake. In that condition 42 percent of the women cheated. That result was between the other two, but it was much closer to the authentic condition (in fact, the two conditions were not statistically different from each other). These results suggest that wearing a genuine product does not increase our honesty (or at least not by much). But once we knowingly put on a counterfeit product, moral constraints loosen to some degree, making it easier for us to take further steps down the path of dishonesty.

The moral of the story? If you, your friend, or someone you are dating wears counterfeit products, be careful! Another act of dishonesty may be closer than you expect.

Up to No Good

These results led us to another question: if wearing counterfeits changes the way we view our own behavior, does it also cause us to be more suspicious of others? To find out, we asked another group of participants to put on what we told them were either real or counterfeit Chloé sunglasses. This time, we asked them to fill out a rather long survey with their sunglasses on. In this survey, we included three sets of questions. The questions in set A asked participants to estimate the likelihood that people they know might engage in various ethically questionable behaviors such as standing in the express line with too many groceries. The questions in set B asked them to estimate the likelihood that when people say particular phrases, including “Sorry, I’m late. Traffic was terrible,” they are lying. Set C presented participants with two scenarios depicting someone who has the opportunity to behave dishonestly, and asked them to estimate the likelihood that the person in the scenario would take the opportunity to cheat.

What were the results? You guessed it. When reflecting on the behavior of people they know, participants in the counterfeit condition judged their acquaintances to be more likely to behave dishonestly than did participants in the authentic condition. They also interpreted the list of common excuses as more likely to be lies, and judged the actor in the two scenarios as being more likely to choose the shadier option. We concluded that counterfeit products not only tend to make us more dishonest; they also cause us to view others as less than honest as well.

[div class=attrib]Read the entire article after the jump.[end-div]

Yet another research study of gender differences shows some fascinating variation in the way men and women see and process their perceptions of others. Men tend to be perceived as a whole; women, on the other hand, are more likely to be perceived as parts.

Procrastinators have known this for a long time: that success comes from making a decision at the last possible moment.

Many people in industrialized countries describe time as flowing like a river: it flows back into the past, and it flows forward into the future. Of course, for bored workers time sometimes stands still, while for kids on summer vacation time flows all too quickly. And for many people over, say, the age of forty, days often drag, but the years fly by.

The last couple of decades have seen some remarkable cases of corporate excess and corruption. The deep-rooted human inclinations toward greed, telling falsehoods and exhibiting questionable ethics can probably be traced to the dawn of bipedalism. However, in more recent times we have seen misdeeds, particularly in the business world, grow in their daring, scale and impact.

Accessorize

Slip

Strangely and ironically it takes a satirist to tell the truth, and of course, academics now study the phenomenon.

[div class=attrib]From the New York Times:[end-div]

Apparently, being busy alleviates the human existential threat. So, if your roughly 16 hours, or more, of wakefulness each day is crammed with memos, driving, meetings, widgets, calls, charts, quotas, angry customers, school lunches, deciding, reports, bank statements, kids, budgets, bills, baking, making, fixing, cleaning and mad bosses, then your life must be meaningful, right?

Hailing from Classical Greece of around 2,400 years ago, Plato has given our contemporary world many important intellectual gifts. His broad interests in justice, mathematics, virtue, epistemology, rhetoric and art laid the foundations for Western philosophy and science. Yet in his quest for deeper and broader knowledge he also had some important things to say about ignorance.

A court in Germany recently banned circumcision at birth for religious reasons. Quite understandably the court saw that this practice violates bodily integrity. Aside from being morally repugnant to many theists and non-believers alike, the practice inflicts pain. So, why do some religions continue to circumcise children?

So, you think an all-seeing, all-knowing supreme deity encourages moral behavior and discourages crime? Think again.

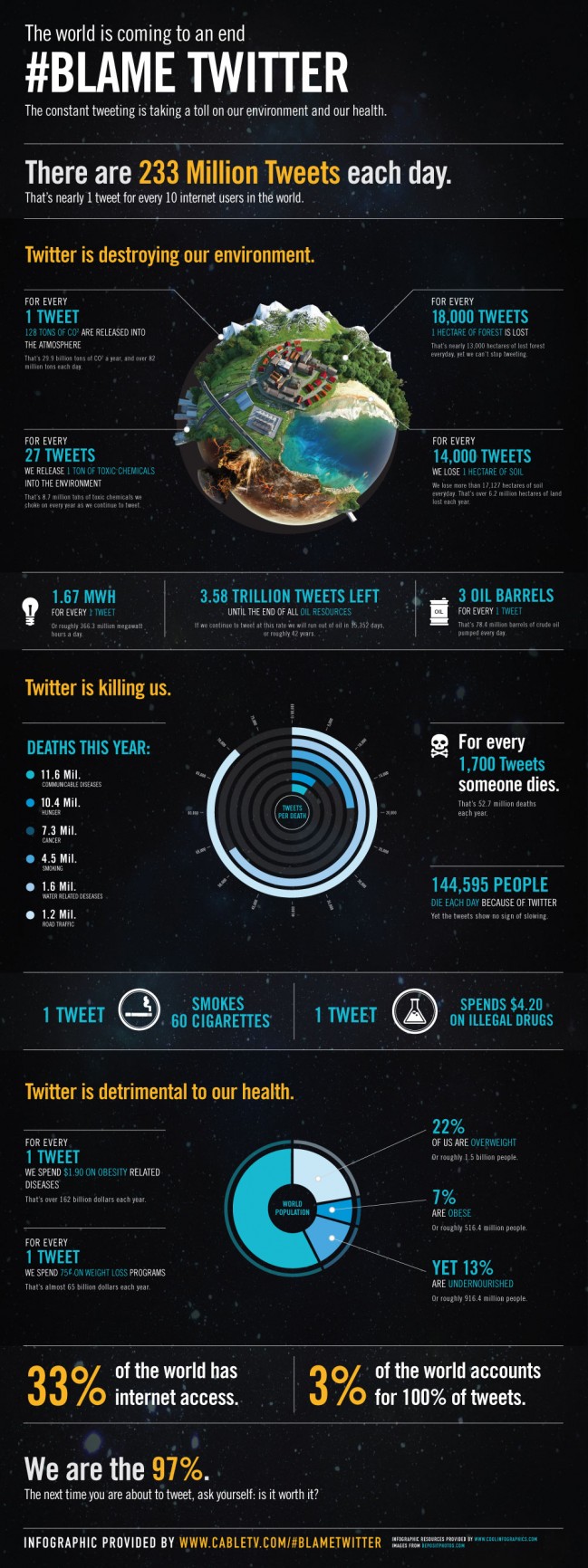

China, India, Facebook. With its 900 million member-citizens Facebook is the third largest country on the planet, ranked by population. This country has some benefits: no taxes, freedom to join and/or leave, and of course there’s freedom to assemble and a fair degree of free speech.

Forget art school, engineering school, law school and B-school (business). For wannabe innovators the current place to be is D-school. Design school, that is.

The mind boggles at the possible situations when a SpeechJammer (affectionately known as the “Shutup Gun”) might come in handy – raucous parties, boring office meetings, spousal arguments, playdates with whiny children.