Most people come down on one side or the other; there’s really no middle ground when it comes to the soda (or pop) wars. But while the choice of drink itself may seem trivial, the combined annual revenues of these food and beverage behemoths are far from it: close to $100 billion. The infographic below dissects this seriously big business.

On Being a Billionaire For a Day

New York Times writer Kevin Roose recently lived the life of a billionaire for a day. His report while masquerading as a member of the 0.01 percent of the 0.1 percent of the 1 percent makes for fascinating and disturbing reading.

[div class=attrib]From the New York Times:[end-div]

I HAVE a major problem: I just glanced at my $45,000 Chopard watch, and it’s telling me that my Rolls-Royce may not make it to the airport in time for my private jet flight.

Yes, I know my predicament doesn’t register high on the urgency scale. It’s not exactly up there with malaria outbreaks in the Congo or street riots in Athens. But it’s a serious issue, because my assignment today revolves around that plane ride.

“Step on it, Mike,” I instruct my chauffeur, who nods and guides the $350,000 car into the left lane of the West Side Highway.

Let me back up a bit. As a reporter who writes about Wall Street, I spend a fair amount of time around extreme wealth. But my face is often pressed up against the gilded window. I’ve never eaten at Per Se, or gone boating on the French Riviera. I live in a pint-size Brooklyn apartment, rarely take cabs and feel like sending Time Warner to The Hague every time my cable bill arrives.

But for the next 24 hours, my goal is to live like a billionaire. I want to experience a brief taste of luxury — the chauffeured cars, the private planes, the V.I.P. access and endless privilege — and then go back to my normal life.

The experiment illuminates a paradox. In the era of the Occupy Wall Street movement, when the global financial elite has been accused of immoral and injurious conduct, we are still obsessed with the lives of the ultrarich. We watch them on television shows, follow their exploits in magazines and parse their books and public addresses for advice. In addition to the long-running list by Forbes, Bloomberg now maintains a list of billionaires with rankings that update every day.

Really, I wondered, what’s so great about billionaires? What privileges and perks do a billion dollars confer? And could I tap into the psyches of the ultrawealthy by walking a mile in their Ferragamo loafers?

At 6 a.m., Mike, a chauffeur with Flyte Tyme Worldwide, picked me up at my apartment. He opened the Rolls-Royce’s doors to reveal a spotless white interior, with lamb’s wool floor mats, seatback TVs and a football field’s worth of legroom. The car, like the watch, was lent to me by the manufacturer for the day while The New York Times made payments toward the other services.

Mike took me to my first appointment, a power breakfast at the Core club in Midtown. “Core,” as the cognoscenti call it, is a members-only enclave with hefty dues — $15,000 annually, plus a $50,000 initiation fee — and a membership roll that includes brand-name financiers like Stephen A. Schwarzman of the Blackstone Group and Daniel S. Loeb of Third Point.

Over a spinach omelet, Jennie Enterprise, the club’s founder, told me about the virtues of having a cloistered place for “ultrahigh net worth individuals” to congregate away from the bustle of the boardroom.

“They want someplace that respects their privacy,” she said. “They want a place that they can seamlessly transition from work to play, that optimizes their time.”

After breakfast, I rush back to the car for a high-speed trip to Teterboro Airport in New Jersey, where I’m meeting a real-life billionaire for a trip on his private jet. The billionaire, a hedge fund manager, was scheduled to go down to Georgia and offered to let me interview him during the two-hour jaunt on the condition that I not reveal his identity.

[div class=attrib]Read the entire article after the Learjet.[end-div]

[div class=attrib]Image: Waited On: Mr. Roose exits the Rolls-Royce looking not unlike many movers and shakers in Manhattan. Courtesy of New York Times.[end-div]

Runner’s High: How and Why

There is a small but mounting body of evidence that supports the notion of the so-called Runner’s High, a state of euphoria attained by athletes during and immediately following prolonged and vigorous exercise. But while the neurochemical basis for this may soon be understood, little is known as to why this happens. More on the how and the why from Scicurious Brain.

[div class=attrib]From Scicurious over at Scientific American:[end-div]

I just came back from an 11 mile run. The wind wasn’t awful like it usually is, the sun was out, and I was at peace with the world, and right now, I still am. Later, I know my knees will be yelling at me and my body will want nothing more than to lie down. But right now? Right now I feel FANTASTIC.

What I am in the happy, zen-like, yet curiously energetic throes of is what is popularly known as the “runner’s high”. The runner’s high is a state of bliss achieved by athletes (not just runners) during and immediately following prolonged and intense exercise. It can be an extremely powerful, emotional experience. Many athletes will say they get it (and indeed, some would say we MUST get it, because otherwise why would we keep running 26.2 miles at a stretch?), but what IS it exactly? For some people it’s highly emotional, for some it’s peaceful, and for some it’s a burst of energy. And there are plenty of other people who don’t appear to get it at all. What causes it? Why do some people get it and others don’t?

Well, the short answer is that we don’t know. As I was coming back from my run, blissful and emotive enough that the sight of a small puppy could make me weepy with joy, I began to wonder myself…what is up with me? As I re-hydrated and began to sift through the literature, I found…well, not much. But what I did find suggests two competing hypotheses: the endogenous opioid hypothesis and the cannabinoid hypothesis.

The endogenous opioid hypothesis

This hypothesis of the runner’s high is based on a study showing that endorphins, endogenous opioids, are released during intense physical activity. When you think of the word “opioids”, you probably think of addictive drugs like opium or morphine. But your body also produces its own versions of these chemicals (called ‘endogenous’, or produced within an organism), usually in response to times of physical stress. Endogenous opioids can bind to the opioid receptors in your brain, which affect all sorts of systems. Opioid receptor activations can help to blunt pain, something that is surely present at the end of a long workout. Opioid receptors can also act in reward-related areas such as the striatum and nucleus accumbens. There, they can inhibit the release of inhibitory transmitters and increase the release of dopamine, making strenuous physical exercise more pleasurable. Endogenous opioid production has been shown to occur during the runner’s high in humans as well as after intense exercise in rats.

The cannabinoid hypothesis

Not only does the brain release its own forms of opioid chemicals, it also releases its own form of cannabinoids. When we usually talk about cannabinoids, we think about things like marijuana or the newer synthetic cannabinoids, which act upon cannabinoid receptors in the brain to produce their effects. But we also produce endogenous cannabinoids (called endocannabinoids), such as anandamide, which also act upon those same receptors. Studies have shown that deletion of cannabinoid receptor 1 decreases wheel running in mice, and that intense exercise causes increases in anandamide in humans.

Not only how, but why?

There isn’t a lot out there on HOW the runner’s high might occur, but there is even less on WHY. There are several hypotheses out there, but none of them, as far as I can tell, are yet supported by evidence. First there is the hypothesis of a placebo effect due to achieving goals. The idea is that you expect yourself to achieve a difficult goal, and then feel great when you do. While the runner’s high does have some things in common with goal achievement, it doesn’t really explain why people get them on training runs or regular runs, when they are not necessarily pushing themselves extremely hard.

[div class=attrib]Read the entire article after the jump (no pun intended).[end-div]

[div class=attrib]Image courtesy of Cincinnati.com.[end-div]

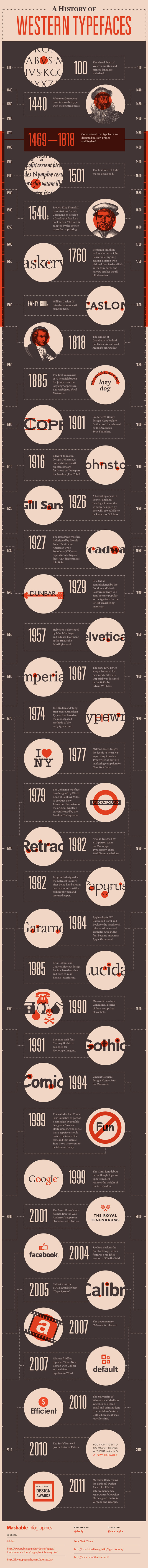

Arial or Calibri?

Nowadays the choice of a particular font for the written word seems just as important as the word itself. Most organizations, from small businesses to major advertisers, from individual authors to global publishers, debate and analyze the typefaces for their communications to ensure brand integrity and optimum readability. Some even select a particular font to save on printing costs.

The infographic below, courtesy of Mashable, shows some of the key milestones in the development of some of our favorite fonts.

[div class=attrib]See the original, super-sized infographic after the jump.[end-div]

Inward Attention and Outward Attention

New studies show that our brains use two fundamentally different neurological pathways when we focus on our external environment and when we pay attention to our internal world. Researchers believe this could have important consequences, from finding new methods to manage stress to treating some types of mental illness.

[div class=attrib]From Scientific American:[end-div]

What’s the difference between noticing the rapid beat of a popular song on the radio and noticing the rapid rate of your heart when you see your crush? Between noticing the smell of fresh baked bread and noticing that you’re out of breath? Both require attention. However, the direction of that attention differs: it is either turned outward, as in the case of noticing a stop sign or a tap on your shoulder, or turned inward, as in the case of feeling full or feeling love.

Scientists have long held that attention – regardless of what – involves mostly the prefrontal cortex, that frontal region of the brain responsible for complex thought and unique to humans and advanced mammals. A recent study by Norman Farb from the University of Toronto published in Cerebral Cortex, however, suggests a radically new view: there are different ways of paying attention. While the prefrontal cortex may indeed be specialized for attending to external information, older and more buried parts of the brain including the “insula” and “posterior cingulate cortex” appear to be specialized in observing our internal landscape.

Most of us prioritize externally oriented attention. When we think of attention, we often think of focusing on something outside of ourselves. We “pay attention” to work, the TV, our partner, traffic, or anything that engages our senses. However, a whole other world exists that most of us are far less aware of: an internal world, with its varied landscape of emotions, feelings, and sensations. Yet it is often the internal world that determines whether we are having a good day or not, whether we are happy or unhappy. That’s why we can feel angry despite beautiful surroundings or feel perfectly happy despite being stuck in traffic. For this reason perhaps, this newly discovered pathway of attention may hold the key to greater well-being.

Although this internal world of feelings and sensations dominates perception in babies, it becomes increasingly foreign and distant as we learn to prioritize the outside world. Because we don’t pay as much attention to our internal world, it often takes us by surprise. We often only tune into our body when it rings an alarm bell – that we’re extremely thirsty, hungry, exhausted or in pain. A flush of anger, a choked up feeling of sadness, or the warmth of love in our chest often appear to come out of the blue.

In a collaboration with professors Zindel Segal and Adam Anderson at the University of Toronto, the study compared exteroceptive (externally focused) attention to interoceptive (internally focused) attention in the brain. Participants were instructed to either focus on the sensation of their breath (interoceptive attention) or to focus their attention on words on a screen (exteroceptive attention). Contrary to the conventional assumption that all attention relies upon the frontal lobe of the brain, the researchers found that this was true of only exteroceptive attention; interoceptive attention used evolutionarily older parts of the brain more associated with sensation and integration of physical experience.

[div class=attrib]Read the entire article after the jump.[end-div]

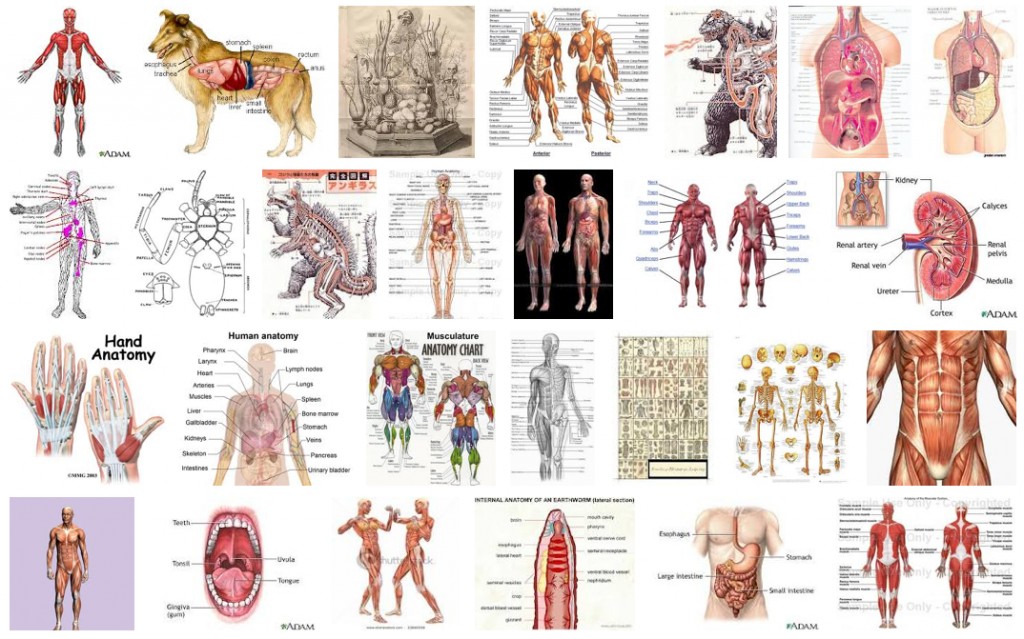

Dissecting Artists

Jonathan Jones dissects artists’ fascination over the ages with anatomy and pickled organs in glass jars.

[div class=attrib]From the Guardian:[end-div]

From Hirst to Da Vinci, a shared obsession with dissection and the human body seems to connect exhibitions opening this spring.

Is it something to do with the Olympics? Athletics is physical, the logic might go, so let’s think about bodies… Anyway, a shared anatomical obsession connects exhibitions that open this week, and later in the spring. Damien Hirst’s debt to anatomy does not need labouring. But just as his specimens are unveiled at Tate Modern, everyone else seems to be opening their own cabinets of curiosities. At London’s Natural History Museum, dissected animals are going on view in an exhibition that brings the morbid spectacle – which in my childhood was simultaneously the horror and fascination of this museum – back into its largely flesh-free modern galleries.

If that were not enough, the Wellcome Collection invites you to take a good look at some brains in jars.

It is no surprise that art and science keep coming together on the anatomist’s table this spring, for anatomy has a venerable and intimate connection with the efforts of artists to depict life and death. In his series of popular prints The Four Stages of Cruelty, William Hogarth sees the public dissection of a murderer by cold-blooded anatomists as the ultimate cruelty. But many artists have been happy to watch or even wield a knife at such events.

In the 16th century, the first published modern study of the human body, by Vesalius, was illustrated by a pupil of Titian. In the 18th century, the British animal artist George Stubbs undertook his own dissections of a horse, and published the results. He is one of the greatest ever portrayers of equine majesty, and his study of the skeleton and muscles of the horse helped him to achieve this.

Clinical knowledge, to help them portray humans and animals correctly, is one reason artists have been drawn to anatomy. Another attraction is more metaphysical: to look inside a human body is to get an eerie sense of who we are, to probe the mystery of being. Rembrandt’s painting The Anatomy Lesson of Dr Nicolaes Tulp is not a scientific study but a poetic reverie on the fragility and wonder of life – glimpsed in a study of death. Does Hirst make it as an artist in this tradition?

[div class=attrib]Read more after the jump.[end-div]

[div class=attrib]Image courtesy of Google search.[end-div]

The Benefits of Bilingualism

[div class=attrib]From the New York Times:[end-div]

SPEAKING two languages rather than just one has obvious practical benefits in an increasingly globalized world. But in recent years, scientists have begun to show that the advantages of bilingualism are even more fundamental than being able to converse with a wider range of people. Being bilingual, it turns out, makes you smarter. It can have a profound effect on your brain, improving cognitive skills not related to language and even shielding against dementia in old age.

This view of bilingualism is remarkably different from the understanding of bilingualism through much of the 20th century. Researchers, educators and policy makers long considered a second language to be an interference, cognitively speaking, that hindered a child’s academic and intellectual development.

They were not wrong about the interference: there is ample evidence that in a bilingual’s brain both language systems are active even when he is using only one language, thus creating situations in which one system obstructs the other. But this interference, researchers are finding out, isn’t so much a handicap as a blessing in disguise. It forces the brain to resolve internal conflict, giving the mind a workout that strengthens its cognitive muscles.

Bilinguals, for instance, seem to be more adept than monolinguals at solving certain kinds of mental puzzles. In a 2004 study by the psychologists Ellen Bialystok and Michelle Martin-Rhee, bilingual and monolingual preschoolers were asked to sort blue circles and red squares presented on a computer screen into two digital bins — one marked with a blue square and the other marked with a red circle.

In the first task, the children had to sort the shapes by color, placing blue circles in the bin marked with the blue square and red squares in the bin marked with the red circle. Both groups did this with comparable ease. Next, the children were asked to sort by shape, which was more challenging because it required placing the images in a bin marked with a conflicting color. The bilinguals were quicker at performing this task.

The collective evidence from a number of such studies suggests that the bilingual experience improves the brain’s so-called executive function — a command system that directs the attention processes that we use for planning, solving problems and performing various other mentally demanding tasks. These processes include ignoring distractions to stay focused, switching attention willfully from one thing to another and holding information in mind — like remembering a sequence of directions while driving.

Why does the tussle between two simultaneously active language systems improve these aspects of cognition? Until recently, researchers thought the bilingual advantage stemmed primarily from an ability for inhibition that was honed by the exercise of suppressing one language system: this suppression, it was thought, would help train the bilingual mind to ignore distractions in other contexts. But that explanation increasingly appears to be inadequate, since studies have shown that bilinguals perform better than monolinguals even at tasks that do not require inhibition, like threading a line through an ascending series of numbers scattered randomly on a page.

The key difference between bilinguals and monolinguals may be more basic: a heightened ability to monitor the environment. “Bilinguals have to switch languages quite often — you may talk to your father in one language and to your mother in another language,” says Albert Costa, a researcher at the University of Pompeu Fabra in Spain. “It requires keeping track of changes around you in the same way that we monitor our surroundings when driving.” In a study comparing German-Italian bilinguals with Italian monolinguals on monitoring tasks, Mr. Costa and his colleagues found that the bilingual subjects not only performed better, but they also did so with less activity in parts of the brain involved in monitoring, indicating that they were more efficient at it.

[div class=attrib]Read more after the jump.[end-div]

[div class=attrib]Image courtesy of Futurity.org.[end-div]

So Where Is Everybody?

Astrobiologist Caleb Scharf brings us up to date on Fermi’s Paradox, which asks why, given that our galaxy is so old, other sentient intergalactic travelers haven’t found us. The answer may come from a video game.

[div class=attrib]From Scientific American:[end-div]

Right now, all across the planet, millions of people are engaged in a struggle with enormous implications for the very nature of life itself. Making sophisticated tactical decisions and wrestling with chilling and complex moral puzzles, they are quite literally deciding the fate of our existence.

Or at least they are pretending to.

The video game Mass Effect has now reached its third and final installment: a huge planet-destroying, species-wrecking, epic finale to a story that takes humanity from its tentative steps into interstellar space to a critical role in a galactic, and even intergalactic, saga. It’s awfully good. Even without all the fantastic visual design or gameplay, at its heart is a rip-roaring plot and countless backstories that tie the experience into one of the most carefully and completely imagined sci-fi universes out there.

As a scientist, and someone who will sheepishly admit to a love of videogames (from countless hours spent as a teenager coding my own rather inferior efforts, to an occasional consumer’s dip into the lushness of what a multi-billion dollar industry can produce), the Mass Effect series is fascinating for a number of reasons. The first of which is the relentless attention to plausible background detail. Take for example the task of finding mineral resources in Mass Effect 2. Flying your ship to different star systems presents you with a bird’s eye view of the planets, each of which has a fleshed out description – be it inhabited, or more often, uninhabitable. These have been torn from the annals of the real exoplanets, gussied up a little, but still recognizable. There are hot Jupiters, and icy Neptune-like worlds. There are gassy planets, rocky planets, and watery planets of great diversity in age, history and elemental composition. It’s a surprisingly good representation of what we now think is really out there.

But the biggest idea, the biggest piece of fiction-meets-genuine-scientific-hypothesis is the overarching story of Mass Effect. It directly addresses one of the great questions of astrobiology – is there intelligent life elsewhere in our galaxy, and if so, why haven’t we intersected with it yet? The first serious thinking about this problem seems to have arisen during a lunchtime chat in the 1940s where the famous physicist Enrico Fermi (for whom the fundamental particle type ‘fermion’ is named) is supposed to have asked “Where is Everybody?” The essence of the Fermi Paradox is that since our galaxy is very old, perhaps 10 billion years old, unless intelligent life is almost impossibly rare it will have arisen ages before we came along. Such life will have had time to essentially span the Milky Way; even if spreading out at relatively slow sub-light speeds, it – or its artificial surrogates, machines – will have reached every nook and cranny. Thus we should have noticed it, or been noticed by it, unless we are truly the only example of intelligent life.
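
The timescale argument above can be checked with rough arithmetic. The sketch below is only an illustration: it assumes a Milky Way diameter of about 100,000 light-years and an expansion speed of 1 percent of the speed of light, neither of which comes from the article, and it uses the article’s own estimate of 10 billion years for the galaxy’s age.

```python
# Rough check of the Fermi Paradox timescale argument.
GALAXY_DIAMETER_LY = 100_000        # assumption: approximate Milky Way diameter, in light-years
SPEED_FRACTION_OF_C = 0.01          # assumption: illustrative sub-light expansion speed
GALAXY_AGE_YEARS = 10_000_000_000   # the article's "perhaps 10 billion years"


def crossing_time_years(diameter_ly, speed_fraction_of_c):
    """Years needed to cross a distance in light-years at a given fraction of light speed."""
    return diameter_ly / speed_fraction_of_c


t = crossing_time_years(GALAXY_DIAMETER_LY, SPEED_FRACTION_OF_C)
print(f"Crossing time: {t:,.0f} years")
print(f"Share of the galaxy's age: {t / GALAXY_AGE_YEARS:.3%}")
```

Under these assumptions the crossing takes about 10 million years, roughly a thousandth of the galaxy’s age, which is why even slow travelers would be expected to have spread everywhere by now.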

The Fermi Paradox comes with a ton of caveats and variants. It’s not hard to think of all manner of reasons why intelligent life might be teeming out there, but still not have met us – from self-destructive behavior to the realistic hurdles of interstellar travel. But to my mind Mass Effect has what is perhaps one of the most interesting, if not entertaining, solutions. This will spoil the story; you have been warned.

Without going into all the colorful details, the central premise is that a hugely advanced and ancient race of artificially intelligent machines ‘harvests’ all sentient, space-faring life in the Milky Way every 50,000 years. These machines otherwise lie dormant out in the depths of intergalactic space. They have constructed and positioned an ingenious web of technological devices (including the Mass Effect relays, providing rapid interstellar travel) and habitats within the Galaxy that effectively sieve through the rising civilizations, helping the successful flourish and multiply, ripening them up for eventual culling. The reason for this? Well, the plot is complex and somewhat ambiguous, but one thing that these machines do is use the genetic slurry of millions, billions of individuals from a species to create new versions of themselves.

It’s a grand ol’ piece of sci-fi opera, but it also provides a neat solution to the Fermi Paradox via a number of ideas: a) The most truly advanced interstellar species spends most of its time out of the Galaxy in hibernation. b) Purging all other sentient (space-faring) life every 50,000 years puts a stop to any great spreading across the Galaxy. c) Sentient, space-faring species are inevitably drawn into the technological lures and habitats left for them, and so are less inclined to explore.

Together, these mean that until a species is capable of at least proper interplanetary space travel (in the game humans have to reach Mars to become aware of what’s going on at all), it is very likely to conclude that the Galaxy is a lonely place.

[div class=attrib]Read more after the jump.[end-div]

[div class=attrib]Image: Intragalactic life. Courtesy of J. Schombert, U. Oregon.[end-div]

Your Molecular Ancestors

[div class=attrib]From Scientific American:[end-div]

Well, perhaps your great-to-the-hundred-millionth-grandmother was.

Understanding the origins of life and the mechanics of the earliest beginnings of life is as important for the quest to unravel the Earth’s biological history as it is for the quest to seek out other life in the universe. We’re pretty confident that single-celled organisms – bacteria and archaea – were the first ‘creatures’ to slither around on this planet, but what happened before that is a matter of intense and often controversial debate.

One possibility for a precursor to these organisms was a world without DNA, but with the bare-bones molecular pieces that would eventually result in the evolutionary move to DNA and its associated machinery. This idea was put forward by an influential paper in the journal Nature in 1986 by Walter Gilbert (winner of a Nobel in Chemistry), who fleshed out an idea by Carl Woese – who had earlier identified the Archaea as a distinct branch of life. This ancient biomolecular system was called the RNA-world, since it consists of ribonucleic acid sequences (RNA) but lacks the permanent storage mechanisms of deoxyribonucleic acids (DNA).

A key part of the RNA-world hypothesis is that in addition to carrying reproducible information in their sequences, RNA molecules can also perform the duties of enzymes in catalyzing reactions – sustaining a busy, self-replicating, evolving ecosystem. In this picture RNA evolves away until eventually items like proteins come onto the scene, at which point things can really gear up towards more complex and familiar life. It’s an appealing picture for the stepping-stones to life as we know it.

In modern organisms a very complex molecular structure called the ribosome is the critical machine that reads the information in a piece of messenger-RNA (that has spawned off the original DNA) and then assembles proteins according to this blueprint by snatching amino acids out of a cell’s environment and putting them together. Ribosomes are amazing; they’re also composed of a mix of large numbers of RNA molecules and protein molecules.

But there’s a possible catch to all this, and it relates to the idea of a protein-free RNA-world some 4 billion years ago.

[div class=attrib]Read more after the jump.[end-div]

[div class=attrib]Image: RNA molecule. Courtesy of Wired / Universitat Pompeu Fabra.[end-div]

Male Brain + Female = Jello

[div class=attrib]From Scientific American:[end-div]

In one experiment, just telling a man he would be observed by a female was enough to hurt his psychological performance.

Movies and television shows are full of scenes where a man tries unsuccessfully to interact with a pretty woman. In many cases, the potential suitor ends up acting foolishly despite his best attempts to impress. It seems like his brain isn’t working quite properly, and according to new findings, it may not be.

Researchers have begun to explore the cognitive impairment that men experience before and after interacting with women. A 2009 study demonstrated that after a short interaction with an attractive woman, men experienced a decline in mental performance. A more recent study suggests that this cognitive impairment takes hold even when men simply anticipate interacting with a woman who they know very little about.

Sanne Nauts and her colleagues at Radboud University Nijmegen in the Netherlands ran two experiments using men and women university students as participants. They first collected a baseline measure of cognitive performance by having the students complete a Stroop test. Developed in 1935 by the psychologist John Ridley Stroop, the test is a common way of assessing our ability to process competing information. The test involves showing people a series of words describing different colors that are printed in different colored inks. For example, the word “blue” might be printed in green ink and the word “red” printed in blue ink. Participants are asked to name, as quickly as they can, the color of the ink that the words are written in. The test is cognitively demanding because our brains can’t help but process the meaning of the word along with the color of the ink. When people are mentally tired, they tend to complete the task at a slower rate.

After completing the Stroop test, participants in Nauts’ study were asked to take part in another supposedly unrelated task. They were asked to read out loud a number of Dutch words while sitting in front of a webcam. The experimenters told them that during this “lip reading task” an observer would watch them over the webcam. The observer was given either a common male or female name. Participants were led to believe that this person would see them over the webcam, but they would not be able to interact with the person. No pictures or other identifying information were provided about the observer; all the participants knew was his or her name. After the lip reading task, the participants took another Stroop test. Women’s performance on the second test did not differ, regardless of the gender of their observer. However, men who thought a woman was observing them ended up performing worse on the second Stroop test. This cognitive impairment occurred even though the men had not interacted with the female observer.
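
The Stroop test described above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used in the study: the color list, the `make_trial` and `is_correct` names, and the fixed random seed are all assumptions made for the example.

```python
import random

# Hypothetical color set; the study's actual stimuli are not given in the article.
COLORS = ["red", "blue", "green", "yellow"]


def make_trial(rng):
    """Return a conflicting Stroop trial: a color word shown in a different ink."""
    word = rng.choice(COLORS)
    # Pick an ink color that conflicts with the meaning of the word.
    ink = rng.choice([c for c in COLORS if c != word])
    return {"word": word, "ink": ink}


def is_correct(trial, response):
    """The correct answer is the ink color, not the word that is printed."""
    return response == trial["ink"]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
trials = [make_trial(rng) for _ in range(5)]
for t in trials:
    print(f"word={t['word']!r} printed in {t['ink']} ink")
```

A real experiment would also time each response, since the article notes that mentally tired people complete the task more slowly; timing is left out here to keep the sketch short.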

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image courtesy of Scientific American / iStock/Iconogenic.[end-div]

Saucepan Lids No Longer Understand Cockney

You may not “adam and eve it”, but it seems that fewer and fewer Londoners now take to their “jam jars” for a drive down the “frog and toad” to their neighborhood “rub a dub dub”.

[div class=attrib]From the Daily Telegraph:[end-div]

The slang is dying out amid London’s diverse, multi-cultural society, new research has revealed.

A study of 2,000 adults, including half from the capital, found the world famous East End lingo which has been mimicked and mocked for decades is on the wane.

The survey, commissioned by The Museum of London, revealed almost 80 per cent of Londoners do not understand phrases such as ‘donkey’s ears’ – slang for years.

Other examples of rhyming slang which baffled participants included ‘mother hubbard’, which means cupboard, and ‘bacon and eggs’ which means legs.

Significantly, Londoners’ own knowledge of the jargon is now almost as bad as those who live outside of the capital.

Yesterday, Alex Werner, head of history collections at the Museum of London, said: “For many people, Cockney rhyming slang is intrinsic to the identity of London.

“However this research suggests that the Cockney dialect itself may not be enjoying the same level of popularity.

“The origins of Cockney slang reflects the diverse, immigrant community of London’s East End in the 19th century so perhaps it’s no surprise that other forms of slang are taking over as the cultural influences on the city change.”

The term ‘cokenay’ was used in The Reeve’s Tale, the third story in Geoffrey Chaucer’s The Canterbury Tales, to describe a child who was “tenderly brought up” and “effeminate”.

By the early 16th century the reference was commonly used as a derogatory term to describe town-dwellers. Later still, it was used to indicate those born specifically within earshot of the ringing of Bow-bell at St Mary-le-Bow church in east London.

Research by The Museum of London found that just 20 per cent of the 2,000 people questioned knew that ‘rabbit and pork’ meant talk.

It also emerged that very few of those polled understood the meaning of tommy tucker (supper), watch the custard and jelly (telly) or spend time with the teapot lids (kids).

Instead, the report found that most Londoners now have a grasp of just a couple of Cockney phrases such as tea leaf (thief), and apples and pears (stairs).

The most-used cockney slang was found to be the phrase ‘porky pies’ with 13 per cent of those questioned still using it. One in 10 used the term ‘cream crackered’.

[div class=attrib]Read the entire article here.[end-div]

[div class=attrib]Image courtesy of Tesco UK.[end-div]

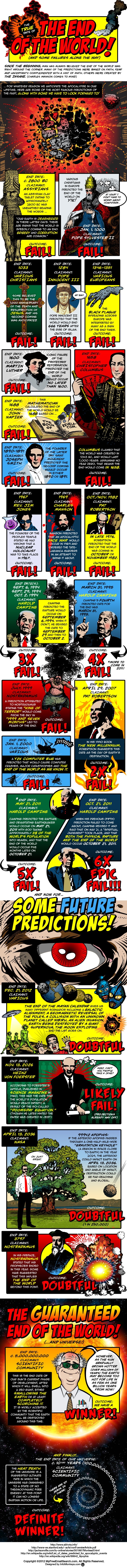

The End of the World for Doomsday Predictions

Apparently the world is due to end, again, this time on December 21, 2012. This latest prediction is from certain scholars of all things ancient Mayan. Now, of course, the world did not end as per Harold Camping’s most recent predictions, so let’s hope, or not, that the Mayans get it right for the sake of humanity.

The infographic below courtesy of xerxy brings many of these failed predictions of death, destruction and apocalypse into living color.

Are you a Spammer?

Infographic week continues here at theDiagonal with a visual guide to amateur email spammers. You know you may be one if you’ve ever sent an email titled “Read now: this will make your Friday!” to friends, family and office colleagues. You may be a serial offender if you use the “forward this email” button more than a couple of times a day.

[div class=attrib]Infographic courtesy of OnlineITDegree.[end-div]

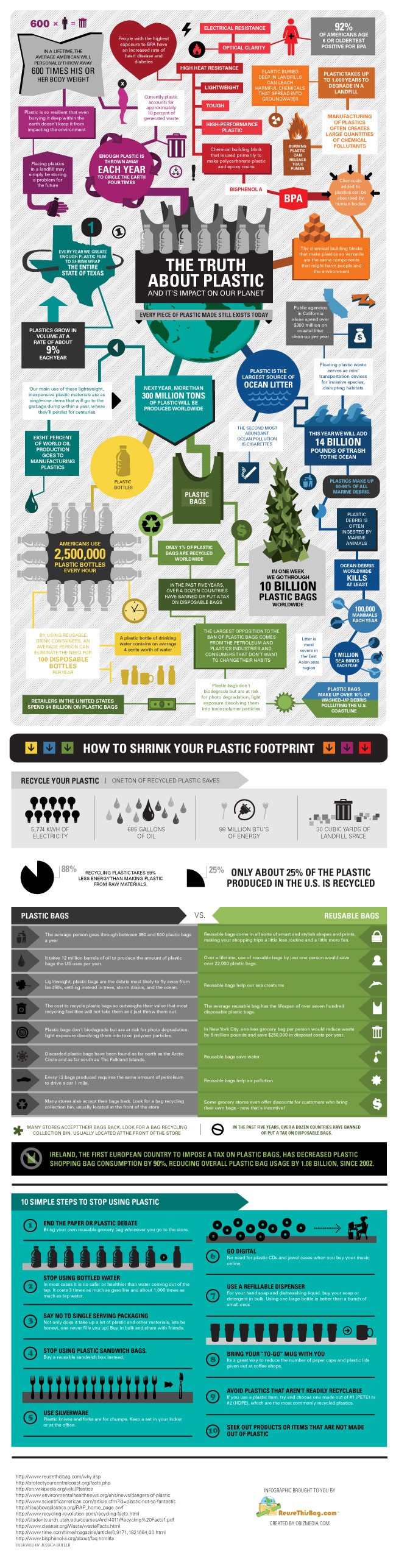

Everything You Ever Wanted to Know About Plastic

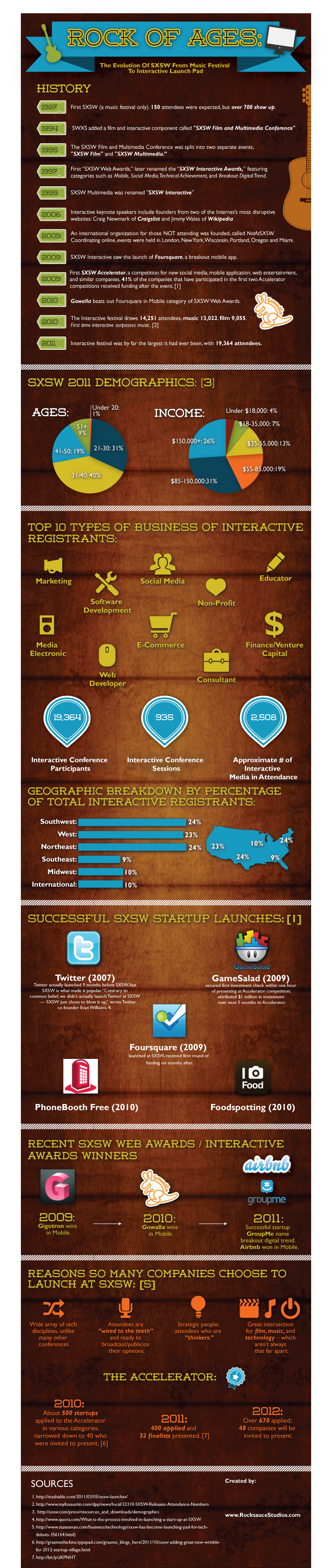

SxSW Explained

Our Children: Independently Dependent

Why can’t our kids tie their own shoes?

Are we raising our children to be self-obsessed, attention-seeking, helpless and dependent groupthinkers? And, why may the phenomenon of “family time” in the U.S. be a key culprit?

These are some of the questions raised by anthropologist Elinor Ochs and her colleagues. Over the last decade they have studied family life across the globe, from the Amazon region, to Samoa, and middle-America.

[div class=attrib]From the Wall Street Journal:[end-div]

Why do American children depend on their parents to do things for them that they are capable of doing for themselves? How do U.S. working parents’ views of “family time” affect their stress levels? These are just two of the questions that researchers at UCLA’s Center on Everyday Lives of Families, or CELF, are trying to answer in their work.

By studying families at home—or, as the scientists say, “in vivo”—rather than in a lab, they hope to better grasp how families with two working parents balance child care, household duties and career, and how this balance affects their health and well-being.

The center, which also includes sociologists, psychologists and archeologists, wants to understand “what the middle class thought, felt and what they did,” says Dr. Ochs. The researchers plan to publish two books this year on their work, and say they hope the findings may help families become closer and healthier.

Ten years ago, the UCLA team recorded video for a week of nearly every moment at home in the lives of 32 Southern California families. They have been picking apart the footage ever since, scrutinizing behavior, comments and even their refrigerators’ contents for clues.

The families, recruited primarily through ads, owned their own homes and had two or three children, at least one of whom was between 7 and 12 years old. About a third of the families had at least one nonwhite member, and two were headed by same-sex couples. Each family was filmed by two cameras and watched all day by at least three observers.

Among the findings: The families had a very child-centered focus, which may help explain the “dependency dilemma” seen among American middle-class families, says Dr. Ochs. Parents intend to develop their children’s independence, yet raise them to be relatively dependent, even when the kids have the skills to act on their own, she says.

In addition, these parents tended to have a very specific, idealized way of thinking about family time, says Tami Kremer-Sadlik, a former CELF research director who is now the director of programs for the division of social sciences at UCLA. These ideals appeared to generate guilt when work intruded on family life, and left parents feeling pressured to create perfect time together. The researchers noted that the presence of the observers may have altered some of the families’ behavior.

How kids develop moral responsibility is an area of focus for the researchers. Dr. Ochs, who began her career in far-off regions of the world studying the concept of “baby talk,” noticed that American children seemed relatively helpless compared with those in other cultures she and colleagues had observed.

In those cultures, young children were expected to contribute substantially to the community, says Dr. Ochs. Children in Samoa serve food to their elders, waiting patiently in front of them before they eat, as shown in one video snippet. Another video clip shows a girl around 5 years of age in Peru’s Amazon region climbing a tall tree to harvest papaya, and helping haul logs thicker than her leg to stoke a fire.

By contrast, the U.S. videos showed Los Angeles parents focusing more on the children, using simplified talk with them, doing most of the housework and intervening quickly when the kids had trouble completing a task.

In 22 of 30 families, children frequently ignored or resisted appeals to help, according to a study published in the journal Ethos in 2009. In the remaining eight families, the children weren’t asked to do much. In some cases, the children routinely asked the parents to do tasks, like getting them silverware. “How am I supposed to cut my food?” Dr. Ochs recalls one girl asking her parents.

Asking children to do a task led to much negotiation, and when parents asked, it sounded often like they were asking a favor, not making a demand, researchers said. Parents interviewed about their behavior said it was often too much trouble to ask.

For instance, one exchange caught on video shows an 8-year-old named Ben sprawled out on a couch near the front door, lifting his white, high-top sneaker to his father, the shoe laced. “Dad, untie my shoe,” he pleads. His father says Ben needs to say “please.”

“Please untie my shoe,” says the child in an identical tone as before. After his father hands the shoe back to him, Ben says, “Please put my shoe on and tie it,” and his father obliges.

[div class=attrib]Read the entire article after the jump:[end-div]

[div class=attrib]Image courtesy of Kyle T. Webster / Wall Street Journal.[end-div]

There’s the Big Bang theory and then there’s The Big Bang Theory

Now in its fifth season on U.S. television, The Big Bang Theory has made serious geekiness fun and science cool. In fact, the show is rising in popularity to such an extent that a Google search for “big bang theory” ranks the show first, above all the more learned scientific entries.

Brad Hooker from Symmetry Breaking asks some deep questions of David Saltzberg, science advisor to The Big Bang Theory.

[div class=attrib]From Symmetry Breaking:[end-div]

For those who live, breathe and laugh physics, one show entangles them all: The Big Bang Theory. Now in its fifth season on CBS, the show follows a group of geeks, including a NASA engineer, an astrophysicist and two particle physicists.

Every episode has at least one particle physics joke. On faster-than-light neutrinos: “Is this observation another Swiss export full of more holes than their cheese?” On Saul Perlmutter clutching the Nobel Prize: “What’s the matter, Saul? You afraid somebody’s going to steal it, like you stole Einstein’s cosmological constant?”

To make these jokes timely and accurate, while sprinkling the sets with authentic scientific plots and posters, the show’s writers depend on one physicist, David Saltzberg. Since the first episode, Saltzberg’s dose of realism has made science chic again, and has even been credited with increasing admissions to physics programs. Symmetry writer Brad Hooker asked the LHC physicist, former Tevatron researcher and University of California, Los Angeles professor to explain how he walks the tightrope between science and sitcom.

Brad: How many of your suggestions are put into the show?

David: In general, when they ask for something, they use it. But it’s never anything that’s funny or moves the story along. It’s the part that you don’t need to understand. They explained to me in the beginning that you can watch an I Love Lucy rerun and not understand Spanish, but understand that Ricky Ricardo is angry. That’s all the level of science understanding needed for the show.

B: These references are current. Astrophysicist Saul Perlmutter of Lawrence Berkeley National Laboratory was mentioned on the show just weeks after winning the Nobel Prize for discovering the accelerating expansion of the universe.

D: Right. And you may wonder why they chose Saul Perlmutter, as opposed to the other two winners. It just comes down to that they liked the sound of his name better. Things like that matter. The writers think of the script in terms of music and the rhythm of the lines. I usually give them multiple choices because I don’t know if they want something short or long or something with odd sounds in it. They really think about that kind of thing.

B: Do the writers ever ask you to explain the science and it goes completely over their heads?

D: We respond by email so I don’t really know. But I don’t think it goes over their heads because you can Wikipedia anything.

One thing was a little difficult for me: they asked for a spoof of the Born-Oppenheimer approximation, which is harder than it sounds. But for the most part it’s just a matter of narrowing it down to a few choices. There are so many ways to go through it and I deliberately chose things that are current.

First of all, these guys live in our universe—they’re talking about the things we physicists are talking about. And also, there isn’t a whole lot of science journalism out there. It’s been cut back a lot. In getting the words out there, whether it’s “dark matter” or “topological insulators,” hopefully some fraction of the audience will Google it.

B: Are you working with any other science advisors? I know one character is a neurobiologist.

D: Luckily the actress who portrays her, Mayim Bialik, is also a neuroscientist. She has a PhD in neuroscience from UCLA. So that worked out really well because I don’t know all of physics, let alone all of science. What I’m able to do with the physics is say, “Well, we don’t really talk like that even though it’s technically correct.” And I can’t do that for biology, but she can.

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image courtesy of The Big Bang Theory, Warner Bros.[end-div]

First, There Was Bell Labs

The results of innovation surround us. Innovation nourishes our food supply and helps us heal when we are sick; innovation lubricates our businesses, underlies our products, and facilitates our interactions. Innovation stokes our forward momentum.

But before many of our recent technological marvels could come into being, some fundamental innovations were necessary. These were the technical precursors and catalysts that paved the way for the iPad and the smartphone, GPS, search engines and microwave ovens. The building blocks that made much of this possible included the transistor, the laser, the Unix operating system and the communication satellite. And all of these came from one place, Bell Labs, during a short but highly productive period from 1920 to 1980.

In his new book, “The Idea Factory”, Jon Gertner explores how and why so much innovation sprang from the visionary leaders, engineers and scientists of Bell Labs.

[div class=attrib]From the New York Times:[end-div]

In today’s world of Apple, Google and Facebook, the name may not ring any bells for most readers, but for decades — from the 1920s through the 1980s — Bell Labs, the research and development wing of AT&T, was the most innovative scientific organization in the world. As Jon Gertner argues in his riveting new book, “The Idea Factory,” it was where the future was invented.

Indeed, Bell Labs was behind many of the innovations that have come to define modern life, including the transistor (the building block of all digital products), the laser, the silicon solar cell and the computer operating system called Unix (which would serve as the basis for a host of other computer languages). Bell Labs developed the first communications satellites, the first cellular telephone systems and the first fiber-optic cable systems.

The Bell Labs scientist Claude Elwood Shannon effectively founded the field of information theory, which would revolutionize thinking about communications; other Bell Labs researchers helped push the boundaries of physics, chemistry and mathematics, while defining new industrial processes like quality control.

In “The Idea Factory,” Mr. Gertner — an editor at Fast Company magazine and a writer for The New York Times Magazine — not only gives us spirited portraits of the scientists behind Bell Labs’ phenomenal success, but he also looks at the reasons that research organization became such a fount of innovation, laying the groundwork for the networked world we now live in.

It’s clear from this volume that the visionary leadership of the researcher turned executive Mervin Kelly played a large role in Bell Labs’ sense of mission and its ability to institutionalize the process of innovation so effectively. Kelly believed that an “institute of creative technology” needed a critical mass of talented scientists — whom he housed in a single building, where physicists, chemists, mathematicians and engineers were encouraged to exchange ideas — and he gave his researchers the time to pursue their own investigations “sometimes without concrete goals, for years on end.”

That freedom, of course, was predicated on the steady stream of revenue provided (in the years before the AT&T monopoly was broken up in the early 1980s) by the monthly bills paid by telephone subscribers, which allowed Bell Labs to function “much like a national laboratory.” Unlike, say, many Silicon Valley companies today, which need to keep an eye on quarterly reports, Bell Labs in its heyday could patiently search out what Mr. Gertner calls “new and fundamental ideas,” while using its immense engineering staff to “develop and perfect those ideas” — creating new products, then making them cheaper, more efficient and more durable.

Given the evolution of the digital world we inhabit today, Kelly’s prescience is stunning in retrospect. “He had predicted grand vistas for the postwar electronics industry even before the transistor,” Mr. Gertner writes. “He had also insisted that basic scientific research could translate into astounding computer and military applications, as well as miracles within the communications systems — ‘a telephone system of the future,’ as he had said in 1951, ‘much more like the biological systems of man’s brain and nervous system.’ ”

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image: Jack A. Morton (left) and J. R. Wilson at Bell Laboratories, circa 1948. Courtesy of Computer History Museum.[end-div]

GE and EE: The Dark Side of Facebook

That’s G.E. and E.E., not “Glee”. In social psychology circles GE means grandiose exhibitionism, while EE stands for entitlement / exploitativeness. Researchers find that having a large number of “ifriends” on social networks, such as Facebook, correlates with high levels of GE and EE. The greater the number of friends you have online, the greater the odds that you are a chronic attention seeker with shallow relationships or a “socially disruptive narcissist”.

[div class=attrib]From the Guardian:[end-div]

People who score highly on the Narcissistic Personality Inventory questionnaire had more friends on Facebook, tagged themselves more often and updated their newsfeeds more regularly.

The research comes amid increasing evidence that young people are becoming increasingly narcissistic, and obsessed with self-image and shallow friendships.

The latest study, published in the journal Personality and Individual Differences, also found that narcissists responded more aggressively to derogatory comments made about them on the social networking site’s public walls and changed their profile pictures more often.

A number of previous studies have linked narcissism with Facebook use, but this is some of the first evidence of a direct relationship between Facebook friends and the most “toxic” elements of narcissistic personality disorder.

Researchers at Western Illinois University studied the Facebook habits of 294 students, aged between 18 and 65, and measured two “socially disruptive” elements of narcissism – grandiose exhibitionism (GE) and entitlement/exploitativeness (EE).

GE includes “self-absorption, vanity, superiority, and exhibitionistic tendencies” and people who score high on this aspect of narcissism need to be constantly at the centre of attention. They often say shocking things and inappropriately self-disclose because they cannot stand to be ignored or waste a chance of self-promotion.

The EE aspect includes “a sense of deserving respect and a willingness to manipulate and take advantage of others”.

The research revealed that the higher someone scored on aspects of GE, the greater the number of friends they had on Facebook, with some amassing more than 800.

Those scoring highly on EE and GE were also more likely to accept friend requests from strangers and seek social support, but less likely to provide it, according to the research.

Carol Craig, a social scientist and chief executive of the Centre for Confidence and Well-being, said young people in Britain were becoming increasingly narcissistic and Facebook provided a platform for the disorder.

“The way that children are being educated is focussing more and more on the importance of self esteem – on how you are seen in the eyes of others. This method of teaching has been imported from the US and is ‘all about me’.

“Facebook provides a platform for people to self-promote by changing profile pictures and showing how many hundreds of friends you have. I know of some who have more than 1,000.”

Dr Viv Vignoles, senior lecturer in social psychology at Sussex University, said there was “clear evidence” from studies in America that college students were becoming increasingly narcissistic.

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image “Looking at You, and You and You”, Jennifer Daniel, an illustrator, created a fan page on Facebook and asked friends to submit their images for this mosaic; 238 of them did so. Courtesy of the New York Times.[end-div]

Tube Map

The iconic Tube Map showing London’s metropolitan, mostly subterranean subway system has inspired many artists and designers. Here’s another fascinating interpretation created by Sam Loman:

Spectres in the Urban Jungle

Following on from our recent article on contemporary artist Rob Mulholland, whose mirrored sculptures wander in a woodland in Scotland, comes Chinese artist Liu Bolin, with his series of “invisible” self-portraits.

Bolin paints himself into the background, and then disappears. Following many hours of meticulous preparation Bolin merges with his surroundings in a performance that makes U.S. military camouflage systems look almost amateurish.

Liu Bolin’s fourth solo exhibit is currently showing at Eli Klein gallery.

Spectres in the Forest

The best art is simple and evocative.

Like eerie, imagined alien life forms, mirrored sculptures meander through a woodland in Scotland. The life-size camouflaged figures are on display at the David Marshall Lodge near Aberfoyle, Scotland.

Contemporary artist Rob Mulholland designed the series of six mirrored sculptures, named Vestige, which are shaped from silhouettes of people he knows.

In Rob Mulholland’s own words:

The essence of who we are as individuals in relationship to others and our given environment forms a strong aspect of my artistic practise.

In Vestige I wanted to explore this relationship further by creating a group, a community within the protective elements of the woods, reflecting the past inhabitants of the space.

…

The six male and female figures not only absorb their environment, they create a notion of non-space, a link with the past that forces us both as individuals and as a society to consider our relationship with our natural environment.

[div class=attrib]See more of Rob Mulholland’s art after the jump.[end-div]

Culturomics

[div class=attrib]From the Wall Street Journal:[end-div]

Can physicists produce insights about language that have eluded linguists and English professors? That possibility was put to the test this week when a team of physicists published a paper drawing on Google’s massive collection of scanned books. They claim to have identified universal laws governing the birth, life course and death of words.

The paper marks an advance in a new field dubbed “Culturomics”: the application of data-crunching to subjects typically considered part of the humanities. Last year a group of social scientists and evolutionary theorists, plus the Google Books team, showed off the kinds of things that could be done with Google’s data, which include the contents of five-million-plus books, dating back to 1800.

Published in Science, that paper gave the best-yet estimate of the true number of words in English—a million, far more than any dictionary has recorded (the 2002 Webster’s Third New International Dictionary has 348,000). More than half of the language, the authors wrote, is “dark matter” that has evaded standard dictionaries.

The paper also tracked word usage through time (each year, for instance, 1% of the world’s English-speaking population switches from “sneaked” to “snuck”). It also showed that we seem to be putting history behind us more quickly, judging by the speed with which terms fall out of use. References to the year “1880” dropped by half in the 32 years after that date, while the half-life of “1973” was a mere decade.
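To make the half-life comparison concrete, here is a minimal Python sketch. It assumes mentions of a year fade by simple exponential decay, which is an illustrative assumption and not a model stated in the article; the function name `remaining_fraction` is hypothetical, and only the two half-lives (32 years for “1880”, 10 years for “1973”) come from the text.

```python
def remaining_fraction(years_elapsed: float, half_life_years: float) -> float:
    """Fraction of the original mention frequency still left.

    Assumes plain exponential decay (an illustrative assumption):
    after one half-life the frequency has halved, after two it has
    quartered, and so on.
    """
    return 0.5 ** (years_elapsed / half_life_years)


# Half-lives quoted in the article, in years.
HALF_LIVES = {"1880": 32.0, "1973": 10.0}

if __name__ == "__main__":
    # Compare both year-terms over the same 32-year window.
    for term, half_life in HALF_LIVES.items():
        left = remaining_fraction(32.0, half_life)
        print(f"References to {term} after 32 years: {left:.1%} of the starting level")
```

Under this assumption, over the same 32-year window references to “1880” fall to half their starting level, while references to “1973” go through more than three halvings.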

In the new paper, Alexander Petersen, Joel Tenenbaum and their co-authors looked at the ebb and flow of word usage across various fields. “All these different words are battling it out against synonyms, variant spellings and related words,” says Mr. Tenenbaum. “It’s an inherently competitive, evolutionary environment.”

When the scientists analyzed the data, they found striking patterns not just in English but also in Spanish and Hebrew. There has been, the authors say, a “dramatic shift in the birth rate and death rates of words”: Deaths have increased and births have slowed.

English continues to grow—the 2011 Culturomics paper suggested a rate of 8,500 new words a year. The new paper, however, says that the growth rate is slowing. Partly because the language is already so rich, the “marginal utility” of new words is declining: Existing things are already well described. This led them to a related finding: The words that manage to be born now become more popular than new words used to get, possibly because they describe something genuinely new (think “iPod,” “Internet,” “Twitter”).

Higher death rates for words, the authors say, are largely a matter of homogenization. The explorer William Clark (of Lewis & Clark) spelled “Sioux” 27 different ways in his journals (“Sieoux,” “Seaux,” “Souixx,” etc.), and several of those variants would have made it into 19th-century books. Today spell-checking programs and vigilant copy editors choke off such chaotic variety much more quickly, in effect speeding up the natural selection of words. (The database does not include the world of text- and Twitter-speak, so some of the verbal chaos may just have shifted online.)

[div class=attrib]Read the entire article here.[end-div]

Everything Comes in Threes

[div class=attrib]From the Guardian:[end-div]

Last week’s results from the Daya Bay neutrino experiment were the first real measurement of the third neutrino mixing angle, θ13 (theta one-three). There have been previous experiments which set limits on the angle, but this is the first time it has been shown to be significantly different from zero.

Since θ13 is a fundamental parameter in the Standard Model of particle physics, this would be an important measurement anyway. But there’s a bit more to it than that.

Neutrinos – whatever else they might be doing – mix up amongst themselves as they travel through space. This is a quantum mechanical effect, and comes from the fact that there are two ways of defining the three types of neutrino.

You can define them by the way they are produced. So a neutrino which is produced (or destroyed) in conjunction with an electron is an “electron neutrino”. If a muon is involved, it’s a “muon neutrino”. The third one is a “tau neutrino”. We call this the “flavour”.

Or you can define them by their masses. Usually we just call this definition neutrinos 1, 2 and 3.

The two definitions don’t line up, and there is a matrix which tells you how much of each “flavour” neutrino overlaps with each “mass” one. This is the neutrino mixing matrix. Inside this matrix in the standard model there are potentially four parameters describing how the neutrinos mix.

You could just have two-way mixing. For example, the flavour states might just mix up neutrino 1 and 2, and neutrino 2 and 3. This would be the case if the angle θ13 were zero. If it is bigger than zero (as Daya Bay have now shown) then neutrino 1 also mixes with neutrino 3. In this case, and only in this case, a fourth parameter is also allowed in the matrix. This fourth parameter (δ) is one we haven’t measured yet, but now we know it is there. And the really important thing is, if it is there, and also not zero, then it introduces an asymmetry between matter and antimatter.
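For readers who want to see where the four parameters sit, the mixing matrix is commonly written as a product of three rotations. The form below is the standard textbook parameterisation, not something taken from the article itself; the shorthand c and s for cosines and sines is a notational assumption introduced here.

```latex
U =
\begin{pmatrix}
  1 & 0 & 0 \\
  0 & c_{23} & s_{23} \\
  0 & -s_{23} & c_{23}
\end{pmatrix}
\begin{pmatrix}
  c_{13} & 0 & s_{13}\,e^{-i\delta} \\
  0 & 1 & 0 \\
  -s_{13}\,e^{i\delta} & 0 & c_{13}
\end{pmatrix}
\begin{pmatrix}
  c_{12} & s_{12} & 0 \\
  -s_{12} & c_{12} & 0 \\
  0 & 0 & 1
\end{pmatrix},
\qquad
c_{ij} = \cos\theta_{ij}, \quad s_{ij} = \sin\theta_{ij}
```

The fourth parameter appears only multiplied by the sine of theta one-three. If that angle were exactly zero, the middle matrix would reduce to the identity and the fourth parameter would drop out of the matrix entirely, which is why a non-zero measurement matters.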

This is important because currently we don’t know why there is more matter than antimatter around. We also don’t know why there are three copies of neutrinos (and indeed of each class of fundamental particle). But we know that three copies is the minimum number which allows some difference in the way matter and antimatter experience the weak nuclear force. This is the kind of clue which sets off big klaxons in the minds of physicists: New physics hiding somewhere here! It strongly suggests that these two not-understood facts are connected by some bigger, better theory than the one we have.

We’ve already measured a matter-antimatter difference for quarks; a non-zero θ13 means there can be a difference for neutrinos too. More clues.

[div class=attrib]Read the entire article here.[end-div]

[div class=attrib]Image: The first use of a hydrogen bubble chamber to detect neutrinos, on November 13, 1970. A neutrino hit a proton in a hydrogen atom. The collision occurred at the point where three tracks emanate on the right of the photograph. Courtesy of Wikipedia.[end-div]

Language Translation With a Cool Twist

The last couple of decades have shown a remarkable improvement in the ability of software to translate the written word from one language to another. Yahoo Babel Fish and Google Translate are good examples. Also, voice recognition systems, such as those you encounter every day when trying desperately to connect with a real customer service rep, have taken great leaps forward. Apple’s Siri now leads the pack.

But, what do you get if you combine translation and voice recognition technology? Well, you get a new service that translates the spoken word in your native language to a second. And, here’s the neat twist. The system translates into the second language while keeping a voice like yours. The technology springs from Microsoft’s Research division in Redmond, WA.

[div class=attrib]From Technology Review:[end-div]

Researchers at Microsoft have made software that can learn the sound of your voice, and then use it to speak a language that you don’t. The system could be used to make language tutoring software more personal, or to make tools for travelers.

In a demonstration at Microsoft’s Redmond, Washington, campus on Tuesday, Microsoft research scientist Frank Soong showed how his software could read out text in Spanish using the voice of his boss, Rick Rashid, who leads Microsoft’s research efforts. In a second demonstration, Soong used his software to grant Craig Mundie, Microsoft’s chief research and strategy officer, the ability to speak Mandarin.

In English, a synthetic version of Mundie’s voice welcomed the audience to an open day held by Microsoft Research, concluding, “With the help of this system, now I can speak Mandarin.” The phrase was repeated in Mandarin Chinese, in what was still recognizably Mundie’s voice.

“We will be able to do quite a few scenario applications,” said Soong, who created the system with colleagues at Microsoft Research Asia, the company’s second-largest research lab, in Beijing, China.

[div class=attrib]Read the entire article here.[end-div]

Skyscrapers A La Mode

Since 2006 Evolo architecture magazine has run a competition for architects to bring life to their most fantastic skyscraper designs. The finalists of the 2012 competition presented some stunning ideas, topped by the winner, Himalaya Water Tower, from Zhi Zheng, Hongchuan Zhao and Dongbai Song of China.

[div class=attrib]From Evolo:[end-div]

Housed within 55,000 glaciers in the Himalaya Mountains sits 40 percent of the world’s fresh water. The massive ice sheets are melting at a faster-than-ever pace due to climate change, posing possible dire consequences for the continent of Asia and the entire world, and especially for the villages and cities that sit on the seven rivers that are fed by the Himalayas’ runoff, as the rivers respond with erratic flooding or drought.

The “Himalaya Water Tower” is a skyscraper located high in the mountain range that serves to store water and helps regulate its dispersal to the land below as the mountains’ natural supplies dry up. The skyscraper, which can be replicated en masse, will collect water in the rainy season, purify it, freeze it into ice and store it for future use. The water distribution schedule will evolve with the needs of residents below; while it can be used to help in times of current drought, it’s also meant to store plentiful water for future generations.

Follow the other notable finalists at Evolo magazine after the jump.

Have Wormhole, Will Travel

Intergalactic travel just became a lot easier, if only in theory for the moment.

[div class=attrib]From New Scientist:[end-div]

IT IS not every day that a piece of science fiction takes a step closer to nuts-and-bolts reality. But that is what seems to be happening to wormholes. Enter one of these tunnels through space-time, and a few short steps later you may emerge near Pluto or even in the Andromeda galaxy millions of light years away.

You probably won’t be surprised to learn that no one has yet come close to constructing such a wormhole. One reason is that they are notoriously unstable. Even on paper, they have a tendency to snap shut in the blink of an eye unless they are propped open by an exotic form of matter with negative energy, whose existence is itself in doubt.

Now, all that has changed. A team of physicists from Germany and Greece has shown that building wormholes may be possible without any input from negative energy at all. “You don’t even need normal matter with positive energy,” says Burkhard Kleihaus of the University of Oldenburg in Germany. “Wormholes can be propped open with nothing.”

The findings raise the tantalising possibility that we might finally be able to detect a wormhole in space. Civilisations far more advanced than ours may already be shuttling back and forth through a galactic-wide subway system constructed from wormholes. And eventually we might even be able to use them ourselves as portals to other universes.

Wormholes first emerged in Einstein’s general theory of relativity, which famously shows that gravity is nothing more than the hidden warping of space-time by energy, usually the mass-energy of stars and galaxies. Soon after Einstein published his equations in 1916, Austrian physicist Ludwig Flamm discovered that they also predicted conduits through space and time.

But it was Einstein himself who made detailed investigations of wormholes with Nathan Rosen. In 1935, they concocted one consisting of two black holes, connected by a tunnel through space-time. Travelling through their wormhole was only possible if the black holes at either end were of a special kind. A conventional black hole has such a powerful gravitational field that material sucked in can never escape once it has crossed what is called the event horizon. The black holes at the end of an Einstein-Rosen wormhole would be unencumbered by such points of no return.

Einstein and Rosen’s wormholes seemed a mere curiosity for another reason: their destination was inconceivable. The only connection the wormholes offered from our universe was to a region of space in a parallel universe, perhaps with its own stars, galaxies and planets. While today’s theorists are comfortable with the idea of our universe being just one of many, in Einstein and Rosen’s day such a multiverse was unthinkable.

Fortunately, it turned out that general relativity permitted the existence of another type of wormhole. In 1955, American physicist John Wheeler showed that it was possible to connect two regions of space in our universe, which would be far more useful for fast intergalactic travel. He coined the catchy name wormhole to add to black holes, which he can also take credit for.

The trouble is the wormholes of Wheeler and Einstein and Rosen all have the same flaw. They are unstable. Send even a single photon of light zooming through and it instantly triggers the formation of an event horizon, which effectively snaps shut the wormhole.

Bizarrely, it is the American planetary astronomer Carl Sagan who is credited with moving the field on. In his science fiction novel, Contact, he needed a quick and scientifically sound method of galactic transport for his heroine – played by Jodie Foster in the movie. Sagan asked theorist Kip Thorne at the California Institute of Technology in Pasadena for help, and Thorne realised a wormhole would do the trick. In 1987, he and his graduate students Michael Morris and Ulvi Yurtsever worked out the recipe to create a traversable wormhole. It turned out that the mouths could be kept open by hypothetical material possessing a negative energy. Given enough negative energy, such a material has a repulsive form of gravity, which physically pushes open the wormhole mouth.

Negative energy is not such a ridiculous idea. Imagine two parallel metal plates sitting in a vacuum. If you place them close together the vacuum between them has negative energy – that is, less energy than the vacuum outside. This is because a normal vacuum is like a roiling sea of waves, and the waves that are too big to fit between the plates are naturally excluded. This leaves less energy inside the plates than outside.

Unfortunately, this kind of negative energy exists in quantities far too feeble to prop open a wormhole mouth. Not only that but a Thorne-Morris-Yurtsever wormhole that is big enough for someone to crawl through requires a tremendous amount of energy – equivalent to the energy pumped out in a year by an appreciable fraction of the stars in the galaxy.

Back to the drawing board then? Not quite. There may be a way to bypass those difficulties. All the wormholes envisioned until recently assume that Einstein’s theory of gravity is correct. In fact, this is unlikely to be the case. For a start, the theory breaks down at the heart of a black hole, as well as at the beginning of time in the big bang. Also, quantum theory, which describes the microscopic world of atoms, is incompatible with general relativity. Since quantum theory is supremely successful – explaining everything from why the ground is solid to how the sun shines – many researchers believe that Einstein’s theory of gravity must be an approximation of a deeper theory.

[div class=attrib]Read the entire article here.[end-div]

[div class=attrib]Image of a traversable wormhole which connects the place in front of the physical institutes of Tübingen University with the sand dunes near Boulogne sur Mer in the north of France. Courtesy of Wikipedia.[end-div]

Creativity: Insight, Shower, Wine, Perspiration? Yes

Some believe creativity stems from a sudden insightful realization, a bolt from the blue that awakens the imagination. Others believe creativity comes from years of discipline and hard work. Well, both groups are correct, but the answer is a little more complex.

[div class=attrib]From the Wall Street Journal:[end-div]

Creativity can seem like magic. We look at people like Steve Jobs and Bob Dylan, and we conclude that they must possess supernatural powers denied to mere mortals like us, gifts that allow them to imagine what has never existed before. They’re “creative types.” We’re not.

But creativity is not magic, and there’s no such thing as a creative type. Creativity is not a trait that we inherit in our genes or a blessing bestowed by the angels. It’s a skill. Anyone can learn to be creative and to get better at it. New research is shedding light on what allows people to develop world-changing products and to solve the toughest problems. A surprisingly concrete set of lessons has emerged about what creativity is and how to spark it in ourselves and our work.

The science of creativity is relatively new. Until the Enlightenment, acts of imagination were always equated with higher powers. Being creative meant channeling the muses, giving voice to the gods. (“Inspiration” literally means “breathed upon.”) Even in modern times, scientists have paid little attention to the sources of creativity.

But over the past decade, that has begun to change. Imagination was once thought to be a single thing, separate from other kinds of cognition. The latest research suggests that this assumption is false. It turns out that we use “creativity” as a catchall term for a variety of cognitive tools, each of which applies to particular sorts of problems and is coaxed to action in a particular way.

Does the challenge that we’re facing require a moment of insight, a sudden leap in consciousness? Or can it be solved gradually, one piece at a time? The answer often determines whether we should drink a beer to relax or hop ourselves up on Red Bull, whether we take a long shower or stay late at the office.

The new research also suggests how best to approach the thorniest problems. We tend to assume that experts are the creative geniuses in their own fields. But big breakthroughs often depend on the naive daring of outsiders. For prompting creativity, few things are as important as time devoted to cross-pollination with fields outside our areas of expertise.

Let’s start with the hardest problems, those challenges that at first blush seem impossible. Such problems are typically solved (if they are solved at all) in a moment of insight.

Consider the case of Arthur Fry, an engineer at 3M in the paper products division. In the winter of 1974, Mr. Fry attended a presentation by Sheldon Silver, an engineer working on adhesives. Mr. Silver had developed an extremely weak glue, a paste so feeble it could barely hold two pieces of paper together. Like everyone else in the room, Mr. Fry patiently listened to the presentation and then failed to come up with any practical applications for the compound. What good, after all, is a glue that doesn’t stick?

On a frigid Sunday morning, however, the paste would re-enter Mr. Fry’s thoughts, albeit in a rather unlikely context. He sang in the church choir and liked to put little pieces of paper in the hymnal to mark the songs he was supposed to sing. Unfortunately, the little pieces of paper often fell out, forcing Mr. Fry to spend the service frantically thumbing through the book, looking for the right page. It seemed like an unfixable problem, one of those ordinary hassles that we’re forced to live with.

But then, during a particularly tedious sermon, Mr. Fry had an epiphany. He suddenly realized how he might make use of that weak glue: It could be applied to paper to create a reusable bookmark! Because the adhesive was barely sticky, it would adhere to the page but wouldn’t tear it when removed. That revelation in the church would eventually result in one of the most widely used office products in the world: the Post-it Note.

Mr. Fry’s invention was a classic moment of insight. Though such events seem to spring from nowhere, as if the cortex is surprising us with a breakthrough, scientists have begun studying how they occur. They do this by giving people “insight” puzzles, like the one that follows, and watching what happens in the brain:

A man has married 20 women in a small town. All of the women are still alive, and none of them is divorced. The man has broken no laws. Who is the man?

[div class=attrib]Read the entire article here.[end-div]

[div class=attrib]Image courtesy of Google search.[end-div]

Your Favorite Sitcoms in Italian, Russian or Mandarin

[tube]38iBmsCLwNM[/tube]

Secretly, you may be wishing you had a 12-foot satellite antenna in your backyard to soak up these esoteric, alien signals directly. How about the 1990s sitcom “The Nanny” dubbed into Italian, or a Russian remake of “Everybody Loves Raymond”, a French “Law and Order”, or our favorite, a British remake of “Jersey Shore” set in Newcastle, named “Geordie Shore”? This would make an interesting anthropological study, and it partly explains why other countries may take a rather dim view of U.S. culture.

The top ten U.S. cultural exports courtesy of FlavorWire after the jump.

How to Be a Great Boss… From Hell, For Dummies

Some very basic lessons on how to be a truly bad boss. Lesson number one: keep your employees from making any contribution to or progress on meaningful work.

[div class=attrib]From the Washington Post:[end-div]

Recall your worst day at work, when events of the day left you frustrated, unmotivated by the job, and brimming with disdain for your boss and your organization. That day is probably unforgettable. But do you know exactly how your boss was able to make it so horrible for you? Our research provides insight into the precise levers you can use to re-create that sort of memorable experience for your own underlings.

Over the past 15 years, we have studied what makes people happy and engaged at work. In discovering the answer, we also learned a lot about misery at work. Our research method was pretty straightforward. We collected confidential electronic diaries from 238 professionals in seven companies, each day for several months. All told, those diaries described nearly 12,000 days – how people felt, and the events that stood out in their minds. Systematically analyzing those diaries, we compared the events occurring on the best days with those on the worst.

What we discovered is that the key factor you can use to make employees miserable on the job is to simply keep them from making progress in meaningful work.

People want to make a valuable contribution, and feel great when they make progress toward doing so. Knowing this progress principle is the first step to knowing how to destroy an employee’s work life. Many leaders, from team managers to CEOs, are already surprisingly expert at smothering employee engagement. In fact, on one-third of those 12,000 days, the person writing the diary was either unhappy at work, demotivated by the work, or both.

That’s pretty efficient work-life demolition, but it leaves room for improvement.