I can attest that living at high altitude, say above 6,000 ft, has its benefits. The air is usually crisper and cleaner, and the views go on forever. But one of the drawbacks is that the air is also thinner; there’s less oxygen floating around. Some people are more susceptible to the oxygen deficit than others. The milder symptoms usually take the form of headache, dizziness and disorientation. In more serious cases, acute mountain sickness (AMS) can lead to severe nausea, cognitive impairment and even death. When AMS strikes, the best advice is to seek a lower elevation immediately and rest.

But, of course, wherever there is a human ailment, there will be a snake oil salesman ready to peddle a miraculous new cure, and AMS is no different. So, if you’re visiting the high country soon, beware of the altitude revival massage, oxygen-rich structured water and high-altitude lotions. Caveat emptor.

From the NYT:

When the pop band Panic! at the Disco played in Colorado at the Red Rocks amphitheater more than a mile above sea level, the frontman, Brendon Urie, joked that his “drug of choice” was oxygen.

Mr. Urie tripled his elevation to 6,400 feet when he traveled from a gig in Las Vegas to the stage outside Denver in October, so he kept an oxygen tank nearby for quick hits when he felt what he called “lightheaded” during the performance.

“It acted as a kind of security blanket,” he said in an email.

And there are a lot of security blankets being sold to Rocky Mountain visitors: oxygen therapies, oils, pills and wristbands, to name a few. They come with claims of preventing or reducing altitude sickness, promises that in most cases aren’t backed by research.

Still, many skiers are willing to spend freely on these treatments, and perhaps it’s not surprising. People can be desperate to salvage their vacations when the thin air causes headaches, nausea, fatigue, dizziness and worse. But acute mountain sickness (AMS) can be a serious condition, so it behooves travelers to understand that it can often be prevented, and that if it strikes, not all remedies are equal.

Over the last two decades, 32 people have died in Colorado from the effects of high altitude, according to data provided by Mark Salley, spokesman for the Colorado Department of Public Health and Environment. In addition, there were 1,350 trips to the state’s emergency rooms for altitude sickness last year, with 85 percent of those patients coming from out of state, he said.

Not everyone is affected by altitude, but among visitors to Colorado’s Summit County — location of the ski resorts Breckenridge, Copper Mountain, Arapahoe Basin, Loveland and Keystone — 22 percent of those staying at 7,000 to 9,000 feet experienced AMS, while at 10,000 feet, it rose to 42 percent, according to medical studies cited in an article by Dr. Peter Hackett and Robert Roach published in The New England Journal of Medicine in July 2001.

It’s impossible to predict who will be affected, though research has found that those who are obese tend to be more susceptible. Meanwhile, those over age 60 have a slightly lower risk. But whether a person is a child or adult, male or female, fit or out of shape doesn’t seem to make a significant difference, said Mr. Roach, now director of the Altitude Research Center at the University of Colorado Anschutz Medical Campus in Aurora, Colo.

Acute mountain sickness is caused by the lack of oxygen in the lower air pressure that exists at higher altitudes. It usually doesn’t affect people below 8,000 feet, although it can, according to the National Institutes of Health.

“It’s horrible,” said Laura Lane, 32, who, despite living at 5,000 feet in Fort Collins, Colo., is one of those routinely affected by higher elevations. Early on, it makes her nauseated and gives her a “crushing” feeling, while simultaneously making her feel as if her head “is being split in two,” she said.

Read the entire article here.

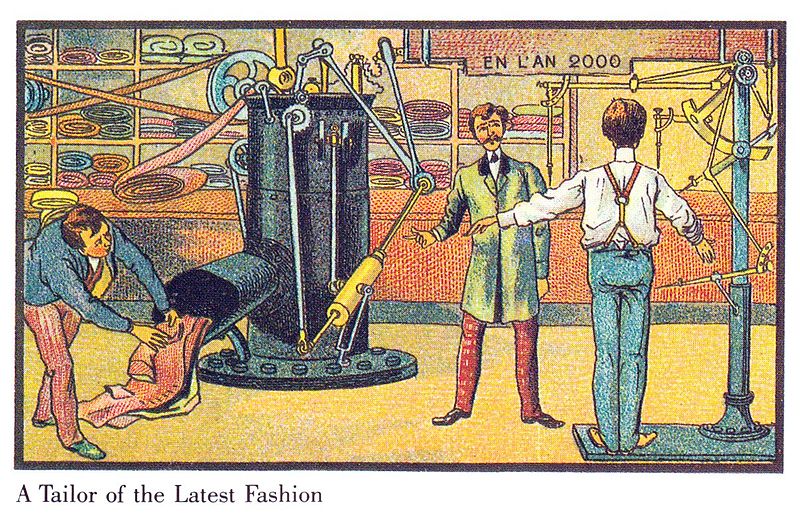

Image: View from South Arapaho Peak, Indian Peaks Wilderness, Colorado. Altitude 13,397 ft. Courtesy of the author.