Shakespeare, meet thy DNA. The most famous literary figure in the English language recently had a rendezvous with the most famous and most studied of molecules. Together, chemists, cell biologists, geneticists and computer scientists are doing something remarkable: storing information in the sequence of nucleotide bases along the DNA molecule.

[div class=attrib]From ars technica:[end-div]

It’s easy to get excited about the idea of encoding information in single molecules, which seems to be the ultimate end of the miniaturization that has been driving the electronics industry. But it’s also easy to forget that we’ve been beaten there—by a few billion years. The chemical information present in biomolecules was critical to the origin of life and probably dates back to whatever interesting chemical reactions preceded it.

It’s only within the past few decades, however, that humans have learned to speak DNA. Even then, it took a while to develop the technology needed to synthesize and determine the sequence of large populations of molecules. But we’re there now, and people have started experimenting with putting binary data in biological form. Now, a new study has confirmed the flexibility of the approach by encoding everything from an MP3 to the decoding algorithm into fragments of DNA. The cost analysis done by the authors suggests that the technology may soon be suitable for decade-scale storage, provided current trends continue.

Trinary encoding

Computer data is in binary, while each location in a DNA molecule can hold any one of four bases (A, T, C, and G). Rather than using all that extra information capacity, however, the authors used it to avoid a technical problem. Stretches of a single type of base (say, TTTTT) are often not sequenced properly by current techniques—in fact, this was the biggest source of errors in the previous DNA data storage effort. So for this new encoding, they used one of the bases to break up long runs of any of the other three.

(To explain how this works practically, let’s say the A, T, and C encoded information, while G represents “more of the same.” If you had a run of four A’s, you could represent it as AAGA. But since the G doesn’t encode for anything in particular, TTGT can be used to represent four T’s. The only thing that matters is that there are no more than two identical bases in a row.)
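The parenthetical example above can be sketched in a few lines of Python. This follows only the article's simplified illustration (A, T and C carry data, G means "same base again"); the code used in the study itself may well differ, and the function names `break_runs` and `restore_runs` are made up for this sketch.

```python
DATA_BASES = "ATC"  # bases that carry information in the article's example
REPEAT = "G"        # stands for "the same base as the one before"


def break_runs(symbols: str) -> str:
    """Rewrite a string over A/T/C so no base appears three times in a row."""
    out = []
    for s in symbols:
        if s not in DATA_BASES:
            raise ValueError(f"unexpected symbol: {s!r}")
        # If the last two emitted bases already equal s, a third copy would
        # create a run of three, so emit the repeat marker instead.
        if len(out) >= 2 and out[-1] == s and out[-2] == s:
            out.append(REPEAT)
        else:
            out.append(s)
    return "".join(out)


def restore_runs(encoded: str) -> str:
    """Undo break_runs by replacing each G with the base decoded before it."""
    out = []
    for base in encoded:
        if base == REPEAT:
            if not out:
                raise ValueError("sequence cannot start with the repeat marker")
            out.append(out[-1])
        else:
            out.append(base)
    return "".join(out)
```

With these definitions, `break_runs("AAAA")` gives `"AAGA"` and `break_runs("TTTT")` gives `"TTGT"`, matching the examples in the text, and `restore_runs` recovers the original run.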

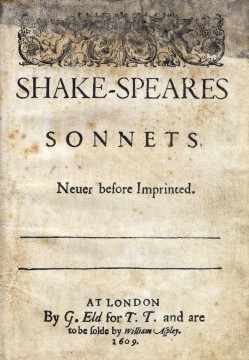

That leaves three bases to encode information, so the authors converted their information into trinary. In all, they encoded a large number of works: all 154 Shakespeare sonnets, a PDF of a scientific paper, a photograph of the lab some of them work in, and an MP3 of part of Martin Luther King’s “I have a dream” speech. For good measure, they also threw in the algorithm they use for converting binary data into trinary.
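The excerpt does not describe the authors' binary-to-trinary algorithm, so the following is only one simple way such a conversion could work: each byte becomes a fixed block of six base-3 digits, which is enough because 3^6 = 729 covers all 256 byte values. The fixed width and the function names are assumptions of this sketch, not details from the study.

```python
from typing import List

TRITS_PER_BYTE = 6  # assumption: fixed width; 3**6 = 729 >= 256


def bytes_to_trits(data: bytes) -> List[int]:
    """Convert each byte to six base-3 digits, most significant first."""
    trits = []
    for byte in data:
        digits = []
        for _ in range(TRITS_PER_BYTE):
            byte, remainder = divmod(byte, 3)
            digits.append(remainder)
        trits.extend(reversed(digits))
    return trits


def trits_to_bytes(trits: List[int]) -> bytes:
    """Inverse of bytes_to_trits."""
    if len(trits) % TRITS_PER_BYTE:
        raise ValueError("trit count must be a multiple of six")
    out = bytearray()
    for i in range(0, len(trits), TRITS_PER_BYTE):
        value = 0
        for t in trits[i:i + TRITS_PER_BYTE]:
            value = value * 3 + t
        if value > 255:
            raise ValueError("trit block does not encode a byte")
        out.append(value)
    return bytes(out)
```

A fixed six-digit block wastes some capacity (729 possible codes for 256 values) but keeps decoding trivial; a variable-length code would be denser at the cost of more complex decoding.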

Once in trinary, the results were encoded into the error-avoiding DNA code described above. The resulting sequence was then broken into chunks that were easy to synthesize. Each chunk came with parity information (for error correction), a short file ID, and some data that indicates the offset within the file (so, for example, that the sequence holds digits 500-600). To provide an added level of data security, 100-bases-long DNA inserts were staggered by 25 bases so that consecutive fragments had a 75-base overlap. Thus, many sections of the file were carried by four different DNA molecules.
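The staggering scheme can be illustrated with a small Python sketch. It cuts a sequence into 100-base fragments whose starts are 25 bases apart and tags each with its offset, as described above. The parity and file ID fields are left out, and the majority-vote reassembly is an assumption added here to show why the fourfold redundancy helps; it is not taken from the study.

```python
from collections import Counter


def fragment(sequence, length=100, step=25):
    """Return (offset, chunk) pairs covering the sequence with overlap."""
    last_start = max(len(sequence) - length, 0)
    offsets = list(range(0, last_start + 1, step))
    if offsets[-1] != last_start:
        offsets.append(last_start)  # make sure the tail is covered
    return [(off, sequence[off:off + length]) for off in offsets]


def coverage(frags, total):
    """Count how many fragments carry each position of the sequence."""
    counts = [0] * total
    for offset, chunk in frags:
        for i in range(offset, offset + len(chunk)):
            counts[i] += 1
    return counts


def reassemble(frags, total):
    """Rebuild the sequence, taking the most common base at each position."""
    votes = [Counter() for _ in range(total)]
    for offset, chunk in frags:
        for i, base in enumerate(chunk):
            votes[offset + i][base] += 1
    # Positions no fragment covers are marked "N" (unknown).
    return "".join(v.most_common(1)[0][0] if v else "N" for v in votes)
```

For a 400-base sequence this yields 13 fragments. Positions in the interior are carried by four fragments each, while the first and last 25 bases are carried by only one, which is consistent with the article's remark that "many sections" (rather than all) had fourfold coverage.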

And it all worked brilliantly—mostly. For most of the files, the authors’ sequencing and analysis protocol could reconstruct an error-free version of the file without any intervention. One, however, ended up with two 25-base-long gaps, presumably resulting from a particular sequence that is very difficult to synthesize. Based on parity and other data, they were able to reconstruct the contents of the gaps, but understanding why things went wrong in the first place would be critical to understanding how well suited this method is to long-term archiving of data.

[div class=attrib]Read the entire article following the jump.[end-div]

[div class=attrib]Image: Title page of Shakespeare’s Sonnets (1609). Courtesy of Wikipedia / Public Domain.[end-div]

For the first time scientists have built a computer software model of an entire organism from its molecular building blocks. This allows the model to predict previously unobserved cellular biological processes and behaviors. While the organism in question is a simple bacterium, this represents another huge advance in computational biology.
