Over the last few decades, robots have been steadily replacing humans in industrial and manufacturing sectors. Increasingly, robots are appearing in a broader array of service sectors; they’re stocking shelves, cleaning hotels, buffing windows, tending bar, dispensing cash.

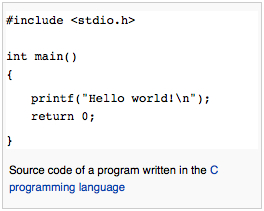

Nowadays you’re likely to be reading news articles filtered, and in some cases written, by pieces of code and business algorithms. Indeed, many boilerplate financial reports are now “written” by “analysts” who reside not in flesh and blood but virtually, inside server farms. Just recently a collection of circuitry and software trounced a human being at the strategy board game Go.

So, can computers progress from repetitive, mechanical and programmatic roles to more creative, free-wheeling vocations? Can computers become artists?

A group of data scientists, computer engineers, software developers and art historians set out to answer the question.

Jonathan Jones over at the Guardian has a few choice words on the result:

> I’ve been away for a few days and missed the April Fool stories in Friday’s papers – until I spotted the one about a team of Dutch “data analysts, developers, engineers and art historians” creating a new painting using digital technology: a virtual Rembrandt painted by a Rembrandt app. Hilarious! But wait, this was too late to be an April Fool’s joke. This is a real thing that is actually happening.
>
> What a horrible, tasteless, insensitive and soulless travesty of all that is creative in human nature. What a vile product of our strange time when the best brains dedicate themselves to the stupidest “challenges”, when technology is used for things it should never be used for and everybody feels obliged to applaud the heartless results because we so revere everything digital.
>
> Hey, they’ve replaced the most poetic and searching portrait painter in history with a machine. When are we going to get Shakespeare’s plays and Bach’s St Matthew Passion rebooted by computers? I cannot wait for Love’s Labours Have Been Successfully Functionalised by William Shakesbot.
>
> You cannot, I repeat, cannot, replicate the genius of Rembrandt van Rijn. His art is not a set of algorithms or stylistic tics that can be recreated by a human or mechanical imitator. He can only be faked – and a fake is a dead, dull thing with none of the life of the original. What these silly people have done is to invent a new way to mock art. Bravo to them! But the Dutch art historians and museums who appear to have lent their authority to such a venture are fools.
>
> Rembrandt lived from 1606 to 1669. His art only has meaning as a historical record of his encounters with the people, beliefs and anguishes of his time. Its universality is the consequence of the depth and profundity with which it does so. Looking into the eyes of Rembrandt’s Self-Portrait at the Age of 63, I am looking at time itself: the time he has lived, and the time since he lived. A man who stared, hard, at himself in his 17th-century mirror now looks back at me, at you, his gaze so deep his mottled flesh is just the surface of what we see.
>
> We glimpse his very soul. It’s not style and surface effects that make his paintings so great but the artist’s capacity to reveal his inner life and make us aware in turn of our own interiority – to experience an uncanny contact, soul to soul. Let’s call it the Rembrandt Shudder, that feeling I long for – and get – in front of every true Rembrandt masterpiece.
>
> Is that a mystical claim? The implication of the digital Rembrandt is that we get too sentimental and moist-eyed about art, that great art is just a set of mannerisms that can be digitised. I disagree. If it’s mystical to see Rembrandt as a special and unique human being who created unrepeatable, inexhaustible masterpieces of perception and intuition then count me a mystic.

Read the entire story at the Guardian.

Image: The Next Rembrandt (based on 168,263 Rembrandt painting fragments). Courtesy: Microsoft, Delft University of Technology, Mauritshuis (The Hague), Rembrandt House Museum (Amsterdam).

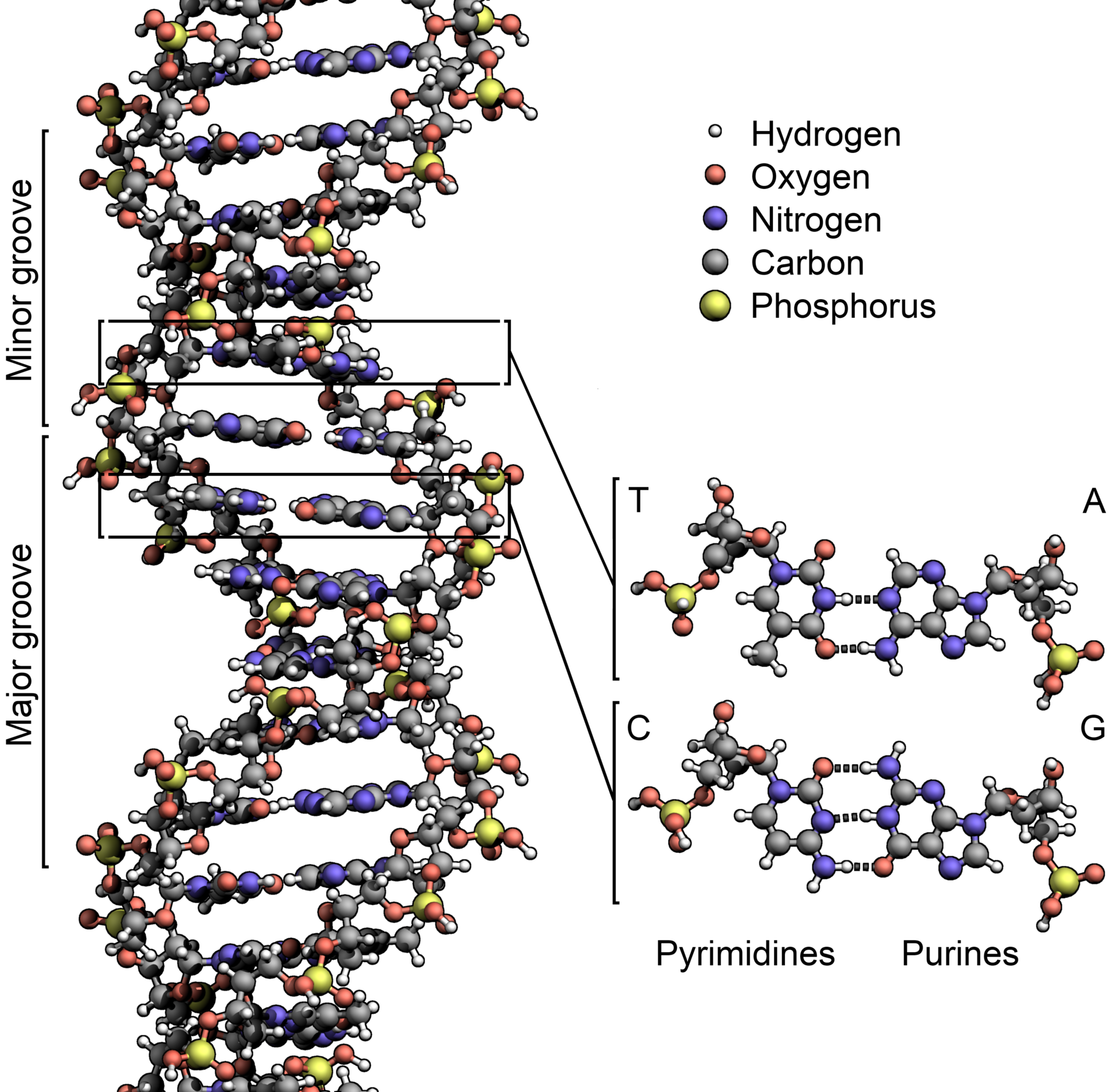

For the first time scientists have built a computer software model of an entire organism from its molecular building blocks. This allows the model to predict previously unobserved cellular biological processes and behaviors. While the organism in question is a simple bacterium, this represents another huge advance in computational biology.