By most accounts, the best place to be a woman is one that offers access to quality education and comprehensive healthcare, gender equality with men, and a meaningful balance between career and family. So where is this real-world Shangri-La? Some might suggest the land of opportunity, the United States. But that’s not even close. Nor is it Canada or Switzerland or Germany or the UK.

According to a recent Global Gender Gap report, and a number of other surveys, the best place to be born a girl is Iceland. Next on the list come Finland, Norway, and Sweden, with another Scandinavian country, Denmark, not too far behind in seventh place. By way of comparison, the US comes in 23rd — not great, but better than Afghanistan and Yemen.

From the Social Reader:

Icelanders are among the happiest and healthiest people on Earth. They publish more books per capita than any other country, and they have more artists. They boast the most prevalent belief in evolution — and elves, too. Iceland is the world’s most peaceful nation (the cops don’t even carry guns), and the best place for kids. Oh, and they’ve got a lesbian head of state, the world’s first. Granted, the national dish is putrefied shark meat, but you can’t have everything.

Iceland is also the best place to have a uterus, according to the folks at the World Economic Forum. The Global Gender Gap Report ranks countries based on where women have the most equal access to education and healthcare, and where they can participate most fully in the country’s political and economic life.

According to the 2013 report, Icelandic women pretty much have it all. Their sisters in Finland, Norway, and Sweden have it pretty good, too: those countries came in second, third and fourth, respectively. Denmark is not far behind at number seven.

The U.S. comes in at a dismal 23rd, which is a notch down from last year. At least we’re not Yemen, which is dead last out of 136 countries.

So how did a string of countries settled by Vikings become leaders in gender enlightenment? Bloodthirsty raiding parties don’t exactly sound like models of egalitarianism, and the early days weren’t pretty. Medieval Icelandic law prohibited women from bearing arms or even having short hair. Viking women could not be chiefs or judges, and they had to remain silent in assemblies. On the flip side, they could request a divorce and inherit property. But that’s not quite a blueprint for the world’s premier egalitarian society.

The change came with literacy, for one thing. Today almost everybody in Scandinavia can read, a legacy of the Reformation and early Christian missionaries, who were interested in teaching all citizens to read the Bible. Following a long period of turmoil, Nordic states also turned to literacy as a stabilizing force in the late 18th century. By 1842, Sweden had made education compulsory for both boys and girls.

Researchers have found that the more literate the society in general, the more egalitarian it is likely to be, and vice versa. But the literacy rate is very high in the U.S., too, so there must be something else going on in Scandinavia. Turns out that a whole smorgasbord of ingredients makes gender equality a high priority in Nordic countries.

To understand why, let’s take a look at religion. The Scandinavian Lutherans, who turned away from the excesses of the medieval Catholic Church, were concerned about equality — especially the disparity between rich and poor. They thought that individuals had some inherent rights that could not just be bestowed by the powerful, and this may have opened them to the idea of rights for women. Lutheran state churches in Denmark, Sweden, Finland, Norway and Iceland have had female priests since the middle of the 20th century, and today, the Swedish Lutheran Church even has a female archbishop.

Or maybe it’s just that there’s not much religion at all. Scandinavians aren’t big churchgoers. They tend to look at morality from a secular point of view, where there’s not so much obsessive focus on sexual issues and less interest in controlling women’s behavior and activities. Scandinavia’s secularism decoupled sex from sin, and this worked out well for females. They came to be seen as having the right to sexual experience just like men, and reproductive freedom, too. Girls and boys learn about contraception in school (and even the pleasure of orgasms), and most cities have youth clinics where contraceptives are readily available. Women may have an abortion for any reason up to the eighteenth week (they can seek permission from the National Board of Health and Welfare after that), and the issue is not politically controversial.

Scandinavia’s political economy also developed along somewhat different lines than America’s did. Sweden and Norway had some big imperialist adventures, but this behavior declined following the Napoleonic Wars. After that they invested in the military to ward off invaders, but they were less interested in building it up to deal with bloated colonial structures and foreign adventures. Overall Nordic countries devoted fewer resources to the military — the arena where patriarchal values tend to get emphasized and entrenched. Iceland, for example, spends the world’s lowest percentage of GDP on its military.

Industrialization is part of the story, too: it hit the Nordic countries late. In the 19th century, Scandinavia did have a rich and powerful merchant class, but the region never produced the Gilded Age industrial titans and extreme concentration of wealth that happened in America back then, and has returned today. (Income inequality and discrimination of all kinds seem to go hand-in-hand.)

In the 20th century, farmers and workers in the newly populated Nordic cities tended to join together in political coalitions, and they could mount a serious challenge to the business elites, who were relatively weak compared to those in the U.S. Like ordinary people everywhere, Scandinavians wanted a social and economic system where everyone could get a job, expect decent pay, and enjoy a strong social safety net. And that’s what they got — kind of like Roosevelt’s New Deal without all the restrictions added by New York bankers and southern conservatives. Strong trade unions developed, which tend to promote gender equality. The public sector grew, providing women with good job opportunities. Iceland today has the highest rate of union membership out of any OECD country.

Over time, Scandinavian countries became modern social democratic states where wealth is more evenly distributed, education is typically free up through university, and the social safety net allows women to comfortably work and raise a family. Scandinavian moms aren’t agonizing over work-family balance: parents can take a year or more of paid parental leave. Dads are expected to be equal partners in childrearing, and they seem to like it. (Check them out in the adorable photo book, The Swedish Dad.)

The folks up north have just figured out — and it’s not rocket science! — that everybody is better off when men and women share power and influence. They’re not perfect — there’s still some unfinished business about how women are treated in the private sector, and we’ve sensed an undertone of darker forces in pop culture phenoms like The Girl with the Dragon Tattoo. But Scandinavians have decided that investment in women is both good for social relations and a smart economic choice. Unsurprisingly, Nordic countries have strong economies and rank high on things like innovation — Sweden is actually ahead of the U.S. on that metric. (So please, no more nonsense about how inequality makes for innovation.)

The good news is that things are getting better for women in most places in the world. But the World Economic Forum report shows that the situation either remains the same or is deteriorating for women in 20 percent of countries.

In the U.S., we’ve evened the playing field in education, and women have good economic opportunities. But according to the WEF, American women lag behind men in terms of health and survival, and they hold relatively few political offices. Both facts become painfully clear every time a Tea Party politician betrays total ignorance of how the female body works. Instead of getting more women to participate in the political process, we’ve got setbacks like a new voter ID law in Texas, which could disenfranchise one-third of the state’s woman voters. That’s not going to help the U.S. become a world leader in gender equality.

Read the entire article here.

In the almost 90 years since television was invented, it has done more to reshape our world than conquering armies and pandemics. Whether you see TV as a force for good or evil (or, more recently, as a method for delivering absurd banality), you would be hard-pressed to find another human invention that has altered us so profoundly, psychologically, socially and culturally. What would its creator, John Logie Baird, think of his invention now, almost 70 years after his death?

Research shows that children as young as four years old empathize with some people but not others. It’s all about the group: which peer group you belong to versus the rest. Thus, the uphill struggle to instill tolerance in the next generation needs to begin very early in life.

Professor of Philosophy Gregory Currie tackles a thorny issue in his latest article. The question he seeks to answer is, “does great literature make us better?” It’s highly likely that a poll of most nations would show the majority of people believe that literature does in fact propel us in a forward direction, intellectually, morally, emotionally and culturally. It seems like a no-brainer. But where is the hard evidence?

No, we don’t mean war on apostasy, for which many have been hung, drawn, quartered, burned and beheaded. And no, “apostrophes” are not a new sect of fundamentalist terrorists.

The gods of Norse legend are surely turning slowly in their graves. A Reykjavik, Iceland, court recently granted a 15-year-old the right to use her given name. Her first name, “Blaer,” means “light breeze” in Icelandic, and until the ruling she was not permitted to use it under Iceland’s strict cultural preservation laws. So, before you name your next child Shoniqua or Te’o or Cruise, pause for a few moments to think how lucky you are that you live elsewhere (with apologies to our readers in Iceland).

Starting up a new business was once a demanding and complex process, often undertaken in anonymity in the long shadows between the hours of a regular job. It still is, of course. However, nowadays “the startup” has become more of an event. The tech sector has raised this to a fine art by spawning an entire self-sustaining and self-promoting industry around startups.

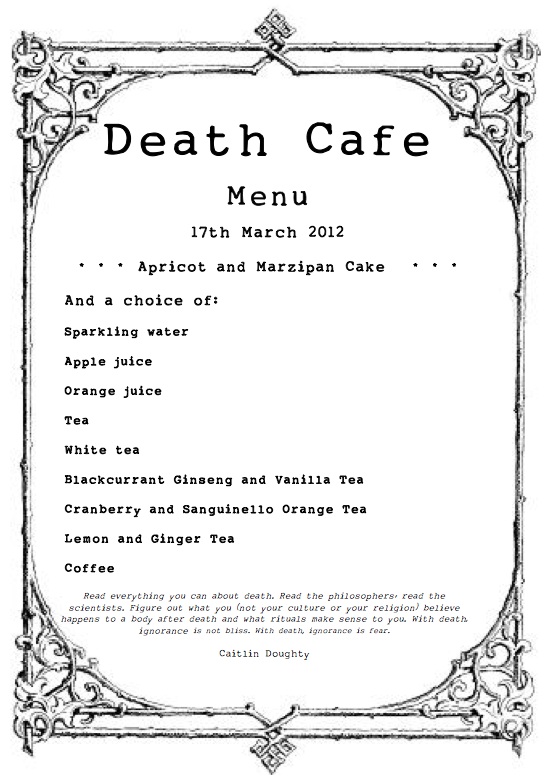

“Death Cafe” sounds like the name of a group of alternative musicians from Denmark. But it’s not. Its rather more literal definition is a coffee shop where customers go to talk about death over a cup of Earl Grey tea or a double-shot espresso. And, while they’re not displacing Starbucks (yet), death cafes are a growing trend in Europe, first inspired by the pop-up Cafe Mortels of Switzerland.

It takes no expert neuroscientist, anthropologist or evolutionary biologist to recognize that human evolution has probably stalled. After all, one only needs to observe our obsession with reality TV. Yes, evolution screeched to a halt around 1999, when reality TV hit critical mass in the mainstream public consciousness. So, what of evolution?