I’m not sure that I fully agree with the premises and conclusions that author Paul Mason outlines in his essay below, excerpted from his new book, Postcapitalism (published on 30 July 2015). However, I’d like to believe that we could all very soon thrive in a much more equitable and socially just future society. While the sharing economy has gone some way toward democratizing work effort, Mason points out other, and growing, areas of society that are marching to the beat of a different, non-capitalist drum: volunteerism, alternative currencies, cooperatives, the gig economy, self-managed spaces, social sharing, time banks. This is all good.

It will undoubtedly take generations for society to grapple with the consequences of these shifts and, more importantly, to deal with the ongoing and accelerating upheaval wrought by ubiquitous automation. Meanwhile, the vested interests (the capitalist heads of state, the oligarchs, the monopolists, the aging plutocrats and their assorted political sycophants) will most certainly fight until the very bitter end to maintain an iron grip on the invisible hand of the market.

From the Guardian:

The red flags and marching songs of Syriza during the Greek crisis, plus the expectation that the banks would be nationalised, revived briefly a 20th-century dream: the forced destruction of the market from above. For much of the 20th century this was how the left conceived the first stage of an economy beyond capitalism. The force would be applied by the working class, either at the ballot box or on the barricades. The lever would be the state. The opportunity would come through frequent episodes of economic collapse.

Instead over the past 25 years it has been the left’s project that has collapsed. The market destroyed the plan; individualism replaced collectivism and solidarity; the hugely expanded workforce of the world looks like a “proletariat”, but no longer thinks or behaves as it once did.

If you lived through all this, and disliked capitalism, it was traumatic. But in the process technology has created a new route out, which the remnants of the old left – and all other forces influenced by it – have either to embrace or die. Capitalism, it turns out, will not be abolished by forced-march techniques. It will be abolished by creating something more dynamic that exists, at first, almost unseen within the old system, but which will break through, reshaping the economy around new values and behaviours. I call this postcapitalism.

As with the end of feudalism 500 years ago, capitalism’s replacement by postcapitalism will be accelerated by external shocks and shaped by the emergence of a new kind of human being. And it has started.

Postcapitalism is possible because of three major changes information technology has brought about in the past 25 years. First, it has reduced the need for work, blurred the edges between work and free time and loosened the relationship between work and wages. The coming wave of automation, currently stalled because our social infrastructure cannot bear the consequences, will hugely diminish the amount of work needed – not just to subsist but to provide a decent life for all.

Second, information is corroding the market’s ability to form prices correctly. That is because markets are based on scarcity while information is abundant. The system’s defence mechanism is to form monopolies – the giant tech companies – on a scale not seen in the past 200 years, yet they cannot last. By building business models and share valuations based on the capture and privatisation of all socially produced information, such firms are constructing a fragile corporate edifice at odds with the most basic need of humanity, which is to use ideas freely.

Third, we’re seeing the spontaneous rise of collaborative production: goods, services and organisations are appearing that no longer respond to the dictates of the market and the managerial hierarchy. The biggest information product in the world – Wikipedia – is made by volunteers for free, abolishing the encyclopedia business and depriving the advertising industry of an estimated $3bn a year in revenue.

Almost unnoticed, in the niches and hollows of the market system, whole swaths of economic life are beginning to move to a different rhythm. Parallel currencies, time banks, cooperatives and self-managed spaces have proliferated, barely noticed by the economics profession, and often as a direct result of the shattering of the old structures in the post-2008 crisis.

You only find this new economy if you look hard for it. In Greece, when a grassroots NGO mapped the country’s food co-ops, alternative producers, parallel currencies and local exchange systems they found more than 70 substantive projects and hundreds of smaller initiatives ranging from squats to carpools to free kindergartens. To mainstream economics such things seem barely to qualify as economic activity – but that’s the point. They exist because they trade, however haltingly and inefficiently, in the currency of postcapitalism: free time, networked activity and free stuff. It seems a meagre and unofficial and even dangerous thing from which to craft an entire alternative to a global system, but so did money and credit in the age of Edward III.

New forms of ownership, new forms of lending, new legal contracts: a whole business subculture has emerged over the past 10 years, which the media has dubbed the “sharing economy”. Buzzwords such as the “commons” and “peer-production” are thrown around, but few have bothered to ask what this development means for capitalism itself.

I believe it offers an escape route – but only if these micro-level projects are nurtured, promoted and protected by a fundamental change in what governments do. And this must be driven by a change in our thinking – about technology, ownership and work. So that, when we create the elements of the new system, we can say to ourselves, and to others: “This is no longer simply my survival mechanism, my bolt hole from the neoliberal world; this is a new way of living in the process of formation.”

…

The power of imagination will become critical. In an information society, no thought, debate or dream is wasted – whether conceived in a tent camp, prison cell or the table football space of a startup company.

As with virtual manufacturing, in the transition to postcapitalism the work done at the design stage can reduce mistakes in the implementation stage. And the design of the postcapitalist world, as with software, can be modular. Different people can work on it in different places, at different speeds, with relative autonomy from each other. If I could summon one thing into existence for free it would be a global institution that modelled capitalism correctly: an open source model of the whole economy; official, grey and black. Every experiment run through it would enrich it; it would be open source and with as many datapoints as the most complex climate models.

The main contradiction today is between the possibility of free, abundant goods and information; and a system of monopolies, banks and governments trying to keep things private, scarce and commercial. Everything comes down to the struggle between the network and the hierarchy: between old forms of society moulded around capitalism and new forms of society that prefigure what comes next.

…

Is it utopian to believe we’re on the verge of an evolution beyond capitalism? We live in a world in which gay men and women can marry, and in which contraception has, within the space of 50 years, made the average working-class woman freer than the craziest libertine of the Bloomsbury era. Why do we, then, find it so hard to imagine economic freedom?

It is the elites – cut off in their dark-limo world – whose project looks as forlorn as that of the millennial sects of the 19th century. The democracy of riot squads, corrupt politicians, magnate-controlled newspapers and the surveillance state looks as phoney and fragile as East Germany did 30 years ago.

All readings of human history have to allow for the possibility of a negative outcome. It haunts us in the zombie movie, the disaster movie, in the post-apocalyptic wasteland of films such as The Road or Elysium. But why should we not form a picture of the ideal life, built out of abundant information, non-hierarchical work and the dissociation of work from wages?

Millions of people are beginning to realise they have been sold a dream at odds with what reality can deliver. Their response is anger – and retreat towards national forms of capitalism that can only tear the world apart. Watching these emerge, from the pro-Grexit left factions in Syriza to the Front National and the isolationism of the American right, has been like watching the nightmares we had during the Lehman Brothers crisis come true.

We need more than just a bunch of utopian dreams and small-scale horizontal projects. We need a project based on reason, evidence and testable designs, that cuts with the grain of history and is sustainable by the planet. And we need to get on with it.

Read the excerpt here.

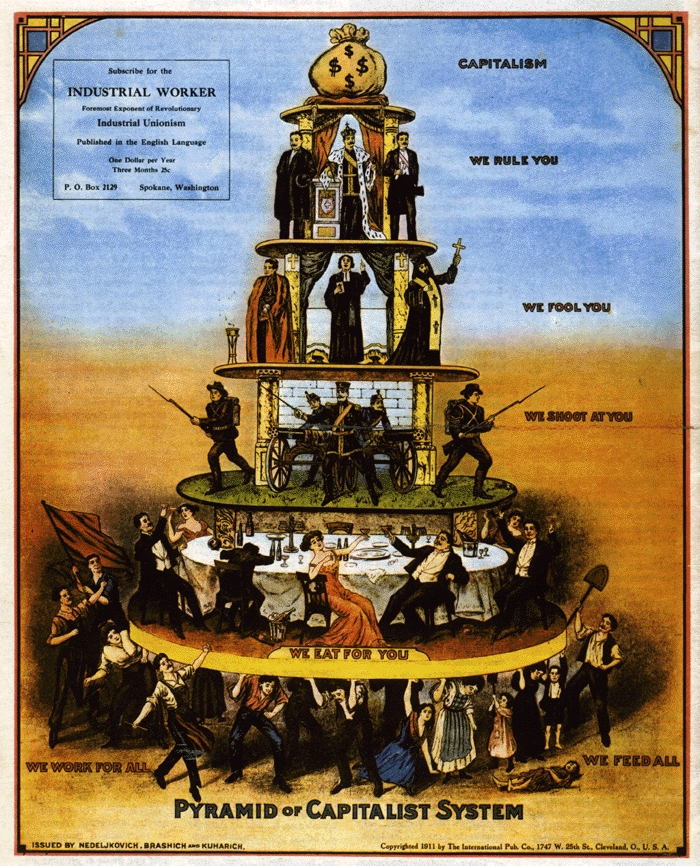

Image: The Industrial Workers of the World poster “Pyramid of Capitalist System” (1911). Courtesy of Wikipedia. Public Domain.

Advances in quantum physics and in the associated realm of quantum information promise to revolutionize computing. Imagine a computer several trillions of times faster than present-day supercomputers; that’s where we are heading.

We excerpt an interview with big data pioneer and computer scientist, Alex Pentland, via the Edge. Pentland is a leading thinker in computational social science and currently directs the Human Dynamics Laboratory at MIT.