One of the most fundamental tenets of our macroscopic world is the notion that an effect has a cause. Throw a pebble (cause) into a still pond and the ripples (effect) will be visible for all to see. Down at the microscopic level, physicists have determined through their mathematical convolutions that there is no such thing — there is nothing precluding the laws of physics running in reverse. Yet, we never witness ripples in a pond diminishing and ejecting a pebble, which then finds its way back to a catcher.

Of course, this quandary has kept many a philosopher’s pencil well sharpened while physicists continue to scratch their heads. So, is cause and effect merely a coincidental illusion? Or, does our physics only operate in one direction, determined by a yet-to-be-discovered fundamental law?

Philosopher Mathias Frisch, author of Causal Reasoning in Physics, offers a great summary of current thinking, but no fundamental breakthrough.

From Aeon:

Do early childhood vaccinations cause autism, as the American model Jenny McCarthy maintains? Are human carbon emissions at the root of global warming? Come to that, if I flick this switch, will it make the light on the porch come on? Presumably I don’t need to persuade you that these would be incredibly useful things to know.

Since anthropogenic greenhouse gas emissions do cause climate change, cutting our emissions would make a difference to future warming. By contrast, autism cannot be prevented by leaving children unvaccinated. Now, there’s a subtlety here. For our judgments to be much use to us, we have to distinguish between causal relations and mere correlations. From 1999 to 2009, the number of people in the US who fell into a swimming pool and drowned varied with the number of films in which Nicolas Cage appeared – but it seems unlikely that we could reduce the number of pool drownings by keeping Cage off the screen, desirable as the remedy might be for other reasons.

In short, a working knowledge of the way in which causes and effects relate to one another seems indispensable to our ability to make our way in the world. Yet there is a long and venerable tradition in philosophy, dating back at least to David Hume in the 18th century, that finds the notions of causality to be dubious. And that might be putting it kindly.

Hume argued that when we seek causal relations, we can never discover the real power; the, as it were, metaphysical glue that binds events together. All we are able to see are regularities – the ‘constant conjunction’ of certain sorts of observation. He concluded from this that any talk of causal powers is illegitimate. Which is not to say that he was ignorant of the central importance of causal reasoning; indeed, he said that it was only by means of such inferences that we can ‘go beyond the evidence of our memory and senses’. Causal reasoning was somehow both indispensable and illegitimate. We appear to have a dilemma.

Hume’s remedy for such metaphysical quandaries was arguably quite sensible, as far as it went: have a good meal, play backgammon with friends, and try to put it out of your mind. But in the late 19th and 20th centuries, his causal anxieties were reinforced by another problem, arguably harder to ignore. According to this new line of thought, causal notions seemed peculiarly out of place in our most fundamental science – physics.

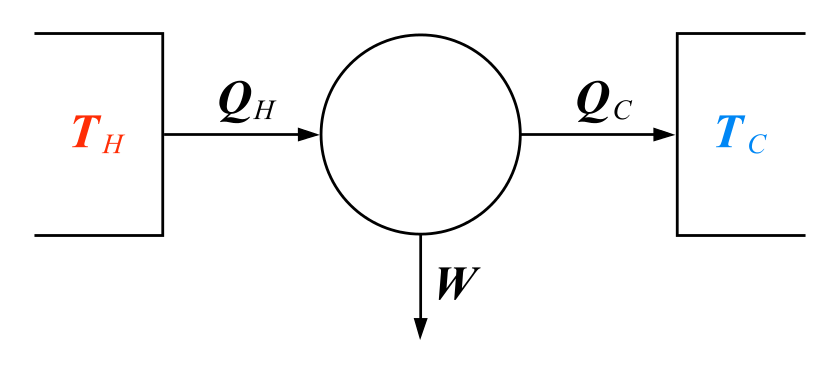

There were two reasons for this. First, causes seemed too vague for a mathematically precise science. If you can’t observe them, how can you measure them? If you can’t measure them, how can you put them in your equations? Second, causality has a definite direction in time: causes have to happen before their effects. Yet the basic laws of physics (as distinct from such higher-level statistical generalisations as the laws of thermodynamics) appear to be time-symmetric: if a certain process is allowed under the basic laws of physics, a video of the same process played backwards will also depict a process that is allowed by the laws.

The 20th-century English philosopher Bertrand Russell concluded from these considerations that, since cause and effect play no fundamental role in physics, they should be removed from the philosophical vocabulary altogether. ‘The law of causality,’ he said with a flourish, ‘like much that passes muster among philosophers, is a relic of a bygone age, surviving, like the monarchy, only because it is erroneously supposed not to do harm.’

Neo-Russellians in the 21st century express their rejection of causes with no less rhetorical vigour. The philosopher of science John Earman of the University of Pittsburgh maintains that the wooliness of causal notions makes them inappropriate for physics: ‘A putative fundamental law of physics must be stated as a mathematical relation without the use of escape clauses or words that require a PhD in philosophy to apply (and two other PhDs to referee the application, and a third referee to break the tie of the inevitable disagreement of the first two).’

This is all very puzzling. Is it OK to think in terms of causes or not? If so, why, given the apparent hostility to causes in the underlying laws? And if not, why does it seem to work so well?

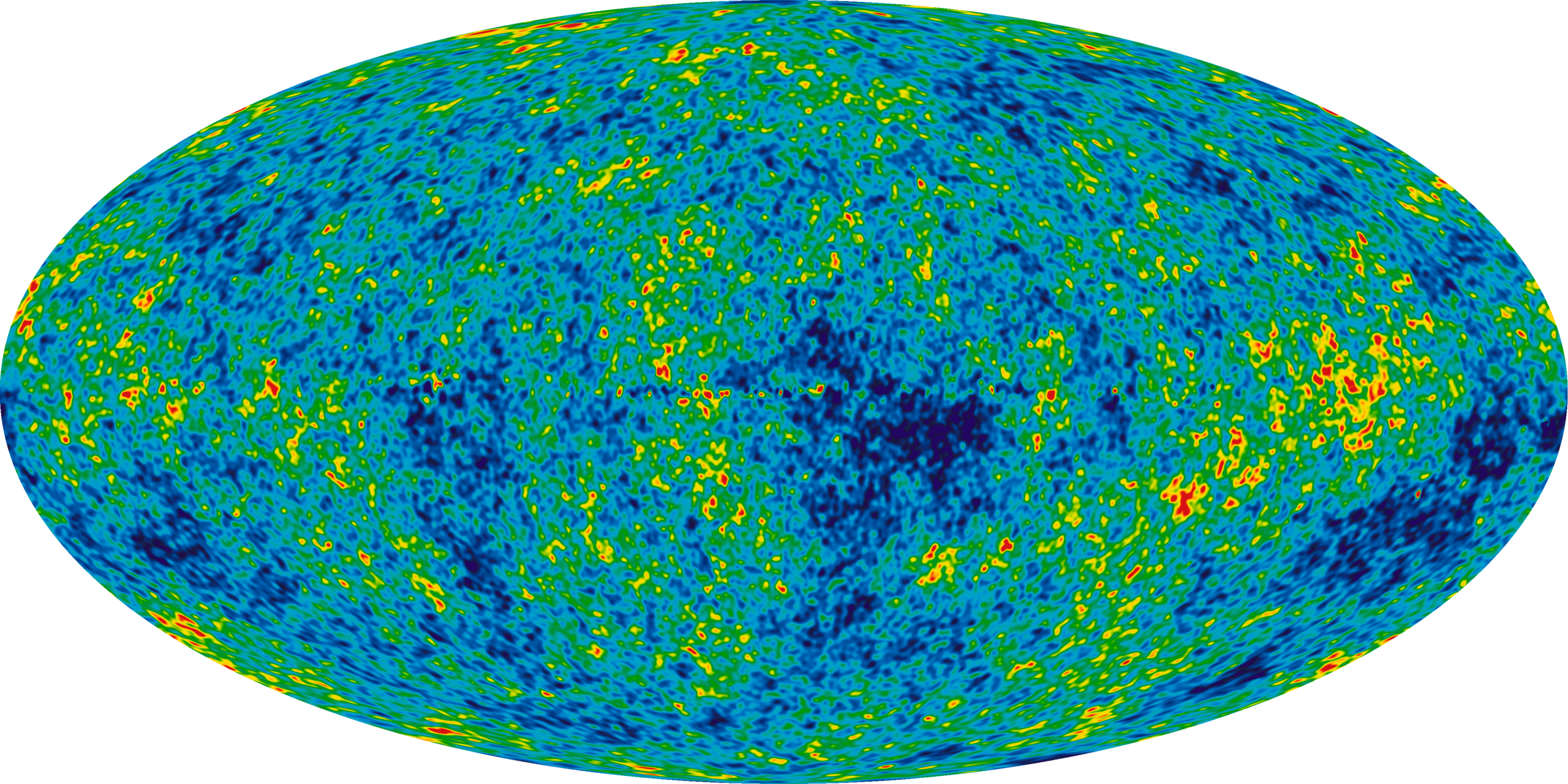

A clearer look at the physics might help us to find our way. Even though (most of) the basic laws are symmetrical in time, there are many arguably non-thermodynamic physical phenomena that can happen only one way. Imagine a stone thrown into a still pond: after the stone breaks the surface, waves spread concentrically from the point of impact. A common enough sight.

Now, imagine a video clip of the spreading waves played backwards. What we would see are concentrically converging waves. For some reason this second process, which is the time-reverse of the first, does not seem to occur in nature. The process of waves spreading from a source looks irreversible. And yet the underlying physical law describing the behaviour of waves – the wave equation – is as time-symmetric as any law in physics. It allows for both diverging and converging waves. So, given that the physical laws equally allow phenomena of both types, why do we frequently observe organised waves diverging from a source but never coherently converging waves?
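The time symmetry of the wave equation can be checked directly. What follows is a minimal sketch, assuming a scalar disturbance u travelling at a constant speed c; the symbols u, c, f and g are introduced here for illustration and do not come from the article. Because time enters only through a second derivative, replacing t with -t leaves the equation unchanged, and both outgoing and incoming spherical waves are solutions.

```latex
% Scalar wave equation for a disturbance u(\mathbf{x}, t) with constant speed c
\frac{\partial^2 u}{\partial t^2} = c^2 \nabla^2 u

% Time reversal: define \tilde{u}(\mathbf{x}, t) = u(\mathbf{x}, -t).
% Two sign flips from the chain rule cancel, so \tilde{u} obeys the same equation.
\frac{\partial^2 \tilde{u}}{\partial t^2}(\mathbf{x}, t)
  = \frac{\partial^2 u}{\partial t^2}(\mathbf{x}, -t)
  = c^2 \nabla^2 u(\mathbf{x}, -t)
  = c^2 \nabla^2 \tilde{u}(\mathbf{x}, t)

% Spherically symmetric solutions for r > 0:
% a diverging wave and its time-reverse, a converging wave
u_{\text{out}}(r, t) = \frac{f(r - ct)}{r},
\qquad
u_{\text{in}}(r, t) = \frac{g(r + ct)}{r}
```

Nothing in the equation itself prefers the diverging solution over the converging one, which is why the observed asymmetry needs a separate explanation.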

Physicists and philosophers disagree on the correct answer to this question – which might be fine if it applied only to stones in ponds. But the problem also crops up with electromagnetic waves and the emission of light or radio waves: anywhere, in fact, that we find radiating waves. What to say about it?

On the one hand, many physicists (and some philosophers) invoke a causal principle to explain the asymmetry. Consider an antenna transmitting a radio signal. Since the source causes the signal, and since causes precede their effects, the radio waves diverge from the antenna after it is switched on simply because they are the repercussions of an initial disturbance, namely the switching on of the antenna. Imagine the time-reverse process: a radio wave steadily collapses into an antenna before the latter has been turned on. On the face of it, this conflicts with the idea of causality, because the wave would be present before its cause (the antenna) had done anything. David Griffiths, Emeritus Professor of Physics at Reed College in Oregon and the author of a widely used textbook on classical electrodynamics, favours this explanation, going so far as to call a time-asymmetric principle of causality ‘the most sacred tenet in all of physics’.

On the other hand, some physicists (and many philosophers) reject appeals to causal notions and maintain that the asymmetry ought to be explained statistically. The reason why we find coherently diverging waves but never coherently converging ones, they maintain, is not that wave sources cause waves, but that a converging wave would require the co-ordinated behaviour of ‘wavelets’ coming in from multiple different directions of space – delicately co-ordinated behaviour so improbable that it would strike us as nearly miraculous.

It so happens that this wave controversy has quite a distinguished history. In 1909, a few years before Russell’s pointed criticism of the notion of cause, Albert Einstein took part in a published debate concerning the radiation asymmetry. His opponent was the Swiss physicist Walther Ritz, a name you might not recognise.

It is in fact rather tragic that Ritz did not make larger waves in his own career, because his early reputation surpassed Einstein’s. The physicist Hermann Minkowski, who taught both Ritz and Einstein in Zurich, called Einstein a ‘lazy dog’ but had high praise for Ritz. When the University of Zurich was looking to appoint its first professor of theoretical physics in 1909, Ritz was the top candidate for the position. According to one member of the hiring committee, he possessed ‘an exceptional talent, bordering on genius’. But he suffered from tuberculosis, and so, due to his failing health, he was passed over for the position, which went to Einstein instead. Ritz died that very year at age 31.

Months before his death, however, Ritz published a joint letter with Einstein summarising their disagreement. While Einstein thought that the irreversibility of radiation processes could be explained probabilistically, Ritz proposed what amounted to a causal explanation. He maintained that the reason for the asymmetry is that an elementary source of radiation has an influence on other sources in the future and not in the past.

This joint letter is something of a classic text, widely cited in the literature. What is less well-known is that, in the very same year, Einstein demonstrated a striking reversibility of his own. In a second published letter, he appears to take a position very close to Ritz’s – the very view he had dismissed just months earlier. According to the wave theory of light, Einstein now asserted, a wave source ‘produces a spherical wave that propagates outward. The inverse process does not exist as elementary process’. The only way in which converging waves can be produced, Einstein claimed, was by combining a very large number of coherently operating sources. He appears to have changed his mind.

Given Einstein’s titanic reputation, you might think that such a momentous shift would occasion a few ripples in the history of science. But I know of only one significant reference to his later statement: a letter from the philosopher Karl Popper to the journal Nature in 1956. In this letter, Popper describes the wave asymmetry in terms very similar to Einstein’s. And he also makes one particularly interesting remark, one that might help us to unpick the riddle. Coherently converging waves, Popper insisted, ‘would demand a vast number of distant coherent generators of waves the co-ordination of which, to be explicable, would have to be shown as originating from the centre’ (my italics).

This is, in fact, a particular instance of a much broader phenomenon. Consider two events that are spatially distant yet correlated with one another. If they are not related as cause and effect, they tend to be joint effects of a common cause. If, for example, two lamps in a room go out suddenly, it is unlikely that both bulbs just happened to burn out simultaneously. So we look for a common cause – perhaps a circuit breaker that tripped.

Common-cause inferences are so pervasive that it is difficult to imagine what we could know about the world beyond our immediate surroundings without them. Hume was right: judgments about causality are absolutely essential in going ‘beyond the evidence of the senses’. In his book The Direction of Time (1956), the philosopher Hans Reichenbach formulated a principle underlying such inferences: ‘If an improbable coincidence has occurred, there must exist a common cause.’ To the extent that we are bound to apply Reichenbach’s rule, we are all like the hard-boiled detective who doesn’t believe in coincidences.
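The two-lamp example can be made concrete with a small simulation. This is only an illustrative sketch: the function names (`simulate`, `joint_vs_product`) and the probabilities are assumptions chosen for the demonstration, not figures from the article. It models a breaker that cuts both lamps at once, plus rare independent bulb failures, and compares the chance that both lamps are out with what independence would predict.

```python
import random


def simulate(n_trials=100_000, p_breaker=0.05, p_bulb=0.01, seed=0):
    """Return (breaker_tripped, lamp_a_out, lamp_b_out) for each trial.

    The probabilities are illustrative assumptions, not measured values.
    """
    rng = random.Random(seed)
    records = []
    for _ in range(n_trials):
        breaker = rng.random() < p_breaker          # the common cause
        a_out = breaker or rng.random() < p_bulb    # lamp A dark: breaker or own bulb
        b_out = breaker or rng.random() < p_bulb    # lamp B dark: breaker or own bulb
        records.append((breaker, a_out, b_out))
    return records


def joint_vs_product(records):
    """Return (P(A and B), P(A) * P(B)) estimated from the records."""
    n = len(records)
    p_a = sum(a for _, a, _ in records) / n
    p_b = sum(b for _, _, b in records) / n
    p_ab = sum(a and b for _, a, b in records) / n
    return p_ab, p_a * p_b


if __name__ == "__main__":
    records = simulate()
    print("all trials:      ", joint_vs_product(records))
    # Condition on the common cause being absent: the correlation should vanish.
    untripped = [r for r in records if not r[0]]
    print("breaker not trip:", joint_vs_product(untripped))
```

Across all trials the lamps go dark together far more often than independence would allow. Once the trials are restricted to those where the breaker did not trip, the joint probability falls back to roughly the product of the individual ones. That "screening off" by the common cause is the statistical pattern the detective's rule relies on.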

Read the entire article here.

Advances in quantum physics and in the associated realm of quantum information promise to revolutionize computing. Imagine a computer several trillion times faster than present-day supercomputers: that’s where we are heading.

According to Star Trek fictional history, warp engines were invented in 2063. That gives us just over 50 years. While very unlikely based on our current technological prowess and general lack of understanding of the cosmos, warp engines are perhaps coming just a little closer to being realized. But, please, no photon torpedoes!

[div class=attrib]From the Physics arXiv for Technology Review:[end-div]

[div class=attrib]From the New Scientist:[end-div]

[div class=attrib]From the Economist:[end-div]

Editor’s Note: We are republishing this article by Paul Dirac from the May 1963 issue of Scientific American, as it might be of interest to listeners to the June 24, 2010, and June 25, 2010 Science Talk podcasts, featuring award-winning writer and physicist Graham Farmelo discussing The Strangest Man, his biography of the Nobel Prize-winning British theoretical physicist.

We have, then, the development from the three-dimensional picture of the world to the four-dimensional picture. The reader will probably not be happy with this situation, because the world still appears three-dimensional to his consciousness. How can one bring this appearance into the four-dimensional picture that Einstein requires the physicist to have?

Graham Fleming sits down at an L-shaped lab bench, occupying a footprint about the size of two parking spaces. Alongside him, a couple of off-the-shelf lasers spit out pulses of light just millionths of a billionth of a second long. After snaking through a jagged path of mirrors and lenses, these minuscule flashes disappear into a smoky black box containing proteins from green sulfur bacteria, which ordinarily obtain their energy and nourishment from the sun. Inside the black box, optics manufactured to billionths-of-a-meter precision detect something extraordinary: Within the bacterial proteins, dancing electrons make seemingly impossible leaps and appear to inhabit multiple places at once.

Einstein said there would be days like this.