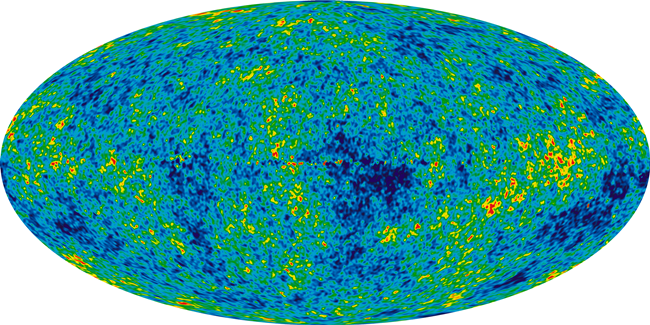

Cosmologists and particle physicists have over the last decade or so proposed the existence of Dark Matter. It’s so called because it cannot be seen or sensed directly; it is inferred from its gravitational effects on visible matter. Together with its theoretical cousin, Dark Energy, it is hypothesized to make up most of the universe. In fact, the regular star-stuff (matter and energy) of which we, our planet, our solar system and the visible universe are made accounts for only a paltry 4 percent.

Dark Matter and Dark Energy were originally proposed to account for discrepancies between calculations of the mass of large objects, such as galaxies and galaxy clusters, and calculations derived from the mass of smaller visible objects such as stars, nebulae and interstellar gas.

The problem with Dark Matter is that it remains elusive and, for the most part, a theoretical construct. And now a new group of theories suggests that the dark stuff may in fact be an illusion.

[div class=attrib]From National Geographic:[end-div]

The mysterious substance known as dark matter may actually be an illusion created by gravitational interactions between short-lived particles of matter and antimatter, a new study says.

Dark matter is thought to be an invisible substance that makes up almost a quarter of the mass in the universe. The concept was first proposed in 1933 to explain why the outer galaxies in galaxy clusters orbit faster than they should, based on the galaxies’ visible mass.

At the observed speeds, the outer galaxies should be flung out into space, since the clusters don’t appear to have enough mass to keep the galaxies at their edges gravitationally bound.

So physicists proposed that the galaxies are surrounded by halos of invisible matter. This dark matter provides the extra mass, which in turn creates gravitational fields strong enough to hold the clusters together.

In the new study, physicist Dragan Hajdukovic at the European Organization for Nuclear Research (CERN) in Switzerland proposes an alternative explanation, based on something he calls the “gravitational polarization of the quantum vacuum.”

Empty Space Filled With “Virtual” Particles

The quantum vacuum is the name physicists give to what we see as empty space.

According to quantum physics, empty space is not actually barren but is a boiling sea of so-called virtual particles and antiparticles constantly popping in and out of existence.

Antimatter particles are mirror opposites of normal matter particles. For example, an antiproton is a negatively charged version of the positively charged proton, one of the basic constituents of the atom.

When matter and antimatter collide, they annihilate in a flash of energy. The virtual particles spontaneously created in the quantum vacuum appear and then disappear so quickly that they can’t be directly observed.

In his new mathematical model, Hajdukovic investigates what would happen if virtual matter and virtual antimatter were not only electrical opposites but also gravitational opposites—an idea some physicists previously proposed.

“Mainstream physics assumes that there is only one gravitational charge, while I have assumed that there are two gravitational charges,” Hajdukovic said.

According to his idea, outlined in the current issue of the journal Astrophysics and Space Science, matter has a positive gravitational charge and antimatter a negative one.

That would mean matter and antimatter are gravitationally repulsive, so that an object made of antimatter would “fall up” in the gravitational field of Earth, which is composed of normal matter.

Particles and antiparticles could still collide, however, since gravitational repulsion is much weaker than electrical attraction.

How Galaxies Could Get Gravity Boost

While the idea of particle antigravity might seem exotic, Hajdukovic says his theory is based on well-established tenets of quantum physics.

For example, it’s long been known that particles can team up to create a so-called electric dipole, with positively charged particles at one end and negatively charged particles at the other.

According to theory, there are countless electric dipoles created by virtual particles in any given volume of the quantum vacuum.

All of these electric dipoles are randomly oriented—like countless compass needles pointing every which way. But if the dipoles form in the presence of an existing electric field, they immediately align along the same direction as the field.

According to quantum field theory, this sudden snapping to order of electric dipoles, called polarization, generates a secondary electric field that combines with and strengthens the first field.

Hajdukovic suggests that a similar phenomenon happens with gravity. If virtual matter and antimatter particles have different gravitational charges, then randomly oriented gravitational dipoles would be generated in space.

[div class=attrib]More from theSource here.[end-div]

[div class=attrib]From Scientific American:[end-div]

[div class=attrib]From Slate:[end-div]

[div class=attrib]From Frank Jacobs for Strange Maps:[end-div]

Why do some words take hold in the public consciousness and persist through generations while others fall by the wayside after one season?

A poem by Billy Collins ushers in another week. Collins served two terms as the U.S. Poet Laureate, from 2001 to 2003. He is known for poetry imbued with left-field humor and deep insight.

Jonathan Ive, the design brains behind such iconic contraptions as the iMac, iPod and iPhone, discusses his notion of “undesign”. Ive has over 300 patents and is often cited as one of the most influential industrial designers of the last 20 years. Perhaps it’s purely coincidental that Ive’s understated “undesign” comes from his unassuming Britishness.

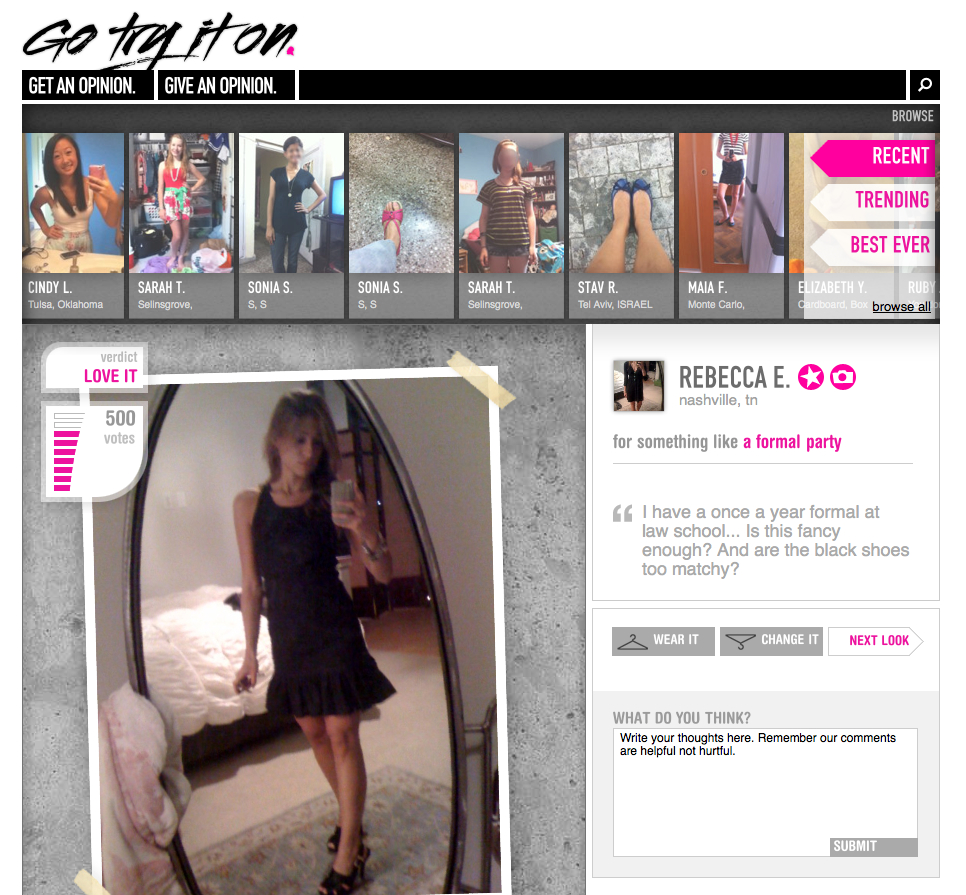

Alexander Edmonds has a thoroughly engrossing piece on the pursuit of “beauty” and the culture of vanity as commodity. And the role of plastic surgeon as both enabler and arbiter comes under a very necessary microscope.

[div class=attrib]From Neuroanthropology:[end-div]

Scents are deeply evocative. A faint whiff of a distinct and rare scent can bring back a long-forgotten memory and make it vivid, and do so like no other sense. Smells can make our stomachs churn and make us swoon.

A thoughtful question posed below by philosopher Eric Schwitzgebel over at The Splintered Mind. Gazing in a mirror or reflection is something we all do on a frequent basis. In fact, is there any human activity that trumps this in frequency? Yet, have we ever given thought to how and why we perceive ourselves in space differently from, say, a car in a rearview mirror? The car in the rearview mirror is quite clearly approaching us from behind as we drive. However, where exactly is our reflection when we cast our eyes at the mirror in the bathroom?

Skeptic

Accumulating likes, collecting followers and quantifying one’s friends online is serious business. If you don’t have more than a couple of hundred professional connections in your LinkedIn profile or at least twice that number of “friends” through Facebook or ten times that volume of Twittering followers, you’re most likely to be a corporate wallflower, a social has-been.

[div class=attrib]From TreeHugger:[end-div]

That very quaint form of communication, the printed postcard, reserved for independent children to their clingy parents and boastful travelers to their (not) distant (enough) family members, may soon become as arcane as the LP or paper-based map. Until the late 1990s there were some rather common sights associated with the postcard: the tourist lounging in a cafe musing with great difficulty over the two or three pithy lines he would write from Paris; the traveler asking for a postcard stamp in broken German; the remaining three from a pack of six unwritten postcards of the Vatican now used as bookmarks; the oversaturated colors of the sunset.

A poignant, poetic view of our relationships, increasingly mediated and recalled for us through technology. Conor O’Callaghan’s poem ushers in this week’s collection of articles at theDiagonal focused on technology.

Where evaluating artistic style was once the exclusive domain of seasoned art historians and art critics with many decades of experience, a computer armed with sophisticated image processing software is making a stir in art circles.

In 2007 UPS made the headlines by declaring left-hand turns for its army of delivery truck drivers undesirable. Of course, we left-handers have always known that our left or “

[div class=attrib]From Rolling Stone:[end-div]
