Associate professor of philosophy Firmin DeBrabander argues that guns have no place in a civil society. Guns hinder free speech and free assembly for those at either end of the barrel. Guns fragment our society and undermine both the sense and the mechanisms of community. He is right.

[div class=attrib]From the New York Times:[end-div]

The night of the shootings at Sandy Hook Elementary School in Newtown, Conn., I was in the car with my wife and children, working out details for our eldest son’s 12th birthday the following Sunday — convening a group of friends at a showing of the film “The Hobbit.” The memory of the Aurora movie theatre massacre was fresh in his mind, so he was concerned that it not be a late night showing. At that moment, like so many families, my wife and I were weighing whether to turn on the radio and expose our children to coverage of the school shootings in Connecticut. We did. The car was silent in the face of the flood of gory details. When the story was over, there was a long thoughtful pause in the back of the car. Then my eldest son asked if he could be homeschooled.

That incident brought home to me what I have always suspected, but found difficult to articulate: an armed society — especially as we prosecute it at the moment in this country — is the opposite of a civil society.

The Newtown shootings occurred at a peculiar time in gun rights history in this nation. On one hand, since the mid 1970s, fewer households each year on average have had a gun. Gun control advocates should be cheered by that news, but it is eclipsed by a flurry of contrary developments. As has been well publicized, gun sales have steadily risen over the past few years, and spiked with each of Obama’s election victories.

Furthermore, of the weapons that proliferate amongst the armed public, an increasing number are high caliber weapons (the weapon of choice in the goriest shootings in recent years). Then there is the legal landscape, which looks bleak for the gun control crowd.

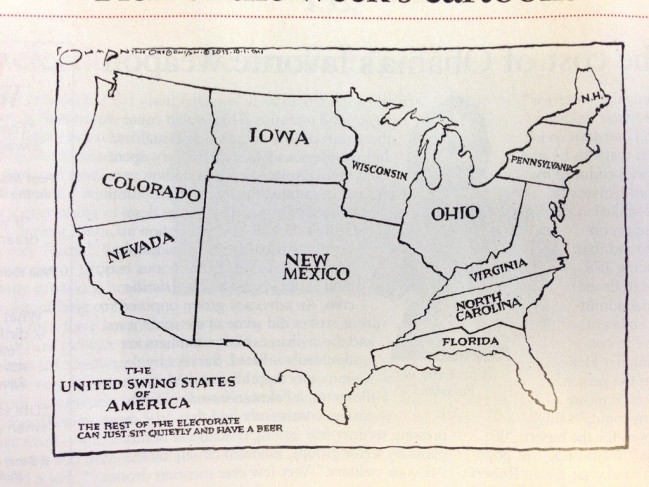

Every state except for Illinois has a law allowing the carrying of concealed weapons — and just last week, a federal court struck down Illinois’ ban. States are now lining up to allow guns on college campuses. In September, Colorado joined four other states in such a move, and statehouses across the country are preparing similar legislation. And of course, there was Oklahoma’s ominous Open Carry Law approved by voters this election day — the fifteenth of its kind, in fact — which, as the name suggests, allows those with a special permit to carry weapons in the open, with a holster on their hip.

Individual gun ownership — and gun violence — has long been a distinctive feature of American society, setting us apart from the other industrialized democracies of the world. Recent legislative developments, however, are progressively bringing guns out of the private domain, with the ultimate aim of enshrining them in public life. Indeed, the N.R.A. strives for a day when the open carry of powerful weapons might be normal, a fixture even, of any visit to the coffee shop or grocery store — or classroom.

As N.R.A. president Wayne LaPierre expressed in a recent statement on the organization’s Web site, more guns equal more safety, by their account. A favorite gun rights saying is “an armed society is a polite society.” If we allow ever more people to be armed, at any time, in any place, this will provide a powerful deterrent to potential criminals. Or if more citizens were armed — like principals and teachers in the classroom, for example — they could halt senseless shootings ahead of time, or at least early on, and save society a lot of heartache and bloodshed.

As ever more people are armed in public, however — even brandishing weapons on the street — this is no longer recognizable as a civil society. Freedom is vanished at that point.

And yet, gun rights advocates famously maintain that individual gun ownership, even of high caliber weapons, is the defining mark of our freedom as such, and the ultimate guarantee of our enduring liberty. Deeper reflection on their argument exposes basic fallacies.

In her book “The Human Condition,” the philosopher Hannah Arendt states that “violence is mute.” According to Arendt, speech dominates and distinguishes the polis, the highest form of human association, which is devoted to the freedom and equality of its component members. Violence — and the threat of it — is a pre-political manner of communication and control, characteristic of undemocratic organizations and hierarchical relationships. For the ancient Athenians who practiced an incipient, albeit limited form of democracy (one that we surely aim to surpass), violence was characteristic of the master-slave relationship, not that of free citizens.

Arendt offers two points that are salient to our thinking about guns: for one, they insert a hierarchy of some kind, but fundamental nonetheless, and thereby undermine equality. But furthermore, guns pose a monumental challenge to freedom, and in particular, the liberty that is the hallmark of any democracy worthy of the name — that is, freedom of speech. Guns do communicate, after all, but in a way that is contrary to free speech aspirations: for, guns chasten speech.

This becomes clear if only you pry a little more deeply into the N.R.A.’s logic behind an armed society. An armed society is polite, by their thinking, precisely because guns would compel everyone to tamp down eccentric behavior, and refrain from actions that might seem threatening. The suggestion is that guns liberally interspersed throughout society would cause us all to walk gingerly — not make any sudden, unexpected moves — and watch what we say, how we act, whom we might offend.

As our Constitution provides, however, liberty entails precisely the freedom to be reckless, within limits, also the freedom to insult and offend as the case may be. The Supreme Court has repeatedly upheld our right to experiment in offensive language and ideas, and in some cases, offensive action and speech. Such experimentation is inherent to our freedom as such. But guns by their nature do not mix with this experiment — they don’t mix with taking offense. They are combustible ingredients in assembly and speech.

I often think of the armed protestor who showed up to one of the famously raucous town hall hearings on Obamacare in the summer of 2009. The media was very worked up over this man, who bore a sign that invoked a famous quote of Thomas Jefferson, accusing the president of tyranny. But no one engaged him at the protest; no one dared approach him even, for discussion or debate — though this was a town hall meeting, intended for just such purposes. Such is the effect of guns on speech — and assembly. Like it or not, they transform the bearer, and end the conversation in some fundamental way. They announce that the conversation is not completely unbounded, unfettered and free; there is or can be a limit to negotiation and debate — definitively.

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image courtesy of Wikipedia.[end-div]

Brooke Allen reviews a handy new tome for those who live in comfort and safety but who perceive threats large and small from all crevices and all angles. Paradoxically, most people in the West are safer than any previous generation, and yet they imagine existential threats ranging from viral pandemics to hemispheric mega-storms.

One of our favorite thinkers (and authors) here at theDiagonal is Nassim Taleb. His new work, Antifragile, expands on ideas that he first described in his bestseller Black Swan.

Starting up a new business was once a demanding and complex process, often undertaken in anonymity in the long shadows between the hours of a regular job. It still is, of course. Nowadays, however, “the startup” has become more of an event. The tech sector has raised this to a fine art by spawning an entire self-sustaining and self-promoting industry around startups.

Expanding on the work of Immanuel Kant in the late 18th century, German philosopher Georg Wilhelm Friedrich Hegel laid the foundations for what would later become two opposing political systems, socialism and free market capitalism. His comprehensive framework of Absolute Idealism influenced numerous philosophers and thinkers of all shades including Karl Marx and Ralph Waldo Emerson. While many thinkers later rounded on Hegel’s world view as nothing but a thinly veiled attempt to justify totalitarianism in his own nation, there is no argument as to the profound influence of his works on later thinkers from both the left and the right wings of the political spectrum.

British voters may recall Screaming Lord Sutch, 3rd Earl of Harrow, of the Official Monster Raving Loony Party, who ran in over 40 parliamentary elections during the 1980s and '90s. He never won, but he garnered a respectable number of votes and many fans (he was also a musician).

[div class=attrib]From the Wall Street Journal:[end-div]

Humans have a peculiar habit of anthropomorphizing anything that moves, and for that matter, most objects that remain static as well. So, it is not surprising that evil is often personified and even stereotyped; it is said that true evil even has a home somewhere below where you currently stand.

There is great irony in knowing that we humans would not be as civilized were it not for our passion for lethal projectile weapons.

A yearlong survey of moodiness shows that the so-called Monday Blues may be more a figment of the imagination than fact.
