Physics works very well in explaining our world, yet it is also broken — it cannot, at the moment, reconcile our views of the very small (quantum theory) with those of the very large (relativity theory).

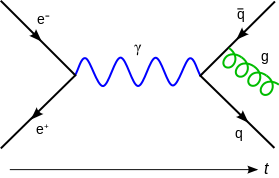

So although the probabilistic underpinnings of quantum theory have done wonders in allowing physicists to construct the Standard Model, gaps remain.

Back in the mid-1920s, the probabilistic worldview proposed by Niels Bohr and others gained favor and took hold. A competing theory, known as pilot-wave theory and proposed by a young Louis de Broglie, was given short shrift. Yet some theorists have long maintained that it may do a better job of closing this core gap in our understanding, so it is time to revisit pilot-wave theory and breathe fresh life into it.

From Wired / Quanta:

For nearly a century, “reality” has been a murky concept. The laws of quantum physics seem to suggest that particles spend much of their time in a ghostly state, lacking even basic properties such as a definite location and instead existing everywhere and nowhere at once. Only when a particle is measured does it suddenly materialize, appearing to pick its position as if by a roll of the dice.

This idea that nature is inherently probabilistic — that particles have no hard properties, only likelihoods, until they are observed — is directly implied by the standard equations of quantum mechanics. But now a set of surprising experiments with fluids has revived old skepticism about that worldview. The bizarre results are fueling interest in an almost forgotten version of quantum mechanics, one that never gave up the idea of a single, concrete reality.

The experiments involve an oil droplet that bounces along the surface of a liquid. The droplet gently sloshes the liquid with every bounce. At the same time, ripples from past bounces affect its course. The droplet’s interaction with its own ripples, which form what’s known as a pilot wave, causes it to exhibit behaviors previously thought to be peculiar to elementary particles — including behaviors seen as evidence that these particles are spread through space like waves, without any specific location, until they are measured.

Particles at the quantum scale seem to do things that human-scale objects do not do. They can tunnel through barriers, spontaneously arise or annihilate, and occupy discrete energy levels. This new body of research reveals that oil droplets, when guided by pilot waves, also exhibit these quantum-like features.

To some researchers, the experiments suggest that quantum objects are as definite as droplets, and that they too are guided by pilot waves — in this case, fluid-like undulations in space and time. These arguments have injected new life into a deterministic (as opposed to probabilistic) theory of the microscopic world first proposed, and rejected, at the birth of quantum mechanics.

“This is a classical system that exhibits behavior that people previously thought was exclusive to the quantum realm, and we can say why,” said John Bush, a professor of applied mathematics at the Massachusetts Institute of Technology who has led several recent bouncing-droplet experiments. “The more things we understand and can provide a physical rationale for, the more difficult it will be to defend the ‘quantum mechanics is magic’ perspective.”

Magical Measurements

The orthodox view of quantum mechanics, known as the “Copenhagen interpretation” after the home city of Danish physicist Niels Bohr, one of its architects, holds that particles play out all possible realities simultaneously. Each particle is represented by a “probability wave” weighting these various possibilities, and the wave collapses to a definite state only when the particle is measured. The equations of quantum mechanics do not address how a particle’s properties solidify at the moment of measurement, or how, at such moments, reality picks which form to take. But the calculations work. As Seth Lloyd, a quantum physicist at MIT, put it, “Quantum mechanics is just counterintuitive and we just have to suck it up.”

A classic experiment in quantum mechanics that seems to demonstrate the probabilistic nature of reality involves a beam of particles (such as electrons) propelled one by one toward a pair of slits in a screen. When no one keeps track of each electron’s trajectory, it seems to pass through both slits simultaneously. In time, the electron beam creates a wavelike interference pattern of bright and dark stripes on the other side of the screen. But when a detector is placed in front of one of the slits, its measurement causes the particles to lose their wavelike omnipresence, collapse into definite states, and travel through one slit or the other. The interference pattern vanishes. The great 20th-century physicist Richard Feynman said that this double-slit experiment “has in it the heart of quantum mechanics,” and “is impossible, absolutely impossible, to explain in any classical way.”

Some physicists now disagree. “Quantum mechanics is very successful; nobody’s claiming that it’s wrong,” said Paul Milewski, a professor of mathematics at the University of Bath in England who has devised computer models of bouncing-droplet dynamics. “What we believe is that there may be, in fact, some more fundamental reason why [quantum mechanics] looks the way it does.”

Riding Waves

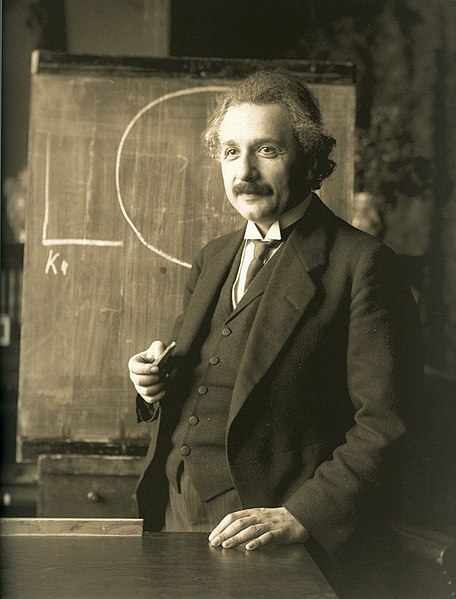

The idea that pilot waves might explain the peculiarities of particles dates back to the early days of quantum mechanics. The French physicist Louis de Broglie presented the earliest version of pilot-wave theory at the 1927 Solvay Conference in Brussels, a famous gathering of the founders of the field. As de Broglie explained that day to Bohr, Albert Einstein, Erwin Schrödinger, Werner Heisenberg and two dozen other celebrated physicists, pilot-wave theory made all the same predictions as the probabilistic formulation of quantum mechanics (which wouldn’t be referred to as the “Copenhagen” interpretation until the 1950s), but without the ghostliness or mysterious collapse.

The probabilistic version, championed by Bohr, involves a single equation that represents likely and unlikely locations of particles as peaks and troughs of a wave. Bohr interpreted this probability-wave equation as a complete definition of the particle. But de Broglie urged his colleagues to use two equations: one describing a real, physical wave, and another tying the trajectory of an actual, concrete particle to the variables in that wave equation, as if the particle interacts with and is propelled by the wave rather than being defined by it.
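In the notation usually used today, de Broglie's two equations can be written as below. This is a modern textbook form for a single non-relativistic particle of mass $m$ in a potential $V$, not his 1927 presentation. The first equation is the ordinary Schrödinger equation for the wave $\psi$. The second, the guidance equation, sets the velocity of the actual particle at its position $\mathbf{Q}(t)$ from the wave evaluated there:

$$
\begin{aligned}
i\hbar\,\frac{\partial \psi}{\partial t} &= -\frac{\hbar^{2}}{2m}\nabla^{2}\psi + V\psi,\\
\frac{d\mathbf{Q}}{dt} &= \frac{\hbar}{m}\,\operatorname{Im}\!\left(\frac{\nabla\psi}{\psi}\right)\bigg|_{\mathbf{x}=\mathbf{Q}(t)}.
\end{aligned}
$$

Writing $\psi = R\,e^{iS/\hbar}$, the second equation reduces to $\mathbf{v} = \nabla S/m$. In other words, the particle is carried along by the phase of the wave, which is the sense in which it is propelled by the wave rather than defined by it.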

For example, consider the double-slit experiment. In de Broglie’s pilot-wave picture, each electron passes through just one of the two slits, but is influenced by a pilot wave that splits and travels through both slits. Like flotsam in a current, the particle is drawn to the places where the two wavefronts cooperate, and does not go where they cancel out.

De Broglie could not predict the exact place where an individual particle would end up — just like Bohr’s version of events, pilot-wave theory predicts only the statistical distribution of outcomes, or the bright and dark stripes — but the two men interpreted this shortcoming differently. Bohr claimed that particles don’t have definite trajectories; de Broglie argued that they do, but that we can’t measure each particle’s initial position well enough to deduce its exact path.

In principle, however, the pilot-wave theory is deterministic: The future evolves dynamically from the past, so that, if the exact state of all the particles in the universe were known at a given instant, their states at all future times could be calculated.
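To make the determinism concrete, here is a minimal sketch of our own (it is not from the article). It integrates the guidance equation in one dimension for about the simplest case available: a free particle whose wave is a Gaussian packet centred at zero with no mean momentum. The packet spreads as time passes. The units $\hbar = m = 1$ and the initial width $\sigma_0 = 1$ are hypothetical choices for illustration. For this packet the exact pilot-wave path is known, $x(t) = x_0\sqrt{1 + (\hbar t / 2m\sigma_0^2)^2}$, so the numerical trajectory can be checked against it.

```python
import math

# Illustrative constants (assumptions): natural units and a unit initial width.
HBAR = 1.0
MASS = 1.0
SIGMA0 = 1.0


def log_derivative(x: float, t: float) -> complex:
    """(dpsi/dx)/psi for a free Gaussian packet centred at 0 with zero mean momentum.

    psi(x, t) is proportional to exp(-x**2 / (4 * SIGMA0 * s_t)),
    where s_t = SIGMA0 * (1 + i*hbar*t / (2*m*SIGMA0**2)).
    """
    s_t = SIGMA0 * (1 + 1j * HBAR * t / (2 * MASS * SIGMA0**2))
    return -x / (2 * SIGMA0 * s_t)


def velocity(x: float, t: float) -> float:
    """Guidance equation in 1-D: v = (hbar/m) * Im(psi'/psi) at the particle's position."""
    return (HBAR / MASS) * log_derivative(x, t).imag


def trajectory(x0: float, t_end: float, steps: int = 10_000) -> float:
    """Integrate dx/dt = v(x, t) from x(0) = x0 with classical RK4; return x(t_end)."""
    dt = t_end / steps
    x, t = x0, 0.0
    for _ in range(steps):
        k1 = velocity(x, t)
        k2 = velocity(x + 0.5 * dt * k1, t + 0.5 * dt)
        k3 = velocity(x + 0.5 * dt * k2, t + 0.5 * dt)
        k4 = velocity(x + dt * k3, t + dt)
        x += dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += dt
    return x


def analytic(x0: float, t: float) -> float:
    """Closed-form path for this packet: the start point scaled by the packet's growth factor."""
    tau = HBAR * t / (2 * MASS * SIGMA0**2)
    return x0 * math.sqrt(1 + tau**2)


if __name__ == "__main__":
    for x0 in (-1.0, 0.5, 2.0):
        print(f"x0={x0:+.1f}  numeric={trajectory(x0, 4.0):+.6f}  exact={analytic(x0, 4.0):+.6f}")
```

Each starting point $x_0$ yields exactly one path. In this example every particle rides outward as the packet spreads, and no two paths cross. The statistical spread of outcomes that both interpretations predict comes entirely from not knowing $x_0$. Pilot-wave theory assumes that $x_0$ is distributed as $|\psi(x, 0)|^2$.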

At the Solvay conference, Einstein objected to a probabilistic universe, quipping, “God does not play dice,” but he seemed ambivalent about de Broglie’s alternative. Bohr told Einstein to “stop telling God what to do,” and (for reasons that remain in dispute) he won the day. By 1932, when the Hungarian-American mathematician John von Neumann claimed to have proven that the probabilistic wave equation in quantum mechanics could have no “hidden variables” (that is, missing components, such as de Broglie’s particle with its well-defined trajectory), pilot-wave theory was so poorly regarded that most physicists believed von Neumann’s proof without even reading a translation.

More than 30 years would pass before von Neumann’s proof was shown to be false, but by then the damage was done. The physicist David Bohm resurrected pilot-wave theory in a modified form in 1952, with Einstein’s encouragement, and made clear that it did work, but it never caught on. (The theory is also known as de Broglie-Bohm theory, or Bohmian mechanics.)

Later, the Northern Irish physicist John Stewart Bell went on to prove a seminal theorem that many physicists today misinterpret as rendering hidden variables impossible. But Bell supported pilot-wave theory. He was the one who pointed out the flaws in von Neumann’s original proof. And in 1986 he wrote that pilot-wave theory “seems to me so natural and simple, to resolve the wave-particle dilemma in such a clear and ordinary way, that it is a great mystery to me that it was so generally ignored.”

The neglect continues. A century down the line, the standard, probabilistic formulation of quantum mechanics has been combined with Einstein’s theory of special relativity and developed into the Standard Model, an elaborate and precise description of most of the particles and forces in the universe. Acclimating to the weirdness of quantum mechanics has become a physicists’ rite of passage. The old, deterministic alternative is not mentioned in most textbooks; most people in the field haven’t heard of it. Sheldon Goldstein, a professor of mathematics, physics and philosophy at Rutgers University and a supporter of pilot-wave theory, blames the “preposterous” neglect of the theory on “decades of indoctrination.” At this stage, Goldstein and several others noted, researchers risk their careers by questioning quantum orthodoxy.

A Quantum Drop

Now at last, pilot-wave theory may be experiencing a minor comeback — at least, among fluid dynamicists. “I wish that the people who were developing quantum mechanics at the beginning of last century had access to these experiments,” Milewski said. “Because then the whole history of quantum mechanics might be different.”

The experiments began a decade ago, when Yves Couder and colleagues at Paris Diderot University discovered that vibrating a silicone oil bath up and down at a particular frequency can induce a droplet to bounce along the surface. The droplet’s path, they found, was guided by the slanted contours of the liquid’s surface generated from the droplet’s own bounces: a mutual particle-wave interaction analogous to de Broglie’s pilot-wave concept.

Read the entire article here.

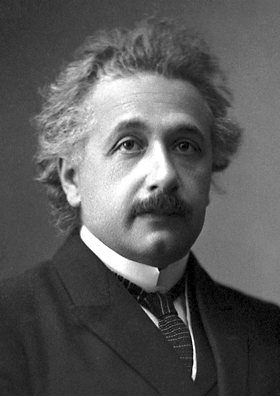

Image: Louis de Broglie. Courtesy of Wikipedia.

Since Einstein first published his elegant theory of General Relativity almost 100 years ago, it has proved to be one of the most powerful and enduring cornerstones of modern science. Yet theorists and researchers the world over know that it cannot possibly remain the sole answer to our cosmological questions. It answers questions about the very, very large (galaxies, stars and planets and the gravitational relationship between them). But it fails to tackle the very, very small (atoms, their constituents and the forces that unite and repel them), the domain of the elegant and complex, but incompatible, Quantum Theory.

There is a certain school of thought that asserts that scientific genius is a thing of the past. After all, we haven’t seen the recent emergence of pivotal talents such as Galileo, Newton, Darwin or Einstein. Is it possible that fundamentally new ways of looking at our world, a new mathematics or a new physics, can no longer emerge?

From Project Syndicate:

Einstein said there would be days like this.