A fascinating article by Nick Lane, a leading researcher into the origins of life. Lane is a Research Fellow at University College London.

He suggests that it would be surprising if simple, bacteria-like life were not common throughout the universe. However, the acquisition of one cell by another, an event that led to all higher organisms on planet Earth, is a far rarer occurrence. So are we alone in the universe?

[div class=attrib]From the New Scientist:[end-div]

UNDER the intense stare of the Kepler space telescope, more and more planets similar to our own are revealing themselves to us. We haven’t found one exactly like Earth yet, but so many are being discovered that it appears the galaxy must be teeming with habitable planets.

These discoveries are bringing an old paradox back into focus. As physicist Enrico Fermi asked in 1950, if there are many suitable homes for life out there and alien life forms are common, where are they all? More than half a century of searching for extraterrestrial intelligence has so far come up empty-handed.

Of course, the universe is a very big place. Even Frank Drake’s famously optimistic “equation” for life’s probability suggests that we will be lucky to stumble across intelligent aliens: they may be out there, but we’ll never know it. That answer satisfies no one, however.

There are deeper explanations. Perhaps alien civilisations appear and disappear in a galactic blink of an eye, destroying themselves long before they become capable of colonising new planets. Or maybe life very rarely gets started even when conditions are perfect.

If we cannot answer these kinds of questions by looking out, might it be possible to get some clues by looking in? Life arose only once on Earth, and if a sample of one were all we had to go on, no grand conclusions could be drawn. But there is more than that. Looking at a vital ingredient for life – energy – suggests that simple life is common throughout the universe, but it does not inevitably evolve into more complex forms such as animals. I might be wrong, but if I’m right, the immense delay between life first appearing on Earth and the emergence of complex life points to another, very different explanation for why we have yet to discover aliens.

Living things consume an extraordinary amount of energy, just to go on living. The food we eat gets turned into the fuel that powers all living cells, called ATP. This fuel is continually recycled: over the course of a day, humans each churn through 70 to 100 kilograms of the stuff. This huge quantity of fuel is made by enzymes, biological catalysts fine-tuned over aeons to extract every last joule of usable energy from reactions.

The enzymes that powered the first life cannot have been as efficient, and the first cells must have needed a lot more energy to grow and divide – probably thousands or millions of times as much energy as modern cells. The same must be true throughout the universe.

This phenomenal energy requirement is often left out of considerations of life’s origin. What could the primordial energy source have been here on Earth? Old ideas of lightning or ultraviolet radiation just don’t pass muster. Aside from the fact that no living cells obtain their energy this way, there is nothing to focus the energy in one place. The first life could not go looking for energy, so it must have arisen where energy was plentiful.

Today, most life ultimately gets its energy from the sun, but photosynthesis is complex and probably didn’t power the first life. So what did? Reconstructing the history of life by comparing the genomes of simple cells is fraught with problems. Nevertheless, such studies all point in the same direction. The earliest cells seem to have gained their energy and carbon from the gases hydrogen and carbon dioxide. The reaction of H2 with CO2 produces organic molecules directly, and releases energy. That is important, because it is not enough to form simple molecules: it takes buckets of energy to join them up into the long chains that are the building blocks of life.

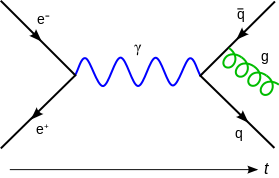

A second clue to how the first life got its energy comes from the energy-harvesting mechanism found in all known life forms. This mechanism was so unexpected that there were two decades of heated altercations after it was proposed by British biochemist Peter Mitchell in 1961.

Universal force field

Mitchell suggested that cells are powered not by chemical reactions, but by a kind of electricity, specifically by a difference in the concentration of protons (the charged nuclei of hydrogen atoms) across a membrane. Because protons have a positive charge, the concentration difference produces an electrical potential difference between the two sides of the membrane of about 150 millivolts. It might not sound like much, but because it operates over only 5 millionths of a millimetre, the field strength over that tiny distance is enormous, around 30 million volts per metre. That’s equivalent to a bolt of lightning.
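As a quick check on the field-strength figure, the arithmetic can be sketched in a few lines of Python. This is a minimal sketch that assumes a uniform field across the membrane (E = V / d), which is a simplification. The function name `field_strength` is our own illustration and does not come from the article.

```python
# Hypothetical helper: field strength across a membrane, modelled as a uniform
# field E = V / d. Input values are the ones quoted in the article.

def field_strength(potential_volts: float, thickness_metres: float) -> float:
    """Return the electric field strength in volts per metre."""
    return potential_volts / thickness_metres

membrane_potential = 150e-3  # 150 millivolts, expressed in volts
membrane_thickness = 5e-9    # 5 millionths of a millimetre = 5 nanometres, in metres

e_field = field_strength(membrane_potential, membrane_thickness)
print(f"{e_field:.1e} V/m")  # prints "3.0e+07 V/m", i.e. about 30 million volts per metre
```

The tiny thickness in the denominator is what turns a modest 150 millivolts into such a large field.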

Mitchell called this electrical driving force the proton-motive force. It sounds like a term from Star Wars, and that’s not inappropriate. Essentially, all cells are powered by a force field as universal to life on Earth as the genetic code. This tremendous electrical potential can be tapped directly, to drive the motion of flagella, for instance, or harnessed to make the energy-rich fuel ATP.

However, the way in which this force field is generated and tapped is extremely complex. The enzyme that makes ATP is a rotating motor powered by the inward flow of protons. Another protein that helps to generate the membrane potential, NADH dehydrogenase, is like a steam engine, with a moving piston for pumping out protons. These amazing nanoscopic machines must be the product of prolonged natural selection. They could not have powered life from the beginning, which leaves us with a paradox.

[div class=attrib]Read the entire article following the jump.[end-div]

[div class=attrib]Image: Transmission electron microscope image of a thin section cut through an area of mammalian lung tissue. The high-magnification image shows a mitochondrion. Courtesy of Wikipedia.[end-div]

The following story is surely a sign of the impending implosion of the next tech bubble — too much easy money flowing to too many bad and lazy ideas.

Despite what seems to be an overwhelmingly digital shift in our lives, we still live in a world of steam. Steam plays a vital role in generating most of the world’s electricity, steam heats our buildings (especially if you live in New York City), and steam sterilizes our medical supplies.

In 2007 UPS made the headlines by declaring left-hand turns for its army of delivery truck drivers undesirable. Of course, we left-handers have always known that our left or “