If you’re an office worker, you will relate. At some point recently, you will have sat in on a team meeting or conference call only to hear at least one person, when asked a question, say: “Sorry, can you please repeat that? I was multitasking.”

Many of us believe, or have been tricked into believing, that doing multiple things at once makes us more productive. Business branded this phenomenon multitasking. After all, if computers could do it, why not humans? Yet experience shows that humans are woefully inadequate at performing multiple concurrent tasks that require dedicated attention. Of course, humans are experts at walking and chewing gum at the same time. However, in the majority of cases such activities require very little involvement from the brain’s higher functions. There is a growing body of anecdotal and experimental evidence showing poorer performance on multiple tasks done concurrently than on the same tasks performed sequentially. In fact, researchers have shown for quite some time that dealing with multiple streams of information at once is a real problem for our limited brains.

Yet most businesses seem to demand or reward multitasking behavior. More damagingly, the multitasking epidemic now seems to have become the norm at home as well.

[div class=attrib]From the WSJ:[end-div]

In the few minutes it takes to read this article, chances are you’ll pause to check your phone, answer a text, switch to your desktop to read an email from the boss’s assistant, or glance at the Facebook or Twitter messages popping up in the corner of your screen. Off-screen, in your open-plan office, crosstalk about a colleague’s preschooler might lure you away, or a co-worker may stop by your desk for a quick question.

And bosses wonder why it is tough to get any work done.

Distraction at the office is hardly new, but as screens multiply and managers push frazzled workers to do more with less, companies say the problem is worsening and is affecting business.

While some firms make noises about workers wasting time on the Web, companies are realizing the problem is partly their own fault.

Even though digital technology has led to significant productivity increases, the modern workday seems custom-built to destroy individual focus. Open-plan offices and an emphasis on collaborative work leave workers with little insulation from colleagues’ chatter. A ceaseless tide of meetings and internal emails means that workers increasingly scramble to get their “real work” done on the margins, early in the morning or late in the evening. And the tempting lure of social-networking streams and status updates make it easy for workers to interrupt themselves.

“It is an epidemic,” says Lacy Roberson, a director of learning and organizational development at eBay Inc. At most companies, it’s a struggle “to get work done on a daily basis, with all these things coming at you,” she says.

Office workers are interrupted—or self-interrupt—roughly every three minutes, academic studies have found, with numerous distractions coming in both digital and human forms. Once thrown off track, it can take some 23 minutes for a worker to return to the original task, says Gloria Mark, a professor of informatics at the University of California, Irvine, who studies digital distraction.

Companies are experimenting with strategies to keep workers focused. Some are limiting internal emails—with one company moving to ban them entirely—while others are reducing the number of projects workers can tackle at a time.

Last year, Jamey Jacobs, a divisional vice president at Abbott Vascular, a unit of health-care company Abbott Laboratories, learned that his 200 employees had grown stressed trying to squeeze in more heads-down, focused work amid the daily thrum of email and meetings.

“It became personally frustrating that they were not getting the things they wanted to get done,” he says. At meetings, attendees were often checking email, trying to multitask and in the process obliterating their focus.

Part of the solution for Mr. Jacobs’s team was that oft-forgotten piece of office technology: the telephone.

Mr. Jacobs and productivity consultant Daniel Markovitz found that employees communicated almost entirely over email, whether the matter was mundane, such as cake in the break room, or urgent, like an equipment issue.

The pair instructed workers to let the importance and complexity of their message dictate whether to use cellphones, office phones or email. Truly urgent messages and complex issues merited phone calls or in-person conversations, while email was reserved for messages that could wait.

Workers now pick up the phone more, logging fewer internal emails and say they’ve got clarity on what’s urgent and what’s not, although Mr. Jacobs says staff still have to stay current with emails from clients or co-workers outside the group.

[div class=attrib]Read the entire article after the jump, and learn more in this insightful article on multitasking over at Big Think.[end-div]

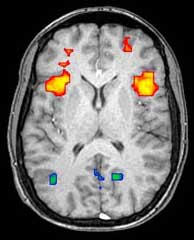

[div class=attrib]Image courtesy of Big Think.[end-div]

Auditory neuroscientist Seth Horowitz guides us through the science of hearing and listening in his new book, “The Universal Sense: How Hearing Shapes the Mind.” He clarifies the important distinction between attentive listening with the mind and the more passive act of hearing, and laments the many modern distractions that threaten our ability to listen effectively.

Auditory neuroscientist Seth Horowitz guides us through the science of hearing and listening in his new book, “The Universal Sense: How Hearing Shapes the Mind.” He clarifies the important distinction between attentive listening with the mind and the more passive act of hearing, and laments the many modern distractions that threaten our ability to listen effectively. A yearlong survey of moodiness shows that the so-called Monday Blues may be more figment of the imagination than fact.

A yearlong survey of moodiness shows that the so-called Monday Blues may be more figment of the imagination than fact. For readers of thediagonal in North America “neurobollocks” would roughly translate to “neurobullshit”.

For readers of thediagonal in North America “neurobollocks” would roughly translate to “neurobullshit”. [div class=attrib]From Scientific American:[end-div]

[div class=attrib]From Scientific American:[end-div] [div class=attrib]From Scientific American:[end-div]

[div class=attrib]From Scientific American:[end-div]

There is a small but mounting body of evidence that supports the notion of the so-called Runner’s High, a state of euphoria attained by athletes during and immediately following prolonged and vigorous exercise. But while the neurochemical basis for this may soon be understood little is known as to why this happens. More on the how and the why from Scicurious Brain.

There is a small but mounting body of evidence that supports the notion of the so-called Runner’s High, a state of euphoria attained by athletes during and immediately following prolonged and vigorous exercise. But while the neurochemical basis for this may soon be understood little is known as to why this happens. More on the how and the why from Scicurious Brain.

[div class=attrib]From the Economist:[end-div]

[div class=attrib]From the Economist:[end-div]

[div class=attrib]From the New Scientist:[end-div]

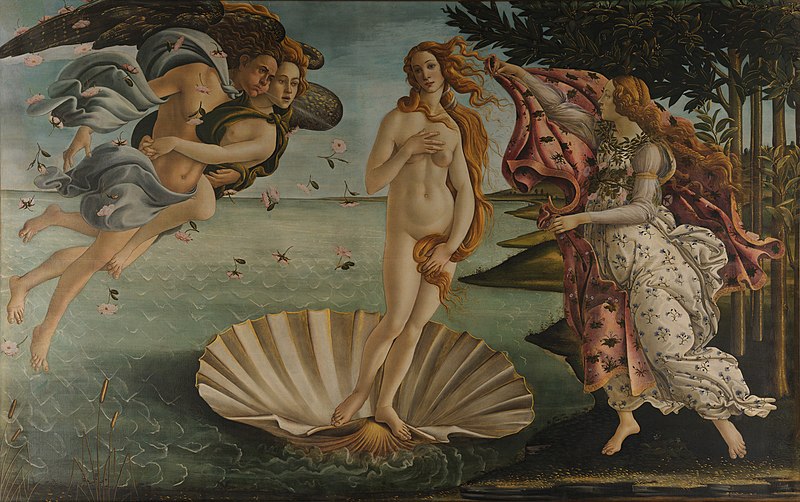

[div class=attrib]From the New Scientist:[end-div] As in all other branches of science, there seem to be fascinating new theories, research and discoveries in neuroscience on a daily, if not hourly, basis. With this in mind, brain and cognitive researchers have recently turned their attentions to the science of art, or more specifically to addressing the question “how does the human brain appreciate art?” Yes, welcome to the world of “neuroaesthetics”.

As in all other branches of science, there seem to be fascinating new theories, research and discoveries in neuroscience on a daily, if not hourly, basis. With this in mind, brain and cognitive researchers have recently turned their attentions to the science of art, or more specifically to addressing the question “how does the human brain appreciate art?” Yes, welcome to the world of “neuroaesthetics”. Contemporary medical and surgical procedures have been completely transformed through the use of patient anaesthesia. Prior to the first use of diethyl ether as an anaesthetic in the United States in 1842, surgery, even for minor ailments, was often a painful process of last resort.

Contemporary medical and surgical procedures have been completely transformed through the use of patient anaesthesia. Prior to the first use of diethyl ether as an anaesthetic in the United States in 1842, surgery, even for minor ailments, was often a painful process of last resort. Daniel Kahneman brings together for the first time his decades of groundbreaking research and profound thinking in social psychology and cognitive science in his new book, Thinking Fast and Slow. He presents his current understanding of judgment and decision making and offers insight into how we make choices in our daily lives. Importantly, Kahneman describes how we can identify and overcome the cognitive biases that frequently lead us astray. This is an important work by one of our leading thinkers.

Daniel Kahneman brings together for the first time his decades of groundbreaking research and profound thinking in social psychology and cognitive science in his new book, Thinking Fast and Slow. He presents his current understanding of judgment and decision making and offers insight into how we make choices in our daily lives. Importantly, Kahneman describes how we can identify and overcome the cognitive biases that frequently lead us astray. This is an important work by one of our leading thinkers.

Neuroscientists continue to find interesting experimental evidence that we do not have free will. Many philosophers continue to dispute this notion and cite inconclusive results and lack of holistic understanding of decision-making on the part of brain scientists. An

Neuroscientists continue to find interesting experimental evidence that we do not have free will. Many philosophers continue to dispute this notion and cite inconclusive results and lack of holistic understanding of decision-making on the part of brain scientists. An  [div class=attrib]From Neuroskeptic:[end-div]

[div class=attrib]From Neuroskeptic:[end-div] But what of the “why” of beauty. Why is the perception of beauty socially and cognitively important and why did it evolve? After all, as Jonah Lehrer over at Wired questions:

But what of the “why” of beauty. Why is the perception of beauty socially and cognitively important and why did it evolve? After all, as Jonah Lehrer over at Wired questions: [div class attrib]From Wilson Quarterly:[end-div]

[div class attrib]From Wilson Quarterly:[end-div] [div class=attrib]From Scientific American:[end-div]

[div class=attrib]From Scientific American:[end-div]