Much has been written about consciousness in both humanities and scientific journals. Scholars continue to probe and pontificate and theorize. And yet we seem to know more of the ocean depths and our cosmos than we do of that interminable, self-aware inner voice that sits behind our eyes.

From the Guardian:

One spring morning in Tucson, Arizona, in 1994, an unknown philosopher named David Chalmers got up to give a talk on consciousness, by which he meant the feeling of being inside your head, looking out – or, to use the kind of language that might give a neuroscientist an aneurysm, of having a soul. Though he didn’t realise it at the time, the young Australian academic was about to ignite a war between philosophers and scientists, by drawing attention to a central mystery of human life – perhaps the central mystery of human life – and revealing how embarrassingly far they were from solving it.

The scholars gathered at the University of Arizona – for what would later go down as a landmark conference on the subject – knew they were doing something edgy: in many quarters, consciousness was still taboo, too weird and new agey to take seriously, and some of the scientists in the audience were risking their reputations by attending. Yet the first two talks that day, before Chalmers’s, hadn’t proved thrilling. “Quite honestly, they were totally unintelligible and boring – I had no idea what anyone was talking about,” recalled Stuart Hameroff, the Arizona professor responsible for the event. “As the organiser, I’m looking around, and people are falling asleep, or getting restless.” He grew worried. “But then the third talk, right before the coffee break – that was Dave.” With his long, straggly hair and fondness for all-body denim, the 27-year-old Chalmers looked like he’d got lost en route to a Metallica concert. “He comes on stage, hair down to his butt, he’s prancing around like Mick Jagger,” Hameroff said. “But then he speaks. And that’s when everyone wakes up.”

The brain, Chalmers began by pointing out, poses all sorts of problems to keep scientists busy. How do we learn, store memories, or perceive things? How do you know to jerk your hand away from scalding water, or hear your name spoken across the room at a noisy party? But these were all “easy problems”, in the scheme of things: given enough time and money, experts would figure them out. There was only one truly hard problem of consciousness, Chalmers said. It was a puzzle so bewildering that, in the months after his talk, people started dignifying it with capital letters – the Hard Problem of Consciousness – and it’s this: why on earth should all those complicated brain processes feel like anything from the inside? Why aren’t we just brilliant robots, capable of retaining information, of responding to noises and smells and hot saucepans, but dark inside, lacking an inner life? And how does the brain manage it? How could the 1.4kg lump of moist, pinkish-beige tissue inside your skull give rise to something as mysterious as the experience of being that pinkish-beige lump, and the body to which it is attached?

What jolted Chalmers’s audience from their torpor was how he had framed the question. “At the coffee break, I went around like a playwright on opening night, eavesdropping,” Hameroff said. “And everyone was like: ‘Oh! The Hard Problem! The Hard Problem! That’s why we’re here!’” Philosophers had pondered the so-called “mind-body problem” for centuries. But Chalmers’s particular manner of reviving it “reached outside philosophy and galvanised everyone. It defined the field. It made us ask: what the hell is this that we’re dealing with here?”

Two decades later, we know an astonishing amount about the brain: you can’t follow the news for a week without encountering at least one more tale about scientists discovering the brain region associated with gambling, or laziness, or love at first sight, or regret – and that’s only the research that makes the headlines. Meanwhile, the field of artificial intelligence – which focuses on recreating the abilities of the human brain, rather than on what it feels like to be one – has advanced stupendously. But like an obnoxious relative who invites himself to stay for a week and then won’t leave, the Hard Problem remains. When I stubbed my toe on the leg of the dining table this morning, as any student of the brain could tell you, nerve fibres called “C-fibres” shot a message to my spinal cord, sending neurotransmitters to the part of my brain called the thalamus, which activated (among other things) my limbic system. Fine. But how come all that was accompanied by an agonising flash of pain? And what is pain, anyway?

Questions like these, which straddle the border between science and philosophy, make some experts openly angry. They have caused others to argue that conscious sensations, such as pain, don’t really exist, no matter what I felt as I hopped in anguish around the kitchen; or, alternatively, that plants and trees must also be conscious. The Hard Problem has prompted arguments in serious journals about what is going on in the mind of a zombie, or – to quote the title of a famous 1974 paper by the philosopher Thomas Nagel – the question “What is it like to be a bat?” Some argue that the problem marks the boundary not just of what we currently know, but of what science could ever explain. On the other hand, in recent years, a handful of neuroscientists have come to believe that it may finally be about to be solved – but only if we are willing to accept the profoundly unsettling conclusion that computers or the internet might soon become conscious, too.

Next week, the conundrum will move further into public awareness with the opening of Tom Stoppard’s new play, The Hard Problem, at the National Theatre – the first play Stoppard has written for the National since 2006, and the last that the theatre’s head, Nicholas Hytner, will direct before leaving his post in March. The 77-year-old playwright has revealed little about the play’s contents, except that it concerns the question of “what consciousness is and why it exists”, considered from the perspective of a young researcher played by Olivia Vinall. Speaking to the Daily Mail, Stoppard also clarified a potential misinterpretation of the title. “It’s not about erectile dysfunction,” he said.

Stoppard’s work has long focused on grand, existential themes, so the subject is fitting: when conversation turns to the Hard Problem, even the most stubborn rationalists lapse quickly into musings on the meaning of life. Christof Koch, the chief scientific officer at the Allen Institute for Brain Science, and a key player in the Obama administration’s multibillion-dollar initiative to map the human brain, is about as credible as neuroscientists get. But, he told me in December: “I think the earliest desire that drove me to study consciousness was that I wanted, secretly, to show myself that it couldn’t be explained scientifically. I was raised Roman Catholic, and I wanted to find a place where I could say: OK, here, God has intervened. God created souls, and put them into people.” Koch assured me that he had long ago abandoned such improbable notions. Then, not much later, and in all seriousness, he said that on the basis of his recent research he thought it wasn’t impossible that his iPhone might have feelings.

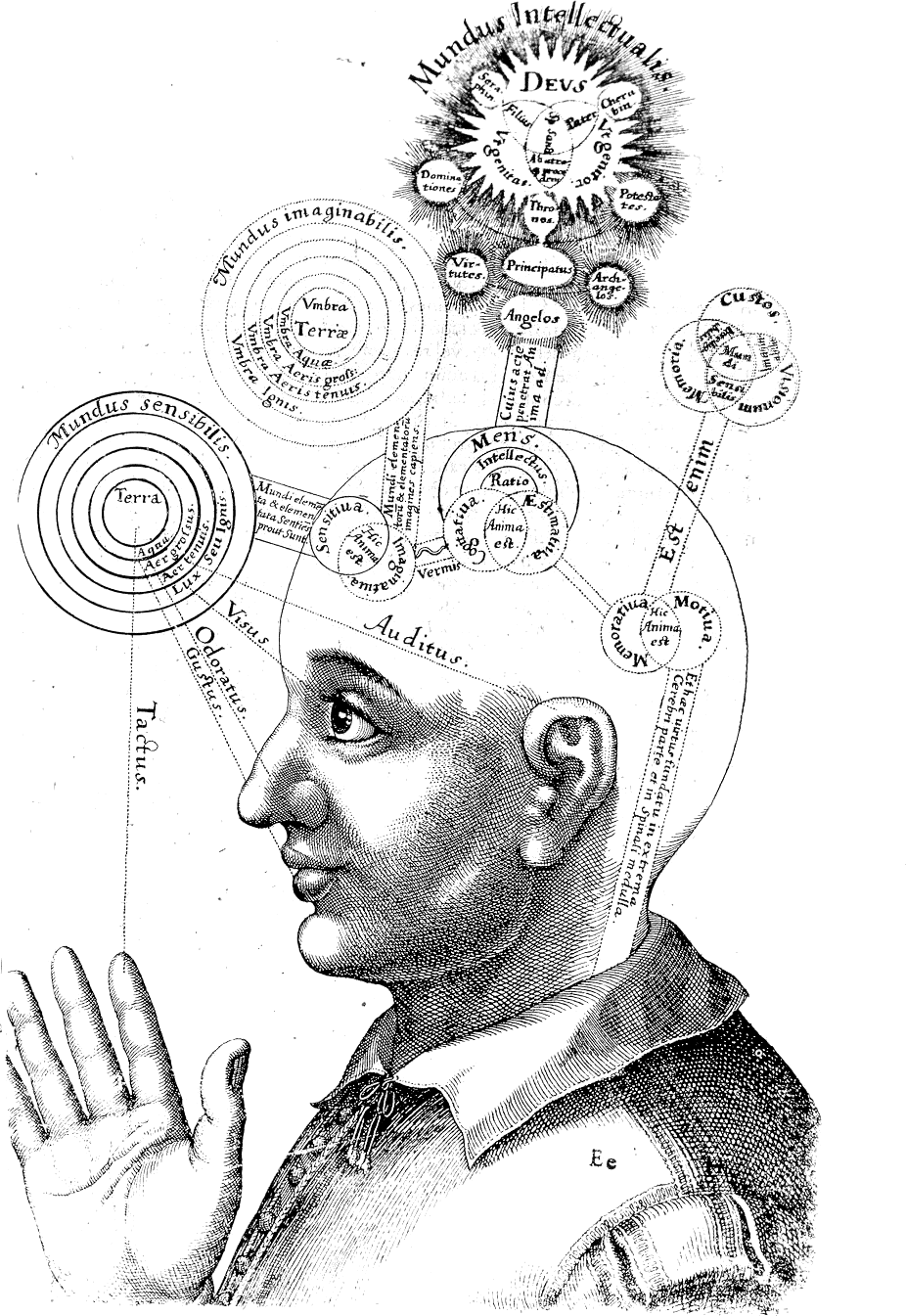

By the time Chalmers delivered his speech in Tucson, science had been vigorously attempting to ignore the problem of consciousness for a long time. The source of the animosity dates back to the 1600s, when René Descartes identified the dilemma that would tie scholars in knots for years to come. On the one hand, Descartes realised, nothing is more obvious and undeniable than the fact that you’re conscious. In theory, everything else you think you know about the world could be an elaborate illusion cooked up to deceive you – at this point, present-day writers invariably invoke The Matrix – but your consciousness itself can’t be illusory. On the other hand, this most certain and familiar of phenomena obeys none of the usual rules of science. It doesn’t seem to be physical. It can’t be observed, except from within, by the conscious person. It can’t even really be described. The mind, Descartes concluded, must be made of some special, immaterial stuff that didn’t abide by the laws of nature; it had been bequeathed to us by God.

This religious and rather hand-wavy position, known as Cartesian dualism, remained the governing assumption into the 18th century and the early days of modern brain study. But it was always bound to grow unacceptable to an increasingly secular scientific establishment that took physicalism – the position that only physical things exist – as its most basic principle. And yet, even as neuroscience gathered pace in the 20th century, no convincing alternative explanation was forthcoming. So little by little, the topic became taboo. Few people doubted that the brain and mind were very closely linked: if you question this, try stabbing your brain repeatedly with a kitchen knife, and see what happens to your consciousness. But how they were linked – or if they were somehow exactly the same thing – seemed a mystery best left to philosophers in their armchairs. As late as 1989, writing in the International Dictionary of Psychology, the British psychologist Stuart Sutherland could irascibly declare of consciousness that “it is impossible to specify what it is, what it does, or why it evolved. Nothing worth reading has been written on it.”

It was only in 1990 that Francis Crick, the joint discoverer of the double helix, used his position of eminence to break ranks. Neuroscience was far enough along by now, he declared in a slightly tetchy paper co-written with Christof Koch, that consciousness could no longer be ignored. “It is remarkable,” they began, “that most of the work in both cognitive science and the neurosciences makes no reference to consciousness” – partly, they suspected, “because most workers in these areas cannot see any useful way of approaching the problem”. They presented their own “sketch of a theory”, arguing that certain neurons, firing at certain frequencies, might somehow be the cause of our inner awareness – though it was not clear how.

Read the entire story here.

Image courtesy of Google Search.

Contemporary medical and surgical procedures have been completely transformed through the use of patient anaesthesia. Prior to the first use of diethyl ether as an anaesthetic in the United States in 1842, surgery, even for minor ailments, was often a painful process of last resort.

Graham Fleming sits down at an L-shaped lab bench, occupying a footprint about the size of two parking spaces. Alongside him, a couple of off-the-shelf lasers spit out pulses of light just millionths of a billionth of a second long. After snaking through a jagged path of mirrors and lenses, these minuscule flashes disappear into a smoky black box containing proteins from green sulfur bacteria, which ordinarily obtain their energy and nourishment from the sun. Inside the black box, optics manufactured to billionths-of-a-meter precision detect something extraordinary: Within the bacterial proteins, dancing electrons make seemingly impossible leaps and appear to inhabit multiple places at once.
