What do you get when you set AI (artificial intelligence) the task of reading through 30,000 Danish folk and fairy tales? Well, you get a host of fascinating, newly discovered insights into Scandinavian witches and trolls.

More importantly, you hammer another nail into the coffin of literary criticism and set AI on a collision course with yet another preserve of once exclusively human endeavor. It’s probably safe to assume that creative writing will fall to intelligent machines in the not too distant future as well; certainly human-powered investigative journalism seemed to become extinct in 2016, replaced by algorithmic aggregation, social bots and fake-mongers.

From Aeon:

Where do witches come from, and what do those places have in common? While browsing a large collection of traditional Danish folktales, the folklorist Timothy Tangherlini and his colleague Peter Broadwell, both at the University of California, Los Angeles, decided to find out. Armed with a geographical index and some 30,000 stories, they developed WitchHunter, an interactive ‘geo-semantic’ map of Denmark that highlights the hotspots for witchcraft.

The system used artificial intelligence (AI) techniques to unearth a trove of surprising insights. For example, they found that evil sorcery often took place close to Catholic monasteries. This made a certain amount of sense, since Catholic sites in Denmark were tarred with diabolical associations after the Protestant Reformation in the 16th century. By plotting the distance and direction of witchcraft relative to the storyteller’s location, WitchHunter also showed that enchantresses tend to be found within the local community, much closer to home than other kinds of threats. ‘Witches and robbers are human threats to the economic stability of the community,’ the researchers write. ‘Yet, while witches threaten from within, robbers are generally situated at a remove from the well-described village, often living in woods, forests, or the heath … it seems that no matter how far one goes, nor where one turns, one is in danger of encountering a witch.’

Such ‘computational folkloristics’ raise a big question: what can algorithms tell us about the stories we love to read? Any proposed answer seems to point to as many uncertainties as it resolves, especially as AI technologies grow in power. Can literature really be sliced up into computable bits of ‘information’, or is there something about the experience of reading that is irreducible? Could AI enhance literary interpretation, or will it alter the field of literary criticism beyond recognition? And could algorithms ever derive meaning from books in the way humans do, or even produce literature themselves?

Author and computational linguist Inderjeet Mani concludes his essay thus:

Computational analysis and ‘traditional’ literary interpretation need not be a winner-takes-all scenario. Digital technology has already started to blur the line between creators and critics. In a similar way, literary critics should start combining their deep expertise with ingenuity in their use of AI tools, as Broadwell and Tangherlini did with WitchHunter. Without algorithmic assistance, researchers would be hard-pressed to make such supernaturally intriguing findings, especially as the quantity and diversity of writing proliferates online.

In the future, scholars who lean on digital helpmates are likely to dominate the rest, enriching our literary culture and changing the kinds of questions that can be explored. Those who resist the temptation to unleash the capabilities of machines will have to content themselves with the pleasures afforded by smaller-scale, and fewer, discoveries. While critics and book reviewers may continue to be an essential part of public cultural life, literary theorists who do not embrace AI will be at risk of becoming an exotic species – like the librarians who once used index cards to search for information.

Read the entire tale here.

Image: Portrait of the Danish writer Hans Christian Andersen. Courtesy: Thora Hallager, 10/16 October 1869. Wikipedia. Public Domain.

If you live somewhere rather toasty you know how painful your electricity bills can be during the summer months. So, wouldn’t it be good to have a system automatically find you the cheapest electricity when you need it most? Welcome to the artificially intelligent smarter smart grid.

The collective IQ of Google, the company, inched up a few notches in January of 2013 when they hired

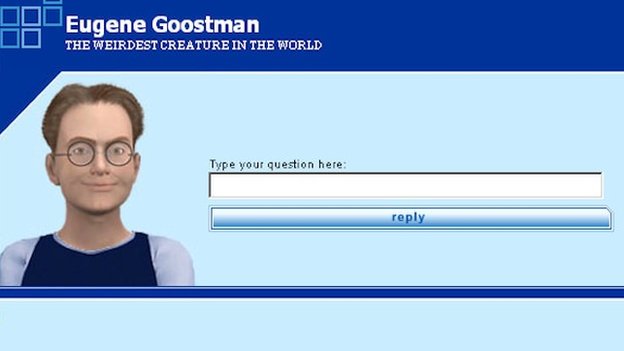

One wonders what the world would look like today had Alan Turing been criminally prosecuted and jailed by the British government for his homosexuality before the Second World War, rather than in 1952. Would the British have been able to break German Naval ciphers encoded by their Enigma machine? Would the German Navy have prevailed, and would the Nazis have gone on to conquer the British Isles?

Fans of science fiction and Isaac Asimov in particular may recall his three laws of robotics:

[div class=attrib]From The New York Times:[end-div]

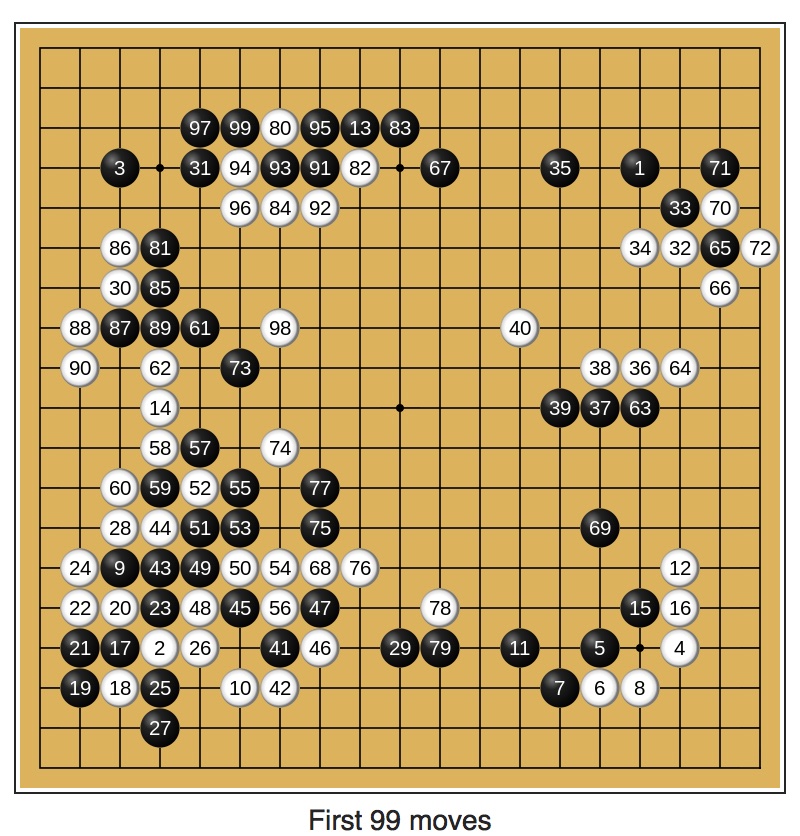

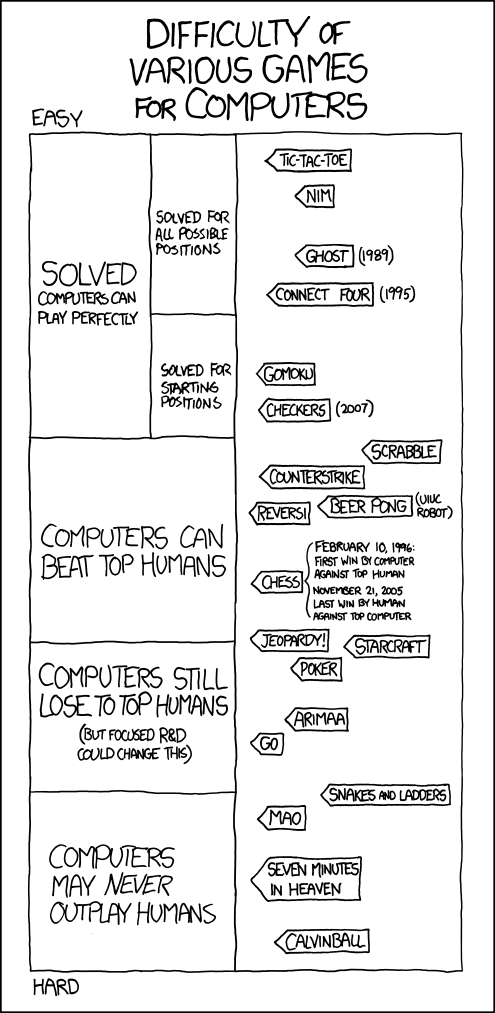

[div class=attrib]By Garry Kasparov, From the New York Review of Books:[end-div]

[div class=attrib]From Discover:[end-div]
