The United States is often cited as the most generous nation on Earth. Unfortunately, it is also one of the most violent, having one of the highest murder rates of any industrialized country. Why this tragic paradox?

In an absorbing, well-researched article excerpted below, anthropologist Eric Michael Johnson points to the lack of social capital on a local and national scale. Here, social capital is defined as interpersonal trust that promotes cooperation between citizens and groups for mutual benefit.

So, combine a culture that allows convenient access to very effective weapons with broad inequality, social isolation and distrust, and you get a very sobering picture: a country where around 35 people are killed each day by others wielding guns (25,423 firearm homicides over the two years 2006-2007, based on Centers for Disease Control statistics).

[div class=attrib]From Scientific American:[end-div]

The United States is the deadliest wealthy country in the world. Can science help us explain, or even solve, our national crisis?

His tortured and sadistic grin beamed like a full moon on that dark night. “Madness, as you know, is like gravity,” he cackled. “All it takes is a little push.” But once the house lights rose, the terror was lifted for most of us. Few imagined that the fictive evil on screen back in 2008 would later inspire a depraved act of mass murder by a young man sitting with us in the audience, a student of neuroscience whose mind was teetering on the edge. What was it that pushed him over?

In the wake of the tragedy that struck Aurora, Colorado, last Friday, there remain more questions than answers. Just like last time–in January 2011, when Congresswoman Gabrielle Giffords and 18 others were shot in Tucson, Arizona, or before that in April 2007, when a deranged gunman attacked students and staff at Virginia Tech–this senseless mass shooting has given rise to a national conversation as we struggle to find meaning in the madness.

While everyone agrees the blame should ultimately be placed on the perpetrator of this violence, the fact remains that the United States has one of the highest murder rates in the industrialized world. Of the 34 countries in the Organisation for Economic Co-operation and Development (OECD), the U.S. ranks fifth in homicides just behind Brazil (highest), Mexico, Russia, and Estonia. Our nation also holds the dubious honor of being responsible for half of the worst mass shootings in the last 30 years. How can we explain why the United States has nearly three times more murders per capita than neighboring Canada and ten times more than Japan? What makes the land of the free such a dangerous place to live?

Diagnosing a Murder

There have been hundreds of thoughtful explorations of this problem in the last week, though three in particular have encapsulated the major issues. Could it be, as science writer David Dobbs argues at Wired, that “an American culture that fetishizes violence,” such as the Batman franchise itself, has contributed to our fall? “Culture shapes the expression of mental dysfunction,” Dobbs writes, “just as it does other traits.”

Perhaps the push arrived with the collision of other factors, as veteran journalist Bill Moyers maintains, when the dark side of human nature encountered political allies who nurture our destructive impulses? “Violence is our alter ego, wired into our Stone Age brains,” he says. “The NRA is the best friend a killer’s instinct ever had.”

But then again maybe there is an economic explanation, as my Scientific American colleague John Horgan believes, citing a hypothesis by McMaster University evolutionary psychologists Martin Daly and his late wife Margo Wilson. “Daly and Wilson found a strong correlation between high Gini scores [a measure of inequality] and high homicide rates in Canadian provinces and U.S. counties,” Horgan writes, “blaming homicides not on poverty per se but on the collision of poverty and affluence, the ancient tug-of-war between haves and have-nots.”

In all three cases, as it was with other culprits such as the lack of religion in public schools or the popularity of violent video games (both of which are found in other wealthy countries and can be dismissed), commentators are looking at our society as a whole rather than specific details of the murderer’s background. The hope is that, if we can isolate the factor which pushes some people to murder their fellow citizens, perhaps we can alter our social environment and reduce the likelihood that these terrible acts will be repeated in the future. The only problem is, which one could it be?

…

The Exceptionalism of American Violence

As it turns out, the “social capital” Sapolsky found that made the Forest Troop baboons so peaceful is an important missing factor that can explain our high homicide rate in the United States. In 1999 Ichiro Kawachi at the Harvard School of Public Health led a study investigating the factors in American homicide for the journal Social Science and Medicine. His diagnosis was dire.

“If the level of crime is an indicator of the health of society,” Kawachi wrote, “then the US provides an illustrative case study as one of the most unhealthy of modern industrialized nations.” The paper outlined what the most significant causal factors were for this exaggerated level of violence by developing what was called “an ecological theory of crime.” Whereas many other analyses of homicide take a criminal justice approach to the problem–such as the number of cops on the beat, harshness of prison sentences, or adoption of the death penalty–Kawachi used a public health perspective that emphasized social relations.

In all 50 states and the District of Columbia data were collected using the General Social Survey that measured social capital (defined as interpersonal trust that promotes cooperation between citizens for mutual benefit), along with measures of poverty and relative income inequality, homicide rates, incidence of other crimes–rape, robbery, aggravated assault, burglary, larceny, and motor vehicle theft–unemployment, percentage of high school graduates, and average alcohol consumption. By using a statistical method known as principal component analysis Kawachi was then able to identify which ecologic variables were most associated with particular types of crime.

The results were unambiguous: when income inequality was higher, so was the rate of homicide. Income inequality alone explained 74% of the variance in murder rates and half of the aggravated assaults. However, social capital had an even stronger association and, by itself, accounted for 82% of homicides and 61% of assaults. Other factors such as unemployment, poverty, or number of high school graduates were only weakly associated, and alcohol consumption had no connection to violent crime at all. A World Bank-sponsored study subsequently confirmed these results on income inequality, concluding that, worldwide, homicide and the unequal distribution of resources are inextricably tied (see Figure 2). However, the World Bank study didn’t measure social capital. According to Kawachi it is this factor that should be considered primary; when the ties that bind a community together are severed, inequality is allowed to run free, and with deadly consequences.

But what about guns? Multiple studies have shown a direct correlation between the number of guns and the number of homicides. The United States is the most heavily armed country in the world with 90 guns for every 100 citizens. Doesn’t this over-saturation of American firepower explain our exaggerated homicide rate? Maybe not. In a follow-up study in 2001 Kawachi looked specifically at firearm prevalence and social capital among U.S. states. The results showed that when social capital and community involvement declined, gun ownership increased (see Figure 3).

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image: Smith & Wesson M&P Victory model revolver. Courtesy of Oleg Volk / Wikipedia.[end-div]

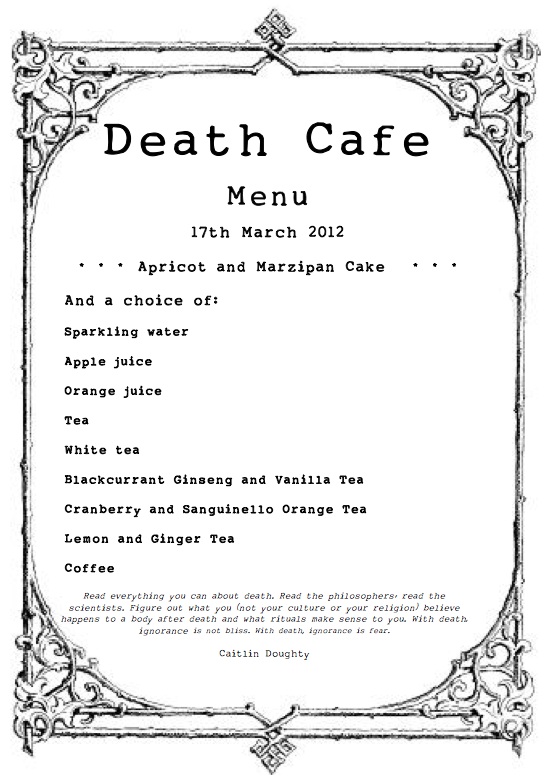

“Death Cafe” sounds like the name of a group of alternative musicians from Denmark. But it’s not. Its rather more literal definition is a coffee shop where customers go to talk about death over a cup of earl grey tea or double shot espresso. And, while it’s not displacing Starbucks (yet), death cafes are a growing trend in Europe, first inspired by the pop-up Cafe Mortels of Switzerland.

Professional photographers take note: there will always be room for high-quality images that tell a story or capture a timeless event or exude artistic elegance. But, your domain is under attack, again — and the results are not particularly pretty. This time courtesy of Instagram.

Social scientists have had Generation-Y, also known as “millennials”, under their microscopes for a while. Born between 1982 and 1999, Gen-Y is now coming of age and becoming a force in the workplace, displacing aging “boomers” as they retire to the hills. So, researchers are now looking at how Gen-Y is faring inside corporate America. Remember, Gen-Y is the “it’s all about me” generation; its members are characterized as typically lazy and spoiled, with a grandiose sense of entitlement, inflated self-esteem and deep emotional fragility. Their predecessors, the baby boomers, on the other hand, are often seen as overbearing, work-obsessed, competitive and narrow-minded. A clash of cultures is taking shape in office cubes across the country as these groups, with such differing personalities and philosophies, tussle within the workplace. However, it may not be all bad: as columnist Emily Matchar argues below, corporate America needs the kind of shake-up that Gen-Y promises.

Ayn Rand: anti-collectivist ideologue, standard-bearer for unapologetic individualism and rugged self-reliance, or selfish, fantasist and elitist hypocrite?

We excerpt a fascinating article from io9 on the association of science fiction with philosophical inquiry. It’s quite remarkable that this genre of literature can provide such a rich vein for philosophers to mine, often more so than reality itself. Though, it is no coincidence that our greatest authors of science fiction were, and are, amateur philosophers at heart.

There is no doubt that online reviews for products and services, from books to new cars to vacation spots, have revolutionized shopping behavior. Internet and mobile technology have made gathering, reviewing and publishing open and honest crowdsourced opinion simple, efficient and ubiquitous.

A fascinating case study shows how Microsoft failed its employees through misguided HR (human resources) policies that pitted colleague against colleague.

Robert J. Samuelson paints a sobering picture of the once credible and seemingly attainable American Dream: the generational progress of upward mobility is no longer a given. He is the author of “The Great Inflation and Its Aftermath: The Past and Future of American Affluence”.

The United States is gripped by political deadlock. The Do-Nothing Congress consistently gets lower approval ratings than our banks, Paris Hilton, lawyers and BP during the catastrophe in the Gulf of Mexico. This stasis is driven by seemingly intractable ideological beliefs and a no-compromise attitude from both the left and right sides of the aisle.

Ex-Facebook employee number 51 gives us a glimpse from within the social network giant. It’s a tale of social isolation, shallow relationships, voyeurism, and narcissistic performance art. It’s also a tale of the re-discovery of life prior to “likes”, “status updates”, “tweets” and “followers”.

Hot from the TechnoSensual Exposition in Vienna, Austria, come clothes that can be made transparent or opaque, and clothes that can detect a wearer telling a lie. While the value of the former may seem dubious outside of the home, the latter invention should be a mandatory garment for all politicians and bankers. Or, for less adventurous millinery fashionistas, how about a hat that reacts to ambient radio waves?

We excerpt below a fascinating article from the WSJ on the increasingly incestuous and damaging relationship between the finance industry and our political institutions.

When it comes to music, a generational gap has always been with us, separating young from old. Thus, without fail, parents will remark that the music listened to by their kids is loud and monotonous, nothing like the varied and much better music that they consumed in their younger days.

Yet another research study of gender differences shows some fascinating variation in the way men and women see and process their perceptions of others. Men tend to be perceived as a whole; women, on the other hand, are more likely to be perceived as parts.

For the first time scientists have built a computer software model of an entire organism from its molecular building blocks. This allows the model to predict previously unobserved cellular biological processes and behaviors. While the organism in question is a simple bacterium, this represents another huge advance in computational biology.

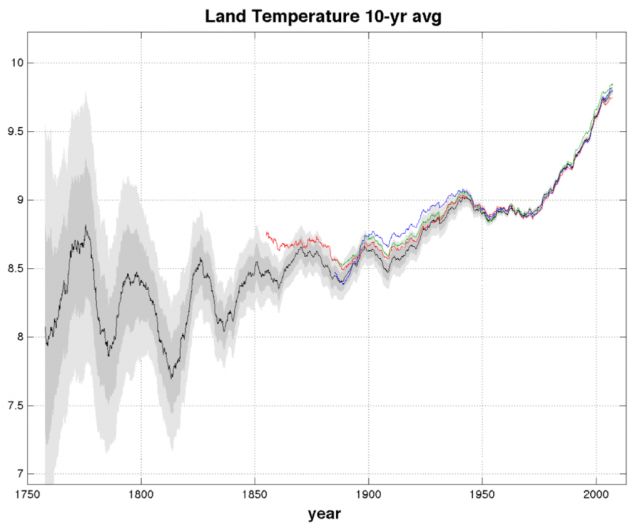

Author and environmentalist Bill McKibben has been writing about climate change and environmental issues for over 20 years. His first book, The End of Nature, was published in 1989, and is considered to be the first book aimed at the general public on the subject of climate change.
