Long before the first galaxy clusters and the first galaxies appeared in our universe, and before the first stars, came the first basic elements — hydrogen, helium and lithium.

Results from a just-published study identify these raw materials from what is theorized to be the universe’s first few minutes of existence.

[div class=attrib]From Scientific American:[end-div]

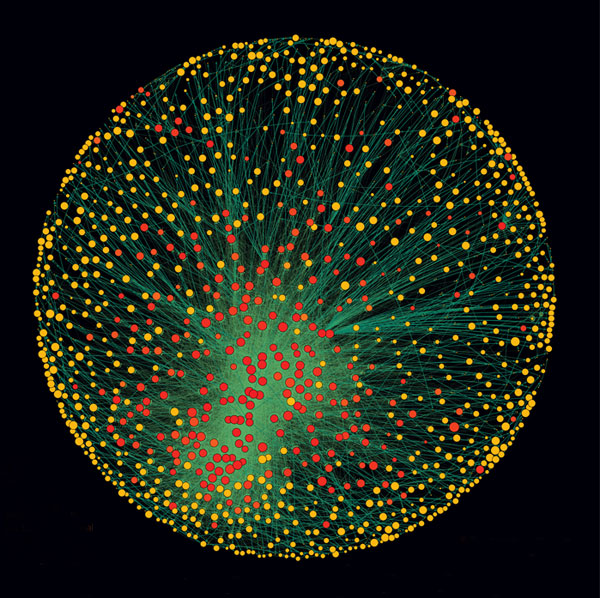

By peering into the distance with the biggest and best telescopes in the world, astronomers have managed to glimpse exploding stars, galaxies and other glowing cosmic beacons as they appeared just hundreds of millions of years after the big bang. They are so far away that their light is only now reaching Earth, even though it was emitted more than 13 billion years ago.

Astronomers have been able to identify those objects in the early universe because their bright glow has remained visible even after a long, universe-spanning journey. But spotting the raw materials from which the first cosmic structures formed—the gas produced as the infant universe expanded and cooled in the first few minutes after the big bang—has not been possible. That material is not itself luminous, and everywhere astronomers have looked they have found not the primordial light-element gases hydrogen, helium and lithium from the big bang but rather material polluted by heavier elements, which form only in stellar interiors and in cataclysms such as supernovae.

Now a group of researchers reports identifying the first known pockets of pristine gas, two relics of those first minutes of the universe’s existence. The team found a pair of gas clouds that contain no detectable heavy elements whatsoever by looking at distant quasars and the intervening material they illuminate. Quasars are bright objects powered by a ravenous black hole, and the spectral quality of their light reveals what it passed through on its way to Earth, in much the same way that the lamp of a projector casts the colors of film onto a screen. The findings appeared online November 10 in Science.

“We found two gas clouds that show a significant abundance of hydrogen, so we know that they are there,” says lead study author Michele Fumagalli, a graduate student at the University of California, Santa Cruz. One of the clouds also shows traces of deuterium, also known as heavy hydrogen, the nucleus of which contains not only a proton, as ordinary hydrogen does, but also a neutron. Deuterium should have been produced in big bang nucleosynthesis but is easily destroyed, so its presence is indicative of a pristine environment. The amount of deuterium present agrees with theoretical predictions about the mixture of elements that should have emerged from the big bang. “But we don’t see any trace of heavier elements like carbon, oxygen and iron,” Fumagalli says. “That’s what tells us that this is primordial gas.”

The newfound gas clouds, as Fumagalli and his colleagues see them, existed about two billion years after the big bang, at an epoch of cosmic evolution known as redshift 3. (Redshift is a sort of cosmological distance measure, corresponding to the degree that light waves have been stretched on their trip across an expanding universe.) By that time the first generation of stars, initially comprising only the primordial light elements, had formed and were distributing the heavier elements they forged via nuclear fusion reactions into interstellar space.

But the new study shows that some nooks of the universe remained pristine long after stars had begun to spew heavy elements. “They have looked for these special corners of the universe, where things just haven’t been polluted yet,” says Massachusetts Institute of Technology astronomer Rob Simcoe, who did not contribute to the new study. “Everyplace else that we’ve looked in these environments, we do find these heavy elements.”

[div class=attrib]Read the entire article here.[end-div]

[div class=attrib]Image: Simulation by Ceverino, Dekel and Primack. Courtesy of Scientific American.[end-div]

In early 2010 a Japanese research team grew retina-like structures from a culture of mouse embryonic stem cells. Now, only a year later, the same team at the RIKEN Center for Developmental Biology announced their success in growing a much more complex structure following a similar process — a mouse pituitary gland. This is seen as another major step towards bioengineering replacement organs for human transplantation.

[div class=attrib]From Wired:[end-div]

Poet, essayist and playwright Todd Hearon grew up in North Carolina. He earned a PhD in editorial studies from Boston University. He is winner of a number of national poetry and playwriting awards including the 2007 Friends of Literature Prize and a Dobie Paisano Fellowship from the University of Texas at Austin.

Joy Harjo is an acclaimed poet, musician and noted teacher. Her poetry is grounded in the United States’ Southwest and often encompasses Native American stories and values.

We promise: there is no screeching embedded audio of someone slowly dragging a piece of chalk, or worse, fingernails, across a blackboard! Even the thought of this sound causes many to shudder. Why? Wired UK offers a plausible explanation.

The lowly incandescent light bulb continues to come under increasing threat. First came the fluorescent tube, then the compact fluorescent. More recently the LED (light-emitting diode) seems to be gaining ground. Now LED technology takes another leap forward with printed LED “light sheets”.

Our current educational process in one sentence: assume student is empty vessel; provide student with content; reward student for remembering and regurgitating content; repeat.

[div class=attrib]From Eurozine:[end-div]

Computer hardware reached (or plummeted to, depending upon your viewpoint) the level of commodity a while ago. And of course, some types of operating system platforms, software and applications have followed suit recently: think Platform as a Service (PaaS) and Software as a Service (SaaS). So, it should come as no surprise to see new services arise that try to match supply and demand, and profit in the process. Welcome to the “cloud brokerage”.

Tens of thousands of independent bookstores have disappeared from the United States and Europe over the last decade. Even mega-chains like Borders have fallen prey to monumental shifts in the distribution of ideas and content. The very notion of the physical book is under increasing threat from the accelerating momentum of digitalization.

Charles Fishman has a fascinating new book entitled The Big Thirst: The Secret Life and Turbulent Future of Water. In it Fishman examines the origins of water on our planet and postulates an all too probable future where water becomes an increasingly limited and precious resource.

The 2011 Nobel Prize in Physics was recently awarded to three scientists: Adam Riess, Saul Perlmutter and Brian Schmidt. Their computations and observations of a very specific type of exploding star upended decades of commonly accepted beliefs about our universe by showing that its expansion is accelerating.

This week, theDiagonal focuses its energies on that most precious of natural resources — water.