Deborah Blum’s story begins with Marie Curie’s analysis of a “strange energy” released from uranium ore and ends with the 2006 assassination of Russian dissident Alexander Litvinenko.

[div class=attrib]From Wired:[end-div]

In the late 19th century, a then-unknown chemistry student named Marie Curie was searching for a thesis subject. With encouragement from her husband, Pierre, she decided to study the strange energy released by uranium ores, a sizzle of power far greater than uranium alone could explain.

The results of that study are today among the most famous in the history of science. The Curies discovered not one but two new radioactive elements in their slurry of material (and Marie invented the word radioactivity to help explain them). One was the glowing element radium. The other, which burned brighter and briefer, she named polonium (from the Latin root, polonia) after her home country of Poland. In honor of that discovery, the Curies shared the 1903 Nobel Prize in Physics with their French colleague Henri Becquerel for his work with uranium.

Radium was always Marie Curie’s first love – “radium, my beautiful radium,” she used to call it. Her continued focus gained her a second Nobel Prize, in Chemistry, in 1911. (Her Nobel lecture was titled Radium and New Concepts in Chemistry.) It was also the higher-profile radium, embraced in a host of medical, industrial, and military uses, that first called attention to the health risks of radioactive elements. I’ve told some of that story here before in a look at the deaths and illnesses suffered by the “Radium Girls,” young women who in the 1920s painted watch-dial faces with radium-based luminous paint.

Polonium remained the unstable, mostly ignored step-child element of the story, less famous, less interesting, less useful than Curie’s beautiful radium. Until the last few years, that is. Until the reported 2006 assassination by polonium-210 of Russian spy turned dissident Alexander Litvinenko. And until the news this week, first reported by Al Jazeera, that surprisingly high levels of polonium-210 were detected by a Swiss laboratory in the clothes and other effects of the late Palestinian leader Yasser Arafat.

Arafat, 75, had been held for almost two years under an Israeli form of house arrest when he died in 2004 of a sudden wasting illness. His rapid deterioration led to a welter of conspiracy theories that he’d been poisoned, some accusing his political rivals and many more accusing Israel, which has steadfastly denied any such plot.

Recently (and for undisclosed reasons) his widow agreed to the forensic analysis of articles including clothes, a toothbrush, bed sheets, and his favorite kaffiyeh. Al Jazeera arranged for the analysis and took the materials to Europe for further study. After the University of Lausanne’s Institute of Radiation Physics released the findings, Suha Arafat asked that her husband’s body be exhumed and tested for polonium. Palestinian authorities have indicated that they may do so within the week.

And at this point, as we anticipate those results, it’s worth asking some questions about the use of a material like polonium as an assassination poison. Why, for instance, pick a poison that leaves such a durable trail of evidence behind? In the case of the Radium Girls, whom I mentioned earlier, scientists found that their bones were still hissing with radiation years after their deaths. In the case of Litvinenko, public health investigators found that he’d literally left a trail of radioactive residues across London, where he was living at the time of his death.

In what we might imagine as the clever world of covert killings, why would a messy element like polonium even be on the assassination list? To answer that, it helps to begin by stepping back to some of the details provided in the Curies’ seminal work. Both radium and polonium are links in a chain of radioactive decay (element changes due to particle emission) that begins with uranium. Polonium, which eventually decays to an isotope of lead, is one of the more unstable points in this chain, unstable enough that there are some 33 known variants (isotopes) of the element.

Of these, the best known and most abundant is the energetic isotope polonium-210, with its half-life of 138 days. Half-life refers to the time it takes for a radioactive element to burn through its energy supply, essentially the time it takes for activity to decrease by half. For comparison, the half-life of the uranium isotope U-235, which often features in weapon design, is 700 million years. In other words, polonium is a little blast furnace of radioactive energy. The speed of its decay means that eight years after Arafat’s death, it would probably be identified by its breakdown products. And it’s on that note – its life as a radioactive element – that it becomes interesting as an assassin’s weapon.
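
To put the 138-day half-life in concrete terms, here is a minimal sketch of the arithmetic. It assumes simple exponential decay and an average year of 365.25 days. The function and variable names are illustrative and not from the article.

```python
# Sketch: fraction of a polonium-210 sample left after a given time,
# assuming simple exponential decay. All names here are illustrative.

PO210_HALF_LIFE_DAYS = 138.0   # half-life quoted in the article
DAYS_PER_YEAR = 365.25         # assumption: average year length


def fraction_remaining(elapsed_days: float, half_life_days: float) -> float:
    """Return the fraction of the original sample that has not yet decayed."""
    return 0.5 ** (elapsed_days / half_life_days)


# Eight years, the gap between Arafat's death and the testing described above.
elapsed_days = 8 * DAYS_PER_YEAR
half_lives_elapsed = elapsed_days / PO210_HALF_LIFE_DAYS
remaining = fraction_remaining(elapsed_days, PO210_HALF_LIFE_DAYS)

print(f"Half-lives elapsed: {half_lives_elapsed:.1f}")
print(f"Fraction remaining: {remaining:.1e}")
```

Under these assumptions, eight years is about 21 half-lives, so well under one millionth of the original polonium-210 would remain. That is consistent with the point that investigators would be looking largely at its breakdown products.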

Like radium, polonium’s radiation is primarily in the form of alpha rays, the emission of alpha particles. Compared to other subatomic particles, alpha particles tend to be high energy and high mass. Their relatively larger mass means that they don’t penetrate as well as other forms of radiation. In fact, alpha particles barely penetrate the skin. And they can be stopped from even that by a piece of paper or protective clothing.

That may make them sound safe. It shouldn’t. It should just alert us that these are only really dangerous when they are inside the body. If a material emitting alpha radiation is swallowed or inhaled, there’s nothing benign about it. Scientists realized, for instance, that the reason the Radium Girls died of radiation poisoning was that they were lip-pointing their paintbrushes and swallowing radium-laced paint. The radioactive material was deposited in their bones, which literally crumbled. Radium, by the way, has a half-life of about 1,600 years, which means that it’s not in polonium’s league as an alpha emitter. How bad is this? By mass, polonium-210 is considered to be about 250,000 times more poisonous than hydrogen cyanide. Toxicologists estimate that an amount the size of a grain of salt could be fatal to the average adult.
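
The half-life comparison above can be turned into a rough estimate of decays per second per gram (specific activity). The sketch below is an illustration, not a toxicity calculation. It assumes the radium in question is radium-226, uses the mass numbers 210 and 226 as approximate molar masses, and takes a year as 365.25 days. The function name is hypothetical.

```python
import math

# Sketch: compare decays per second per gram for polonium-210 and radium-226.
# Assumptions (not from the article): mass numbers stand in for molar masses,
# and a year is taken as 365.25 days.

AVOGADRO = 6.02214076e23     # atoms per mole
SECONDS_PER_DAY = 86_400.0
DAYS_PER_YEAR = 365.25


def specific_activity_bq_per_gram(half_life_days: float,
                                  molar_mass_g_per_mol: float) -> float:
    """Return decays per second in one gram of a pure isotope."""
    decay_constant = math.log(2) / (half_life_days * SECONDS_PER_DAY)
    atoms_per_gram = AVOGADRO / molar_mass_g_per_mol
    return decay_constant * atoms_per_gram


po210 = specific_activity_bq_per_gram(138.0, 210.0)
ra226 = specific_activity_bq_per_gram(1600.0 * DAYS_PER_YEAR, 226.0)
activity_ratio = po210 / ra226

print(f"Po-210: {po210:.2e} decays per second per gram")
print(f"Ra-226: {ra226:.2e} decays per second per gram")
print(f"Ratio:  {activity_ratio:.0f}")
```

With these inputs, a gram of polonium-210 undergoes several thousand times as many decays per second as a gram of radium-226. This compares decay rates only. How much harm a given dose does also depends on how the body absorbs and retains each element.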

In other words, a victim would never taste a lethal dose in food or drink. In the case of Litvinenko, investigators believed that he received his dose of polonium-210 in a cup of tea, dosed during a meeting with two Russian agents. (Just as an aside, alpha particles tend not to set off radiation detectors, so polonium is relatively easy to smuggle from country to country.) Another assassin advantage is that illness comes on gradually, making it hard to pinpoint the event. Yet another advantage is that polonium poisoning is so rare that it’s not part of a standard toxics screen. In Litvinenko’s case, the poison wasn’t identified until shortly after his death. In Arafat’s case (if polonium-210 killed him, and that has not been established) it obviously wasn’t considered at the time. And finally, it gets the job done. “Once absorbed,” notes the U.S. Nuclear Regulatory Commission, “the alpha radiation can rapidly destroy major organs, DNA and the immune system.”

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image: Pierre and Marie Curie in the laboratory, Paris c1906. Courtesy of Wikipedia.[end-div]

Auditory neuroscientist Seth Horowitz guides us through the science of hearing and listening in his new book, “The Universal Sense: How Hearing Shapes the Mind.” He clarifies the important distinction between attentive listening with the mind and the more passive act of hearing, and laments the many modern distractions that threaten our ability to listen effectively.

With the election in the United States now decided, the dissection of the result is well underway. Perhaps the biggest winner of all is the science of big data. Yes, mathematical analysis of vast quantities of demographic and polling data won over the voodoo proclamations and gut-felt predictions of the punditocracy. Now, that’s a result truly worth celebrating.

Parents have long known that the sleep-wake cycles of their adolescent offspring are rather different to those of anyone else in the household.

Advances in quantum physics and in the associated realm of quantum information promise to revolutionize computing. Imagine a computer several trillions of times faster than present-day supercomputers. Well, that’s where we are heading.

Memory is a very useful cognitive tool. After all, where would we be if we had no recall of our family, friends, foods, words, tasks and dangers?

According to Star Trek’s fictional history, warp engines were invented in 2063. That gives us just over 50 years. While very unlikely based on our current technological prowess and general lack of understanding of the cosmos, warp engines are perhaps becoming just a little closer to being realized. But, please, no photon torpedoes!

Some recent experiments out of the University of Toronto show for the first time an anomaly in measurements predicted by Werner Heisenberg’s fundamental law of quantum mechanics, the Uncertainty Principle.

As children we all learn our abc’s; as adults very few ponder the ABC Conjecture in mathematics. The first is often a simple task of rote memorization; the second is a troublesome mathematical problem with a fiendishly complex solution (maybe).

The title could be mistaken for a dark and violent crime novel from the likes of (Stieg) Larsson, Nesbø, Sjöwall-Wahlöö, or Henning Mankell. But, this story is somewhat more mundane, though much more consequential. It’s a story about a Swedish cancer killer.

For the first time scientists have built a computer software model of an entire organism from its molecular building blocks. This allows the model to predict previously unobserved cellular biological processes and behaviors. While the organism in question is a simple bacterium, this represents another huge advance in computational biology.

[div class=attrib]From Scientific American:[end-div]

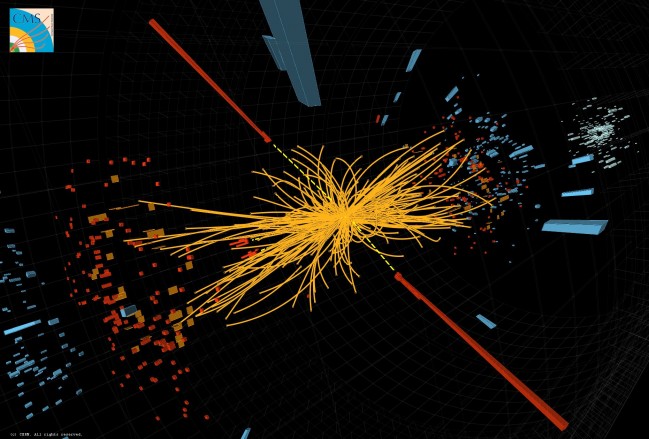

A fascinating article by Nick Lane, a leading researcher into the origins of life. Lane is a Research Fellow at University College London.

We think CDM sounds much more fun than LHC, a rather dry acronym for Large Hadron Collider.

[div class=attrib]From Scientific American:[end-div]