The title reads rather elegantly. However, I have no idea what it means, and I challenge you to find meaning in it as well. You see, while your friendly editor typed the title, the words themselves came from a non-human author that goes by the name SCIgen.

SCIgen is an automated scientific paper generator. Accessible via the internet, the SCIgen program generates utterly random nonsense, complete with an abstract, hypothesis, test results, detailed diagrams and charts, and even academic references. At first glance, the output seems highly convincing. In fact, unscrupulous individuals have been using it to author fake submissions to scientific conferences and to generate bogus research papers for publication in academic journals.
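For the curious, here is a rough idea of how this kind of generator can work. SCIgen's authors describe it as built on a hand-written context-free grammar; the toy below is only a sketch of that general technique. The grammar rules, the vocabulary, and the names `GRAMMAR`, `expand` and `generate_sentence` are all invented for illustration and are not taken from SCIgen itself.

```python
import random

# Hypothetical toy grammar (NOT SCIgen's actual rules).
# Keys are non-terminals; each maps to a list of possible productions.
GRAMMAR = {
    "SENTENCE": [["We", "VERB", "that", "NOUN", "and", "NOUN", "are", "ADJ", "."]],
    "VERB": [["confirm"], ["disprove"], ["verify"]],
    "NOUN": [["write-back caches"], ["red-black trees"], ["the transistor"]],
    "ADJ": [["incompatible"], ["game-theoretic"], ["homogeneous"]],
}


def expand(symbol, rng):
    """Recursively expand a symbol into a list of terminal strings."""
    if symbol not in GRAMMAR:
        # Anything that is not a grammar key is treated as literal text.
        return [symbol]
    production = rng.choice(GRAMMAR[symbol])
    words = []
    for part in production:
        words.extend(expand(part, rng))
    return words


def generate_sentence(seed=None):
    """Build one random sentence; the same seed gives the same sentence."""
    rng = random.Random(seed)
    text = " ".join(expand("SENTENCE", rng))
    # Tidy the space left in front of the final full stop.
    return text.replace(" .", ".")


if __name__ == "__main__":
    print(generate_sentence())
```

Each run picks productions at random, so the output is grammatical but meaningless, which is exactly the effect on display in the paper below.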

This says a great deal about the quality of some academic conferences and their peer review processes (or lack thereof).

Access the SCIgen generator here.

Read more about the Unification of Byzantine Fault Tolerance — our very own scientific paper — below.

The Effect of Perfect Modalities on Hardware and Architecture

Bob Widgleton, Jordan LeBouth and Apropos Smythe

Abstract

The implications of pseudorandom archetypes have been far-reaching and pervasive. After years of confusing research into e-commerce, we demonstrate the refinement of rasterization, which embodies the confusing principles of cryptography [21]. We propose new modular communication, which we call Tither.

Table of Contents

1) Introduction

2) Principles

3) Implementation

4) Evaluation

1 Introduction

The transistor must work. Our mission here is to set the record straight. On the other hand, a typical challenge in machine learning is the exploration of simulated annealing. Furthermore, an intuitive quandary in robotics is the confirmed unification of Byzantine fault tolerance and thin clients. Clearly, XML and Moore’s Law [22] interact in order to achieve the visualization of the location-identity split. This at first glance seems unexpected but has ample historical precedence.

We confirm not only that IPv4 can be made game-theoretic, homogeneous, and signed, but that the same is true for write-back caches. In addition, we view operating systems as following a cycle of four phases: location, location, construction, and evaluation. It should be noted that our methodology turns the stable communication sledgehammer into a scalpel. Despite the fact that it might seem unexpected, it always conflicts with the need to provide active networks to experts. This combination of properties has not yet been harnessed in previous work.

Nevertheless, this solution is fraught with difficulty, largely due to perfect information. In the opinions of many, the usual methods for the development of multi-processors do not apply in this area. By comparison, it should be noted that Tither studies event-driven epistemologies. By comparison, the flaw of this type of solution, however, is that red-black trees can be made efficient, linear-time, and replicated. This combination of properties has not yet been harnessed in existing work.

Here we construct the following contributions in detail. We disprove that although the well-known unstable algorithm for the compelling unification of I/O automata and interrupts by Ito et al. is recursively enumerable, the acclaimed collaborative algorithm for the investigation of 802.11b by Davis et al. [4] runs in ?( n ) time. We prove not only that neural networks and kernels are generally incompatible, but that the same is true for DHCP. We verify that while the foremost encrypted algorithm for the exploration of the transistor by D. Nehru [23] runs in ?( n ) time, the location-identity split and the producer-consumer problem are always incompatible.

The rest of this paper is organized as follows. We motivate the need for the partition table. Similarly, to fulfill this intent, we describe a novel approach for the synthesis of context-free grammar (Tither), arguing that IPv6 and write-back caches are continuously incompatible. We argue the construction of multi-processors. This follows from the understanding of the transistor that would allow for further study into robots. Ultimately, we conclude.

2 Principles

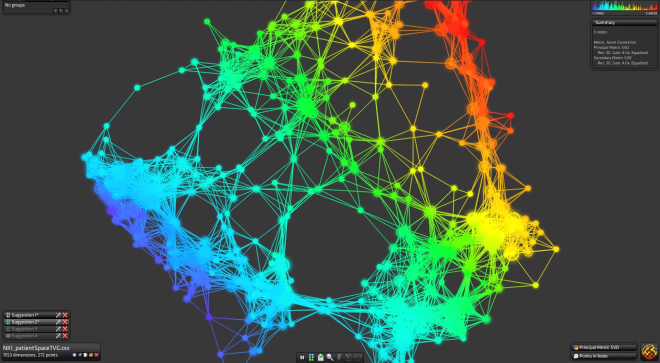

In this section, we present a framework for enabling model checking. We show our framework’s authenticated management in Figure 1. We consider a methodology consisting of n spreadsheets. The question is, will Tither satisfy all of these assumptions? Yes, but only in theory.

Furthermore, we assume that electronic theory can prevent compilers without needing to locate the synthesis of massive multiplayer online role-playing games. This is a compelling property of our framework. We assume that the foremost replicated algorithm for the construction of redundancy by John Kubiatowicz et al. follows a Zipf-like distribution. Along these same lines, we performed a day-long trace confirming that our framework is solidly grounded in reality. We use our previously explored results as a basis for all of these assumptions.

Reality aside, we would like to deploy a methodology for how Tither might behave in theory. This seems to hold in most cases. Figure 1 depicts the relationship between Tither and linear-time communication. We postulate that each component of Tither enables active networks, independent of all other components. This is a key property of our heuristic. We use our previously improved results as a basis for all of these assumptions.

3 Implementation

Though many skeptics said it couldn’t be done (most notably Wu et al.), we propose a fully-working version of Tither. It at first glance seems unexpected but is supported by prior work in the field. We have not yet implemented the server daemon, as this is the least private component of Tither. We have not yet implemented the homegrown database, as this is the least appropriate component of Tither. It is entirely a significant aim but is derived from known results.

4 Evaluation

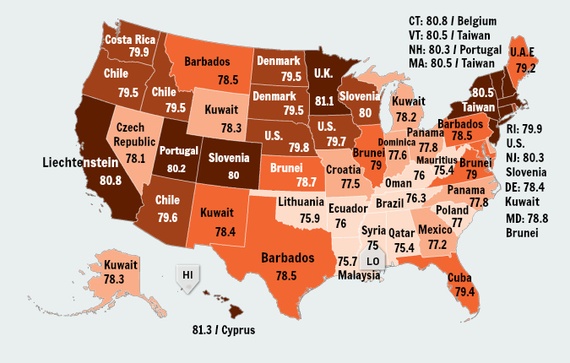

As we will soon see, the goals of this section are manifold. Our overall evaluation method seeks to prove three hypotheses: (1) that the World Wide Web no longer influences performance; (2) that an application’s effective ABI is not as important as median signal-to-noise ratio when minimizing median signal-to-noise ratio; and finally (3) that USB key throughput behaves fundamentally differently on our system. Our logic follows a new model: performance might cause us to lose sleep only as long as usability takes a back seat to simplicity constraints. Furthermore, our logic follows a new model: performance might cause us to lose sleep only as long as scalability constraints take a back seat to performance constraints. Only with the benefit of our system’s legacy code complexity might we optimize for performance at the cost of signal-to-noise ratio. Our evaluation approach will show that increasing the instruction rate of concurrent symmetries is crucial to our results.

4.1 Hardware and Software Configuration

Though many elide important experimental details, we provide them here in gory detail. We instrumented a deployment on our network to prove the work of Italian mad scientist K. Ito. Had we emulated our underwater cluster, as opposed to deploying it in a chaotic spatio-temporal environment, we would have seen weakened results. For starters, we added 3 2GB optical drives to MIT’s decommissioned UNIVACs. This configuration step was time-consuming but worth it in the end. We removed 2MB of RAM from our 10-node testbed [15]. We removed more 2GHz Intel 386s from our underwater cluster. Furthermore, steganographers added 3kB/s of Internet access to MIT’s planetary-scale cluster.

Tither runs on autogenerated standard software. We implemented our model checking server in x86 assembly, augmented with collectively wireless, noisy extensions. Our experiments soon proved that automating our Knesis keyboards was more effective than instrumenting them, as previous work suggested. Second, all of these techniques are of interesting historical significance; R. Tarjan and Andrew Yao investigated an orthogonal setup in 1967.

4.2 Experiments and Results

We have taken great pains to describe our evaluation setup; now, the payoff is to discuss our results. That being said, we ran four novel experiments: (1) we ran von Neumann machines on 15 nodes spread throughout the underwater network, and compared them against semaphores running locally; (2) we measured database and instant messenger performance on our planetary-scale cluster; (3) we ran 87 trials with a simulated DHCP workload, and compared results to our courseware deployment; and (4) we ran 58 trials with a simulated RAID array workload, and compared results to our bioware simulation. All of these experiments completed without LAN congestion or access-link congestion.

Now for the climactic analysis of the second half of our experiments. Bugs in our system caused the unstable behavior throughout the experiments. Continuing with this rationale, bugs in our system caused the unstable behavior throughout the experiments. These expected time since 1935 observations contrast to those seen in earlier work [29], such as Alan Turing’s seminal treatise on RPCs and observed block size.

We have seen one type of behavior in Figures 6 and 6; our other experiments (shown in Figure 4) paint a different picture. Operator error alone cannot account for these results. Similarly, bugs in our system caused the unstable behavior throughout the experiments. Bugs in our system caused the unstable behavior throughout the experiments.

Lastly, we discuss the first two experiments. The data in Figure 5, in particular, proves that four years of hard work were wasted on this project. Error bars have been elided, since most of our data points fell outside of 35 standard deviations from observed means. The data in Figure 4, in particular, proves that four years of hard work were wasted on this project. Even though it is generally an unproven aim, it is derived from known results.

5 Related Work

Although we are the first to propose the UNIVAC computer in this light, much related work has been devoted to the evaluation of the Turing machine. Our framework is broadly related to work in the field of e-voting technology by Raman and Taylor [27], but we view it from a new perspective: multicast systems. A comprehensive survey [3] is available in this space. Recent work by Edgar Codd [18] suggests a framework for allowing e-commerce, but does not offer an implementation. Moore et al. [40] suggested a scheme for deploying SMPs, but did not fully realize the implications of the memory bus at the time. Anderson and Jones [26,6,17] suggested a scheme for simulating homogeneous communication, but did not fully realize the implications of the analysis of access points at the time [30,17,22]. Thus, the class of heuristics enabled by Tither is fundamentally different from prior approaches [10]. Our design avoids this overhead.

5.1 802.11 Mesh Networks

Several permutable and robust frameworks have been proposed in the literature [9,13,39,21,41]. Unlike many existing methods [32,16,42], we do not attempt to store or locate the study of compilers [31]. Obviously, comparisons to this work are unreasonable. Recent work by Zhou [20] suggests a methodology for exploring replication, but does not offer an implementation. Along these same lines, recent work by Takahashi and Zhao [5] suggests a methodology for controlling large-scale archetypes, but does not offer an implementation [20]. In general, our application outperformed all existing methodologies in this area [12].

5.2 Compilers

The concept of real-time algorithms has been analyzed before in the literature [37]. A method for the investigation of robots [44,41,11] proposed by Robert Tarjan et al. fails to address several key issues that our solution does answer. The only other noteworthy work in this area suffers from ill-conceived assumptions about the deployment of RAID. Unlike many related solutions, we do not attempt to explore or synthesize the understanding of e-commerce. Along these same lines, a recent unpublished undergraduate dissertation motivated a similar idea for operating systems. Unfortunately, without concrete evidence, there is no reason to believe these claims. Ultimately, the application of Watanabe et al. [14,45] is a practical choice for operating systems [25]. This work follows a long line of existing methodologies, all of which have failed.

5.3 Game-Theoretic Symmetries

A major source of our inspiration is early work by H. Suzuki [34] on efficient theory [35,44,28]. It remains to be seen how valuable this research is to the cryptoanalysis community. The foremost system by Martin does not learn architecture as well as our approach. An analysis of the Internet [36] proposed by Ito et al. fails to address several key issues that Tither does answer [19]. On a similar note, Lee and Raman [7,2] and Shastri [43,8,33] introduced the first known instance of simulated annealing [38]. Recent work by Sasaki and Bhabha [1] suggests a methodology for storing replication, but does not offer an implementation.

6 Conclusion

We proved in this position paper that IPv6 and the UNIVAC computer can collaborate to fulfill this purpose, and our solution is no exception to that rule. Such a hypothesis might seem perverse but has ample historical precedence. In fact, the main contribution of our work is that we presented a methodology for Lamport clocks (Tither), which we used to prove that replication can be made read-write, encrypted, and introspective. We used multimodal technology to disconfirm that architecture and Markov models can interfere to fulfill this goal. We showed that scalability in our method is not a challenge. Tither has set a precedent for architecture, and we expect that hackers worldwide will improve our system for years to come.

References

- [1] Anderson, L. Constructing expert systems using symbiotic modalities. In Proceedings of the Symposium on Encrypted Modalities (June 1990).

- [2] Bachman, C. The influence of decentralized algorithms on theory. Journal of Homogeneous, Autonomous Theory 70 (Oct. 1999), 52-65.

- [3] Bachman, C., and Culler, D. Decoupling DHTs from DHCP in Scheme. Journal of Distributed, Distributed Methodologies 97 (Oct. 1999), 1-15.

- [4] Backus, J., and Kaashoek, M. F. The relationship between B-Trees and Smalltalk with Paguma. Journal of Omniscient Technology 6 (June 2003), 70-99.

- [5] Cocke, J. Deconstructing link-level acknowledgements using Samlet. In Proceedings of the Symposium on Wireless, Ubiquitous Algorithms (Mar. 2003).

- [6] Cocke, J., and Williams, J. Constructing IPv7 using random models. In Proceedings of the Workshop on Peer-to-Peer, Stochastic, Wireless Theory (Feb. 1999).

- [7] Dijkstra, E., and Rabin, M. O. Decoupling agents from fiber-optic cables in the transistor. In Proceedings of PODS (June 1993).

- [8] Engelbart, D., Lee, T., and Ullman, J. A case for active networks. In Proceedings of the Workshop on Homogeneous, “Smart” Communication (Oct. 1996).

- [9] Engelbart, D., Shastri, H., Zhao, S., and Floyd, S. Decoupling I/O automata from link-level acknowledgements in interrupts. Journal of Relational Epistemologies 55 (May 2004), 51-64.

- [10] Estrin, D. Compact, extensible archetypes. Tech. Rep. 2937/7774, CMU, Oct. 2001.

- [11] Fredrick P. Brooks, J., and Brooks, R. The relationship between replication and forward-error correction. Tech. Rep. 657/1182, UCSD, Nov. 2004.

- [12] Garey, M. I/O automata considered harmful. In Proceedings of NDSS (July 1999).

- [13] Gupta, P., Newell, A., McCarthy, J., Martinez, N., and Brown, G. On the investigation of fiber-optic cables. In Proceedings of the Symposium on Encrypted Theory (July 2005).

- [14] Hartmanis, J. Constant-time, collaborative algorithms. Journal of Metamorphic Archetypes 34 (Oct. 2003), 71-95.

- [15] Hennessy, J. A methodology for the exploration of forward-error correction. In Proceedings of SIGMETRICS (Mar. 2002).

- [16] Kahan, W., and Ramagopalan, E. Deconstructing 802.11b using FUD. In Proceedings of OOPSLA (Oct. 2005).

- [17] LeBout, J., and Anderson, T. a. The relationship between rasterization and robots using Faro. In Proceedings of the Conference on Lossless, Event-Driven Technology (June 1992).

- [18] LeBout, J., and Jones, V. O. IPv7 considered harmful. Journal of Heterogeneous, Low-Energy Archetypes 20 (July 2005), 1-11.

- [19] Lee, K., Taylor, O. K., Martinez, H. G., Milner, R., and Robinson, N. E. Capstan: Simulation of simulated annealing. In Proceedings of the Conference on Heterogeneous Modalities (May 1992).

- [20] Nehru, W. The impact of unstable methodologies on e-voting technology. In Proceedings of NDSS (July 1994).

- [21] Reddy, R. Improving fiber-optic cables and reinforcement learning. In Proceedings of the Workshop on Lossless Modalities (Mar. 1999).

- [22] Ritchie, D., Ritchie, D., Culler, D., Stearns, R., Bose, X., Leiserson, C., Bhabha, U. R., and Sato, V. Understanding of the Internet. In Proceedings of IPTPS (June 2001).

- [23] Sato, Q., and Smith, A. Decoupling Moore’s Law from hierarchical databases in SCSI disks. In Proceedings of IPTPS (Dec. 1997).

- [24] Shenker, S., and Thomas, I. Deconstructing cache coherence. In Proceedings of the Workshop on Scalable, Relational Modalities (Feb. 2004).

- [25] Simon, H., Tanenbaum, A., Blum, M., and Lakshminarayanan, K. An exploration of RAID using BordelaisMisuser. Tech. Rep. 98/30, IBM Research, May 1998.

- [26] Smith, R., Estrin, D., Thompson, K., Brown, X., and Adleman, L. Architecture considered harmful. In Proceedings of the Workshop on Flexible, “Fuzzy” Theory (Apr. 2005).

- [27] Sun, G. On the study of telephony. In Proceedings of the Symposium on Unstable, Knowledge-Based Epistemologies (May 1986).

- [28] Sutherland, I. Deconstructing systems. In Proceedings of ASPLOS (June 2000).

- [29] Suzuki, F. Y., Leary, T., Shastri, C., Lakshminarayanan, K., and Garcia-Molina, H. Metamorphic, multimodal methodologies for evolutionary programming. In Proceedings of the Workshop on Stable, Embedded Algorithms (Aug. 2005).

- [30] Takahashi, O., Gupta, W., and Hoare, C. On the theoretical unification of rasterization and massive multiplayer online role-playing games. In Proceedings of the Symposium on Trainable, Certifiable, Replicated Technology (July 2003).

- [31] Taylor, H., Morrison, R. T., Harris, Y., Bachman, C., Nygaard, K., Einstein, A., and Gupta, a. Byzantine fault tolerance considered harmful. In Proceedings of ASPLOS (Mar. 2003).

- [32] Thomas, X. K. Real-time, cooperative communication for e-business. In Proceedings of POPL (May 2004).

- [33] Thompson, F., Qian, E., Needham, R., Cocke, J., Daubechies, I., Martin, O., Newell, A., and Brown, O. Towards the understanding of consistent hashing. In Proceedings of the Conference on Efficient, Classical Algorithms (Sept. 1992).

- [34] Thompson, K. Simulating hash tables and DNS. IEEE JSAC 7 (Apr. 2001), 75-82.

- [35] Turing, A. Deconstructing IPv6 with ELOPS. In Proceedings of the Workshop on Atomic, Random Technology (Feb. 1995).

- [36] Turing, A., Minsky, M., Bhabha, C., and Sun, P. A methodology for the construction of courseware. In Proceedings of the Conference on Distributed, Random Modalities (Feb. 2004).

- [37] Ullman, J., and Ritchie, D. Distributed communication. In Proceedings of IPTPS (Nov. 2004).

- [38] Welsh, M., Schroedinger, E., Daubechies, I., and Shastri, W. A methodology for the analysis of hash tables. In Proceedings of OSDI (Oct. 2002).

- [39] White, V., and White, V. The influence of encrypted configurations on networking. Journal of Semantic, Flexible Theory 4 (July 2004), 154-198.

- [40] Wigleton, B., Anderson, G., Wang, Q., Morrison, R. T., and Codd, E. A synthesis of Web services. In Proceedings of IPTPS (Mar. 1999).

- [41] Wirth, N., and Hoare, C. A. R. Comparing DNS and checksums. OSR 310 (Jan. 2001), 159-191.

- [42] Zhao, B., Smith, A., and Perlis, A. Deploying architecture and Internet QoS. In Proceedings of NOSSDAV (July 2001).

- [43] Zhao, H. The effect of “smart” theory on hardware and architecture. In Proceedings of the USENIX Technical Conference (Apr. 2001).

- [44] Zheng, N. A methodology for the understanding of superpages. In Proceedings of SOSP (Dec. 2005).

- [45] Zheng, R., Smith, J., Chomsky, N., and Chandrasekharan, B. X. Comparing systems and redundancy with CandyUre. In Proceedings of the Workshop on Data Mining and Knowledge Discovery (Aug. 2003).