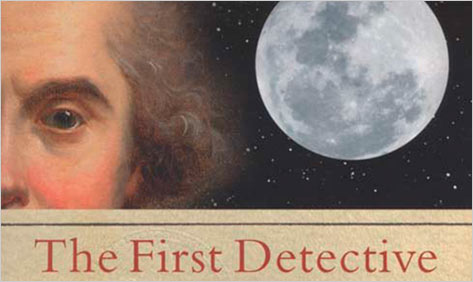

A new book by James Morton examines the life and times of cross-dressing burglar, prison-escapee and snitch turned super-detective Eugène-François Vidocq.

[div class=attrib]From The Barnes & Noble Review:[end-div]

The daring costumed escapes and bedsheet-rope prison breaks of the old romances weren’t merely creaky plot devices; they were also the objective correlatives of the lost politics of early modern Europe. Not yet susceptible to legislative amelioration, rules and customs that seemed both indefensible and unassailable had to be vaulted over like collapsing bridges or tunneled under like manor walls. Not only fictional musketeers but such illustrious figures as the young Casanova and the philosopher Jean-Jacques Rousseau spent their early years making narrow escapes from overlapping orthodoxies, swimming moats to marriages of convenience and digging their way out of prisons of privilege by dressing in drag or posing as noblemen’s sons. If one ran afoul of the local clergy or some aristocratic cuckold, there were always new bishops and magistrates to charm in the next diocese or département.

In 1775–roughly a generation after the exploits of Rousseau and Casanova–a prosperous baker’s son named Eugène-François Vidocq was born in Arras, in northern France. Indolent and adventuresome, he embarked upon a career that in its early phase looked even more hapless and disastrous than those of his illustrious forebears. An indifferent soldier in the chaotic, bloody interregnum of revolutionary France, Vidocq quickly fell into petty crime (for a time he assumed the name Rousseau as an alias and nom de guerre). He was a hapless housebreaker and a credulous co-conspirator, and his criminal misadventures were equaled only by his skill at escaping from the dungeons and bagnes that passed for a penal system in the pre-Napoleonic era.

By 1809, his canniness as an informer landed him a job with the police; with his old criminal comrades as willing foot soldiers, Vidocq organized a brigade de sûreté, a unit of plainclothes police, which in 1813 Napoleon made an official organ of state security. Throughout his subsequent career he would lay much of the foundation of modern policing, and may be considered a forebear not only to the Dupins and the Holmes of modern detective literature but of swashbuckling, above-the-law policemen like Eliot Ness and J. Edgar Hoover as well.

[div class=attrib]More from the source here.[end-div]

[div class=attrib]From the New Scientist:[end-div]

[div class=attrib]From Institute of Physics:[end-div]

[div class=attrib]Bjørn Lomborg for Project Syndicate:[end-div]

Imagine a world without books; you’d have to commit useful experiences, narratives and data to handwritten form and memory. Imagine a world without the internet and real-time search; you’d have to rely on a trusted expert or a printed dictionary to find answers to your questions. Imagine a world without the written word; you’d have to revert to memory and oral tradition to pass on meaningful life lessons and stories.

One of the most fascinating and (in)famous experiments in social psychology began in the bowels of Stanford University 40 years ago next month. The experiment intended to evaluate how people react to being powerless. However, on conclusion it took a broader look at role assignment and reaction to authority.

[div class=attrib]From Salon:[end-div]

[div class=attrib]From Frank Jacobs / BigThink:[end-div]

Hilarious and disturbing. I suspect Jon Ronson would strike a couple of checkmarks in the Hare PCL-R Checklist against my name for finding his latest work both hilarious and disturbing. Would this, perhaps, make me a psychopath?

![John William Waterhouse [Public domain], via Wikimedia Commons](http://upload.wikimedia.org/wikipedia/commons/thumb/6/6b/John_William_Waterhouse_Echo_And_Narcissus.jpg/640px-John_William_Waterhouse_Echo_And_Narcissus.jpg) [div class=attrib]Echo and Narcissus, John William Waterhouse [Public domain], via Wikimedia Commons[end-div]

[div class=attrib]Let America Be America Again, Langston Hughes[end-div]

[div class=attrib]From Evolutionary Philosophy:[end-div]

[div class=attrib]From Scientific American:[end-div]
