[div class=attrib]From the Wall Street Journal:[end-div]

“What was he thinking?” It’s the familiar cry of bewildered parents trying to understand why their teenagers act the way they do.

How does the boy who can thoughtfully explain the reasons never to drink and drive end up in a drunken crash? Why does the girl who knows all about birth control find herself pregnant by a boy she doesn’t even like? What happened to the gifted, imaginative child who excelled through high school but then dropped out of college, drifted from job to job and now lives in his parents’ basement?

Adolescence has always been troubled, but for reasons that are somewhat mysterious, puberty is now kicking in at an earlier and earlier age. A leading theory points to changes in energy balance as children eat more and move less.

At the same time, first with the industrial revolution and then even more dramatically with the information revolution, children have come to take on adult roles later and later. Five hundred years ago, Shakespeare knew that the emotionally intense combination of teenage sexuality and peer-induced risk could be tragic—witness “Romeo and Juliet.” But, on the other hand, if not for fate, 13-year-old Juliet would have become a wife and mother within a year or two.

Our Juliets (as parents longing for grandchildren will recognize with a sigh) may experience the tumult of love for 20 years before they settle down into motherhood. And our Romeos may be poetic lunatics under the influence of Queen Mab until they are well into graduate school.

What happens when children reach puberty earlier and adulthood later? The answer is: a good deal of teenage weirdness. Fortunately, developmental psychologists and neuroscientists are starting to explain the foundations of that weirdness.

The crucial new idea is that there are two different neural and psychological systems that interact to turn children into adults. Over the past two centuries, and even more over the past generation, the developmental timing of these two systems has changed. That, in turn, has profoundly changed adolescence and produced new kinds of adolescent woe. The big question for anyone who deals with young people today is how we can go about bringing these cogs of the teenage mind into sync once again.

The first of these systems has to do with emotion and motivation. It is very closely linked to the biological and chemical changes of puberty and involves the areas of the brain that respond to rewards. This is the system that turns placid 10-year-olds into restless, exuberant, emotionally intense teenagers, desperate to attain every goal, fulfill every desire and experience every sensation. Later, it turns them back into relatively placid adults.

Recent studies in the neuroscientist B.J. Casey’s lab at Cornell University suggest that adolescents aren’t reckless because they underestimate risks, but because they overestimate rewards—or, rather, find rewards more rewarding than adults do. The reward centers of the adolescent brain are much more active than those of either children or adults. Think about the incomparable intensity of first love, the never-to-be-recaptured glory of the high-school basketball championship.

What teenagers want most of all are social rewards, especially the respect of their peers. In a recent study by the developmental psychologist Laurence Steinberg at Temple University, teenagers did a simulated high-risk driving task while they were lying in an fMRI brain-imaging machine. The reward system of their brains lighted up much more when they thought another teenager was watching what they did—and they took more risks.

From an evolutionary point of view, this all makes perfect sense. One of the most distinctive evolutionary features of human beings is our unusually long, protected childhood. Human children depend on adults for much longer than those of any other primate. That long protected period also allows us to learn much more than any other animal. But eventually, we have to leave the safe bubble of family life, take what we learned as children and apply it to the real adult world.

Becoming an adult means leaving the world of your parents and starting to make your way toward the future that you will share with your peers. Puberty not only turns on the motivational and emotional system with new force, it also turns it away from the family and toward the world of equals.

[div class=attrib]Read more here.[end-div]

Author Susan Cain reviews her intriguing book, “Quiet: The Power of Introverts,” in an interview with Gareth Cook over at Mind Matters / Scientific American.

theDiagonal has carried several recent articles (

A popular stereotype suggests that we become increasingly conservative in our values as we age. Thus, one would expect that older voters would be more likely to vote for Republican candidates. However, a recent social study debunks this view.

A group of new research studies shows that our left- or right-handedness shapes our perception of “goodness” and “badness”.

Once in a while a photographer comes along with a simple yet thoroughly new perspective. Japanese artist Photographer Hal fits this description. His images of young Japanese in a variety of contorted and enclosed situations are sometimes funny and disturbing, but certainly different and provocative.

[div class=attrib]From the New Scientist:[end-div]

The social standing of atheists seems to be on the rise. Back in December we

Seventeenth-century polymath Blaise Pascal had it right when he remarked, “Distraction is the only thing that consoles us for our miseries, and yet it is itself the greatest of our miseries.”

Let’s face it, taking money out of politics in the United States, especially since the 2010 Supreme Court Decision (

Over the last 40 years or so, physicists and cosmologists have sought to construct a single grand theory that describes our entire universe, from the subatomic soup that makes up particles and describes all forces to the vast constructs of our galaxies, and everything in between and beyond. Yet a major stumbling block has been how to bring together the quantum theories that have so successfully described, and predicted, the microscopic world with our current understanding of gravity. String theory is one such attempt to develop a unified theory of everything, but it remains jumbled with many possible solutions and is currently beyond experimental verification.

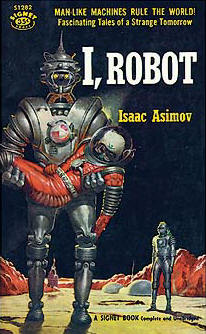

Fans of science fiction and Isaac Asimov in particular may recall his three laws of robotics:
