Simon Critchley, professor of philosophy, continues his serialized analysis of Philip K. Dick. Part I first appeared here. Part II examines the events around 2-3-74 that led to Dick's 8,000-page Gnostic treatise "Exegesis".

[div class=attrib]From the New York Times:[end-div]

In the previous post, we looked at the consequences and possible philosophic import of the events of February and March of 1974 (also known as 2-3-74) in the life and work of Philip K. Dick, a period in which a dose of sodium pentothal, a light-emitting fish pendant and decades of fiction writing and quasi-philosophic activity came together in a revelation that led to Dick's 8,000-page "Exegesis."

So, what is the nature of the true reality that Dick claims to have intuited during the psychedelic visions of 2-3-74? Does it unwind into mere structureless ranting and raving, or does it suggest some tradition of thought or belief? I would argue the latter. This is where things admittedly get a little weirder in an already weird universe, so hold on tight.

In the very first lines of "Exegesis" Dick writes, "We see the Logos addressing the many living entities." Logos is an important concept that litters the pages of "Exegesis." It is a word with a wide variety of meanings in ancient Greek, one of which is indeed "word." It can also mean speech, reason (in Latin, ratio) or giving an account of something. For Heraclitus, to whom Dick frequently refers, logos is the universal law that governs the cosmos, a law of which most human beings are somnolently ignorant. Dick certainly has this latter meaning in mind, but, most important, logos refers to the opening of John's Gospel, "In the beginning was the word" (logos), where the word becomes flesh in the person of Christ.

But the core of Dick’s vision is not quite Christian in the traditional sense; it is Gnostical: it is the mystical intellection, at its highest moment a fusion with a transmundane or alien God who is identified with logos and who can communicate with human beings in the form of a ray of light or, in Dick’s case, hallucinatory visions.

There is a tension throughout "Exegesis" between a monistic view of the cosmos (where there is just one substance in the universe, which can be seen in Dick's references to Spinoza's idea of God as nature, Whitehead's idea of reality as process and Hegel's dialectic where "the true is the whole") and a dualistic or Gnostical view of the cosmos, with two cosmic forces in conflict, one malevolent and the other benevolent. The way I read Dick, the latter view wins out. This means that the visible, phenomenal world is fallen and indeed a kind of prison cell, cage or cave.

Christianity, lest it be forgotten, is a metaphysical monism where it is the obligation of every Christian to love every aspect of creation – even the foulest and smelliest – because it is the work of God. Evil is nothing substantial because if it were it would have to be caused by God, who is by definition good. Against this, Gnosticism declares a radical dualism between the false God who created this world – who is usually called the “demiurge” – and the true God who is unknown and alien to this world. But for the Gnostic, evil is substantial and its evidence is the world. There is a story of a radical Gnostic who used to wash himself in his own saliva in order to have as little contact as possible with creation. Gnosticism is the worship of an alien God by those alienated from the world.

The novelty of Dick's Gnosticism is that the divine is alleged to communicate with us through information. This is a persistent theme in Dick, and he refers to the universe as information and even to Christ as information. Such information has a kind of electrostatic life connected to the theory of what he calls orthogonal time. The latter is a rich and strange idea of time that is completely at odds with the standard, linear conception, which goes back to Aristotle, as a sequence of now-points extending from the future through the present and into the past. Dick explains orthogonal time as a circle that contains everything rather than a line both of whose ends disappear in infinity. In an arresting image, Dick claims that orthogonal time contains, "Everything which was, just as grooves on an LP contain that part of the music which has already been played; they don't disappear after the stylus tracks them."

It is like that seemingly endless final chord in the Beatles’ “A Day in the Life” that gathers more and more momentum and musical complexity as it decays. In other words, orthogonal time permits total recall.

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]From Anthropology in Practice:[end-div]

[div class=attrib]From Anthropology in Practice:[end-div] No surprise. Women and men use online social networks differently. A new study of online behavior by researchers in Vienna, Austria, shows that the sexes organize their networks very differently and for different reasons.

No surprise. Women and men use online social networks differently. A new study of online behavior by researchers in Vienna, Austria, shows that the sexes organize their networks very differently and for different reasons. No, not Ted Nugent or Ted Koppel or Ted Turner; we are talking about the TED.

No, not Ted Nugent or Ted Koppel or Ted Turner; we are talking about the TED. It takes no expert neuroscientist, anthropologist or evolutionary biologist to recognize that human evolution has probably stalled. After all, one only needs to observe our obsession with reality TV. Yes, evolution screeched to a halt around 1999, when reality TV hit critical mass in the mainstream public consciousness. So, what of evolution?

It takes no expert neuroscientist, anthropologist or evolutionary biologist to recognize that human evolution has probably stalled. After all, one only needs to observe our obsession with reality TV. Yes, evolution screeched to a halt around 1999, when reality TV hit critical mass in the mainstream public consciousness. So, what of evolution?

Professor of philosophy Simon Critchley has an insightful examination (serialized) of Philip K. Dick’s writings. Philip K. Dick had a tragically short, but richly creative writing career. Since his death twenty years ago, many of his novels have profoundly influenced contemporary culture.

Professor of philosophy Simon Critchley has an insightful examination (serialized) of Philip K. Dick’s writings. Philip K. Dick had a tragically short, but richly creative writing career. Since his death twenty years ago, many of his novels have profoundly influenced contemporary culture.

Professor of philosopher Shelly Kagan has an interesting take on death. After all, how bad can something be for you if you’re not alive to experience it?

Professor of philosopher Shelly Kagan has an interesting take on death. After all, how bad can something be for you if you’re not alive to experience it? Christopher Mims over at the Technology Review revisits a recent study of our social networks, both real-world and online. It’s startling to see the growth in our social isolation despite the corresponding growth in technologies that increase our ability to communicate and interact with one another. Is the suburbanization of our species to blame, and can Facebook save us?

Christopher Mims over at the Technology Review revisits a recent study of our social networks, both real-world and online. It’s startling to see the growth in our social isolation despite the corresponding growth in technologies that increase our ability to communicate and interact with one another. Is the suburbanization of our species to blame, and can Facebook save us?

The practical science behind quantum computers continues to make exciting progress. Quantum computers promise, in theory, immense gains in power and speed through the use of atomic scale parallel processing.

The practical science behind quantum computers continues to make exciting progress. Quantum computers promise, in theory, immense gains in power and speed through the use of atomic scale parallel processing. An insightful opinion on the benefits and perils of nanotechnology from essayist and naturalist, Diane Ackerman.

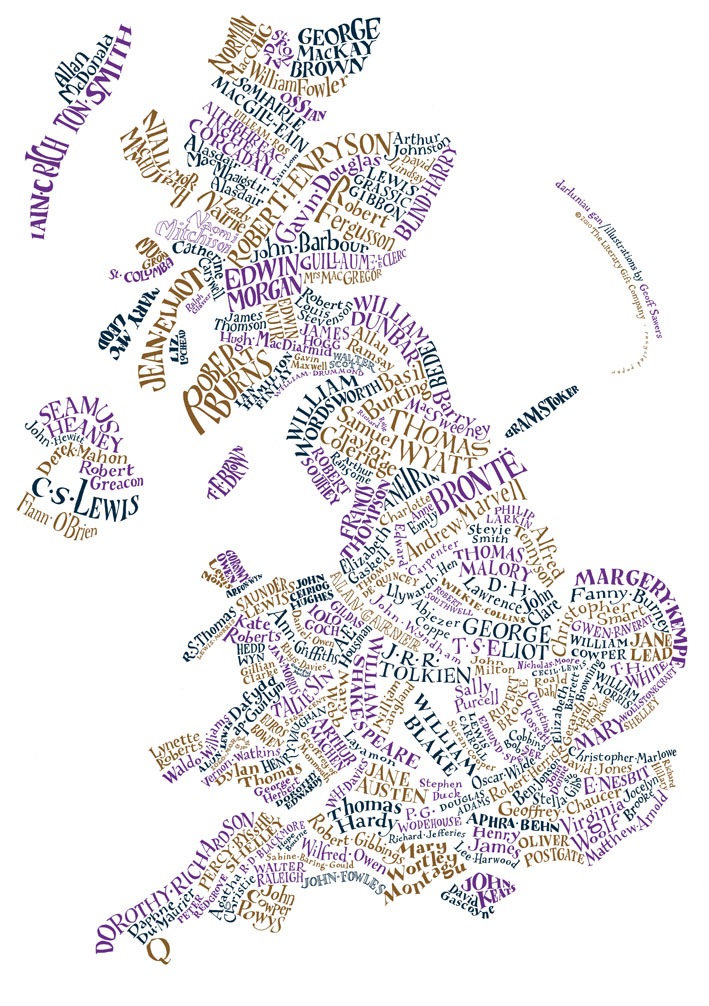

An insightful opinion on the benefits and perils of nanotechnology from essayist and naturalist, Diane Ackerman. May the Fourth was Star Wars Day. Why? Say, “May the Fourth” slowly while pretending to lisp slightly, and you’ll understand. Appropriately, Matt Cresswen over at the Guardian took this day to review the growing Jedi religion in the UK.

May the Fourth was Star Wars Day. Why? Say, “May the Fourth” slowly while pretending to lisp slightly, and you’ll understand. Appropriately, Matt Cresswen over at the Guardian took this day to review the growing Jedi religion in the UK. Google has been variously praised and derided for its corporate manta, “Don’t Be Evil”. For those who like to believe that Google has good intentions recent events strain these assumptions. The company was found to have been snooping on and collecting data from personal Wi-Fi routers. Is this the case of a lone-wolf or a corporate strategy?

Google has been variously praised and derided for its corporate manta, “Don’t Be Evil”. For those who like to believe that Google has good intentions recent events strain these assumptions. The company was found to have been snooping on and collecting data from personal Wi-Fi routers. Is this the case of a lone-wolf or a corporate strategy? [div class=attrib]From Scientific American:[end-div]

[div class=attrib]From Scientific American:[end-div]

A small, but growing, idea in theoretical physics and cosmology is that spacetime may be emergent. That is, spacetime emerges from something much more fundamental, in much the same way that our perception of temperature emerges from the motion and characteristics of underlying particles.

A small, but growing, idea in theoretical physics and cosmology is that spacetime may be emergent. That is, spacetime emerges from something much more fundamental, in much the same way that our perception of temperature emerges from the motion and characteristics of underlying particles.