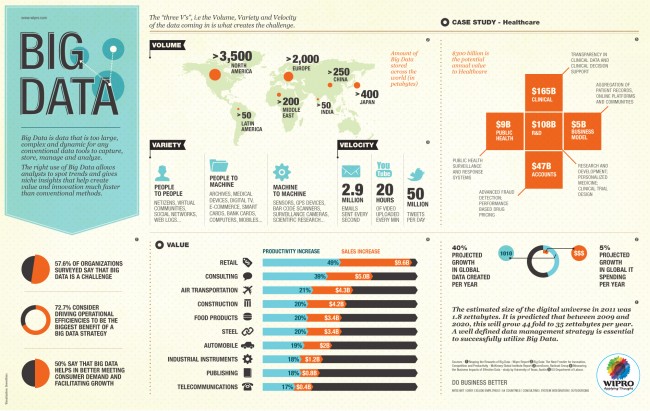

First, a definition. Big data: typically a collection of large and complex datasets that are too cumbersome to process and analyze using traditional computational approaches and database applications. Usually the big data moniker will be accompanied by an IT vendor’s pitch for a shiny new software (and possibly hardware) solution able to crunch through petabytes (one petabyte is a million gigabytes) of data and produce a visualizable result that mere mortals can decipher.

Many companies see big data and related solutions as a panacea for a range of business challenges: customer service, medical diagnostics, product development, shipping and logistics, climate change studies, genomic analysis and so on. A great example was the 2012 U.S. presidential election. Many political wonks, from both sides of the aisle, agreed that big data significantly aided President Obama in winning re-election. So, with that in mind, many are now looking at more important big data problems.

From Technology Review:

As chief scientist for President Obama’s reëlection effort, Rayid Ghani helped revolutionize the use of data in politics. During the final 18 months of the campaign, he joined a sprawling team of data and software experts who sifted, collated, and combined dozens of pieces of information on each registered U.S. voter to discover patterns that let them target fund-raising appeals and ads.

Now, with Obama again ensconced in the Oval Office, some veterans of the campaign’s data squad are applying lessons from the campaign to tackle social issues such as education and environmental stewardship. Edgeflip, a startup Ghani founded in January with two other campaign members, plans to turn the ad hoc data analysis tools developed for Obama for America into software that can make nonprofits more effective at raising money and recruiting volunteers.

Ghani isn’t the only one thinking along these lines. In Chicago, Ghani’s hometown and the site of Obama for America headquarters, some campaign members are helping the city make available records of utility usage and crime statistics so developers can build apps that attempt to improve life there. It’s all part of a bigger idea to engineer social systems by scanning the numerical exhaust from mundane activities for patterns that might bear on everything from traffic snarls to human trafficking. Among those pursuing such humanitarian goals are startups like DataKind as well as large companies like IBM, which is redrawing bus routes in Ivory Coast (see “African Bus Routes Redrawn Using Cell-Phone Data”), and Google, with its flu-tracking software (see “Sick Searchers Help Track Flu”).

Ghani, who is 35, has had a longstanding interest in social causes, like tutoring disadvantaged kids. But he developed his data-mining savvy during 10 years as director of analytics at Accenture, helping retail chains forecast sales, creating models of consumer behavior, and writing papers with titles like “Data Mining for Business Applications.”

Before joining the Obama campaign in July 2011, Ghani wasn’t even sure his expertise in machine learning and predicting online prices could have an impact on a social cause. But the campaign’s success in applying such methods on the fly to sway voters is now recognized as having been potentially decisive in the election’s outcome (see “A More Perfect Union”).

“I realized two things,” says Ghani. “It’s doable at the massive scale of the campaign, and that means it’s doable in the context of other problems.”

At Obama for America, Ghani helped build statistical models that assessed each voter along five axes: support for the president; susceptibility to being persuaded to support the president; willingness to donate money; willingness to volunteer; and likelihood of casting a vote. These models allowed the campaign to target door knocks, phone calls, TV spots, and online ads to where they were most likely to benefit Obama.

One of the most important ideas he developed, dubbed “targeted sharing,” now forms the basis of Edgeflip’s first product. It’s a Facebook app that prompts people to share information from a nonprofit, but only with those friends predicted to respond favorably. That’s a big change from the usual scattershot approach of posting pleas for money or help and hoping they’ll reach the right people.

Edgeflip’s app, like the one Ghani conceived for Obama, will ask people who share a post to provide access to their list of friends. This will pull in not only friends’ names but also personal details, like their age, that can feed models of who is most likely to help.

Say a hurricane strikes the southeastern United States and the Red Cross needs clean-up workers. The app would ask Facebook users to share the Red Cross message, but only with friends who live in the storm zone, are young and likely to do manual labor, and have previously shown interest in content shared by that user. But if the same person shared an appeal for donations instead, he or she would be prompted to pass it along to friends who are older, live farther away, and have donated money in the past.

Michael Slaby, a senior technology official for Obama who hired Ghani for the 2012 election season, sees great promise in the targeted sharing technique. “It’s one of the most compelling innovations to come out of the campaign,” says Slaby. “It has the potential to make online activism much more efficient and effective.”

For instance, Ghani has been working with Fidel Vargas, CEO of the Hispanic Scholarship Fund, to increase that organization’s analytical savvy. Vargas thinks social data could predict which scholarship recipients are most likely to contribute to the fund after they graduate. “Then you’d be able to give away scholarships to qualified students who would have a higher probability of giving back,” he says. “Everyone would be much better off.”

Ghani sees a far bigger role for technology in the social sphere. He imagines online petitions that act like open-source software, getting passed around and improved. Social programs, too, could get constantly tested and improved. “I can imagine policies being designed a lot more collaboratively,” he says. “I don’t know if the politicians are ready to deal with it.” He also thinks there’s a huge amount of untapped information out there about childhood obesity, gang membership, and infant mortality, all ready for big data’s touch.

Read the entire article here.
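The hurricane example in the article can be made concrete with a small sketch. This is only an illustration of the filtering idea, not Edgeflip's or the campaign's actual method: the article describes predictive models, whereas the code below uses fixed rules. The `Friend` fields, the `suggest_recipients` function, and the age cutoff of 40 are all hypothetical, chosen to mirror the criteria the article mentions (location, age, past engagement, past donations).

```python
from dataclasses import dataclass


@dataclass
class Friend:
    # Hypothetical profile fields; the article only mentions details "like their age".
    name: str
    age: int
    in_storm_zone: bool
    engaged_with_sharer: bool  # has shown interest in this user's past posts
    past_donor: bool


AGE_CUTOFF = 40  # assumed threshold, purely for illustration


def suggest_recipients(friends, appeal):
    """Return the names of friends to suggest for a given kind of appeal."""
    if appeal == "volunteer":
        # Clean-up help: nearby, younger, and responsive to this user's posts.
        chosen = [f for f in friends
                  if f.in_storm_zone and f.age < AGE_CUTOFF and f.engaged_with_sharer]
    elif appeal == "donate":
        # Donations: older friends outside the storm zone who have given before.
        chosen = [f for f in friends
                  if not f.in_storm_zone and f.age >= AGE_CUTOFF and f.past_donor]
    else:
        raise ValueError(f"unknown appeal type: {appeal!r}")
    return [f.name for f in chosen]


friends = [
    Friend("Ana", 24, True, True, False),
    Friend("Ben", 58, False, False, True),
    Friend("Cy", 31, True, False, False),
]
print(suggest_recipients(friends, "volunteer"))  # ['Ana']
print(suggest_recipients(friends, "donate"))     # ['Ben']
```

A real system would presumably replace the hard-coded rules with per-friend scores from a trained model, but the shape is the same: one message, different audiences depending on what is being asked.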

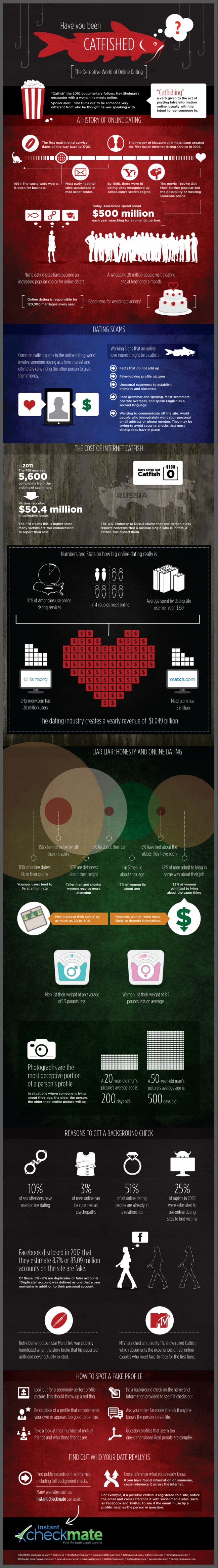

Infographic courtesy of visua.ly. See the original here.

For centuries biologists, zoologists and ecologists have been mapping the wildlife that surrounds us in the great outdoors. Now a group led by microbiologist Noah Fierer at the University of Colorado Boulder is pursuing flora and fauna in one of the last unexplored ecosystems: the home. (Not for the faint of heart.)

“… But You Can Never Leave”. So goes one of the most memorable of lyrical phrases from The Eagles (Hotel California).

In 1960 radio astronomer Frank Drake began the first systematic search for intelligent signals emanating from space. He was not successful, but his pioneering efforts paved the way for numerous other programs, including SETI (Search for Extra-Terrestrial Intelligence). The Drake Equation is named for him, and put simply, gives an estimate of the number of active, extraterrestrial civilizations with methods of communication in our own galaxy. Drake postulated the equation as a way to get the scientific community engaged in the search for life beyond our home planet.
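For reference, the equation is usually written as a simple product of seven factors. The form below is the standard one; no numerical values are given here because most of the factors remain highly uncertain.

$$
N = R_{*} \cdot f_{p} \cdot n_{e} \cdot f_{l} \cdot f_{i} \cdot f_{c} \cdot L
$$

Here $N$ is the number of civilizations in our galaxy with which communication might be possible, $R_{*}$ is the average rate of star formation in the galaxy, $f_{p}$ is the fraction of those stars that have planets, $n_{e}$ is the average number of planets per such star that could support life, $f_{l}$ is the fraction of those planets on which life actually develops, $f_{i}$ is the fraction of those on which intelligent life emerges, $f_{c}$ is the fraction of those civilizations that develop detectable communication technology, and $L$ is the length of time over which they release detectable signals.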

If you are an English speaker and are over the age of 39 you may be pondering the fate of the English language. As the younger generations fill cyberspace with terabytes of misspelled texts and tweets do you not wonder if gorgeous grammatical language will survive? Are the technophobes and anti-Twitterites doomed to a future world of #hashtag-driven conversation and ADHD-like literature? Those of us who care are reminded of George Orwell’s 1946 essay “Politics and the English Language”, in which he decried the swelling ugliness of the language at the time.

Good customer service once meant that a store or service employee would know you by name. This person would know your previous purchasing habits and your preferences; this person would know the names of your kids and your dog. Great customer service once meant that an employee could use this knowledge to anticipate your needs or personalize a specific deal. Well, this type of service still exists — in some places — but many businesses have outsourced it to offshore call center personnel or to machines, or both. Service may seem personal, but it’s not — service is customized to suit your profile, but it’s not personal in the same sense that once held true.

Ubiquitous connectivity for, and between, individuals and businesses is widely held to be beneficial for all concerned. We can connect rapidly and reliably with family, friends and colleagues from almost anywhere to anywhere via a wide array of internet-enabled devices. Yet, as these devices become more powerful and interconnected, and enabled with location-based awareness, such as GPS (Global Positioning System) services, we are likely to face an increasingly acute dilemma: connectedness or privacy?

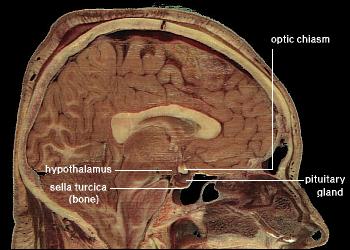

Researchers are continuing to make great progress in unraveling the complexities of aging. While some fingers point to the shortening of telomeres (end caps) in our chromosomal DNA as a contributing factor, other research points to the hypothalamus. This small sub-region of the brain has been found to play a major role in aging and death (though, at the moment, only in mice).

Stephen Wolfram, physicist, mathematician and complexity theorist, has taken big data ideas to an entirely new level — he’s quantifying himself and his relationships. He calls this discipline personal analytics.

Yesterday, May 10, 2013, scientists published new measures of atmospheric carbon dioxide (CO2). For the first time in human history CO2 levels reached an average of 400 parts per million (ppm). This is particularly troubling since CO2 has long been known as a major heat-trapping component of the atmosphere. The sobering milestone was recorded at the Mauna Loa Observatory in Hawaii, where monitoring has been underway since the mid-1950s.

We live in a world of brands, pitches, advertising, promotions, PR, consumer research, product placement, focus groups, and 24/7 spin. So, it should come as no surprise that even that ubiquitous and utilitarian listing of food and drink items from your local restaurant — the menu — would come in for some 21st century marketing treatment.

It’s official: teens can’t stay off social media for more than 15 minutes. It’s no secret that many kids aged between 8 and 18 spend most of their time texting, tweeting and checking their real-time social status. The profound psychological and sociological consequences of this behavior will only start to become apparent ten to fifteen years from now. In the meantime, researchers are finding a general degradation in kids’ memory skills from using social media and multi-tasking while studying.

It should come as no surprise that those who deny the science of climate change and the human impact on the environment would also shy away from purchasing products and services that are friendly to the environment.

That a small group of Young British Artists (YBA) made an impact on the art scene in the UK and across the globe over the last 25 years is without question. Whether the public at large will, 10, 25 or 50 years from now (and beyond), recognize a Damien Hirst spin painting or Tracey Emin’s “My Bed” or a Sarah Lucas self-portrait (“The Artist Eating a Banana” springs to mind) remains an open question.