There is a long-held belief that economic growth and prosperity make for a happier, healthier populace. Most economists and social scientists, and indeed laypeople, have subscribed to this idea for many decades.

But this may be completely wrong.

Starting around 2000, a handful of contrarian economists began noticing a strange paradox in their research. Evidence suggests that rising incomes and personal well-being are linked in the opposite way. It seems that when the US economy is improving, people suffer more medical problems and die faster.

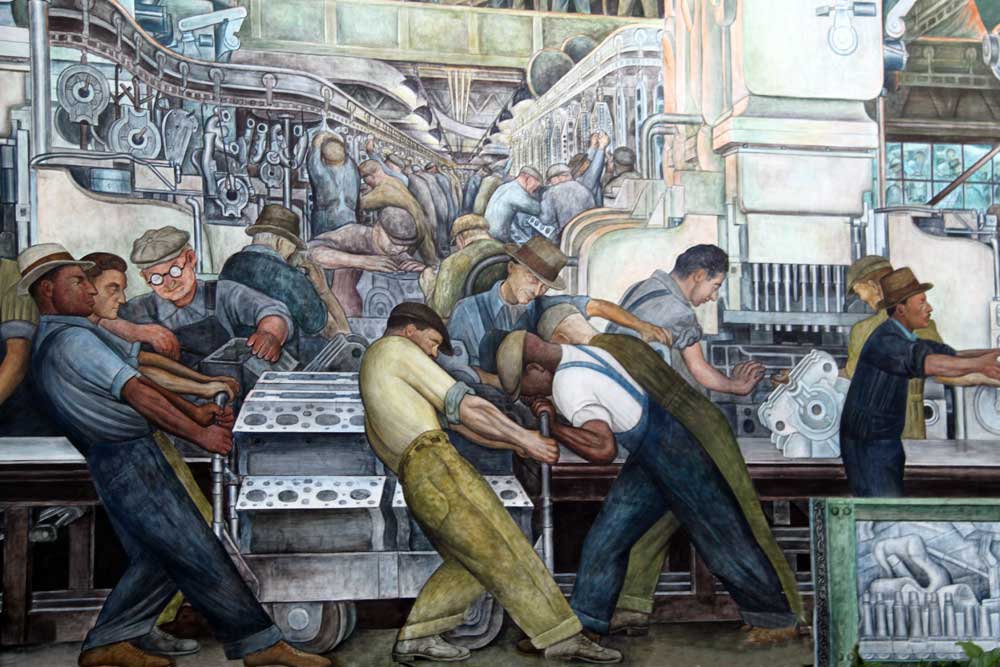

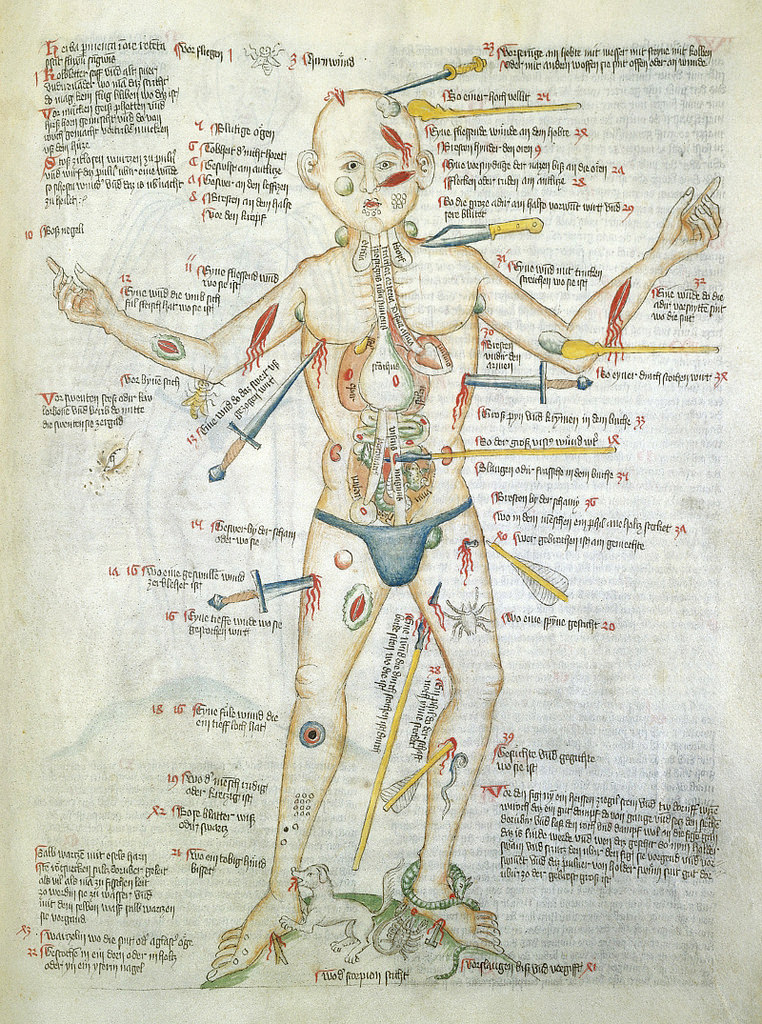

How could this be? Well, put simply, there are three main factors: increased pollution from increased industrial activity; greater occupational hazards from increased work; and higher exposure to risky behaviors from greater income.

From the Washington Post:

Yet in recent years, accumulating evidence suggests that rising incomes and personal well-being are linked in the opposite way. It seems that economic growth actually kills people.

Christopher Ruhm, an economics professor at the University of Virginia, was one of the first to notice this paradox. In a 2000 paper, he showed that when the American economy is on an upswing, people suffer more medical problems and die faster; when the economy falters, people tend to live longer.

“It’s very puzzling,” says Adriana Lleras-Muney, an economics professor at the University of California, Los Angeles. “We know that people in rich countries live longer than people in poor countries. There’s a strong relationship between GDP and life expectancy, suggesting that more money is better. And yet, when the economy is doing well, when it’s growing faster than average, we find that more people are dying.”

In other words, there are great benefits to being wealthy. But the process of becoming wealthy — well, that seems to be dangerous.

Lleras-Muney and her colleagues, David Cutler of Harvard and Wei Huang of the National Bureau of Economic Research, believe they can explain why. They have conducted one of the most comprehensive investigations yet of this phenomenon, analyzing over 200 years of data from 32 countries. In a draft of their research, released last week, they lay out something of a grand unified theory of life, death and economic growth.

To start, the economists confirm that when a country’s economic output — its GDP — is higher than expected, mortality rates are also higher than expected.

…

The data show that when economies are growing particularly fast, emissions and pollution are also on the rise. After controlling for changes in air quality, the economists find that economic growth doesn’t seem to impact death rates as much. “As much as two-thirds of the adverse effect of booms may be the result of increased pollution,” they write.

…

A booming economy spurs death in other ways too. People start to spend more time at their jobs, exposing them to occupational hazards, as well as the stress of overwork. People drive more, leading to an increase in traffic-related fatalities. People also drink more, causing health problems and accidents. In particular, the economists’ data suggest that alcohol-related mortality is the second-most important explanation, after pollution, for the connection between economic growth and death rates.

This is consistent with other studies finding that people are more likely to die right after they receive their tax rebates. More income makes it easier for people to pay for health care and other basic necessities, but it also makes it easier for people to engage in risky activities and hurt themselves.

Read the entire story here.

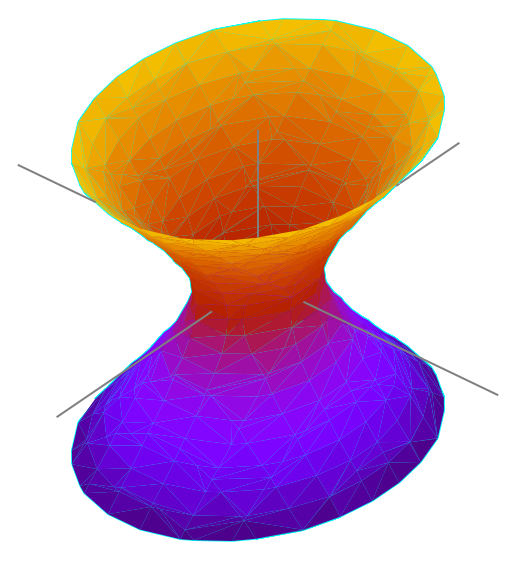

Since they were first dreamed up, explanations of the very small (quantum mechanics) and the very large (general relativity) have both been highly successful at describing their respective spheres of influence. Yet these two descriptions of our physical universe are not compatible, particularly when it comes to describing gravity. Indeed, physicists and theorists have struggled for decades to unite these two frameworks. Many agree that we need a new theory (of everything).

What do you get when you set AI (artificial intelligence) the task of reading through 30,000 Danish folk and fairy tales? Well, you get a host of fascinating, newly discovered insights into Scandinavian witches and trolls.

Should we blame the creative originators of fake news, conspiracy theories, disinformation and click-bait hype? Or should we blame the media for disseminating, spinning and aggrandizing these stories for their own profit or political motives? Or should we blame ourselves, the witless consumers?
