General scientific consensus suggests that our universe has no pre-defined destiny. While a number of current theories propose anything from a final Big Crunch to an accelerating expansion into cold nothingness, the future plan for the universe is not pre-determined. Unfortunately, our increasingly sophisticated scientific tools are still too meager to test and answer these questions definitively. So, theorists currently seem to have the upper hand. And now yet another theory turns current cosmological thinking on its head by proposing that the future is pre-destined and that it may even reach back into the past to shape the present. Confused? Read on!

[div class=attrib]From FQXi:[end-div]

The universe has a destiny—and this set fate could be reaching backwards in time and combining with influences from the past to shape the present. It’s a mind-bending claim, but some cosmologists now believe that a radical reformulation of quantum mechanics in which the future can affect the past could solve some of the universe’s biggest mysteries, including how life arose. What’s more, the researchers claim that recent lab experiments are dramatically confirming the concepts underpinning this reformulation.

Cosmologist Paul Davies, at Arizona State University in Tempe, is embarking on a project to investigate the future’s reach into the present, with the help of a $70,000 grant from the Foundational Questions Institute. It is a project that has been brewing for more than 30 years, since Davies first heard of attempts by physicist Yakir Aharonov to get to the root of some of the paradoxes of quantum mechanics. One of these is the theory’s apparent indeterminism: You cannot predict the outcome of experiments on a quantum particle precisely; perform exactly the same experiment on two identical particles and you will get two different results.

While most physicists faced with this have concluded that reality is fundamentally, deeply random, Aharonov argues that there is order hidden within the uncertainty. But to understand its source requires a leap of imagination that takes us beyond our traditional view of time and causality. In his radical reinterpretation of quantum mechanics, Aharonov argues that two seemingly identical particles behave differently under the same conditions because they are fundamentally different. We just do not appreciate this difference in the present because it can only be revealed by experiments carried out in the future.

“It’s a very, very profound idea,” says Davies. Aharonov’s take on quantum mechanics can explain all the usual results that the conventional interpretations can, but with the added bonus that it also explains away nature’s apparent indeterminism. What’s more, a theory in which the future can influence the past may have huge—and much needed—repercussions for our understanding of the universe, says Davies.

[div class=attrib]More from theSource here.[end-div]
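For the mathematically curious: the formula at the heart of Aharonov’s time-symmetric picture is the ABL rule (after Aharonov, Bergmann and Lebowitz, 1964). For a system prepared in state ψ_i now and found in state ψ_f later, the probability of outcome j of a measurement made in between is |⟨ψ_f|P_j|ψ_i⟩|² divided by the same quantity summed over all outcomes, where P_j projects onto outcome j. The minimal pure-Python sketch below is our own illustration, not part of Davies’ project; the helper names (`inner`, `project`, `born_probabilities`, `abl_probabilities`) and the single spin-½ example are assumptions chosen for simplicity.

```python
import math


def inner(bra, ket):
    """Inner product <bra|ket> of two state vectors given as lists of amplitudes."""
    return sum(b.conjugate() * k for b, k in zip(bra, ket))


def project(v, ket):
    """Apply the rank-one projector |v><v| (v assumed normalised) to ket."""
    c = inner(v, ket)
    return [c * x for x in v]


def born_probabilities(psi_i, basis):
    """Ordinary Born rule: only the initial (past) state is specified."""
    return [abs(inner(v, psi_i)) ** 2 for v in basis]


def abl_probabilities(psi_i, psi_f, basis):
    """ABL rule: outcome probabilities for an intermediate measurement in `basis`,
    given pre-selection psi_i (past) and post-selection psi_f (future)."""
    weights = [abs(inner(psi_f, project(v, psi_i))) ** 2 for v in basis]
    total = sum(weights)
    if total == 0:
        raise ValueError("this pre/post-selection never occurs for this measurement")
    return [w / total for w in weights]


# Spin-1/2 states along z and along x (hypothetical example states).
s = 1 / math.sqrt(2)
UP_Z, DOWN_Z = [1, 0], [0, 1]
UP_X, DOWN_X = [s, s], [s, -s]
Z_BASIS = [UP_Z, DOWN_Z]
X_BASIS = [UP_X, DOWN_X]

if __name__ == "__main__":
    # Prepared spin-up along z now; found spin-up along x "in the future".
    print("Born rule, x basis:", born_probabilities(UP_Z, X_BASIS))
    print("ABL rule, z basis: ", abl_probabilities(UP_Z, UP_X, Z_BASIS))
    print("ABL rule, x basis: ", abl_probabilities(UP_Z, UP_X, X_BASIS))
```

Run as a script, the ordinary Born rule gives roughly 50/50 for spin along x, while the ABL rule gives probability 1 for spin-up along z and probability 1 for spin-up along x, whichever of the two is measured in between. That extra order only appears once you know how the later measurement turns out, which is, loosely, the sense in which the future “shapes” the present in Aharonov’s account.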

[div class=attrib]From Neuroskeptic:[end-div]

[div class=attrib]From Project Syndicate:[end-div]

Frequent fliers the world over may soon find themselves thanking a physicist named Jason Steffen. Back in 2008 he ran computer simulations to find a more efficient way for travelers to board an airplane. Recent tests inside a mock cabin confirmed that Steffen’s method is both faster for the airline and easier for passengers. Best of all, it means less time spent waiting in the aisle and jostling for overhead bin space.
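Steffen’s method, as we understand his 2008 proposal, boards passengers so that people standing next to each other in the queue are seated two rows apart: window seats first, in alternate rows from the back, one side of the cabin and then the other, followed by middle seats and finally aisle seats. Below is a minimal Python sketch that generates such a sequence, assuming a single-aisle cabin with six seats per row lettered A to F and rows numbered from the front. The function name `steffen_order` is hypothetical, and the exact ordering details are our assumption rather than a transcription of Steffen’s paper.

```python
def steffen_order(rows, columns="ABCDEF"):
    """Return a boarding sequence of seat labels such as '30A' for a
    single-aisle cabin with `rows` rows and seat letters listed window to window.
    Assumed ordering: windows, then middles, then aisles; alternate rows,
    back to front; one side of the cabin before the other."""
    half = len(columns) // 2
    left, right = columns[:half], columns[half:]
    # Pair seats by distance from the aisle: (A, F) windows, (B, E) middles, (C, D) aisles.
    pairs = list(zip(left, reversed(right)))
    order = []
    for left_seat, right_seat in pairs:            # window seats first, aisle seats last
        for offset in (0, 1):                      # rows N, N-2, ... then N-1, N-3, ...
            for seat in (left_seat, right_seat):   # one side of the cabin, then the other
                for row in range(rows - offset, 0, -2):  # back of the plane to the front
                    order.append(f"{row}{seat}")
    return order


if __name__ == "__main__":
    print(steffen_order(4)[:8])
```

For a four-row cabin, the first eight passengers to board are 4A, 2A, 4F, 2F, 3A, 1A, 3F and 1F. Spacing queue neighbours two rows apart is what lets several passengers stow their bags at the same time instead of waiting behind one another in the aisle.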

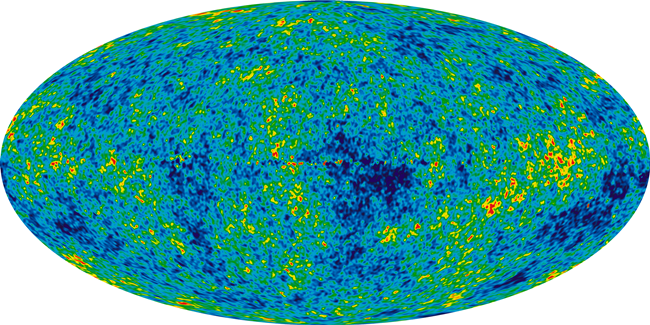

Over the past several decades, cosmologists and particle physicists have proposed the existence of Dark Matter. It is so called because it cannot be seen or detected directly; its presence is inferred from its gravitational effects on visible matter. Together with its theoretical cousin, Dark Energy, it is hypothesized to make up most of the universe. In fact, the regular star-stuff (matter and energy) of which we, our planet, our solar system and the visible universe are made accounts for a paltry 4 percent.

[div class=attrib]From Scientific American:[end-div]

The invisibility cloak of science fiction takes another step toward science fact this week. Researchers writing on the Physics arXiv report a practical method for building a device that repels electromagnetic waves. Alvaro Sanchez and colleagues at Spain’s Universitat Autonoma de Barcelona describe the design of such a device, which exploits the bizarre properties of metamaterials.

theDiagonal usually does not report on the news, though we do make a few worthy exceptions based on the importance or surreal nature of an event. Here is a case in point.

The principle of a holographic universe (not to be confused with Holographic Universe, an album by the Swedish death metal band Scar Symmetry) continues to hold serious sway among a not insignificant group of even more serious cosmologists.

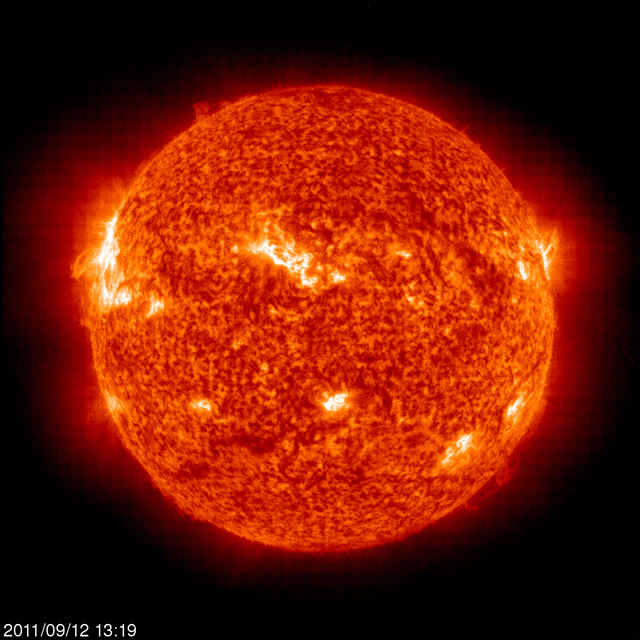

NASA’s latest spacecraft to visit Mars, the Mars Reconnaissance Orbiter, has made some stunning observations that suggest water may be flowing on the red planet. Intriguingly, repeated observations of the same regions over several Martian seasons show visible changes attributable to some kind of dynamic flow.

[div class=attrib]From Scientific American:[end-div]

More precisely, NASA’s Dawn spacecraft entered orbit around the asteroid Vesta on July 15, 2011. Vesta is the second-largest asteroid in our solar system and is located in the asteroid belt between Mars and Jupiter.

The Seven Sisters star cluster, also known as the Pleiades, consists of many young, bright, hot stars. While the cluster contains hundreds of stars, it is so named because only seven are typically visible to the naked eye. The Seven Sisters is visible from the Northern Hemisphere and resides in the constellation Taurus.

Jonathan Jones over at the Guardian puts a creative spin (pun intended) on the latest developments in particle physics. He suggests that we might borrow from modern and contemporary art to help us make the vast imaginative leaps needed to understand our physical world and its underlying quantum-mechanical nature, bound up as it is in uncertainty and paradox.

Two exciting races ran through Grenoble, France, this past week. First, the Tour de France held one of the decisive stages of the 2011 race in Grenoble: the individual time trial. Second, Grenoble hosted the

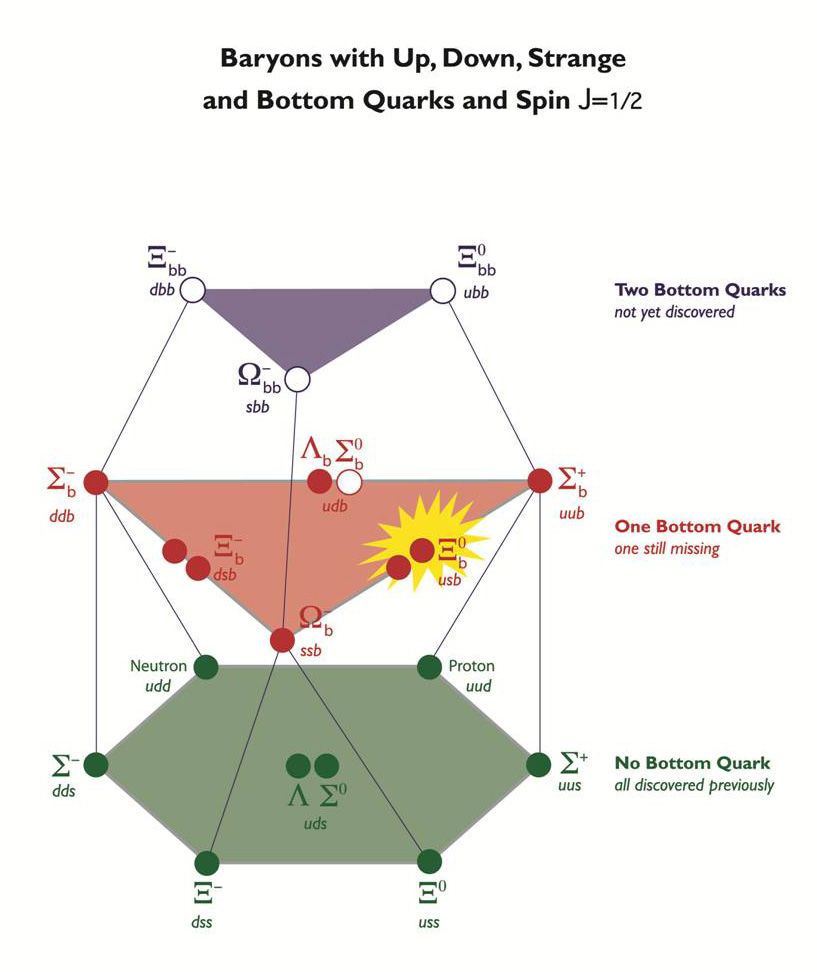

Both colliders have been smashing particles together in their ongoing quest to refine our understanding of the building blocks of matter and to determine whether the Higgs particle exists. The Higgs is believed to give mass to other particles, and it remains the last undiscovered component of the Standard Model of physics.

[div class=attrib]From the Physics arXiv for Technology Review:[end-div]

[div class=attrib]From Scientific American:[end-div]

[div class=attrib]From the New Scientist:[end-div]

[div class=attrib]From Institute of Physics:[end-div]

One of the most fascinating and (in)famous experiments in social psychology began in the bowels of Stanford University 40 years ago next month. The experiment was intended to evaluate how people react to being powerless. By its conclusion, however, it had taken a broader look at role assignment and reactions to authority.

[div class=attrib]From Scientific American:[end-div]

[div class=attrib]From the Economist:[end-div]

[div class=attrib]From New Scientist:[end-div]
