In 2007 UPS made headlines by declaring left-hand turns undesirable for its army of delivery truck drivers. Of course, we left-handers have always known that our left or “sinister” side is fatefully less attractive and still branded as unlucky or evil. Chinese culture brands left-handedness as improper as well.

UPS had other motives for pooh-poohing left-hand turns. For a company that runs over 95,000 big brown delivery trucks, optimizing delivery routes could result in tremendous savings. In fact, careful research showed that the company could reduce its annual delivery routes by 28.5 million miles, save around 3 million gallons of fuel and reduce CO2 emissions by over 30,000 metric tons. Eliminating or reducing left-hand turns would be safer as well. Of the 2.4 million crashes at intersections in the United States in 2007, most involved left-hand turns, according to the U.S. Federal Highway Administration.

Now urban planners and highway designers in the United States are evaluating the same thing: how to reduce the need for left-hand turns. Drivers in Europe, especially the United Kingdom, will be all too familiar with the roundabout technique for reducing left-hand turns on many A and B roads. Roundabouts have yet to gain significant traction in the United States, so now comes the Diverging Diamond Interchange.

[div class=attrib]From Slate:[end-div]

. . . Left turns are the bane of traffic engineers. Their idea of utopia runs clockwise. (UPS’ routing software famously has drivers turn right whenever possible, to save money and time.) The left-turning vehicle presents not only the aforementioned safety hazard, but a coagulation in the smooth flow of traffic. It’s either a car stopped in an active traffic lane, waiting to turn; or, even worse, it’s cars in a dedicated left-turn lane that, when traffic is heavy enough, requires its own “dedicated signal phase,” lengthening the delay for through traffic as well as cross traffic. And when traffic volumes really increase, as in the junction of two suburban arterials, multiple left-turn lanes are required, costing even more in space and money.

And, increasingly, because of shifting demographics and “lollipop” development patterns, suburban arterials are where the action is: They represent, according to one report, less than 10 percent of the nation’s road mileage, but account for 48 percent of its vehicle-miles traveled.

. . . What can you do when you’ve tinkered all you can with the traffic signals, added as many left-turn lanes as you can, rerouted as much traffic as you can, in areas that have already been built to a sprawling standard? Welcome to the world of the “unconventional intersection,” where left turns are engineered out of existence.

. . . “Grade separation” is the most extreme way to eliminate traffic conflicts. But it’s not only aesthetically unappealing in many environments, it’s expensive. There is, however, a cheaper, less disruptive approach, one that promises its own safety and efficiency gains, that has become recently popular in the United States: the diverging diamond interchange. There’s just one catch: You briefly have to drive the wrong way. But more on that in a bit.

The “DDI” is the brainchild of Gilbert Chlewicki, who first theorized what he called the “criss-cross interchange” as an engineering student at the University of Maryland in 2000.

The DDI is the sort of thing that is easier to visualize than describe (this simulation may help), but here, roughly, is how a DDI built under a highway overpass works: As the eastbound driver approaches the highway interchange (whose lanes run north-south), traffic lanes “criss cross” at a traffic signal. The driver will now find himself on the “left” side of the road, where he can either make an unimpeded left turn onto the highway ramp, or cross over again to the right once he has gone under the highway overpass.

[div class=attrib]More from theSource here.[end-div]

[div class=attrib]From Rolling Stone:[end-div]

The invisibility cloak of science fiction takes another step into science fact this week. Researchers over at Physics arXiv report a practical method for building a device that repels electromagnetic waves. Alvaro Sanchez and colleagues at Spain’s Universitat Autonoma de Barcelona describe the design of such a device utilizing the bizarre properties of metamaterials.

theDiagonal usually does not report on the news, though we do make a few worthy exceptions based on the import or surreal nature of the event. A case in point below.

[div class=attrib]From Wilson Quarterly:[end-div]

[div class=attrib]From the New Scientist:[end-div]

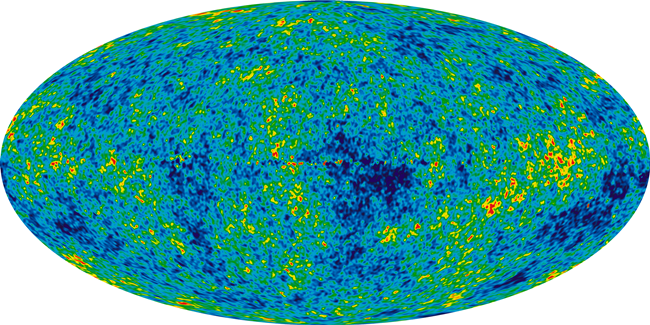

The principle of a holographic universe, not to be confused with the Holographic Universe, an album by Swedish death metal rock band Scar Symmetry, continues to hold serious sway among a not insignificant group of even more serious cosmologists.

NASA’s latest spacecraft to visit Mars, the Mars Reconnaissance Orbiter, has made some stunning observations that show the possibility of flowing water on the red planet. Intriguingly, repeated observations of the same regions over several Martian seasons show visible changes attributable to some kind of dynamic flow.

In early 1990 at CERN headquarters in Geneva, Switzerland,

Five years in internet time is analogous to several entire human lifespans. So, it’s no surprise that Twitter seems to have been with us forever. Despite the near ubiquity of the little blue bird, most of the service’s tweeters have no idea why they are constrained to using a mere 140 characters to express themselves.

A recently opened solo art show takes a fascinating inside peek at the cryonics industry. Entitled “The Prospect of Immortality,” the show features photography by Murray Ballard. Ballard’s collection of images follows a 5-year investigation of cryonics in England, the United States and Russia. Cryonics is the practice of freezing the human body just after death in the hope that future science will one day have the capability of restoring it to life.

[div class=attrib]From Scientific American:[end-div]

[div class=attrib]From Wired:[end-div]

Clarifying intent, emotion, wishes and meaning is a rather tricky and cumbersome process that we all navigate each day. Online, in the digital world, this is even more challenging, if not sometimes impossible. The pre-digital method of exchanging information in a social context would have been face-to-face. Such a method provides the full gamut of verbal and non-verbal dialogue between two or more parties. Importantly, it also provides a channel for the exchange of unconscious cues between people, which researchers are increasingly finding to be of critical importance during communication.

[div class=attrib]From Salon:[end-div]

A fascinating and disturbing series of still photographs from Andris Feldmanis shows us what the television “sees” as its viewers glare seemingly mindlessly at the box. As Feldmanis describes,

More precisely, NASA’s Dawn spacecraft entered into orbit around the asteroid Vesta on July 15, 2011. Vesta is the second-largest of our solar system’s asteroids and is located in the asteroid belt between Mars and Jupiter.

It’s #$% hot in the southern plains of the United States, with high temperatures constantly above 100 degrees F, and lows never dipping below 80. For that matter, it’s hotter than average this year in most parts of the country. So, a timely article over at Slate gives a great overview of the history of the air conditioning system, courtesy of inventor Willis Carrier.

The Seven Sisters star cluster, also known as the Pleiades, consists of many young, bright and hot stars. While the cluster contains hundreds of stars, it is so named because only seven are typically visible to the naked eye. The Seven Sisters is visible from the northern hemisphere, and resides in the constellation Taurus.

Monday’s poem, authored by William Meredith, was selected for it is in keeping with our

The next time you wander through an art gallery and feel lightheaded after seeing a Monroe silkscreen by Warhol, or feel reflective and soothed by a scene from Monet’s garden, you’ll be in good company. New research shows that the body, not just our grey matter, reacts to art.

[div class=attrib]From BigThink:[end-div]