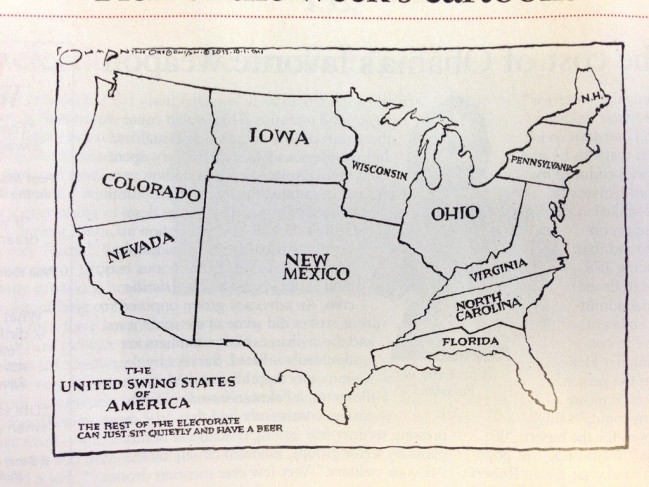

While this election cycle in the United States has been especially partisan, it’s worth remembering that politics in an open democracy is sometimes brutal, frequently nasty and often petty. Partisan fights, both metaphorical and physical, have been occurring since the Republic was founded.

[div class=attrib]From the New York Times:[end-div]

As the cable news channels count down the hours before the first polls close on Tuesday, an entire election cycle will have passed since President Obama last sat down with Fox News. The organization’s standing request to interview the president is now almost two years old.

At NBC News, the journalists reporting on the Romney campaign will continue to absorb taunts from their sources about their sister cable channel, MSNBC. “You mean, Al Sharpton’s network,” as Stuart Stevens, a senior Romney adviser, is especially fond of reminding them.

Spend just a little time watching either Fox News or MSNBC, and it is easy to see why such tensions run high. In fact, by some measures, the partisan bitterness on cable news has never been as stark — and in some ways, as silly or small.

Martin Bashir, the host of MSNBC’s 4 p.m. hour, recently tried to assess why Mitt Romney seemed irritable on the campaign trail and offered a provocative theory: that he might have mental problems.

“Mrs. Romney has expressed concerns about her husband’s mental well-being,” Mr. Bashir told one of his guests. “But do you get the feeling that perhaps there’s more to this than she’s saying?”

Over on Fox News, similar psychological evaluations were under way on “Fox & Friends.” Keith Ablow, a psychiatrist and a member of the channel’s “Medical A-Team,” suggested that Joseph R. Biden Jr.’s “bizarre laughter” during the vice-presidential debate might have something to do with a larger mental health issue. “You have to put dementia on the differential diagnosis,” he noted matter-of-factly.

Neither outlet has built its reputation on moderation and restraint, but during this presidential election, research shows that both are pushing their stridency to new levels.

A Pew Research Center study found that of Fox News stories about Mr. Obama from the end of August through the end of October, just 6 percent were positive and 46 percent were negative.

Pew also found that Mr. Obama was covered far more than Mr. Romney. The president was a significant figure in 74 percent of Fox’s campaign stories, compared with 49 percent for Romney. In 2008, Pew found that the channel reported on Mr. Obama and John McCain in roughly equal amounts.

The greater disparity was on MSNBC, which gave Mr. Romney positive coverage just 3 percent of the time, Pew found. It examined 259 segments about Mr. Romney and found that 71 percent were negative.

MSNBC, whose programs are hosted by a new crop of extravagant partisans like Mr. Bashir, Mr. Sharpton and Lawrence O’Donnell, has tested the limits of good taste this year. Mr. O’Donnell was forced to apologize in April after describing the Mormon Church as nothing more than a scheme cooked up by a man who “got caught having sex with the maid and explained to his wife that God told him to do it.”

The channel’s hosts recycle talking points handed out by the Obama campaign, even using them as titles for program segments, like Mr. Bashir did recently with a segment he called “Romnesia,” referring to Mr. Obama’s term to explain his opponent’s shifting positions.

The hosts insult and mock, like Alex Wagner did in recently describing Mr. Romney’s trip overseas as “National Lampoon’s European Vacation” — a line she borrowed from an Obama spokeswoman. Mr. Romney was not only hapless, Ms. Wagner said, he also looked “disheveled” and “a little bit sweaty” in a recent appearance.

Not that they save their scorn just for their programs. Some MSNBC hosts even use the channel’s own ads promoting its slogan “Lean Forward,” to criticize Mr. Romney and the Republicans. Mr. O’Donnell accuses the Republican nominee of basing his campaign on the false notion that Mr. Obama is inciting class warfare. “You have to come up with a lie,” he says, when your campaign is based on empty rhetoric.

In her ad, Rachel Maddow breathlessly decodes the logic behind the push to overhaul state voting laws. “The idea is to shrink the electorate,” she says, “so a smaller number of people get to decide what happens to all of us.”

Such stridency has put NBC News journalists who cover Republicans in awkward and compromised positions, several people who work for the network said. To distance themselves from their sister channel, they have started taking steps to reassure Republican sources, like pointing out that they are reporting for NBC programs like “Today” and “Nightly News” — not for MSNBC.

At Fox News, there is a palpable sense that the White House punishes the outlet for its coverage, not only by withholding the president, who has done interviews with every other major network, but also by denying them access to Michelle Obama.

This fall, Mrs. Obama has done a spate of television appearances, from CNN to “Jimmy Kimmel Live” on ABC. But when officials from Fox News recently asked for an interview with the first lady, they were told no. She has not appeared on the channel since 2010, when she sat down with Mike Huckabee.

Lately the White House and Fox News have been at odds over the channel’s aggressive coverage of the attack on the American diplomatic mission in Benghazi, Libya. Fox initially raised questions over the White House’s explanation of the events that led to the attack — questions that other news organizations have since started reporting on more fully.

But the commentary on the channel quickly and often turns to accusations that the White House played politics with American lives. “Everything they told us was a lie,” Sean Hannity said recently as he and John H. Sununu, a former governor of New Hampshire and a Romney campaign supporter, took turns raising questions about how the Obama administration misled the public. “A hoax,” Mr. Hannity called the administration’s explanation. “A cover-up.”

Mr. Hannity has also taken to selectively fact-checking Mr. Obama’s claims, co-opting a journalistic tool that has proliferated in this election as news outlets sought to bring more accountability to their coverage.

Mr. Hannity’s guest fact-checkers have included hardly objective sources, like Dick Morris, the former Clinton aide turned conservative commentator; Liz Cheney, the daughter of former Vice President Dick Cheney; and Michelle Malkin, the right-wing provocateur.

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image courtesy of University of Maine at Farmington.[end-div]

Humans have a peculiar habit of anthropomorphizing anything that moves, and for that matter, most objects that remain static as well. So, it is not surprising that evil is often personified and even stereotyped; it is said that true evil even has a home somewhere below where you currently stand.

Blame corporate euphemisms and branding for the obfuscation of everyday things. More sinister yet is the constant reworking of names for our increasingly processed foodstuffs. Only last year, after several influential health studies pointed to the detrimental health effects of high fructose corn syrup (HFCS), did the food industry act, but not by removing copious amounts of the addictive additive from many processed foods. Rather, the industry attempted to rebrand HFCS as “corn sugar”. And now on to the battle over “soylent pink”, also known as “pink slime”.

A month into fall, it really does now seem like autumn: leaves are turning and falling, jackets have reappeared, and brisk morning walks are now shrouded in darkness.

Parents have long known that the sleep-wake cycles of their adolescent offspring are rather different to those of anyone else in the household.

There is great irony in knowing that we humans would not be as civilized were it not for our passion for lethal projectile weapons.

Author Joe Queenan explains that he may have read over 6,000 books because, as he puts it, he “find[s] ‘reality’ a bit of a disappointment”.

A yearlong survey of moodiness shows that the so-called Monday Blues may be more a figment of the imagination than fact.

Advances in quantum physics and in the associated realm of quantum information promise to revolutionize computing. Imagine a computer several trillion times faster than present-day supercomputers. Well, that’s where we are heading.

Memory is a very useful cognitive tool. After all, where would we be if we had no recall of our family, friends, foods, words, tasks and dangers?

For readers of thediagonal in North America, “neurobollocks” would roughly translate to “neurobullshit”.

QTWTAIN is a Twitterspeak acronym for a Question To Which The Answer Is No.

The long-term downward trend in the number of injuries to young children has ended. Sadly, urgent care and emergency room doctors are now seeing more children aged 0-14 years with unintentional injuries. While the exact causes are yet to be determined, a growing body of anecdotal evidence points to distraction among parents and supervisors: it’s the texting, stupid!