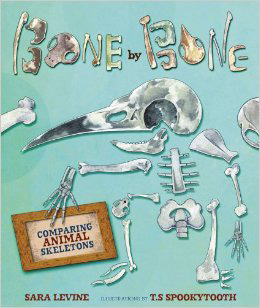

One of the most engaging new books for young children is a picture book that explains evolution. Through whimsical illustrations and comparisons of animal skeletons, the book, Bone By Bone, delivers the story of evolutionary theory in an entertaining and compelling way.

Perhaps it could serve just as well for adults who have trouble grappling with the fruits of the scientific method. The Texas School Board of Education would make an ideal place to begin.

Bone By Bone is written by veterinarian Sara Levine.

From Slate:

In some of the best children’s books, dandelions turn into stars, sharks and radishes merge, and pancakes fall from the sky. No one would confuse these magical tales for descriptions of nature. Small children can differentiate between “the real world and the imaginary world,” as psychologist Alison Gopnik has written. They just “don’t see any particular reason for preferring to live in the real one.”

Children’s nuanced understanding of the not-real surely extends to the towering heap of books that feature dinosaurs as playmates who fill buckets of sand or bake chocolate-chip cookies. The imaginative play of these books may be no different to kids than radishsharks and llama dramas.

But as a parent, friendly dinos never steal my heart. I associate them, just a little, with old creationist images of animals frolicking near the Garden of Eden, which carried the message that dinosaurs and man, both created by God on the sixth day, co-existed on the Earth until after the flood. (Never mind the evidence that dinosaurs went extinct millions of years before humans appeared.) The founder of the Creation Museum in Kentucky calls dinosaurs “missionary lizards,” and that phrase echoes in my head when I see all those goofy illustrations of dinosaurs in sunglasses and hats.

I’ve been longing for another kind of picture book: one that appeals to young children’s wildest imagination in service of real evolutionary thinking. Such a book could certainly include dinosaur skeletons or fossils. But Bone by Bone, by veterinarian and professor Sara Levine, fills the niche to near perfection by relying on dogs, rabbits, bats, whales, and humans. Levine plays with differences in their skeletons to groom kids for grand scientific concepts.

Bone by Bone asks kids to imagine what their bodies would look like if they had different configurations of bones, like extra vertebrae, longer limbs, or fewer fingers. “What if your vertebrae didn’t stop at your rear end? What if they kept going?” Levine writes, as a boy peers over his shoulder at the spinal column. “You’d have a tail!”

“What kind of animal would you be if your leg bones were much, much longer than your arm bones?” she wonders, as a girl in pink sneakers rises so tall her face disappears from the page. “A rabbit or a kangaroo!” she says, later adding a pika and a hare. “These animals need strong hind leg bones for jumping.” Levine’s questions and answers are delightfully simple for the scientific heft they carry.

With the lightest possible touch, Levine introduces the idea that bones in different vertebrates are related and that they morph over time. She starts with vertebrae, skulls and ribs. But other structures bear strong kinships in these animals, too. The bone in the center of a horse’s hoof, for instance, is related to a human finger. (“What would happen if your middle fingers and the middle toes were so thick that they supported your whole body?”) The bones that radiate out through a bat’s wing are linked to those in a human hand. (“A web of skin connects the bones to make wings so that a bat can fly.”) This is different from the wings of a bird or an insect; with bats, it’s almost as if they’re swimming through air.

Of course, human hands did not shape-shift into bats’ wings, or vice versa. Both derive from a common ancestral structure, which means they share an evolutionary past. Homology, as this kind of relatedness is called, is among “the first and in many ways the best evidence for evolution,” says Josh Rosenau of the National Center for Science Education. Comparing bones also paves the way for comparing genes and molecules, for grasping evolution at the next level of sophistication. Indeed, it’s hard to look at the bat wings and human hands as presented here without lighting up, at least a little, with these ideas. So many smart writers focus on preparing young kids to read or understand numbers. Why not do more to ready them for the big ideas of science? Why not pave the way for evolution? (This is easier to do with older kids, with books like The Evolution of Calpurnia Tate and Why Don’t Your Eyelashes Grow?)

Read the entire story here.

Image: Bone By Bone, book cover. Courtesy: Lerner Publishing Group

Pseudoscience can be fun, for comedic purposes only, of course. But when it is taken seriously and dogmatically, as it often is by a significant number of people, it imperils rational dialogue and threatens real scientific and cultural progress. There is no end to the list of fake scientific claims and theories. Some of our favorites include the moon “landing” conspiracy, hollow Earth, the Bermuda Triangle, crop circles, psychic surgery, body earthing, room-temperature fusion, and perpetual motion machines.

Hot on the heels of recent successes by the Texas School Board of Education (SBOE) in revising history and science curricula, legislators in Missouri are planning to redefine commonly accepted scientific principles. Much like the situation in Texas, the Missouri House is mandating that intelligent design be taught alongside evolution, in equal measure, in all the state’s schools. But in a bid to take the lead in reversing thousands of years of scientific progress, Missouri plans to redefine the actual scientific framework. So, if you can’t make “intelligent design” fit the principles of accepted science, then just change the principles themselves: first up, change the meanings of the terms “scientific hypothesis” and “scientific theory”.

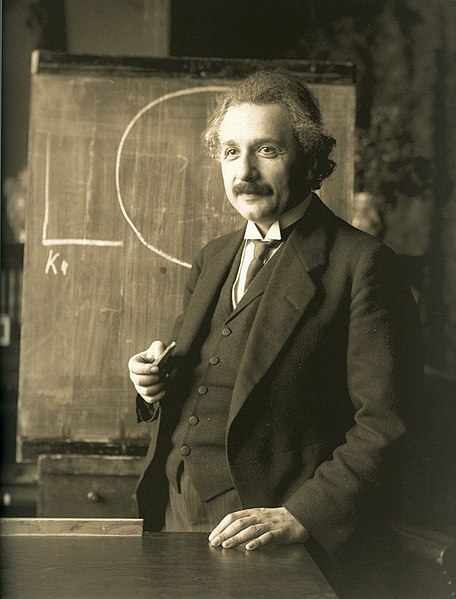

There is a certain school of thought that asserts that scientific genius is a thing of the past. After all, we haven’t seen the recent emergence of pivotal talents such as Galileo, Newton, Darwin or Einstein. Is it possible that fundamentally new ways of looking at our world, such as a new mathematics or a new physics, are no longer possible?

Regardless of how flawed old scientific concepts may be, researchers have found that it is remarkably difficult for people to give them up and accept sound, new reasoning. Even scientists are creatures of habit.

The tension between science, religion and politics that began several millennia ago continues unabated.

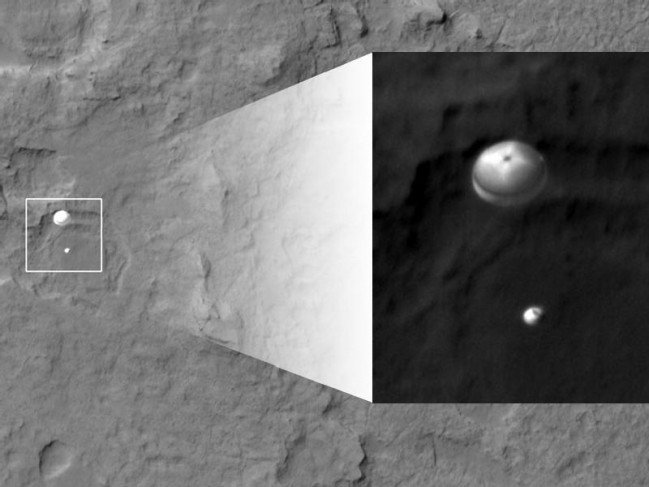

The 2011 Nobel Prize in Physics was recently awarded to three scientists: Adam Riess, Saul Perlmutter and Brian Schmidt. Their computations and observations of a very specific type of exploding star upended decades of commonly accepted beliefs about our universe by showing that the expansion of the universe is accelerating.
