Ubiquitous connectivity for, and between, individuals and businesses is widely held to be beneficial for all concerned. We can connect rapidly and reliably with family, friends and colleagues from almost anywhere to anywhere via a wide array of internet-enabled devices. Yet, as these devices become more powerful and interconnected, and enabled with location-based awareness, such as GPS (Global Positioning System) services, we are likely to face an increasingly acute dilemma: connectedness or privacy?

From the Guardian:

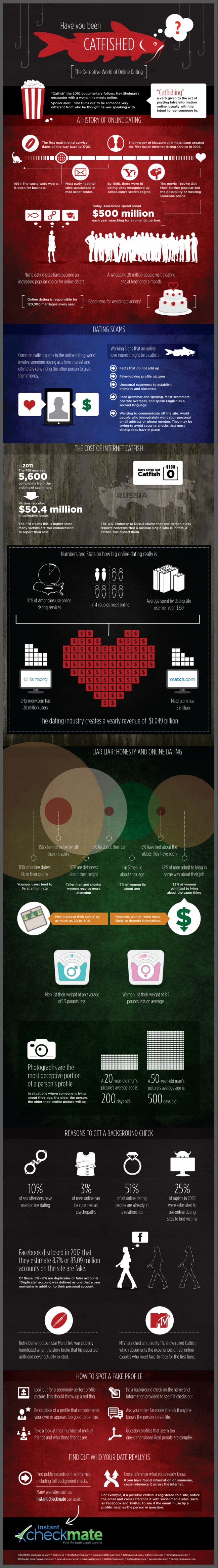

The internet has turned into a massive surveillance tool. We’re constantly monitored on the internet by hundreds of companies — both familiar and unfamiliar. Everything we do there is recorded, collected, and collated – sometimes by corporations wanting to sell us stuff and sometimes by governments wanting to keep an eye on us.

Ephemeral conversation is over. Wholesale surveillance is the norm. Maintaining privacy from these powerful entities is basically impossible, and any illusion of privacy we maintain is based either on ignorance or on our unwillingness to accept what’s really going on.

It’s about to get worse, though. Companies such as Google may know more about your personal interests than your spouse, but so far it’s been limited by the fact that these companies only see computer data. And even though your computer habits are increasingly being linked to your offline behaviour, it’s still only behaviour that involves computers.

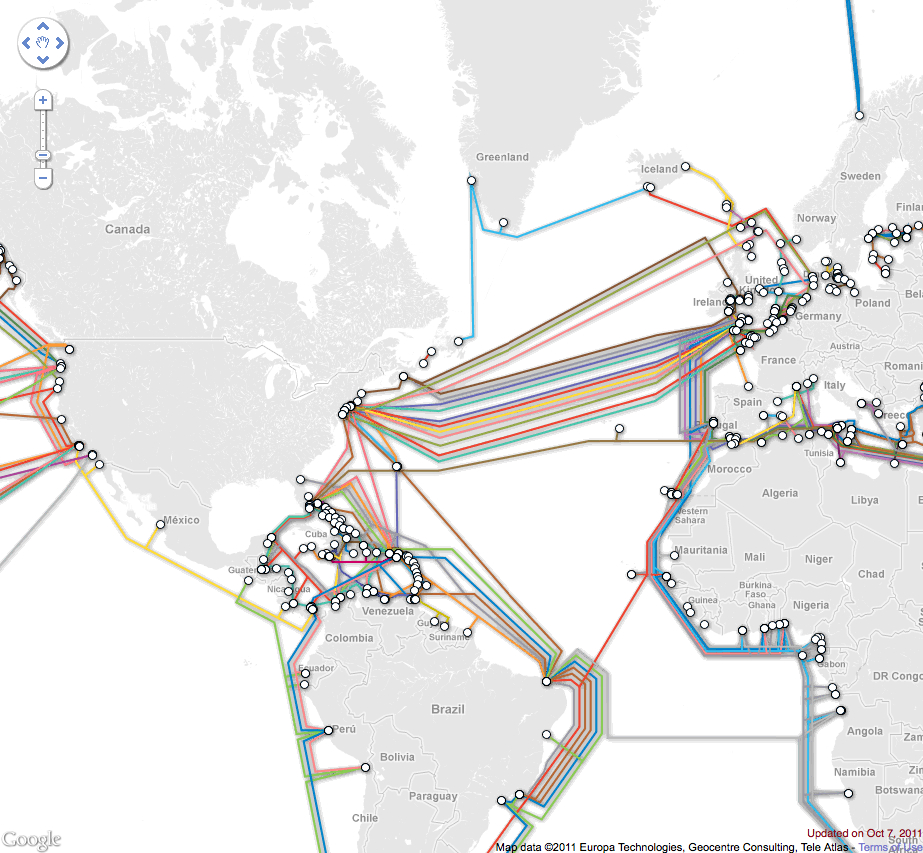

The Internet of Things refers to a world where much more than our computers and cell phones is internet-enabled. Soon there will be internet-connected modules on our cars and home appliances. Internet-enabled medical devices will collect real-time health data about us. There’ll be internet-connected tags on our clothing. In its extreme, everything can be connected to the internet. It’s really just a matter of time, as these self-powered wireless-enabled computers become smaller and cheaper.

Lots has been written about the “Internet of Things” and how it will change society for the better. It’s true that it will make a lot of wonderful things possible, but the “Internet of Things” will also allow for an even greater amount of surveillance than there is today. The Internet of Things gives the governments and corporations that follow our every move something they don’t yet have: eyes and ears.

Soon everything we do, both online and offline, will be recorded and stored forever. The only question remaining is who will have access to all of this information, and under what rules.

We’re seeing an initial glimmer of this from how location sensors on your mobile phone are being used to track you. Of course your cell provider needs to know where you are; it can’t route your phone calls to your phone otherwise. But most of us broadcast our location information to many other companies whose apps we’ve installed on our phone. Google Maps certainly, but also a surprising number of app vendors who collect that information. It can be used to determine where you live, where you work, and who you spend time with.

Another early adopter was Nike, whose Nike+ shoes communicate with your iPod or iPhone and track your exercising. More generally, medical devices are starting to be internet-enabled, collecting and reporting a variety of health data. Wiring appliances to the internet is one of the pillars of the smart electric grid. Yes, there are huge potential savings associated with the smart grid, but it will also allow power companies – and anyone they decide to sell the data to – to monitor how people move about their house and how they spend their time.

Drones are another “thing” moving onto the internet. As their price continues to drop and their capabilities increase, they will become a very powerful surveillance tool. Their cameras are powerful enough to see faces clearly, and there are enough tagged photographs on the internet to identify many of us. We’re not yet up to a real-time Google Earth equivalent, but it’s not more than a few years away. And drones are just a specific application of CCTV cameras, which have been monitoring us for years, and will increasingly be networked.

Google’s internet-enabled glasses – Google Glass – are another major step down this path of surveillance. Their ability to record both audio and video will bring ubiquitous surveillance to the next level. Once they’re common, you might never know when you’re being recorded in both audio and video. You might as well assume that everything you do and say will be recorded and saved forever.

In the near term, at least, the sheer volume of data will limit the sorts of conclusions that can be drawn. The invasiveness of these technologies depends on asking the right questions. For example, if a private investigator is watching you in the physical world, she or he might observe odd behaviour and investigate further based on that. Such serendipitous observations are harder to achieve when you’re filtering databases based on pre-programmed queries. In other words, it’s easier to ask questions about what you purchased and where you were than to ask what you did with your purchases and why you went where you did. These analytical limitations also mean that companies like Google and Facebook will benefit more from the Internet of Things than individuals – not only because they have access to more data, but also because they have more sophisticated query technology. And as technology continues to improve, the ability to automatically analyse this massive data stream will improve.

In the longer term, the Internet of Things means ubiquitous surveillance. If an object “knows” you have purchased it, and communicates via either Wi-Fi or the mobile network, then whoever or whatever it is communicating with will know where you are. Your car will know who is in it, who is driving, and what traffic laws that driver is following or ignoring. No need to show ID; your identity will already be known. Store clerks could know your name, address, and income level as soon as you walk through the door. Billboards will tailor ads to you, and record how you respond to them. Fast food restaurants will know what you usually order, and exactly how to entice you to order more. Lots of companies will know whom you spend your days – and nights – with. Facebook will know about any new relationship status before you bother to change it on your profile. And all of this information will all be saved, correlated, and studied. Even now, it feels a lot like science fiction.

Read the entire article here.

Image: Big Brother, 1984. Poster. Courtesy of Telegraph.

There is no doubt that online reviews for products and services, from books to new cars to vacation spots, have revolutionized shopping behavior. Internet and mobile technology has made gathering, reviewing and publishing open and honest crowdsourced opinion simple, efficient and ubiquitous.

No surprise. Women and men use online social networks differently. A new study of online behavior by researchers in Vienna, Austria, shows that the sexes organize their networks very differently and for different reasons.

The term “Internet of Things” was coined in 1999 by Kevin Ashton. It refers to the notion whereby physical objects of all kinds are equipped with small identifying devices and connected to a network. In essence: everything connected, anytime, anywhere, by anyone. One of the potential benefits is that this would allow objects to be tracked and inventoried, and their status continuously monitored.

From Eurozine:

Twenty or so years ago the economic prognosticators and technology pundits would all have had us believe that the internet would transform society; it would level the playing field; it would help the little guy compete against the corporate behemoth; it would make us all “socially” rich if not financially. Yet, the promise of those early, heady days seems remarkably narrow nowadays. What happened? Or rather, what didn’t happen?

In early 1990 at CERN headquarters in Geneva, Switzerland,

From the New York Times:

The lives of 2 technological marvels came to a close this week. First, NASA officially concluded the space shuttle program with the

Phew! Another heartfelt call to action from business blogger Seth Godin to become indispensable.

Imagine a world without books; you’d have to commit useful experiences, narratives and data to handwritten form and memory. Imagine a world without the internet and real-time search; you’d have to rely on a trusted expert or a printed dictionary to find answers to your questions. Imagine a world without the written word; you’d have to revert to memory and oral tradition to pass on meaningful life lessons and stories.

![John William Waterhouse [Public domain], via Wikimedia Commons](http://upload.wikimedia.org/wikipedia/commons/thumb/6/6b/John_William_Waterhouse_Echo_And_Narcissus.jpg/640px-John_William_Waterhouse_Echo_And_Narcissus.jpg)

Echo and Narcissus, John William Waterhouse [Public domain], via Wikimedia Commons

From The Observer:

RETHINKING THE WEB Jaron Lanier, pictured here in 1999, was an early proponent of the Internet’s open culture. His new book examines the downsides.