Google’s oft-quoted corporate mantra, “don’t be evil”, reminds us to remain vigilant even if the company believes it does good and can do no wrong.

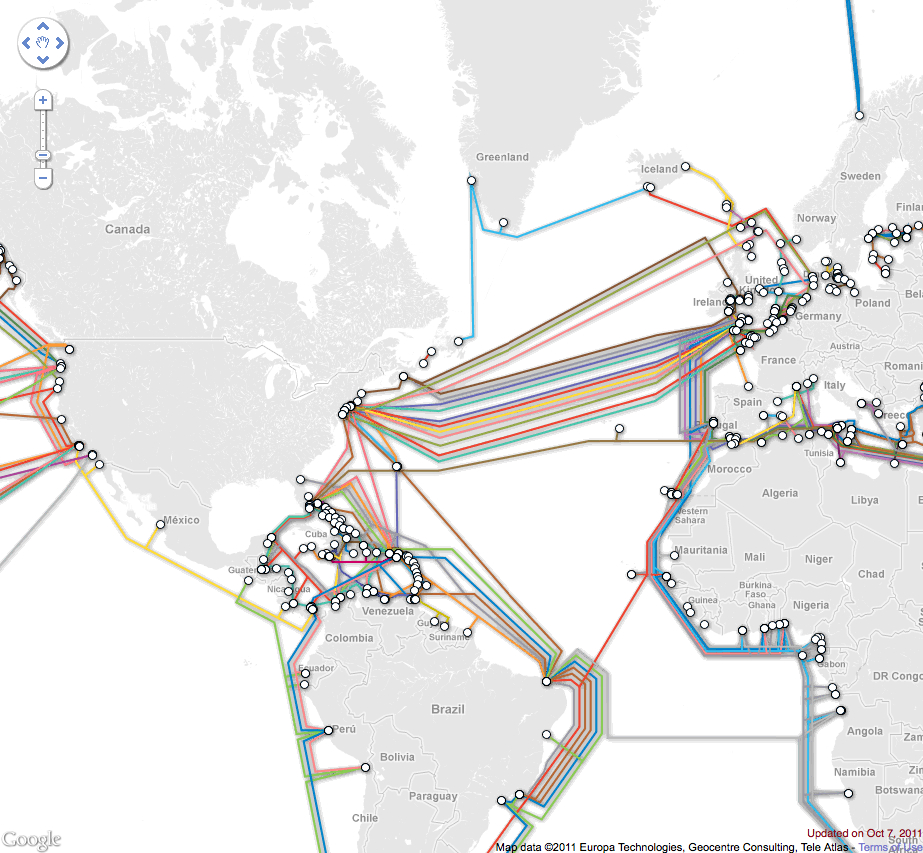

Google serves up countless search results to ease our never-ending thirst for knowledge, deals, news, quotes, jokes, user manuals, contacts, products and so on. This is clearly of tremendous benefit to us, to Google and to Google’s advertisers. Of course, in fulfilling our searches Google collects equally staggering amounts of information about us. Increasingly the company will know where we are, what we like and dislike, what we prefer, what we do, where we travel, with whom and why, who our friends are, what we read, what we buy.

As Jaron Lanier remarked in a recent post, there is a fine line between being a global index to the world’s free and open library of information and being the paid gatekeeper to our collective knowledge and hoarder of our collective online (and increasingly offline) behaviors, tracks and memories. We have already seen how Google, and others, can personalize search results based on our previous tracks thus filtering and biasing what we see and read, limiting our exposure to alternate views and opinions.

It’s quite easy to imagine a rather more dystopian view of a society gone awry, manipulated by a not-so-benevolent Google when, eventually, founders Brin and Page retire to their vacation bases on the moon.

With this in mind, Daniel Soar over at the London Review of Books reviews several recent books about Google and offers some interesting insights.

[div class=attrib]London Review of Books:[end-div]

This spring, the billionaire Eric Schmidt announced that there were only four really significant technology companies: Apple, Amazon, Facebook and Google, the company he had until recently been running. People believed him. What distinguished his new ‘gang of four’ from the generation it had superseded – companies like Intel, Microsoft, Dell and Cisco, which mostly exist to sell gizmos and gadgets and innumerable hours of expensive support services to corporate clients – was that the newcomers sold their products and services to ordinary people. Since there are more ordinary people in the world than there are businesses, and since there’s nothing that ordinary people don’t want or need, or can’t be persuaded they want or need when it flashes up alluringly on their screens, the money to be made from them is virtually limitless. Together, Schmidt’s four companies are worth more than half a trillion dollars. The technology sector isn’t as big as, say, oil, but it’s growing, as more and more traditional industries – advertising, travel, real estate, used cars, new cars, porn, television, film, music, publishing, news – are subsumed into the digital economy. Schmidt, who as the ex-CEO of a multibillion-dollar corporation had learned to take the long view, warned that not all four of his disruptive gang could survive. So – as they all converge from their various beginnings to compete in the same area, the place usually referred to as ‘the cloud’, a place where everything that matters is online – the question is: who will be the first to blink?

If the company that falters is Google, it won’t be because it didn’t see the future coming. Of Schmidt’s four technology juggernauts, Google has always been the most ambitious, and the most committed to getting everything possible onto the internet, its mission being ‘to organise the world’s information and make it universally accessible and useful’. Its ubiquitous search box has changed the way information can be got at to such an extent that ten years after most people first learned of its existence you wouldn’t think of trying to find out anything without typing it into Google first. Searching on Google is automatic, a reflex, just part of what we do. But an insufficiently thought-about fact is that in order to organise the world’s information Google first has to get hold of the stuff. And in the long run ‘the world’s information’ means much more than anyone would ever have imagined it could. It means, of course, the totality of the information contained on the World Wide Web, or the contents of more than a trillion webpages (it was a trillion at the last count, in 2008; now, such a number would be meaningless). But that much goes without saying, since indexing and ranking webpages is where Google began when it got going as a research project at Stanford in 1996, just five years after the web itself was invented. It means – or would mean, if lawyers let Google have its way – the complete contents of every one of the more than 33 million books in the Library of Congress or, if you include slightly varying editions and pamphlets and other ephemera, the contents of the approximately 129,864,880 books published in every recorded language since printing was invented. It means every video uploaded to the public internet, a quantity – if you take the Google-owned YouTube alone – that is increasing at the rate of nearly an hour of video every second.

[div class=attrib]Read more here.[end-div]

The world will miss Steve Jobs.

Tomas Tranströmer is one of Sweden’s leading poets. He studied poetry and psychology at the University of Stockholm. Tranströmer was awarded the 2011 Nobel Prize for Literature “because, through his condensed, translucent images, he gives us fresh access to reality”.

We live in violent times. Or do we?

[div class=attrib]From the Economist:[end-div]

It is undeniable that there is ever-increasing societal pressure on children to perform, compete, achieve and succeed, and to do so at ever younger ages. However, while average college admission test scores have improved, it’s also arguable that admission standards have dropped. So, the picture painted by James Atlas in the article below is far from clear. Nonetheless, it’s disturbing that our children get less and less time to dream, play, explore and get dirty.

[div class=attrib]From Jonathan Jones over at the Guardian:[end-div]

The Autumnal Equinox finally ushers in some cooler temperatures for the northern hemisphere, and with that we reflect on this most human of seasons courtesy of a poem by Archibald MacLeish.

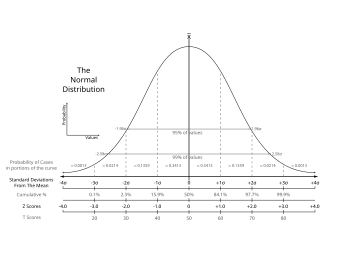

Test grades once measured student performance. Nowadays test grades are used to measure teacher and parent, educational institution and even national performance. Gary Gutting over at the Stone forum has some instructive commentary.

This season’s Beaujolais Nouveau is just over a month away, so what better way to pave the road to French wines than a viticultural map? The wine map is based on Harry Beck’s iconic 1930s design for the London Tube (subway) map.

Counterintuitive results show that we are more likely to resist changing our minds when more people tell us that we are wrong. A team of researchers from HP’s Social Computing Research Group found that humans are more likely to change their minds when fewer, rather than more, people disagree with them.

Over the last couple of years a number of researchers have upended conventional wisdom by finding that complex decisions, for instance, those having lots of variables, are better “made” through our emotional system. This flies in the face of the commonly held belief that complexity is best handled by our rational side.

[div class=attrib]From Rationally Speaking:[end-div]

The world of particle physics is agog with recent news of an experiment that shows a very unexpected result – sub-atomic particles traveling faster than the speed of light. If verified and independently replicated the results would violate one of the universe’s fundamental properties described by Einstein in the Special Theory of Relativity. The speed of light — 186,282 miles per second (299,792 kilometers per second) — has long been considered an absolute cosmic speed limit.

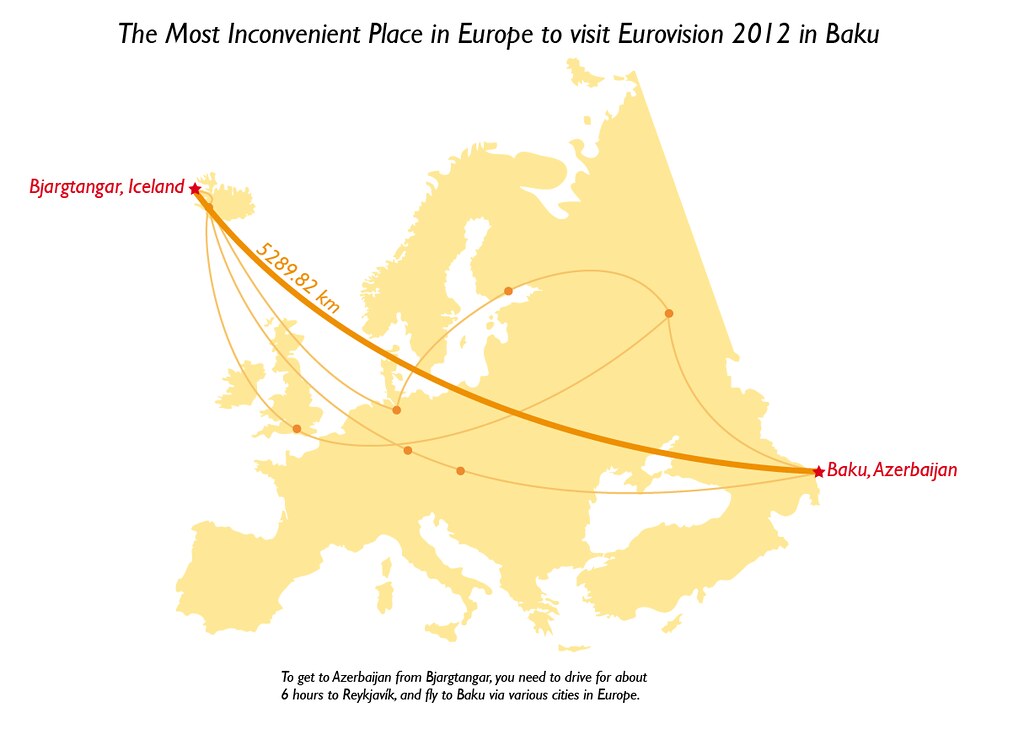

If you grew up in Europe or have spent at least 6 months there over the last 50 years you’ll have collided with the Eurovision Song Contest.

You will have heard of the River Thames, the famous swathe of grey that cuts a watery path through London. You may even have heard of several of London’s prominent canals, such as the Grand Union Canal and Regent’s Canal. But, you probably will not have heard of the mysterious River Fleet that meanders through eerie tunnels beneath the city.

Aside from founding classical mechanics (think universal gravitation and the laws of motion), laying the building blocks of calculus, and inventing the reflecting telescope, Isaac Newton made time for spiritual pursuits. In fact, Newton was a highly religious individual (though a somewhat unorthodox Christian).

[div class=attrib]From The Stone forum, New York Times:[end-div]

A poem by Anthony Hecht this week. On Hecht, Poetry Foundation remarks, “[o]ne of the leading voices of his generation, Anthony Hecht’s poetry is known for its masterful use of traditional forms and linguistic control.”

General scientific consensus suggests that our universe has no pre-defined destiny. While a number of current theories propose anything from a final Big Crunch to an accelerating expansion into cold nothingness, the future plan for the universe is not pre-determined. Unfortunately, our increasingly sophisticated scientific tools are still too meager to test and answer these questions definitively. So, theorists currently seem to have the upper hand. And now yet another theory turns current cosmological thinking on its head by proposing that the future is pre-destined and that it may even reach back into the past to shape the present. Confused? Read on!
