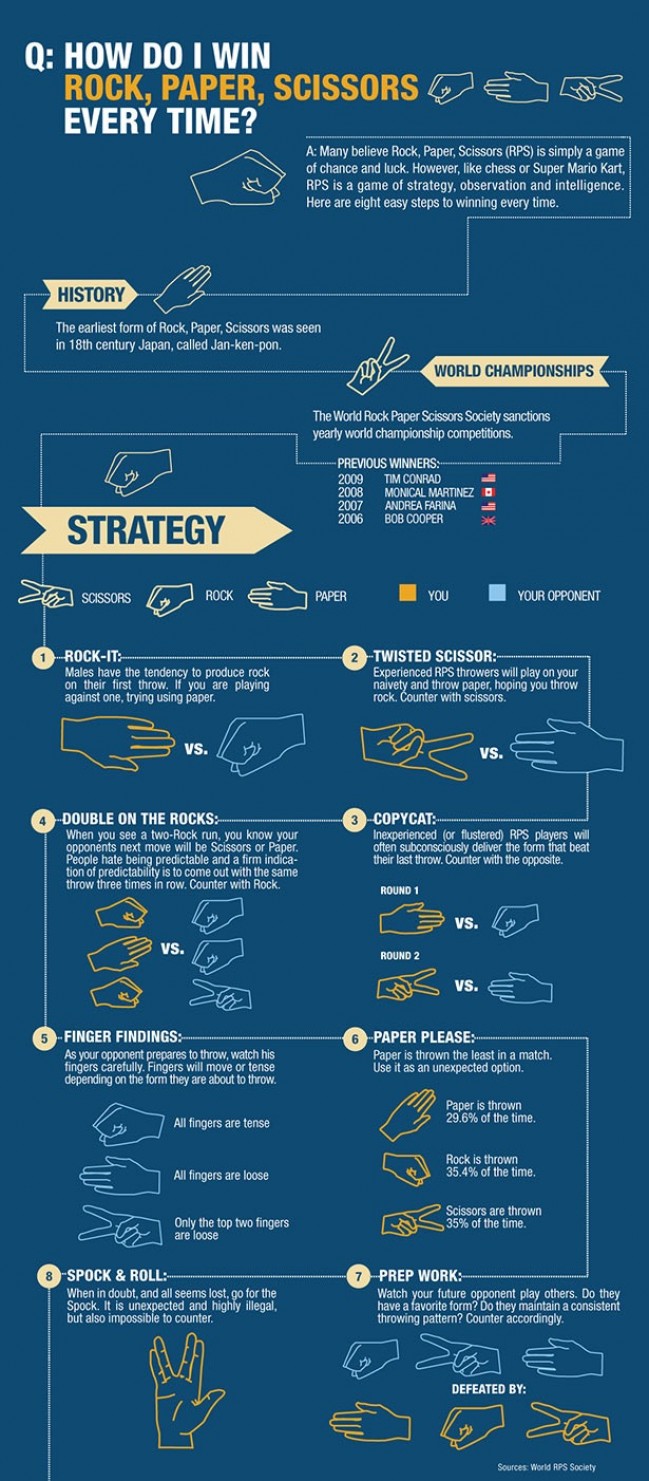

If you’re a competitive person, then this important infographic is for you. Never again will you lose at Rock, Paper, Scissors. Well, almost never. Infographic courtesy of the RPS Society.

Yearly Archives: 2012

MondayPoem: End of Summer

A month into fall, it really does now seem like autumn: leaves are turning and falling, jackets have reappeared, and brisk morning walks are now shrouded in darkness.

So, we turn to the first Poet Laureate of the United States of the new millennium, Stanley Kunitz, to remind us of Summer’s end. Kunitz was anointed Laureate at the age of ninety-five, and died six years later. His published works span almost eight decades of thoughtful creativity.

By Stanley Kunitz

– End of Summer

An agitation of the air,

A perturbation of the light

Admonished me the unloved year

Would turn on its hinge that night.

I stood in the disenchanted field

Amid the stubble and the stones,

Amazed, while a small worm lisped to me

The song of my marrow-bones.

Blue poured into summer blue,

A hawk broke from his cloudless tower,

The roof of the silo blazed, and I knew

That part of my life was over.

Already the iron door of the north

Clangs open: birds, leaves, snows

Order their populations forth,

And a cruel wind blows.

Connectedness: A Force For Good

The internet has the potential to make our current political process obsolete. A review of “The End of Politics” by British politician Douglas Carswell shows how connectedness provides a significant opportunity to reshape the political process, and in some cases completely undermine government, for the good.

[div class=attrib]Charles Moore for the Telegraph:[end-div]

I think I can help you tackle this thought-provoking book. First of all, the title misleads. Enchanting though the idea will sound to many people, this is not about the end of politics. It is, after all, written by a Member of Parliament, Douglas Carswell (Con., Clacton) and he is fascinated by the subject. There’ll always be politics, he is saying, but not as we know it.

Second, you don’t really need to read the first half. It is essentially a passionately expressed set of arguments about why our current political arrangements do not work. It is good stuff, but there is plenty of it in the more independent-minded newspapers most days. The important bit is Part Two, beginning on page 145 and running for a modest 119 pages. It is called “The Birth of iDemocracy”.

Mr Carswell resembles those old barometers in which, in bad weather (Part One), a man with a mackintosh, an umbrella and a scowl comes out of the house. In good weather (Part Two), he pops out wearing a white suit, a straw hat and a broad smile. What makes him happy is the feeling that the digital revolution can restore to the people the power which, in the early days of the universal franchise, they possessed – and much, much more. He believes that the digital revolution has at last harnessed technology to express the “collective brain” of humanity. We develop our collective intelligence by exchanging the properties of our individual ones.

Throughout history, we have been impeded in doing this by physical barriers, such as distance, and by artificial ones, such as priesthoods of bureaucrats and experts. Today, i-this and e-that are cutting out these middlemen. He quotes the internet sage, Clay Shirky: “Here comes everybody”. Mr Carswell directs magnificent scorn at the aides to David Cameron who briefed the media that the Prime Minister now has an iPad app which will allow him, at a stroke of his finger, “to judge the success or failure of ministers with reference to performance-related data”.

The effect of the digital revolution is exactly the opposite of what the aides imagine. Far from now being able to survey everything, always, like God, the Prime Minister – any prime minister – is now in an unprecedentedly weak position in relation to the average citizen: “Digital technology is starting to allow us to choose for ourselves things that until recently Digital Dave and Co decided for us.”

A non-physical business, for instance, can often decide pretty freely where, for the purposes of taxation, it wants to live. Naturally, it will choose benign jurisdictions. Governments can try to ban it from doing so, but they will either fail, or find that they are cutting off their nose to spite their face. The very idea of a “tax base”, on which treasuries depend, wobbles when so much value lies in intellectual property and intellectual property is mobile. So taxes need to be flatter to keep their revenues up. If they are flatter, they will be paid by more people.

Therefore it becomes much harder for government to grow, since most people do not want to pay more.

[div class=attrib]Read the entire article after the jump.[end-div]

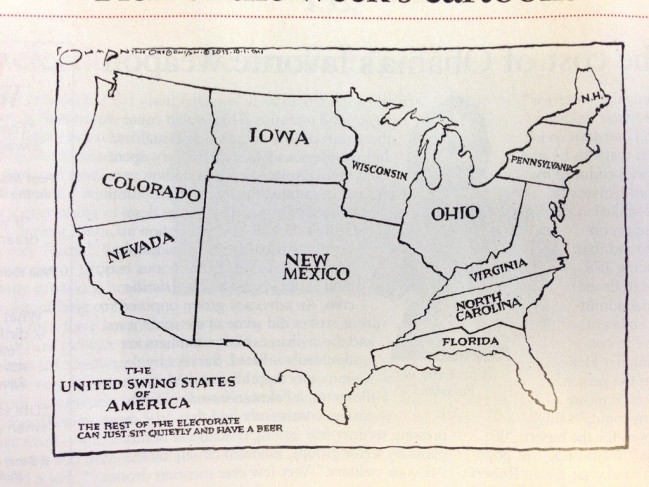

The United Swing States of America

Frank Jacobs over at Strange Maps has found a timely reminder that shows the inordinate influence that a few voters in several crucial States have over the rest of us.

[div class=attrib]From Strange Maps:[end-div]

At the stroke of midnight on November 6th, the 21 registered voters of Dixville Notch, gathering in the wood-panelled Ballot Room of the Balsams Grand Resort Hotel, will have just one minute to cast their vote. Speed is of the essence, if the tiny New Hampshire town is to uphold its reputation (est. 1960) as the first place to declare its results in the US presidential elections.

Later that day, well over 200 million other American voters will face the same choice as the good folks of the Notch: returning Barack Obama to the White House for a second and final four-year term, or electing Mitt Romney as the 45th President of the United States.

The winner of that contest will not be determined by whoever wins a simple majority (i.e. 50% of all votes cast, plus at least one). Like many electoral processes across the world, the system to elect the next president of the United States is riddled with idiosyncrasies and peculiarities – the quadrennial quorum in Dixville Notch being just one example.

Even though most US Presidents have indeed gained office by winning the popular vote, this is not always the case. What is needed is winning the electoral vote. For the US presidential election is an indirect one: depending on the outcome in each of the 50 states, an Electoral College convenes in Washington DC to elect the President.

The total of 538 electors is distributed across the states in proportion to their population size, and is regularly adjusted to reflect increases or decreases. In 2008 Louisiana had 9 electors and South Carolina had 8; reflecting a relative population decrease, resp. increase, those numbers are now reversed.

Maine and Nebraska are the only states to assign their electors proportionally; the other 48 states (and DC) operate on the ABBA principle: however slight the majority of either candidate in any of those states, he would win all its electoral votes. This rather convoluted system underlines the fact that the US Presidential elections are the sum of 50, state-level contests. It also brings into focus that some states are more important than others.
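To see how winner-take-all allocation can part ways with the popular vote, here is a minimal sketch in Python. The three states, their elector counts, the candidates "X" and "Y" and every vote tally are invented for illustration, and the Maine and Nebraska exceptions are ignored.

```python
from collections import Counter


def allocate_winner_take_all(state_results, electors):
    """Give each state's full slate of electors to its popular-vote winner."""
    totals = Counter()
    for state, votes in state_results.items():
        # The candidate with the most votes in the state takes every elector.
        # (A tied state would simply go to the first candidate listed.)
        winner = max(votes, key=votes.get)
        totals[winner] += electors[state]
    return dict(totals)


# Hypothetical elector counts and vote tallies, not real election data.
electors = {"State A": 9, "State B": 8, "State C": 3}
state_results = {
    "State A": {"X": 1_000_001, "Y": 1_000_000},  # X wins by a single vote
    "State B": {"X": 900_001, "Y": 900_000},      # X wins by a single vote
    "State C": {"X": 100_000, "Y": 600_000},      # Y wins by a landslide
}

electoral = allocate_winner_take_all(state_results, electors)

# Sum the nationwide popular vote for comparison.
popular = Counter()
for votes in state_results.values():
    popular.update(votes)

print(electoral)      # {'X': 17, 'Y': 3}
print(dict(popular))  # {'X': 2000002, 'Y': 2500000}
```

In this made-up case, Y leads the nationwide popular vote by almost half a million, yet X takes 17 of the 20 electors on the strength of two one-vote margins.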

Obviously, in this system the more populous states carry much more weight than the emptier ones. Consider the map of the United States, and focus on the 17 states west of the straight-ish line of state borders from North Dakota-Minnesota in the north to Texas-Louisiana in the south. Just two states – Texas and California – outweigh the electoral votes of the 15 others.

So presidential candidates concentrate their efforts on the states where they can hope to gain the greatest advantage. This excludes the fairly large number of states that are solidly ‘blue’ (i.e. Democratic) or ‘red’ (Republican). Texas, for example, is reliably Republican, while California can be expected to fall in the Democratic column.

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Map courtesy of Strange Maps / Big Think.[end-div]

Teenagers and Time

Parents have long known that the sleep-wake cycles of their adolescent offspring are rather different to those of anyone else in the household.

Several new and detailed studies of teenagers tell us why teens are impossible to awaken at 7 am, suddenly awake at 10 pm, and often able to sleep anywhere for stretches of 16 hours.

[div class=attrib]From the Wall Street Journal:[end-div]

Many parents know the scene: The groggy, sleep-deprived teenager stumbles through breakfast and falls asleep over afternoon homework, only to spring to life, wide-eyed and alert, at 10 p.m.—just as Mom and Dad are nodding off.

Fortunately for parents, science has gotten more sophisticated at explaining why, starting at puberty, a teen’s internal sleep-wake clock seems to go off the rails. Researchers are also connecting the dots between the resulting sleep loss and behavior long chalked up to just “being a teenager.” This includes more risk-taking, less self-control, a drop in school performance and a rise in the incidence of depression.

One 2010 study from the University of British Columbia, for example, found that sleep loss can hamper neuron growth in the brain during adolescence, a critical period for cognitive development.

Findings linking sleep loss to adolescent turbulence are “really revelatory,” says Michael Terman, a professor of clinical psychology and psychiatry at Columbia University Medical Center and co-author of “Chronotherapy,” a forthcoming book on resetting the body clock. “These are reactions to a basic change in the way teens’ physiology and behavior is organized.”

Despite such revelations, there are still no clear solutions for the teen-zombie syndrome. Should a parent try to enforce strict wake-up and bedtimes, even though they conflict with the teen’s body clock? Or try to create a workable sleep schedule around that natural cycle? Coupled with a trend toward predawn school start times and peer pressure to socialize online into the wee hours, the result can upset kids’ health, school performance—and family peace.

Jeremy Kern, 16 years old, of San Diego, gets up at 6:30 a.m. for school and tries to fall asleep by 10 p.m. But a heavy load of homework and extracurricular activities, including playing saxophone in his school marching band and in a theater orchestra, often keep him up later.

“I need 10 hours of sleep to not feel tired, and every single day I have to deal with being exhausted,” Jeremy says. He stays awake during early-afternoon classes “by sheer force of will.” And as research shows, sleep loss makes him more emotionally volatile, Jeremy says, like when he recently broke up with his girlfriend: “You are more irrational when you’re sleep deprived. Your emotions are much harder to control.”

Only 7.6% of teens get the recommended 9 to 10 hours of sleep, 23.5% get eight hours and 38.7% are seriously sleep-deprived at six or fewer hours a night, says a 2011 study by the Centers for Disease Control and Prevention.

It’s a biological 1-2-3 punch. First, the onset of puberty brings a median 1.5-hour delay in the body’s release of the sleep-inducing hormone melatonin, says Mary Carskadon, a professor of psychiatry and human behavior at the Brown University medical school and a leading sleep researcher.

Second, “sleep pressure,” or the buildup of the need to sleep as the day wears on, slows during adolescence. That is, kids don’t become sleepy as early. This sleep delay isn’t just a passing impulse: It continues to increase through adolescence, peaking at age 19.5 in girls and age 20.9 in boys, Dr. Carskadon’s research shows.

Finally, teens lose some of their sensitivity to morning light, the kind that spurs awakening and alertness. And they become more reactive to nighttime light, sparking activity later into the evening.

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image courtesy of the Guardian / Alamy.[end-div]

Human Civilization and Weapons Go Hand in Hand

There is great irony in knowing that we humans would not be as civilized were it not for our passion for lethal, projectile weapons.

[div class=attrib]From the New Scientist:[end-div]

IT’S about 2 metres long, made of tough spruce wood and carved into a sharp point at one end. The widest part, and hence its centre of gravity, is in the front third, suggesting it was thrown like a javelin. At 400,000 years old, this is the world’s oldest spear. And, according to a provocative theory, on its carved length rests nothing less than the foundation of human civilisation as we know it, including democracy, class divisions and the modern nation state.

At the heart of this theory is a simple idea: the invention of weapons that could kill at a distance meant that power became uncoupled from physical strength. Even the puniest subordinate could now kill an alpha male, with the right weapon and a reasonable aim. Those who wanted power were forced to obtain it by other means – persuasion, cunning, charm – and so began the drive for the cognitive attributes that make us human. “In short, 400,000 years of evolution in the presence of lethal weapons gave rise to Homo sapiens,” says Herbert Gintis, an economist at the Santa Fe Institute in New Mexico who studies the evolution of social complexity and cooperation.

The puzzle of how humans became civilised has received new impetus from studies of the evolution of social organisation in other primates. These challenge the long-held view that political structure is a purely cultural phenomenon, suggesting that genes play a role too. If they do, the fact that we alone of all the apes have built highly complex societies becomes even more intriguing. Earlier this year, an independent institute called the Ernst Strüngmann Forum assembled a group of scientists in Frankfurt, Germany, to discuss how this complexity came about. Hot debate centred on the possibility that, at pivotal points in history, advances in lethal weapons technology drove human societies to evolve in new directions.

The idea that weapons have catalysed social change came to the fore three decades ago, when British anthropologist James Woodburn spent time with the Hadza hunter-gatherers of Tanzania. Their lifestyle, which has not changed in millennia, is thought to closely resemble that of our Stone Age ancestors, and Woodburn observed that they are fiercely egalitarian. Although the Hadza people include individuals who take a lead in different arenas, no one person has overriding authority. They also have mechanisms for keeping their leaders from growing too powerful – not least, the threat that a bully could be ambushed or killed in his sleep. The hunting weapon, Woodburn suggested, acts as an equaliser.

Some years later, anthropologist Christopher Boehm at the University of Southern California pointed out that the social organisation of our closest primate relative, the chimpanzee, is very different. They live in hierarchical, mixed-sex groups in which the alpha male controls access to food and females. In his 2000 book, Hierarchy in the Forest, Boehm proposed that egalitarianism arose in early hominin societies as a result of the reversal of this strength-based dominance hierarchy – made possible, in part, by projectile weapons. However, in reviving Woodburn’s idea, Boehm also emphasised the genetic heritage that we share with chimps. “We are prone to the formation of hierarchies, but also prone to form alliances in order to keep from being ruled too harshly or arbitrarily,” he says. At the Strüngmann forum, Gintis argued that this inherent tension accounts for much of human history, right up to the present day.

[div class=attrib]Read the entire article following the jump.[end-div]

[div class=attrib]Image: M777 howitzer. Courtesy of Wikipedia.[end-div]

Want Your Kids to Be Conservative or Liberal?

Researchers have confirmed what we already know: Parents who endorse a more authoritarian parenting style towards their toddlers are more likely to have children who are ideologically conservative when they reach age 18; parents who support more egalitarian parenting are more likely to have children who grow up to be liberal.

[div class=attrib]From the Pacific Standard:[end-div]

Parents: Do you find yourselves arguing with your adult children over who deserves to win the upcoming election? Does it confuse and frustrate you to realize your political viewpoints are so different?

Newly published research suggests you may only have yourself to blame.

Providing the best evidence yet to back up a decades-old theory, researchers writing in the journal Psychological Science report a link between a mother’s attitude toward parenting and the political ideology her child eventually adopts. In short, authoritarian parents are more prone to produce conservatives, while those who gave their kids more latitude are more likely to produce liberals.

This dynamic was theorized as early as 1950. But until now, almost all the research supporting it has been based on retrospective reports, with parents assessing their child-rearing attitudes in hindsight.

This new study, by a team led by psychologist R. Chris Fraley of the University of Illinois at Urbana-Champaign, begins with new mothers describing their intentions and approach in 1991, and ends with a survey of their children 18 years later. In between, it features an assessment of the child’s temperament at age 4.

The study looked at roughly 700 American children and their parents, who were recruited for the National Institute of Child Health and Human Development’s Study of Early Child Care and Youth Development. When each child was one month old, his or her mother completed a 30-item questionnaire designed to reveal her approach to parenting.

Those who strongly agreed with such statements as “the most important thing to teach children is absolute obedience to whoever is in authority” were categorized as holding authoritarian parenting attitudes. Those who robustly endorsed such sentiments as “children should be allowed to disagree with their parents” were categorized as holding egalitarian parenting attitudes.

When their kids were 54 months old, the mothers assessed their child’s temperament by answering 80 questions about their behavior. The children were evaluated for such traits as shyness, restlessness, attentional focusing (determined by their ability to follow directions and complete tasks) and fear.

Finally, at age 18, the youngsters completed a 28-item survey measuring their political attitudes on a liberal-to-conservative scale.

“Parents who endorsed more authoritarian parenting attitudes when their children were one month old were more likely to have children who were conservative in their ideologies at age 18,” the researchers report. “Parents who endorsed more egalitarian parenting attitudes were more likely to have children who were liberal.”

Temperament at age 4—which, of course, was very likely impacted by those parenting styles—was also associated with later ideological leanings.

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image courtesy of the Daily Show with Jon Stewart and the Colbert Report via Wired.[end-div]

LBPD – Love of Books Personality Disorder

Author Joe Queenan explains that he may have read over 6,000 books because, as he puts it, he “find[s] ‘reality’ a bit of a disappointment”.

[div class=attrib]From the Wall Street Journal:[end-div]

I started borrowing books from a roving Quaker City bookmobile when I was 7 years old. Things quickly got out of hand. Before I knew it I was borrowing every book about the Romans, every book about the Apaches, every book about the spindly third-string quarterback who comes off the bench in the fourth quarter to bail out his team. I had no way of knowing it at the time, but what started out as a harmless juvenile pastime soon turned into a lifelong personality disorder.

Fifty-five years later, with at least 6,128 books under my belt, I still organize my daily life—such as it is—around reading. As a result, decades go by without my windows getting washed.

My reading habits sometimes get a bit loopy. I often read dozens of books simultaneously. I start a book in 1978 and finish it 34 years later, without enjoying a single minute of the enterprise. I absolutely refuse to read books that critics describe as “luminous” or “incandescent.” I never read books in which the hero went to private school or roots for the New York Yankees. I once spent a year reading nothing but short books. I spent another year vowing to read nothing but books I picked off the library shelves with my eyes closed. The results were not pretty.

I even tried to spend an entire year reading books I had always suspected I would hate: “Middlemarch,” “Look Homeward, Angel,” “Babbitt.” Luckily, that project ran out of gas quickly, if only because I already had a 14-year-old daughter when I took a crack at “Lolita.”

Six thousand books is a lot of reading, true, but the trash like “Hell’s Belles” and “Kid Colt and the Legend of the Lost Arroyo” and even “Part-Time Harlot, Full-Time Tramp” that I devoured during my misspent teens really puff up the numbers. And in any case, it is nowhere near a record. Winston Churchill supposedly read a book every day of his life, even while he was saving Western Civilization from the Nazis. This is quite an accomplishment, because by some accounts Winston Churchill spent all of World War II completely hammered.

A case can be made that people who read a preposterous number of books are not playing with a full deck. I prefer to think of us as dissatisfied customers. If you have read 6,000 books in your lifetime, or even 600, it’s probably because at some level you find “reality” a bit of a disappointment. People in the 19th century fell in love with “Ivanhoe” and “The Count of Monte Cristo” because they loathed the age they were living through. Women in our own era read “Pride and Prejudice” and “Jane Eyre” and even “The Bridges of Madison County”—a dimwit, hayseed reworking of “Madame Bovary”—because they imagine how much happier they would be if their husbands did not spend quite so much time with their drunken, illiterate golf buddies down at Myrtle Beach. A blind bigamist nobleman with a ruined castle and an insane, incinerated first wife beats those losers any day of the week. Blind, two-timing noblemen never wear belted shorts.

Similarly, finding oneself at the epicenter of a vast, global conspiracy involving both the Knights Templar and the Vatican would be a huge improvement over slaving away at the Bureau of Labor Statistics for the rest of your life or being married to someone who is drowning in dunning notices from Williams-Sonoma. No matter what they may tell themselves, book lovers do not read primarily to obtain information or to while away the time. They read to escape to a more exciting, more rewarding world. A world where they do not hate their jobs, their spouses, their governments, their lives. A world where women do not constantly say things like “Have a good one!” and “Sounds like a plan!” A world where men do not wear belted shorts. Certainly not the Knights Templar.

I read books—mostly fiction—for at least two hours a day, but I also spend two hours a day reading newspapers and magazines, gathering material for my work, which consists of ridiculing idiots or, when they are not available, morons. I read books in all the obvious places—in my house and office, on trains and buses and planes—but I’ve also read them at plays and concerts and prizefights, and not just during the intermissions. I’ve read books while waiting for friends to get sprung from the drunk tank, while waiting for people to emerge from comas, while waiting for the Iceman to cometh.

[div class=attrib]Read the entire article following the jump.[end-div]

[div class=attrib]Image courtesy of Southern Illinois University.[end-div]

The Great Blue Monday Fallacy

A yearlong survey of moodiness shows that the so-called Monday Blues may be more figment of the imagination than fact.

[div class=attrib]From the New York Times:[end-div]

DESPITE the beating that Mondays have taken in pop songs — Fats Domino crooned “Blue Monday, how I hate blue Monday” — the day does not deserve its gloomy reputation.

Two colleagues and I recently published an analysis of a remarkable yearlong survey by the Gallup Organization, which conducted 1,000 live interviews a day, asking people across the United States to recall their mood in the prior day. We scoured the data for evidence that Monday was bluer than Tuesday or Wednesday. We couldn’t find any.

Mood was evaluated with several adjectives measuring positive or negative feelings. Spanish-only speakers were queried in Spanish. Interviewers spoke to people in every state on cellphones and land lines. The data unequivocally showed that Mondays are as pleasant to Americans as the three days that follow, and only a trifle less joyful than Fridays. Perhaps no surprise, people generally felt good on the weekend — though for retirees, the distinction between weekend and weekdays was only modest.

Likewise, day-of-the-week mood was gender-blind. Over all, women assessed their daily moods more negatively than men did, but relative changes from day to day were similar for both sexes.

And yet still, the belief in blue Mondays persists.

Several years ago, in another study, I examined expectations about mood and day of the week: two-thirds of the sample nominated Monday as the “worst” day of the week. Other research has confirmed that this sentiment is widespread, despite the fact that, well, we don’t really feel any gloomier on that day.

The question is, why? Why do we believe something that our own immediate experience indicates simply isn’t true?

As it turns out, the blue Monday mystery highlights a phenomenon familiar to behavioral scientists: that beliefs or judgments about experience can be at odds with actual experience. Indeed, the disconnection between beliefs and experience is common.

Vacations, for example, are viewed more pleasantly after they are over compared with how they were experienced at the time. And motorists who drive fancy cars report having more fun driving than those who own more modest vehicles, though in-car monitoring shows this isn’t the case. The same is often true in reverse as well: we remember pain or symptoms of illness at higher levels than real-time experience suggests, in part because we ignore symptom-free periods in between our aches and pains.

HOW do we make sense of these findings? The human brain has vast, but limited, capacities to store, retrieve and process information. Yet we are often confronted with questions that challenge these capacities. And this is often when the disconnect between belief and experience occurs. When information isn’t available for answering a question — say, when it did not make it into our memories in the first place — we use whatever information is available, even if it isn’t particularly relevant to the question at hand.

When asked about pain for the last week, most people cannot completely remember all of its ups and downs over seven days. However, we are likely to remember it at its worst and may use that as a way of summarizing pain for the entire week. When asked about our current satisfaction with life, we may focus on the first things that come to mind — a recent spat with a spouse or maybe a compliment from the boss at work.

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image: “I Don’t Like Mondays” single cover. Courtesy of The Boomtown Rats / Ensign Records.[end-div]

La Serrata: Why the Rise Is Always Followed by the Fall

Humans do learn from their mistakes. Yet, history does repeat. Nations will continue to rise, and inevitably fall. Why? Chrystia Freeland, author of “Plutocrats: The Rise of the New Global Super-Rich and the Fall of Everyone Else,” offers an insightful analysis based in part on 14th century Venice.

[div class=attrib]From the New York Times:[end-div]

IN the early 14th century, Venice was one of the richest cities in Europe. At the heart of its economy was the colleganza, a basic form of joint-stock company created to finance a single trade expedition. The brilliance of the colleganza was that it opened the economy to new entrants, allowing risk-taking entrepreneurs to share in the financial upside with the established businessmen who financed their merchant voyages.

Venice’s elites were the chief beneficiaries. Like all open economies, theirs was turbulent. Today, we think of social mobility as a good thing. But if you are on top, mobility also means competition. In 1315, when the Venetian city-state was at the height of its economic powers, the upper class acted to lock in its privileges, putting a formal stop to social mobility with the publication of the Libro d’Oro, or Book of Gold, an official register of the nobility. If you weren’t on it, you couldn’t join the ruling oligarchy.

The political shift, which had begun nearly two decades earlier, was so striking a change that the Venetians gave it a name: La Serrata, or the closure. It wasn’t long before the political Serrata became an economic one, too. Under the control of the oligarchs, Venice gradually cut off commercial opportunities for new entrants. Eventually, the colleganza was banned. The reigning elites were acting in their immediate self-interest, but in the longer term, La Serrata was the beginning of the end for them, and for Venetian prosperity more generally. By 1500, Venice’s population was smaller than it had been in 1330. In the 17th and 18th centuries, as the rest of Europe grew, the city continued to shrink.

The story of Venice’s rise and fall is told by the scholars Daron Acemoglu and James A. Robinson, in their book “Why Nations Fail: The Origins of Power, Prosperity, and Poverty,” as an illustration of their thesis that what separates successful states from failed ones is whether their governing institutions are inclusive or extractive. Extractive states are controlled by ruling elites whose objective is to extract as much wealth as they can from the rest of society. Inclusive states give everyone access to economic opportunity; often, greater inclusiveness creates more prosperity, which creates an incentive for ever greater inclusiveness.

The history of the United States can be read as one such virtuous circle. But as the story of Venice shows, virtuous circles can be broken. Elites that have prospered from inclusive systems can be tempted to pull up the ladder they climbed to the top. Eventually, their societies become extractive and their economies languish.

That was the future predicted by Karl Marx, who wrote that capitalism contained the seeds of its own destruction. And it is the danger America faces today, as the 1 percent pulls away from everyone else and pursues an economic, political and social agenda that will increase that gap even further — ultimately destroying the open system that made America rich and allowed its 1 percent to thrive in the first place.

You can see America’s creeping Serrata in the growing social and, especially, educational chasm between those at the top and everyone else. At the bottom and in the middle, American society is fraying, and the children of these struggling families are lagging the rest of the world at school.

Economists point out that the woes of the middle class are in large part a consequence of globalization and technological change. Culture may also play a role. In his recent book on the white working class, the libertarian writer Charles Murray blames the hollowed-out middle for straying from the traditional family values and old-fashioned work ethic that he says prevail among the rich (whom he castigates, but only for allowing cultural relativism to prevail).

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image: The Grand Canal and the Church of the Salute (1730) by Canaletto. Courtesy of Museum of Fine Arts, Houston / WikiCommons.[end-div]

The Tubes of the Internets

Google lets the world peek at the many tubes that form a critical part of its search engine infrastructure — functional and pretty too.

[div class=attrib]From the Independent:[end-div]

They are the cathedrals of the information age – with the colour scheme of an adventure playground.

For the first time, Google has allowed cameras into its high security data centres – the beating hearts of its global network that allow the web giant to process 3 billion internet searches every day.

Only a small band of Google employees have ever been inside the doors of the data centres, which are hidden away in remote parts of North America, Belgium and Finland.

Their workplaces glow with the blinking lights of LEDs on internet servers reassuring technicians that all is well with the web, and hum to the sound of hundreds of giant fans and thousands of gallons of water, that stop the whole thing overheating.

“Very few people have stepped inside Google’s data centers [sic], and for good reason: our first priority is the privacy and security of your data, and we go to great lengths to protect it, keeping our sites under close guard,” the company said yesterday. Row upon row of glowing servers send and receive information from 20 billion web pages every day, while towering libraries store all the data that Google has ever processed – in case of a system failure.

With data speeds 200,000 times faster than an ordinary home internet connection, Google’s centres in America can share huge amounts of information with European counterparts like the remote, snow-packed Hamina centre in Finland, in the blink of an eye.

[div class=attrib]Read the entire article after the jump, or take a look at more images from the bowels of Google after the leap.[end-div]

3D Printing Coming to a Home Near You

It seems that not too long ago we were writing about pioneering research into 3D printing and start-up businesses showing off their industrially focused, prototype 3D printers. Now, only a couple of years later, there is a growing consumer market, home-based printers for under $3,000, and even a 3D printing expo, 3D Printshow. The future looks bright and very much three-dimensional.

[div class=attrib]From the Independent:[end-div]

It is Star Trek science made reality, with the potential for production-line replacement body parts, aeronautical spares, fashion, furniture and virtually any other object on demand. It is 3D printing, and now people in Britain can try it for themselves.

The cutting-edge technology, which layers plastic resin in a manner similar to an inkjet printer to create 3D objects, is on its way to becoming affordable for home use. Some of its possibilities will be on display at the UK’s first 3D-printing trade show from Friday to next Sunday at The Brewery in central London.

Clothes made using the technique will be exhibited in a live fashion show, which will include the unveiling of a hat designed for the event by the milliner Stephen Jones, and a band playing a specially composed score on 3D-printed musical instruments.

Some 2,000 consumers are expected to join 1,000 people from the burgeoning industry to see what the technique has to offer, including jewellery and art. A 3D body scanner, which can reproduce a “mini” version of the person scanned, will also be on display.

Workshops run by Jason Lopes of Legacy Effects, which provided 3D-printed models and props for cinema blockbusters such as the Iron Man series and Snow White and the Huntsman, will add a sprinkling of Hollywood glamour.

Kerry Hogarth, the woman behind 3D Printshow, said yesterday she aims to showcase the potential of the technology for families. While prices for printers start at around £1,500 – with DIY kits for less – they are expected to drop steadily over the coming year. One workshop, run by the Birmingham-based Black Country Atelier, will invite people to design a model vehicle and then see the result “printed” off for them to take home.

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image: 3D scanning and printing. Courtesy of Wikipedia.[end-div]

The Promise of Quantum Computation

Advances in quantum physics and in the associated realm of quantum information promise to revolutionize computing. Imagine a computer several trillion times faster than present-day supercomputers: that’s where we are heading.

[div class=attrib]From the New York Times:[end-div]

THIS summer, physicists celebrated a triumph that many consider fundamental to our understanding of the physical world: the discovery, after a multibillion-dollar effort, of the Higgs boson.

Given its importance, many of us in the physics community expected the event to earn this year’s Nobel Prize in Physics. Instead, the award went to achievements in a field far less well known and vastly less expensive: quantum information.

It may not catch as many headlines as the hunt for elusive particles, but the field of quantum information may soon answer questions even more fundamental — and upsetting — than the ones that drove the search for the Higgs. It could well usher in a radical new era of technology, one that makes today’s fastest computers look like hand-cranked adding machines.

The basis for both the work behind the Higgs search and quantum information theory is quantum physics, the most accurate and powerful theory in all of science. With it we created remarkable technologies like the transistor and the laser, which, in time, were transformed into devices — computers and iPhones — that reshaped human culture.

But the very usefulness of quantum physics masked a disturbing dissonance at its core. There are mysteries — summed up neatly in Werner Heisenberg’s famous adage “atoms are not things” — lurking at the heart of quantum physics suggesting that our everyday assumptions about reality are no more than illusions.

Take the “principle of superposition,” which holds that things at the subatomic level can be literally two places at once. Worse, it means they can be two things at once. This superposition animates the famous parable of Schrödinger’s cat, whereby a wee kitty is left both living and dead at the same time because its fate depends on a superposed quantum particle.

For decades such mysteries were debated but never pushed toward resolution, in part because no resolution seemed possible and, in part, because useful work could go on without resolving them (an attitude sometimes called “shut up and calculate”). Scientists could attract money and press with ever larger supercolliders while ignoring such pesky questions.

But as this year’s Nobel recognizes, that’s starting to change. Increasingly clever experiments are exploiting advances in cheap, high-precision lasers and atomic-scale transistors. Quantum information studies often require nothing more than some equipment on a table and a few graduate students. In this way, quantum information’s progress has come not by bludgeoning nature into submission but by subtly tricking it to step into the light.

Take the superposition debate. One camp claims that a deeper level of reality lies hidden beneath all the quantum weirdness. Once the so-called hidden variables controlling reality are exposed, they say, the strangeness of superposition will evaporate.

Another camp claims that superposition shows us that potential realities matter just as much as the single, fully manifested one we experience. But what collapses the potential electrons in their two locations into the one electron we actually see? According to this interpretation, it is the very act of looking; the measurement process collapses an ethereal world of potentials into the one real world we experience.

And a third major camp argues that particles can be two places at once only because the universe itself splits into parallel realities at the moment of measurement, one universe for each particle location — and thus an infinite number of ever splitting parallel versions of the universe (and us) are all evolving alongside one another.

These fundamental questions might have lived forever at the intersection of physics and philosophy. Then, in the 1980s, a steady advance of low-cost, high-precision lasers and other “quantum optical” technologies began to appear. With these new devices, researchers, including this year’s Nobel laureates, David J. Wineland and Serge Haroche, could trap and subtly manipulate individual atoms or light particles. Such exquisite control of the nano-world allowed them to design subtle experiments probing the meaning of quantum weirdness.

Soon at least one interpretation, the most common sense version of hidden variables, was completely ruled out.

At the same time new and even more exciting possibilities opened up as scientists began thinking of quantum physics in terms of information, rather than just matter — in other words, asking if physics fundamentally tells us more about our interaction with the world (i.e., our information) than the nature of the world by itself (i.e., matter). And so the field of quantum information theory was born, with very real new possibilities in the very real world of technology.

What does this all mean in practice? Take one area where quantum information theory holds promise, that of quantum computing.

Classical computers use “bits” of information that can be either 0 or 1. But quantum-information technologies let scientists consider “qubits,” quantum bits of information that are both 0 and 1 at the same time. Logic circuits, made of qubits directly harnessing the weirdness of superpositions, allow a quantum computer to calculate vastly faster than anything existing today. A quantum machine using no more than 300 qubits would be a million, trillion, trillion, trillion times faster than the most modern supercomputer.
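To get a feel for the scale behind the 300-qubit figure, the sketch below counts how many classical bit patterns an n-qubit register can hold in superposition, which is 2 raised to the power n. This is standard textbook arithmetic rather than anything taken from the article; the function name `basis_states` is purely illustrative, and the count by itself says nothing about how much faster a real quantum machine would run.

```python
def basis_states(n_qubits: int) -> int:
    """Return the number of classical bit patterns an n-qubit register can superpose."""
    if n_qubits < 0:
        raise ValueError("n_qubits must be non-negative")
    # Each added qubit doubles the number of patterns: 2, 4, 8, ...
    return 2 ** n_qubits


if __name__ == "__main__":
    states = basis_states(300)
    # Python integers are arbitrary precision, so the digits can be counted exactly.
    print(len(str(states)))  # prints 91
```

A 91-digit count of patterns is why even a few hundred qubits are described in such dramatic terms, although turning that state space into a practical speedup depends on the algorithm and the hardware.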

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image: Bloch sphere representation of a qubit, the fundamental building block of quantum computers. Courtesy of Wikipedia.[end-div]

The Half Life of Facts

There is no doubting the ever expanding reach of science and the acceleration of scientific discovery. Yet the accumulation, and for that matter the acceleration in the accumulation, of ever more knowledge does come with a price — many historical facts that we learned as kids are no longer true. This is especially important in areas such as medical research where new discoveries are constantly making obsolete our previous notions of disease and treatment.

A review of The Half-Life of Facts, the new book by Samuel Arbesman, explains why facts should have an expiration date.

[div class=attrib]From Reason:[end-div]

Dinosaurs were cold-blooded. Vast increases in the money supply produce inflation. Increased K-12 spending and lower pupil/teacher ratios boost public school student outcomes. Most of the DNA in the human genome is junk. Saccharin causes cancer and a high fiber diet prevents it. Stars cannot be bigger than 150 solar masses. And by the way, what are the ten most populous cities in the United States?

In the past half century, all of the foregoing facts have turned out to be wrong (except perhaps the one about inflation rates). We’ll revisit the ten biggest cities question below. In the modern world facts change all of the time, according to Samuel Arbesman, author of The Half-Life of Facts: Why Everything We Know Has an Expiration Date.

Arbesman, a senior scholar at the Kauffman Foundation and an expert in scientometrics, looks at how facts are made and remade in the modern world. And since fact-making is speeding up, he worries that most of us don’t keep up to date and base our decisions on facts we dimly remember from school and university classes that turn out to be wrong.

The field of scientometrics – the science of measuring and analyzing science – took off in 1947 when mathematician Derek J. de Solla Price was asked to store a complete set of the Philosophical Transactions of the Royal Society temporarily in his house. He stacked them in order and he noticed that the height of the stacks fit an exponential curve. Price started to analyze all sorts of other kinds of scientific data and concluded in 1960 that scientific knowledge had been growing steadily at a rate of 4.7 percent annually since the 17th century. The upshot was that scientific data was doubling every 15 years.

In 1965, Price exuberantly observed, “All crude measures, however arrived at, show to a first approximation that science increases exponentially, at a compound interest of about 7 percent per annum, thus doubling in size every 10–15 years, growing by a factor of 10 every half century, and by something like a factor of a million in the 300 years which separate us from the seventeenth-century invention of the scientific paper when the process began.” A 2010 study in the journal Scientometrics looked at data between 1907 and 2007 and concluded that so far the “overall growth rate for science still has been at least 4.7 percent per year.”
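The growth rates and doubling times quoted above are linked by ordinary compound-interest arithmetic, which the following minimal sketch works through. The helper names `doubling_time` and `growth_factor` are illustrative and not from the article, and the calculation assumes steady annual compounding at the stated rate.

```python
import math


def doubling_time(annual_rate: float) -> float:
    """Years needed to double at a constant compound annual growth rate."""
    if annual_rate <= 0:
        raise ValueError("annual_rate must be positive")
    return math.log(2) / math.log(1 + annual_rate)


def growth_factor(annual_rate: float, years: float) -> float:
    """Total multiplication after the given number of years of compound growth."""
    return (1 + annual_rate) ** years


if __name__ == "__main__":
    for rate in (0.047, 0.07):
        print(f"{rate:.1%} per year: doubles in {doubling_time(rate):.1f} years, "
              f"grows {growth_factor(rate, 50):.1f}x in 50 years")
```

Under these assumptions, 4.7 percent a year doubles in roughly 15 years and comes out close to tenfold over half a century, while 7 percent a year doubles in roughly 10 years, which brackets the "10–15 years" range in Price's remark.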

Since scientific knowledge is still growing by a factor of ten every 50 years, it should not be surprising that lots of facts people learned in school and universities have been overturned and are now out of date. But at what rate do former facts disappear? Arbesman applies the concept of half-life, the time required for half the atoms of a given amount of a radioactive substance to disintegrate, to the dissolution of facts. For example, the half-life of the radioactive isotope strontium-90 is just over 29 years. Applying the concept of half-life to facts, Arbesman cites research that looked into the decay in the truth of clinical knowledge about cirrhosis and hepatitis. “The half-life of truth was 45 years,” reported the researchers.

In other words, half of what physicians thought they knew about liver diseases was wrong or obsolete 45 years later. As interesting and persuasive as this example is, Arbesman’s book would have been strengthened by more instances drawn from the scientific literature.
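The half-life idea can be made concrete with the usual exponential decay formula: after t years, the surviving fraction is one half raised to the power t divided by the half-life. The sketch below applies it to the 45-year figure quoted for the liver-disease literature. The function name `fraction_still_valid` is hypothetical, and treating facts as decaying at a perfectly steady rate is a simplifying assumption, not a claim made by the researchers.

```python
def fraction_still_valid(years: float, half_life: float = 45.0) -> float:
    """Fraction of an initial body of facts expected to survive after `years`.

    Assumes simple exponential decay with the given half-life (in years).
    """
    if half_life <= 0:
        raise ValueError("half_life must be positive")
    if years < 0:
        raise ValueError("years must be non-negative")
    return 0.5 ** (years / half_life)


if __name__ == "__main__":
    for years in (0, 15, 45, 90):
        share = fraction_still_valid(years)
        print(f"after {years:>2} years: {share:.0%} still considered true")
```

On this model, half of the facts survive one half-life and a quarter survive two, so a physician trained 90 years earlier would be working with about a quarter of the original knowledge intact.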

Facts are being manufactured all of the time, and, as Arbesman shows, many of them turn out to be wrong. Checking each by each is how the scientific process is supposed to work, i.e., experimental results need to be replicated by other researchers. How many of the findings in the 845,175 articles published in 2009 and recorded in PubMed, the free online medical database, were actually replicated? Not all that many. In 2011, a disheartening study in Nature reported that a team of researchers over ten years was able to reproduce the results of only six out of 53 landmark papers in preclinical cancer research.

[div class=attrib]Read the entire article after the jump.[end-div]

Remembering the Future

Memory is a very useful cognitive tool. After all, where would we be if we had no recall of our family, friends, foods, words, tasks and dangers?

But it turns out that memory may also help us imagine the future, another very important human trait.

[div class=attrib]From the New Scientist:[end-div]

WHEN thinking about the workings of the mind, it is easy to imagine memory as a kind of mental autobiography – the private book of you. To relive the trepidation of your first day at school, say, you simply dust off the cover and turn to the relevant pages. But there is a problem with this idea. Why are the contents of that book so unreliable? It is not simply our tendency to forget key details. We are also prone to “remember” events that never actually took place, almost as if a chapter from another book has somehow slipped into our autobiography. Such flaws are puzzling if you believe that the purpose of memory is to record your past – but they begin to make sense if it is for something else entirely.

That is exactly what memory researchers are now starting to realise. They believe that human memory didn’t evolve so that we could remember but to allow us to imagine what might be. This idea began with the work of Endel Tulving, now at the Rotman Research Institute in Toronto, Canada, who discovered a person with amnesia who could remember facts but not episodic memories relating to past events in his life. Crucially, whenever Tulving asked him about his plans for that evening, the next day or the summer, his mind went blank – leading Tulving to suspect that foresight was the flipside of episodic memory.

Subsequent brain scans supported the idea, suggesting that every time we think about a possible future, we tear up the pages of our autobiographies and stitch together the fragments into a montage that represents the new scenario. This process is the key to foresight and ingenuity, but it comes at the cost of accuracy, as our recollections become frayed and shuffled along the way. “It’s not surprising that we confuse memories and imagination, considering that they share so many processes,” says Daniel Schacter, a psychologist at Harvard University.

Over the next 10 pages, we will show how this theory has brought about a revolution in our understanding of memory. Given the many survival benefits of being able to imagine the future, for instance, it is not surprising that other creatures show a rudimentary ability to think in this way (“Do animals ever forget?”). Memory’s role in planning and problem solving, meanwhile, suggests that problems accessing the past may lie behind mental illnesses like depression and post-traumatic stress disorder, offering a new approach to treating these conditions (“Boosting your mental fortress”). Equally, a growing understanding of our sense of self can explain why we are so selective in the events that we weave into our life story – again showing definite parallels with the way we imagine the future (“How the brain spins your life story”). The work might even suggest some dieting tips (“Lost in the here and now”).

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image: The Persistence of Memory, 1931. Salvador Dalí. Courtesy of Salvador Dalí, Gala-Salvador Dalí Foundation/Artists Rights Society.[end-div]

Mourning the Lost Art of Handwriting

In this age of digital everything, handwriting does still matter. Some of you may even still have a treasured fountain pen. Novelist Philip Hensher suggests why handwriting has import and value in his new book, The Missing Ink.

[div class=attrib]From the Guardian:[end-div]

About six months ago, I realised that I had no idea what the handwriting of a good friend of mine looked like. I had known him for over a decade, but somehow we had never communicated using handwritten notes. He had left voice messages for me, emailed me, sent text messages galore. But I don’t think I had ever had a letter from him written by hand, a postcard from his holidays, a reminder of something pushed through my letter box. I had no idea whether his handwriting was bold or crabbed, sloping or upright, italic or rounded, elegant or slapdash.

It hit me that we are at a moment when handwriting seems to be about to vanish from our lives altogether. At some point in recent years, it has stopped being a necessary and inevitable intermediary between people – a means by which individuals communicate with each other, putting a little bit of their personality into the form of their message as they press the ink-bearing point on to the paper. It has started to become just one of many options, and often an unattractive, elaborate one.

For each of us, the act of putting marks on paper with ink goes back as far as we can probably remember. At some point, somebody comes along and tells us that if you make a rounded shape and then join it to a straight vertical line, that means the letter “a”, just like the ones you see in the book. (But the ones in the book have a little umbrella over the top, don’t they? Never mind that, for the moment: this is how we make them for ourselves.) If you make a different rounded shape, in the opposite direction, and a taller vertical line, then that means the letter “b”. Do you see? And then a rounded shape, in the same direction as the first letter, but not joined to anything – that makes a “c”. And off you go.

Actually, I don’t think I have any memory of this initial introduction to the art of writing letters on paper. Our handwriting, like ourselves, seems always to have been there.

But if I don’t have any memory of first learning to write, I have a clear memory of what followed: instructions in refinements, suggestions of how to purify the forms of your handwriting.

You longed to do “joined-up writing”, as we used to call the cursive hand when we were young. Instructed in print letters, I looked forward to the ability to join one letter to another as a mark of huge sophistication. Adult handwriting was unreadable, true, but perhaps that was its point. I saw the loops and impatient dashes of the adult hand as a secret and untrustworthy way of communicating that one day I would master.

There was, also, wanting to make your handwriting more like other people’s. Often, this started with a single letter or figure. In the second year at school, our form teacher had a way of writing a 7 in the European way, with a cross-bar. A world of glamour and sophistication hung on that cross-bar; it might as well have had a beret on, be smoking Gitanes in the maths cupboard.

Your hand is formed by aspiration to the hand of others – by the beautiful italic strokes of a friend which seem altogether wasted on a mere postcard, or a note on your door reading “Dropped by – will come back later”. It’s formed, too, by anti-aspiration, the desire not to be like Denise in the desk behind who reads with her mouth open and whose writing, all bulging “m”s and looping “p”s, contains the atrocity of a little circle on top of every i. Or still more horrible, on occasion, usually when she signs her name, a heart. (There may be men in the world who use a heart-shaped jot, as the dot over the i is called, but I have yet to meet one. Or run a mile from one.)

…

Those other writing apparatuses, mobile phones, occupy a little bit more of the same psychological space as the pen. Ten years ago, people kept their mobile phone in their pockets. Now, they hold them permanently in their hand like a small angry animal, gazing crossly into our faces, in apparent need of constant placation. Clearly, people do regard their mobile phones as, in some degree, an extension of themselves. And yet we have not evolved any of those small, pleasurable pieces of behaviour towards them that seem so ordinary in the case of our pens. If you saw someone sucking one while they thought of the next phrase to text, you would think them dangerously insane.

We have surrendered our handwriting for something more mechanical, less distinctively human, less telling about ourselves and less present in our moments of the highest happiness and the deepest emotion. Ink runs in our veins, and shows the world what we are like. The shaping of thought and written language by a pen, moved by a hand to register marks of ink on paper, has for centuries, millennia, been regarded as key to our existence as human beings. In the past, handwriting has been regarded as almost the most powerful sign of our individuality. In 1847, in an American case, a witness testified without hesitation that a signature was genuine, though he had not seen an example of the handwriting for 63 years: the court accepted his testimony.

Handwriting is what registers our individuality, and the mark which our culture has made on us. It has been seen as the unknowing key to our souls and our innermost nature. It has been regarded as a sign of our health as a society, of our intelligence, and as an object of simplicity, grace, fantasy and beauty in its own right. Yet at some point, the ordinary pleasures and dignity of handwriting are going to be replaced permanently.

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image: Stipula fountain pen. Courtesy of Wikipedia.[end-div]

The Rise of Neurobollocks

For readers of thediagonal in North America “neurobollocks” would roughly translate to “neurobullshit”.

So what is this growing “neuro-trend”, why is there an explosion in “neuro-babble” and all things with a “neuro-” prefix, and is Malcolm Gladwell to blame?

[div class=attrib]From the New Statesman:[end-div]

An intellectual pestilence is upon us. Shop shelves groan with books purporting to explain, through snazzy brain-imaging studies, not only how thoughts and emotions function, but how politics and religion work, and what the correct answers are to age-old philosophical controversies. The dazzling real achievements of brain research are routinely pressed into service for questions they were never designed to answer. This is the plague of neuroscientism – aka neurobabble, neurobollocks, or neurotrash – and it’s everywhere.

In my book-strewn lodgings, one literally trips over volumes promising that “the deepest mysteries of what makes us who we are are gradually being unravelled” by neuroscience and cognitive psychology. (Even practising scientists sometimes make such grandiose claims for a general audience, perhaps urged on by their editors: that quotation is from the psychologist Elaine Fox’s interesting book on “the new science of optimism”, Rainy Brain, Sunny Brain, published this summer.) In general, the “neural” explanation has become a gold standard of non-fiction exegesis, adding its own brand of computer-assisted lab-coat bling to a whole new industry of intellectual quackery that affects to elucidate even complex sociocultural phenomena. Chris Mooney’s The Republican Brain: the Science of Why They Deny Science – and Reality disavows “reductionism” yet encourages readers to treat people with whom they disagree more as pathological specimens of brain biology than as rational interlocutors.

The New Atheist polemicist Sam Harris, in The Moral Landscape, interprets brain and other research as showing that there are objective moral truths, enthusiastically inferring – almost as though this were the point all along – that science proves “conservative Islam” is bad.

Happily, a new branch of the neuroscience-explains-everything genre may be created at any time by the simple expedient of adding the prefix “neuro” to whatever you are talking about. Thus, “neuroeconomics” is the latest in a long line of rhetorical attempts to sell the dismal science as a hard one; “molecular gastronomy” has now been trumped in the scientised gluttony stakes by “neurogastronomy”; students of Republican and Democratic brains are doing “neuropolitics”; literature academics practise “neurocriticism”. There is “neurotheology”, “neuromagic” (according to Sleights of Mind, an amusing book about how conjurors exploit perceptual bias) and even “neuromarketing”. Hoping it’s not too late to jump on the bandwagon, I have decided to announce that I, too, am skilled in the newly minted fields of neuroprocrastination and neuroflâneurship.

Illumination is promised on a personal as well as a political level by the junk enlightenment of the popular brain industry. How can I become more creative? How can I make better decisions? How can I be happier? Or thinner? Never fear: brain research has the answers. It is self-help armoured in hard science. Life advice is the hook for nearly all such books. (Some cram the hard sell right into the title – such as John B Arden’s Rewire Your Brain: Think Your Way to a Better Life.) Quite consistently, their recommendations boil down to a kind of neo-Stoicism, drizzled with brain-juice. In a self-congratulatory egalitarian age, you can no longer tell people to improve themselves morally. So self-improvement is couched in instrumental, scientifically approved terms.

The idea that a neurological explanation could exhaust the meaning of experience was already being mocked as “medical materialism” by the psychologist William James a century ago. And today’s ubiquitous rhetorical confidence about how the brain works papers over a still-enormous scientific uncertainty. Paul Fletcher, professor of health neuroscience at the University of Cambridge, says that he gets “exasperated” by much popular coverage of neuroimaging research, which assumes that “activity in a brain region is the answer to some profound question about psychological processes. This is very hard to justify given how little we currently know about what different regions of the brain actually do.” Too often, he tells me in an email correspondence, a popular writer will “opt for some sort of neuro-flapdoodle in which a highly simplistic and questionable point is accompanied by a suitably grand-sounding neural term and thus acquires a weightiness that it really doesn’t deserve. In my view, this is no different to some mountebank selling quacksalve by talking about the physics of water molecules’ memories, or a beautician talking about action liposomes.”

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image courtesy of Amazon.[end-div]

Power and Baldness

Since behavioral scientists and psychologists first began roaming the globe we have come to know how and (sometimes) why visual appearance is so important in human interactions. Of course, anecdotally, humans have known for thousands of years that image is everything. After all, it was not Mary Kay or L’Oreal who brought us make-up but the ancient Egyptians. Yet, it is still fascinating to see how markedly the perception of an individual can change with a basic alteration, and only at the surface. Witness the profound difference in characteristics that we project onto a male with male pattern baldness (wimp) when he shaves his head (tough guy). And, of course, corporations can now assign a monetary value to the shaven look. As for comb-overs, well that is another topic entirely.

[div class=attrib]From the Wall Street Journal:[end-div]

Up for a promotion? If you’re a man, you might want to get out the clippers.

Men with shaved heads are perceived to be more masculine, dominant and, in some cases, to have greater leadership potential than those with longer locks or with thinning hair, according to a recent study out of the University of Pennsylvania’s Wharton School.

That may explain why the power-buzz look has caught on among business leaders in recent years. Venture capitalist and Netscape founder Marc Andreessen, 41 years old, DreamWorks Animation Chief Executive Jeffrey Katzenberg, 61, and Amazon.com Inc. CEO Jeffrey Bezos, 48, all sport some variant of the close-cropped look.

Some executives say the style makes them appear younger—or at least, makes their age less evident—and gives them more confidence than a comb-over or monk-like pate.

“I’m not saying that shaving your head makes you successful, but it starts the conversation that you’ve done something active,” says tech entrepreneur and writer Seth Godin, 52, who has embraced the bare look for two decades. “These are people who decide to own what they have, as opposed to trying to pretend to be something else.”

Wharton management lecturer Albert Mannes conducted three experiments to test people’s perceptions of men with shaved heads. In one of the experiments, he showed 344 subjects photos of the same men in two versions: one showing the man with hair and the other showing him with his hair digitally removed, so his head appears shaved.

In all three tests, the subjects reported finding the men with shaved heads as more dominant than their hirsute counterparts. In one test, men with shorn heads were even perceived as an inch taller and about 13% stronger than those with fuller manes. The paper, “Shorn Scalps and Perceptions of Male Dominance,” was published online, and will be included in a coming issue of journal Social Psychological and Personality Science.

The study found that men with thinning hair were viewed as the least attractive and powerful of the bunch, a finding that tracks with other studies showing that people perceive men with typical male-pattern baldness—which affects roughly 35 million Americans—as older and less attractive. For those men, the solution could be as cheap and simple as a shave.

According to Wharton’s Dr. Mannes—who says he was inspired to conduct the research after noticing that people treated him more deferentially when he shaved off his own thinning hair—head shavers may seem powerful because the look is associated with hypermasculine images, such as the military, professional athletes and Hollywood action heroes like Bruce Willis. (Male-pattern baldness, by contrast, conjures images of “Seinfeld” character George Costanza.)

New York image consultant Julie Rath advises her clients to get closely cropped when they start thinning up top. “There’s something really strong, powerful and confident about laying it all bare,” she says, describing the thinning or combed-over look as “kind of shlumpy.”

The look is catching on. A 2010 study from razor maker Gillette, a unit of Procter & Gamble Co., found that 13% of respondents said they shaved their heads, citing reasons as varied as fashion, sports and already thinning hair, according to a company spokesman. HeadBlade Inc., which sells head-shaving accessories, says revenues have grown 30% a year in the past decade.

Shaving his head gave 60-year-old Stephen Carley, CEO of restaurant chain Red Robin Gourmet Burgers Inc., a confidence boost when he was working among 20-somethings at tech start-ups in the 1990s. With his thinning hair shorn, “I didn’t feel like the grandfather in the office anymore.” He adds that the look gave him “the impression that it was much harder to figure out how old I was.”

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image: Comb-over patent, 1977. Courtesy of Wikipedia.[end-div]

QTWTAIN: Are there Nazis living on the moon?

QTWTAIN is a Twitterspeak acronym for a Question To Which The Answer Is No.

QTWTAINs are a relatively recent journalistic phenomenon. Media organizations often use them as headlines, to great effect, to grab a reader’s attention. But importantly, QTWTAINs imply that something ridiculous is true: when a headline is posed as a question, no evidence seems to be required. Here’s an example of a recent headline:

“Europe: Are there Nazis living on the moon?”

Author and journalist John Rentoul has done all connoisseurs of QTWTAINs a great service by collecting an outstanding selection from hundreds of his favorites into a new book, Questions to Which the Answer is No. Rentoul tells us his story, excerpted, below.

[div class=attrib]From the Independent:[end-div]

I have an unusual hobby. I collect headlines in the form of questions to which the answer is no. This is a specialist art form that has long been a staple of “prepare to be amazed” journalism. Such questions allow newspapers, television programmes and websites to imply that something preposterous is true without having to provide the evidence.

If you see a question mark after a headline, ask yourself why it is not expressed as a statement, such as “Church of England threatened by excess of cellulite” or “Revealed: Marlene Dietrich plotted to murder Hitler” or, “This penguin is a communist”.

My collection started with a bishop, a grudge against Marks & Spencer and a theft in broad daylight. The theft was carried out by me: I had been inspired by Oliver Kamm, a friend and hero of mine, who wrote about Great Historical Questions to Which the Answer is No on his blog. Then I came across this long headline in Britain’s second-best-selling newspaper three years ago: “He’s the outcast bishop who denies the Holocaust – yet has been welcomed back by the Pope. But are Bishop Williamson’s repugnant views the result of a festering grudge against Marks & Spencer?” Thus was an internet meme born.

Since then readers of The Independent blog and people on Twitter with nothing better to do have supplied me with a constant stream of QTWTAIN. If this game had a serious purpose, which it does not, it would be to make fun of conspiracy theories. After a while, a few themes recurred: flying saucers, yetis, Jesus, the murder of John F Kennedy, the death of Marilyn Monroe and reincarnation.

An enterprising PhD student could use my series as raw material for a thesis entitled: “A Typology of Popular Irrationalism in Early 21st-Century Media”. But that would be to take it too seriously. The proper use of the series is as a drinking game, to be followed by a rousing chorus of “Jerusalem”, which consists largely of questions to which the answer is no.

My only rule in compiling the series is that the author or publisher of the question has to imply that the answer is yes (“Does Nick Clegg Really Expect Us to Accept His Apology?” for example, would be ruled out of order). So far I have collected 841 of them, and the best have been selected for a book published this week. I hope you like them.

Is the Loch Ness monster on Google Earth?

Daily Telegraph, 26 August 2009

A picture of something that actually looked like a giant squid had been spotted by a security guard as he browsed the digital planet. A similar question had been asked by the Telegraph six months earlier, on 19 February, about a different picture: “Has the Loch Ness Monster emigrated to Borneo?”

Would Boudicca have been a Liberal Democrat?

This one is cheating, because Paul Richards, who asked it in an article in Progress magazine, 12 March 2010, did not imply that the answer was yes. He was actually making a point about the misuse of historical conjecture, comparing Douglas Carswell, the Conservative MP, who suggested that the Levellers were early Tories, to the spiritualist interviewed by The Sun in 1992, who was asked how Winston Churchill, Joseph Stalin, Karl Marx and Chairman Mao would have voted (Churchill was for John Major; the rest for Neil Kinnock, naturally).

Is Tony Blair a Mossad agent?

A question asked by Peza, who appears to be a cat, on an internet forum on 9 April 2010. One reader had a good reply: “Peza, are you drinking that vodka-flavoured milk?”

Could Angelina Jolie be the first female US President?

Daily Express, 24 June 2009

An awkward one this, because one of my early QTWTAIN was “Is the Express a newspaper?” I had formulated an arbitrary rule that its headlines did not count. But what are rules for, if not for changing?

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Book Cover: Questions to Which the Answer is No, by John Rentoul. Courtesy of the Independent / John Rentoul.[end-div]

Brilliant! The Brits are Coming

Following decades of one-way cultural osmosis from the United States to the UK, it seems that the trend may be reversing. Well, at least in the linguistic department. Although it may be a while before “blimey” enters the American lexicon, other words and phrases such as “spot on”, “chat up”, “ginger” to describe hair color, “gormless” and the like are already crossing the Atlantic.

[div class=attrib]From the BBC:[end-div]

There is little that irks British defenders of the English language more than Americanisms, which they see creeping insidiously into newspaper columns and everyday conversation. But bit by bit British English is invading America too.

“Spot on – it’s just ludicrous!” snaps Geoffrey Nunberg, a linguist at the University of California at Berkeley.

“You are just impersonating an Englishman when you say spot on.”

“Will do – I hear that from Americans. That should be put into quarantine,” he adds.

And don’t get him started on the chattering classes – its overtones of a distinctly British class system make him quiver.

But not everyone shares his revulsion at the drip, drip, drip of Britishisms – to use an American term – crossing the Atlantic.

“I enjoy seeing them,” says Ben Yagoda, professor of English at the University of Delaware, and author of the forthcoming book, How to Not Write Bad.

“It’s like a birdwatcher. If I find an American saying one, it makes my day!”

Last year Yagoda set up a blog dedicated to spotting the use of British terms in American English.

So far he has found more than 150 – from cheeky to chat-up via sell-by date, and the long game – an expression which appears to date back to 1856, and comes not from golf or chess, but the card game whist. President Barack Obama has used it in at least one speech.

Yagoda notices changes in pronunciation too – for example his students sometimes use “that sort of London glottal stop”, dropping the T in words like “important” or “Manhattan”.

Kory Stamper, Associate Editor for Merriam-Webster, whose dictionaries are used by many American publishers and news organisations, agrees that more and more British words are entering the American vocabulary.

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image: Ngram graph showing online usage of the phrase “chat up”. Courtesy of Google / BBC.[end-div]

Integrated Space Plan

The Integrated Space Plan is a 100-year vision of space exploration, drawn up over 20 years ago. It is a beautiful and intricate timeline covering the period 1983 to 2100. The timeline was developed in 1989 by Ronald M. Jones at Rockwell International, using long-range planning data from NASA, the National Space Policy Directive and other Western space agencies.

While optimistic, the plan nonetheless outlined unmanned rover exploration on Mars (done), a comet sample return mission (done), and an orbiter around Mercury (done). Over the longer term the plan foresaw “human expansion into the inner solar system” by 2018, with “triplanetary, earth-moon-mars infrastructure” in place by 2023, “small martian settlements” following in 2060, and “Venus terraforming operations” in 2080. The plan concludes with “human interstellar travel” sometime after the year 2100. So, perhaps there is hope for humans beyond this Pale Blue Dot after all.

More below from Sean Ragan over at Make Magazine on this fascinating diagram and how it was rediscovered. A detailed and large download of the plan follows: Integrated Space Plan.

[div class=attrib]From Make:[end-div]

I first encountered this amazing infographic hanging on a professor’s office wall when I was visiting law schools back in 1999. I’ve been trying, off and on, to run down my own copy ever since. It’s been one of those back-burner projects that I’ll poke at when it comes to mind, every now and again, but until quite recently all my leads had come up dry. All I really knew about the poster was that it had been created in the 80s by analysts at Rockwell International and that it was called the “Integrated Space Plan.”

About a month ago, all the little threads I’d been pulling on suddenly unraveled, and I was able to connect with a generous donor willing to entrust an original copy of the poster to me long enough to have it scanned at high resolution. It’s a large document, at 28 x 45″, but fortunately it’s monochrome, and reproduces well using 1-bit color at 600dpi, so even uncompressed bitmaps come in at under 5MB.

[div class=attrib]Read the entire article following the jump.[end-div]

Childhood Injuries on the Rise: Blame Parental Texting

The long-term downward trend in the number of injuries to young children has ended. Sadly, urgent care and emergency room doctors are now seeing more children aged 0-14 years with unintentional injuries. While the exact causes are yet to be determined, there is a growing body of anecdotal evidence that points to distraction among parents and supervisors: it’s the texting, stupid!

The great irony is that should your child suffer an injury while you were using your smartphone, you’ll be able to contact the emergency room much more quickly now — courtesy of the very same smartphone.

[div class=attrib]From the Wall Street Journal:[end-div]

One sunny July afternoon in a San Francisco park, tech recruiter Phil Tirapelle was tapping away on his cellphone while walking with his 18-month-old son. As he was texting his wife, his son wandered off in front of a policeman who was breaking up a domestic dispute.

“I was looking down at my mobile, and the police officer was looking forward,” and his son “almost got trampled over,” he says. “One thing I learned is that multitasking makes you dumber.”

Yet a few minutes after the incident, he still had his phone out. “I’m a hypocrite. I admit it,” he says. “We all are.”