We excerpt an interview with big data pioneer and computer scientist Alex Pentland, via the Edge. Pentland is a leading thinker in computational social science and currently directs the Human Dynamics Laboratory at MIT.

While there is no exact definition of “big data”, it tends to differ, both in quantity and in kind, from the data most organizations commonly use. Where regular data can be stored, processed and analyzed using common database tools and analytical engines, big data refers to collections so vast that they often lie beyond the reach of conventional computing. As a result, big data often requires specialized storage and enormous processing capacity. Big data sets come from fields such as climate science, genomics, particle physics, and computational social science.

Big data holds true promise. However, while storage and processing power now enable quick and efficient crunching of tera- and even petabytes of data, tools for comprehensive analysis and visualization lag behind.

[div class=attrib]Alex Pentland via the Edge:[end-div]

Recently I seem to have become MIT’s Big Data guy, with people like Tim O’Reilly and “Forbes” calling me one of the seven most powerful data scientists in the world. I’m not sure what all of that means, but I have a distinctive view about Big Data, so maybe it is something that people want to hear.

I believe that the power of Big Data is that it is information about people’s behavior instead of information about their beliefs. It’s about the behavior of customers, employees, and prospects for your new business. It’s not about the things you post on Facebook, and it’s not about your searches on Google, which is what most people think about, and it’s not data from internal company processes and RFIDs. This sort of Big Data comes from things like location data off of your cell phone or credit card, it’s the little data breadcrumbs that you leave behind you as you move around in the world.

What those breadcrumbs tell is the story of your life. It tells what you’ve chosen to do. That’s very different than what you put on Facebook. What you put on Facebook is what you would like to tell people, edited according to the standards of the day. Who you actually are is determined by where you spend time, and which things you buy. Big data is increasingly about real behavior, and by analyzing this sort of data, scientists can tell an enormous amount about you. They can tell whether you are the sort of person who will pay back loans. They can tell you if you’re likely to get diabetes.

They can do this because the sort of person you are is largely determined by your social context, so if I can see some of your behaviors, I can infer the rest, just by comparing you to the people in your crowd. You can tell all sorts of things about a person, even though it’s not explicitly in the data, because people are so enmeshed in the surrounding social fabric that it determines the sorts of things that they think are normal, and what behaviors they will learn from each other.
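To make the mechanism Pentland describes concrete, here is a minimal Python sketch of inferring an unobserved trait from the people whose observed behavior most resembles yours, using a k-nearest-neighbor majority vote. The two behavior features, the six-person `crowd`, the trait labels and the choice of k = 3 are all hypothetical; real systems would use far richer data and models.

```python
import math
from collections import Counter

# Hypothetical "crowd": each person has two observed behavior features,
# both scaled to 0..1 (e.g. share of spending on essentials, regularity of
# daily routine), plus a trait we already know about them.
crowd = [
    ((0.80, 0.90), "repays"),
    ((0.70, 0.85), "repays"),
    ((0.90, 0.80), "repays"),
    ((0.20, 0.30), "defaults"),
    ((0.30, 0.20), "defaults"),
    ((0.25, 0.35), "defaults"),
]


def infer_trait(behavior, crowd, k=3):
    """Guess an unobserved trait by majority vote of the k most similar people."""
    # Rank everyone in the crowd by Euclidean distance in behavior space.
    nearest = sorted(crowd, key=lambda person: math.dist(behavior, person[0]))[:k]
    votes = Counter(trait for _, trait in nearest)
    return votes.most_common(1)[0][0]


print(infer_trait((0.75, 0.88), crowd))  # nearest neighbors all repay
print(infer_trait((0.22, 0.28), crowd))  # nearest neighbors all default
```

The person's own trait never appears in the input; the guess comes entirely from resemblance to others in the crowd.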

As a consequence, analysis of Big Data is increasingly about finding connections: connections with the people around you, and connections between people’s behavior and outcomes. You can see this in all sorts of places. For instance, one type of Big Data and connection analysis concerns financial data. Not just the flash crash or the Great Recession, but also all the other sorts of bubbles that occur. These are systems of people, communications, and decisions that go badly awry. Big Data shows us the connections that cause these events. Big Data gives us the possibility of understanding how these systems of people and machines work, and whether they’re stable.

The notion that it is connections between people that is really important is key, because researchers have mostly been trying to understand things like financial bubbles using what is called Complexity Science or Web Science. But these older ways of thinking about Big Data leave the humans out of the equation. What actually matters is how the people are connected together by the machines and how, as a whole, they create a financial market, a government, a company, and other social structures.

Because it is so important to understand these connections, Asu Ozdaglar and I have recently created the MIT Center for Connection Science and Engineering, which spans all of the different MIT departments and schools. It’s one of the very first MIT-wide Centers, because people from all sorts of specialties are coming to understand that it is the connections between people that is actually the core problem in making transportation systems work well, in making energy grids work efficiently, and in making financial systems stable. Markets are not just about rules or algorithms; they’re about people and algorithms together.

Understanding these human-machine systems is what’s going to make our future social systems stable and safe. We are getting beyond complexity, data science and web science, because we are including people as a key part of these systems. That’s the promise of Big Data, to really understand the systems that make our technological society. As you begin to understand them, then you can build systems that are better. The promise is for financial systems that don’t melt down, governments that don’t get mired in inaction, health systems that actually work, and so on, and so forth.

The barriers to better societal systems are not about the size or speed of data. They’re not about most of the things that people are focusing on when they talk about Big Data. Instead, the challenge is to figure out how to analyze the connections in this deluge of data and come to a new way of building systems based on understanding these connections.

Changing The Way We Design Systems

With Big Data, traditional methods of system building are of limited use. The data is so big that any question you ask about it will usually have a statistically significant answer. This means, strangely, that the scientific method as we normally use it no longer works, because almost everything is significant! As a consequence, the normal laboratory-based question-and-answering process, the method that we have used to build systems for centuries, begins to fall apart.
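Pentland's point about significance can be checked with a little arithmetic. The sketch below assumes a standard two-sided two-proportion z-test and hypothetical rates of 10.0% and 10.1% in two equal-sized groups; the same 0.1-percentage-point difference goes from unremarkable to overwhelmingly "significant" purely because the sample grows.

```python
import math


def two_proportion_p_value(p1, p2, n):
    """Two-sided p-value of a two-proportion z-test with n observations per group."""
    pooled = (p1 + p2) / 2  # equal group sizes, so the pooled rate is the mean
    se = math.sqrt(pooled * (1 - pooled) * (2 / n))  # standard error of the difference
    z = abs(p1 - p2) / se
    return math.erfc(z / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


# Hypothetical rates: 10.0% vs 10.1%, a difference too small to matter in practice.
for n in (1_000, 10_000_000):
    print(n, two_proportion_p_value(0.100, 0.101, n))
```

With 1,000 people per group the p-value is about 0.94; with ten million per group it falls far below 0.001, although the underlying effect has not changed at all.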

Big data and the notion of Connection Science is outside of our normal way of managing things. We live in an era that builds on centuries of science, and our methods of building systems, governments, organizations, and so on are pretty well defined. There are not a lot of things that are really novel. But with the coming of Big Data, we are going to be operating very much out of our old, familiar ballpark.

With Big Data you can easily get false correlations, for instance, “On Mondays, people who drive to work are more likely to get the flu.” If you look at the data using traditional methods, that may actually be true, but the problem is: why is it true? Is it causal? Is it just an accident? You don’t know. Normal analysis methods won’t suffice to answer those questions. What we have to come up with is new ways to test the causality of connections in the real world far more than we have ever had to do before. We can no longer rely on laboratory experiments; we need to actually do the experiments in the real world.
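A simulated example shows how a hidden common cause can manufacture the kind of correlation Pentland describes. In this minimal sketch the variables are hypothetical and the Monday detail is dropped: cold weather (the confounder) independently pushes up both driving and flu, with no direct link between the two. The raw correlation comes out near 0.5, yet after regressing cold weather out of both variables it essentially vanishes.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def residuals(ys, xs):
    """What is left of ys after an ordinary least-squares fit ys ~ a + b * xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return [y - (a + b * x) for x, y in zip(xs, ys)]


rng = random.Random(42)  # fixed seed so the run is reproducible
n = 20_000
cold = [rng.gauss(0, 1) for _ in range(n)]     # hidden confounder: how cold the day is
driving = [c + rng.gauss(0, 1) for c in cold]  # cold days -> more people drive
flu = [c + rng.gauss(0, 1) for c in cold]      # cold days -> more flu; no driving term

raw = pearson(driving, flu)
partial = pearson(residuals(driving, cold), residuals(flu, cold))
print(f"raw correlation: {raw:.2f}")
print(f"after controlling for cold weather: {partial:.2f}")
```

This adjustment only works because the confounder is known and measured. When it is not, observational data alone cannot settle the question, which is why Pentland argues for running experiments in the real world.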

The other problem with Big Data is human understanding. When you find a connection that works, you’d like to be able to use it to build new systems, and that requires having human understanding of the connection. The managers and the owners have to understand what this new connection means. There needs to be a dialogue between our human intuition and the Big Data statistics, and that’s not something that’s built into most of our management systems today. Our managers have little concept of how to use big data analytics, what they mean, and what to believe.

In fact, the data scientists themselves don’t have much intuition either…and that is a problem. I saw an estimate recently that said 70 to 80 percent of the results that are found in the machine learning literature, which is a key Big Data scientific field, are probably wrong because the researchers didn’t understand that they were overfitting the data. They didn’t have that dialogue between intuition and the causal processes that generated the data. They just fit the model and got a good number and published it, and the reviewers didn’t catch it either. That’s pretty bad because if we start building our world on results like that, we’re going to end up with trains that crash into walls and other bad things. Management using Big Data is actually a radically new thing.
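Overfitting is easy to demonstrate on a toy problem. In this minimal sketch (hypothetical data), the true relationship is y = x, observed at four points with alternating noise of plus or minus 1. A cubic polynomial, which has one coefficient per data point, passes through every training point exactly, while an ordinary least-squares straight line does not; judged on new points, the ranking reverses.

```python
def lagrange(xs, ys):
    """Return the unique polynomial through every (x, y) training point."""
    def predict(x):
        total = 0.0
        for k, (xk, yk) in enumerate(zip(xs, ys)):
            basis = 1.0  # Lagrange basis polynomial: 1 at xs[k], 0 at the other xs
            for j, xj in enumerate(xs):
                if j != k:
                    basis *= (x - xj) / (xk - xj)
            total += yk * basis
        return total
    return predict


def linear_fit(xs, ys):
    """Ordinary least-squares straight line y = a + b * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return lambda x: a + b * x


def mse(model, xs, ys):
    """Mean squared error of a model's predictions."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: the true relationship is y = x, observed with +/-1 noise.
train_x, train_y = [0, 1, 2, 3], [1, 0, 3, 2]
test_x = [0.5, 1.5, 2.5, 3.5]
test_y = test_x  # noise-free truth at points the models never saw

cubic = lagrange(train_x, train_y)   # as many parameters as data points
line = linear_fit(train_x, train_y)  # two parameters
for name, model in (("cubic", cubic), ("line", line)):
    print(f"{name}: train MSE {mse(model, train_x, train_y):.2f}, "
          f"test MSE {mse(model, test_x, test_y):.2f}")
```

The cubic scores a training MSE of 0.00 and a test MSE of 9.50, while the straight line scores 0.80 and 0.24. Judged only on how well it fits the data it was trained on, the cubic looks better, which is exactly the trap Pentland describes.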

[div class=attrib]Read the entire article after the jump.[end-div]

[div class=attrib]Image courtesy of Techcrunch.[end-div]

Apparently the Great Depression in the United States is to blame for the mega-sized soda drinks that many now consume on a daily basis, except in New York City, of course (on September 13, 2012, the city’s Board of Health approved a ban on selling sugary drinks larger than 16 oz in restaurants).

As children we all learn our abc’s; as adults very few ponder the ABC Conjecture in mathematics. The first is often a simple task of rote memorization; the second is a troublesome mathematical problem with a fiendishly complex solution (maybe).

Hint: the answer is not shameless self-promotion or exploitative voyeurism; images used in this way may scratch a personal itch, but they rarely influence fundamental societal or political behavior. Importantly, photography has also given us a rich, nuanced and lasting medium for artistic expression ever since cameras and film were first invented. However, the principal answer lies in photography’s ability to tell the truth about power, and to speak truth to power.

Gary Gutting, professor of philosophy at the University of Notre Dame, reminds us that work is punishment for Adam’s sin, according to the Book of Genesis. No doubt, many who hold other faiths, as well as those who don’t, may tend to agree with this basic notion.

Product-driven companies, inventors from all backgrounds and market researchers have long studied why some innovations take off while others fizzle. So, why do some innovations gain traction? Given two similar but competing inventions, what factors lead to one eclipsing the other? Why do some pioneering ideas and inventions fail, only to succeed under a different instigator years, sometimes decades, later? Answers to these questions would undoubtedly make many inventors household names, but as in most human endeavors, the process of innovation is murky and more art than science.

Nathan Myhrvold, former CTO of Microsoft, suggests that the wealthy should “think big” by funding large-scale and long-term innovation. Arguably, this would be a much preferred alternative to the wealthy using their millions to gain (more) political influence in much of the West, especially the United States. Myhrvold is now a backer of TerraPower, a nuclear energy startup.

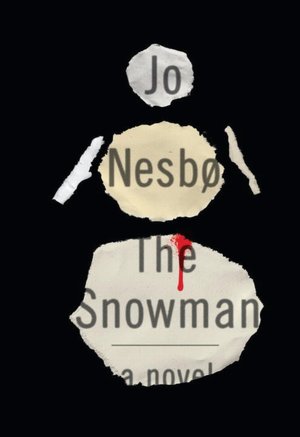

The title could be mistaken for a dark and violent crime novel from the likes of Stieg Larsson, Jo Nesbø, Sjöwall and Wahlöö, or Henning Mankell. But this story is somewhat more mundane, though much more consequential. It’s the story of a Swedish cancer killer.

Thirty years ago today, Professor Scott Fahlman of Carnegie Mellon University posted what is believed to be the first emoticon, in a message to an online bulletin board. The symbol :-), which he proposed as a joke marker, spread rapidly, then morphed and evolved into a universe of symbolic nods, winks, and cyber-emotions.

Regardless of how flawed old scientific concepts may be, researchers have found that it is remarkably difficult for people to give them up and accept sound new reasoning. Even scientists are creatures of habit.

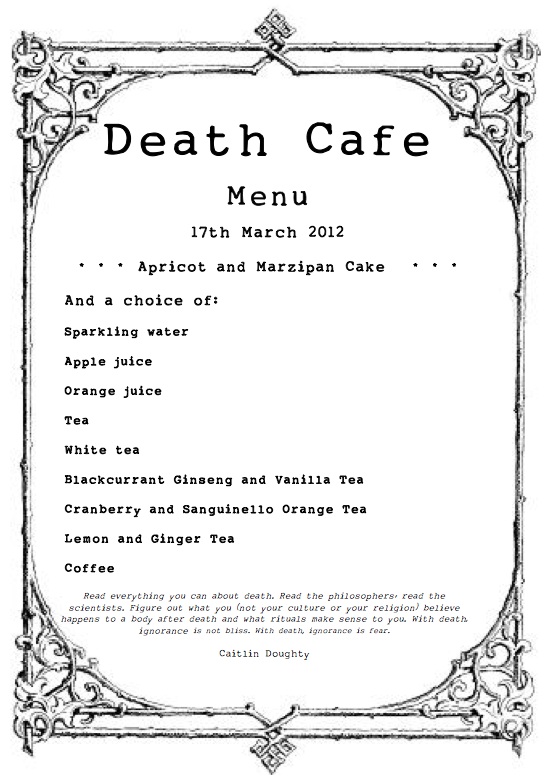

“Death Cafe” sounds like the name of a band of alternative musicians from Denmark. But it’s not. Its more literal meaning is a coffee shop where customers go to talk about death over a cup of Earl Grey tea or a double-shot espresso. And while death cafes are not displacing Starbucks (yet), they are a growing trend in Europe, first inspired by the pop-up Cafe Mortels of Switzerland.

Professional photographers, take note: there will always be room for high-quality images that tell a story, capture a timeless event or exude artistic elegance. But your domain is under attack again, and the results are not particularly pretty. This time the attack comes courtesy of Instagram.

[div class=attrib]From the New York Times:[end-div]

Social scientists have had Generation-Y, also known as “millennials”, under their microscopes for a while. Born between 1982 and 1999, Gen-Y is now coming of age and becoming a force in the workplace, displacing aging “boomers” as they retire to the hills. So researchers are now looking at how Gen-Y is faring inside corporate America. Remember, Gen-Y is the “it’s all about me” generation; its members are typically characterized as lazy and spoiled, with a grandiose sense of entitlement, inflated self-esteem and deep emotional fragility. Their predecessors, the baby boomers, on the other hand, are often seen as overbearing, work-obsessed, competitive and narrow-minded. A clash of cultures is taking shape in office cubicles across the country as these groups, with such differing personalities and philosophies, tussle within the workplace. However, it may not be all bad, as columnist Emily Matchar argues below: corporate America needs the kind of shake-up that Gen-Y promises.

Ayn Rand: anti-collectivist ideologue and standard-bearer for unapologetic individualism and rugged self-reliance, or selfish fantasist and elitist hypocrite?