In 1960 radio astronomer Frank Drake began the first systematic search for intelligent signals emanating from space. He was not successful, but his pioneering efforts paved the way for numerous other programs, including SETI (Search for Extra-Terrestrial Intelligence). The Drake Equation is named for him, and put simply, gives an estimate of the number of active, extraterrestrial civilizations with methods of communication in our own galaxy. Drake postulated the equation as a way to get the scientific community engaged in the search for life beyond our home planet.


The Drake equation is:

N = R* × fp × ne × fl × fi × fc × L

where:

N = the number of civilizations in our galaxy with which communication might be possible (i.e. which are on our current past light cone)

R* = the average rate of star formation per year in our galaxy

fp = the fraction of those stars that have planets

ne = the average number of planets that can potentially support life per star that has planets

fl = the fraction of planets that could support life that actually develop life at some point

fi = the fraction of planets with life that actually go on to develop intelligent life (civilizations)

fc = the fraction of civilizations that develop a technology that releases detectable signs of their existence into space

L = the length of time for which such civilizations release detectable signals into space
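As a rough illustration of how the seven terms combine, here is a minimal Python sketch. The function name `drake_estimate`, its parameter names, and the input values are all assumptions made for this example; the numbers are arbitrary placeholders chosen to keep the arithmetic easy, not published estimates.

```python
def drake_estimate(r_star, f_p, n_e, f_l, f_i, f_c, lifetime):
    """Multiply the seven Drake terms to estimate N.

    r_star   -- average rate of star formation per year (R*)
    f_p      -- fraction of those stars that have planets (fp)
    n_e      -- habitable planets per star that has planets (ne)
    f_l      -- fraction of habitable planets that develop life (fl)
    f_i      -- fraction of those that develop intelligent life (fi)
    f_c      -- fraction that release detectable signals (fc)
    lifetime -- years over which signals are released (L)
    """
    # The four "f" terms are fractions, so they must lie between 0 and 1.
    for name, value in (("f_p", f_p), ("f_l", f_l), ("f_i", f_i), ("f_c", f_c)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return r_star * f_p * n_e * f_l * f_i * f_c * lifetime


# Placeholder inputs (assumptions for illustration only, not real estimates).
n = drake_estimate(r_star=1.0, f_p=0.5, n_e=2.0, f_l=1.0,
                   f_i=0.5, f_c=0.25, lifetime=8000)
print(n)  # 1000.0
```

Because the result is a simple product, uncertainty in any single term carries straight through to N: halving one fraction halves the estimate.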

Now, based on recent discoveries of hundreds of extra-solar planets, or exoplanets (those beyond our solar system), by the Kepler space telescope and by Earth-bound observatories, researchers are fine-tuning the original Drake Equation for the 21st century.

From the New Scientist:

An iconic tool in the search for extraterrestrial life is getting a 21st-century reboot – just as our best planet-hunting telescope seems to have died. Though the loss of NASA’s Kepler telescope is a blow, the reboot could mean we find signs of life on extrasolar planets within a decade.

The new tool takes the form of an equation. In 1961 astronomer Frank Drake scribbled his now-famous equation for calculating the number of detectable civilisations in the Milky Way. The Drake equation includes a number of terms that at the time seemed unknowable – including the very existence of planets beyond our solar system.

But the past two decades have seen exoplanets pop up like weeds, particularly in the last few years thanks in large part to the Kepler space telescope. Launched in 2009, Kepler has found more than 130 worlds and detected 3000 or so more possibles. The bounty has given astronomers the first proper census of planets in one region of our galaxy, allowing us to make estimates of the total population of life-friendly worlds across the Milky Way.


With that kind of data in hand, Sara Seager at the Massachusetts Institute of Technology reckons the Drake equation is ripe for a revamp. Her version narrows a few of the original terms to account for our new best bets of finding life, based in part on what Kepler has revealed. If the original Drake equation was a hatchet, the new Seager equation is a scalpel.

Seager presented her work this week at a conference in Cambridge, Massachusetts, entitled “Exoplanets in the Post-Kepler Era”. The timing could not be more prescient. Last week Kepler suffered a surprise hardware failure that knocked out its ability to see planetary signals clearly. If it can’t be fixed, the mission is over.

“When we talked about the post-Kepler era, we thought that would be three to four years from now,” co-organiser David Charbonneau of the Harvard-Smithsonian Center for Astrophysics said last week. “We now know the post-Kepler era probably started two days ago.”

But Kepler has collected data for four years, slightly longer than the mission’s original goal, and so far only the first 18 months’ worth have been analysed. That means it may have already gathered enough information to give alien-hunters a fighting chance.

The original Drake equation includes seven terms, which multiplied together give the number of intelligent alien civilisations we could hope to detect (see diagram). Kepler was supposed to pin down two terms: the fraction of stars that have planets, and the number of those planets that are habitable.

To do that, Kepler had been staring unflinchingly at some 150,000 stars near the constellation Cygnus, looking for periodic changes in brightness caused by a planet crossing, or transiting, a star’s face as seen from Earth. This method tells us a planet’s size and its rough distance from its host star.

Size gives a clue to a planet’s composition, which tells us whether it is rocky like Earth or gassy like Neptune. Before Kepler, only a few exoplanets had been identified as small enough to be rocky, because other search methods were better suited to spotting larger, gas giant worlds.

“Kepler is the single most revolutionary project that has ever been undertaken in exoplanets,” says Charbonneau. “It broke open the piggybank and rocky planets poured out.” A planet’s distance from its star is also crucial, because that tells us whether the temperature is right for liquid water – and so perhaps life – to exist.

But with Kepler’s recent woes, hopes of finding enough potentially habitable planets, or Earth twins, to satisfy the Drake equation have dimmed. The mission was supposed to run for three-and-a-half years, which should have been enough to pinpoint Earth-sized planets with years of a similar length. After the telescope came online, the mission team realised that other sun-like stars are more active than ours, and they bounce around too much in the telescope’s field of view. To find enough Earths, they would need seven or eight years of data.

Read the entire article here.

Image courtesy of the BBC. Drake Equation courtesy of Wikipedia.

History will probably show that humans are the likely cause for the mass disappearance and death of honey bees around the world.

History will probably show that humans are the likely cause for the mass disappearance and death of honey bees around the world. “There are three kinds of lies: lies, damned lies, and statistics”, goes the adage popularized by author Mark Twain.

“There are three kinds of lies: lies, damned lies, and statistics”, goes the adage popularized by author Mark Twain.

Artist Ai Weiwei has suffered at the hands of the Chinese authorities much more so than Andy Warhol’s brushes with surveillance from the FBI. Yet the two are remarkably similar: brash and polarizing views, distinctive art and creative processes, masterful self-promotion, savvy media manipulation and global ubiquity. This is all the more astounding given Ai Weiwei’s arrest, detentions and prohibition on travel outside of Beijing. He’s even made it to the Venice Biennale this year — only his art of course.

Artist Ai Weiwei has suffered at the hands of the Chinese authorities much more so than Andy Warhol’s brushes with surveillance from the FBI. Yet the two are remarkably similar: brash and polarizing views, distinctive art and creative processes, masterful self-promotion, savvy media manipulation and global ubiquity. This is all the more astounding given Ai Weiwei’s arrest, detentions and prohibition on travel outside of Beijing. He’s even made it to the Venice Biennale this year — only his art of course.

For centuries biologists, zoologists and ecologists have been mapping the wildlife that surrounds us in the great outdoors. Now a group led by microbiologist Noah Fierer at the University of Colorado Boulder is pursuing flora and fauna in one of the last unexplored eco-systems — the home. (Not for the faint of heart).

For centuries biologists, zoologists and ecologists have been mapping the wildlife that surrounds us in the great outdoors. Now a group led by microbiologist Noah Fierer at the University of Colorado Boulder is pursuing flora and fauna in one of the last unexplored eco-systems — the home. (Not for the faint of heart). “… But You Can Never Leave”. So goes one of the most memorable of lyrical phrases from The Eagles (Hotel California).

“… But You Can Never Leave”. So goes one of the most memorable of lyrical phrases from The Eagles (Hotel California).

In 1960 radio astronomer Frank Drake began the first systematic search for intelligent signals emanating from space. He was not successful, but his pioneering efforts paved the way for numerous other programs, including SETI (Search for Extra-Terrestrial Intelligence). The Drake Equation is named for him, and put simply, gives an estimate of the number of active, extraterrestrial civilizations with methods of communication in our own galaxy. Drake postulated the equation as a way to get the scientific community engaged in the search for life beyond our home planet.

In 1960 radio astronomer Frank Drake began the first systematic search for intelligent signals emanating from space. He was not successful, but his pioneering efforts paved the way for numerous other programs, including SETI (Search for Extra-Terrestrial Intelligence). The Drake Equation is named for him, and put simply, gives an estimate of the number of active, extraterrestrial civilizations with methods of communication in our own galaxy. Drake postulated the equation as a way to get the scientific community engaged in the search for life beyond our home planet.

If you are an English speaker and are over the age of 39 you may be pondering the fate of the English language. As the younger generations fill cyberspace with terabytes of misspelled texts and tweets do you not wonder if gorgeous grammatical language will survive? Are the technophobes and anti-Twitterites doomed to a future world of #hashtag-driven conversation and ADHD-like literature? Those of us who care are reminded of George Orwell’s 1946 essay “Politics and the English Language”, in which he decried the swelling ugliness of the language at the time.

If you are an English speaker and are over the age of 39 you may be pondering the fate of the English language. As the younger generations fill cyberspace with terabytes of misspelled texts and tweets do you not wonder if gorgeous grammatical language will survive? Are the technophobes and anti-Twitterites doomed to a future world of #hashtag-driven conversation and ADHD-like literature? Those of us who care are reminded of George Orwell’s 1946 essay “Politics and the English Language”, in which he decried the swelling ugliness of the language at the time.

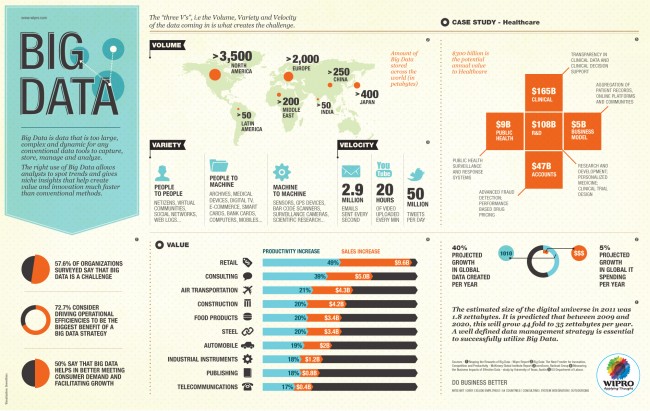

Good customer service once meant that a store or service employee would know you by name. This person would know your previous purchasing habits and your preferences; this person would know the names of your kids and your dog. Great customer service once meant that an employee could use this knowledge to anticipate your needs or personalize a specific deal. Well, this type of service still exists — in some places — but many businesses have outsourced it to offshore call center personnel or to machines, or both. Service may seem personal, but it’s not — service is customized to suit your profile, but it’s not personal in the same sense that once held true.

Good customer service once meant that a store or service employee would know you by name. This person would know your previous purchasing habits and your preferences; this person would know the names of your kids and your dog. Great customer service once meant that an employee could use this knowledge to anticipate your needs or personalize a specific deal. Well, this type of service still exists — in some places — but many businesses have outsourced it to offshore call center personnel or to machines, or both. Service may seem personal, but it’s not — service is customized to suit your profile, but it’s not personal in the same sense that once held true.

Ubiquitous connectivity for, and between, individuals and businesses is widely held to be beneficial for all concerned. We can connect rapidly and reliably with family, friends and colleagues from almost anywhere to anywhere via a wide array of internet enabled devices. Yet, as these devices become more powerful and interconnected, and enabled with location-based awareness, such as GPS (Global Positioning System) services, we are likely to face an increasing acute dilemma — connectedness or privacy?

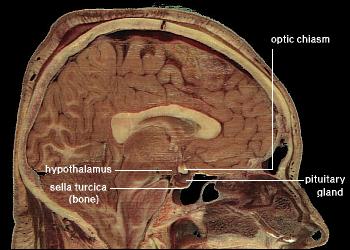

Ubiquitous connectivity for, and between, individuals and businesses is widely held to be beneficial for all concerned. We can connect rapidly and reliably with family, friends and colleagues from almost anywhere to anywhere via a wide array of internet enabled devices. Yet, as these devices become more powerful and interconnected, and enabled with location-based awareness, such as GPS (Global Positioning System) services, we are likely to face an increasing acute dilemma — connectedness or privacy? Researchers are continuing to make great progress in unraveling the complexities of aging. While some fingers point to the shortening of telomeres — end caps — in our chromosomal DNA as a contributing factor, other research points to the hypothalamus. This small sub-region of the brain has been found to play a major role in aging and death (though, at the moment only in mice).

Researchers are continuing to make great progress in unraveling the complexities of aging. While some fingers point to the shortening of telomeres — end caps — in our chromosomal DNA as a contributing factor, other research points to the hypothalamus. This small sub-region of the brain has been found to play a major role in aging and death (though, at the moment only in mice).

Stephen Wolfram, physicist, mathematician and complexity theorist, has taken big data ideas to an entirely new level — he’s quantifying himself and his relationships. He calls this discipline personal analytics.

Stephen Wolfram, physicist, mathematician and complexity theorist, has taken big data ideas to an entirely new level — he’s quantifying himself and his relationships. He calls this discipline personal analytics. Yesterday, May 10, 2013, scientists published new measures of atmospheric carbon dioxide (CO2). For the first time in human history CO2 levels reached an average of 400 parts per million (ppm). This is particularly troubling since CO2 has long been known as the most potent heat trapping component of the atmosphere. The sobering milestone was recorded from the Mauna Loa Observatory in Hawaii — monitoring has been underway at the site since the mid-1950s.

Yesterday, May 10, 2013, scientists published new measures of atmospheric carbon dioxide (CO2). For the first time in human history CO2 levels reached an average of 400 parts per million (ppm). This is particularly troubling since CO2 has long been known as the most potent heat trapping component of the atmosphere. The sobering milestone was recorded from the Mauna Loa Observatory in Hawaii — monitoring has been underway at the site since the mid-1950s. We live in a world of brands, pitches, advertising, promotions, PR, consumer research, product placement, focus groups, and 24/7 spin. So, it should come as no surprise that even that ubiquitous and utilitarian listing of food and drink items from your local restaurant — the menu — would come in for some 21st century marketing treatment.

We live in a world of brands, pitches, advertising, promotions, PR, consumer research, product placement, focus groups, and 24/7 spin. So, it should come as no surprise that even that ubiquitous and utilitarian listing of food and drink items from your local restaurant — the menu — would come in for some 21st century marketing treatment.