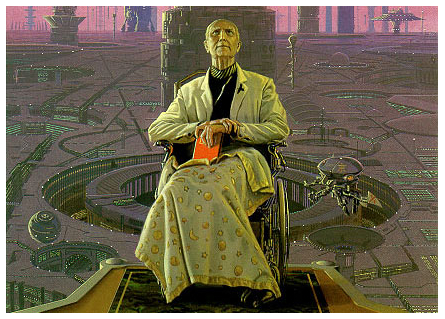

Christopher Hitchens, incisive, erudite and eloquent as ever.

Author, polemicist par excellence, journalist, atheist, Orwellian (as in, following in George Orwell’s footsteps), and literary critic, Christopher Hitchens shows us how the pen truly is mightier than the sword (though he might well argue to the contrary).

Though he is now fighting oesophageal cancer, Hitchens’s written word continues to provide clarity and insight. We excerpt below part of his recent, very personal essay for Vanity Fair, on the miracle (scientific, that is) and madness of modern medicine.

[div class=attrib]From Vanity Fair:[end-div]

Death has this much to be said for it:

You don’t have to get out of bed for it.

Wherever you happen to be

They bring it to you—free.

—Kingsley Amis

Pointed threats, they bluff with scorn

Suicide remarks are torn

From the fool’s gold mouthpiece the hollow horn

Plays wasted words, proves to warn

That he not busy being born is busy dying.

—Bob Dylan, “It’s Alright, Ma (I’m Only Bleeding)”

When it came to it, and old Kingsley suffered from a demoralizing and disorienting fall, he did take to his bed and eventually turned his face to the wall. It wasn’t all reclining and waiting for hospital room service after that—“Kill me, you fucking fool!” he once alarmingly exclaimed to his son Philip—but essentially he waited passively for the end. It duly came, without much fuss and with no charge.

Mr. Robert Zimmerman of Hibbing, Minnesota, has had at least one very close encounter with death, more than one update and revision of his relationship with the Almighty and the Four Last Things, and looks set to go on demonstrating that there are many different ways of proving that one is alive. After all, considering the alternatives …

Before I was diagnosed with esophageal cancer a year and a half ago, I rather jauntily told the readers of my memoirs that when faced with extinction I wanted to be fully conscious and awake, in order to “do” death in the active and not the passive sense. And I do, still, try to nurture that little flame of curiosity and defiance: willing to play out the string to the end and wishing to be spared nothing that properly belongs to a life span. However, one thing that grave illness does is to make you examine familiar principles and seemingly reliable sayings. And there’s one that I find I am not saying with quite the same conviction as I once used to: In particular, I have slightly stopped issuing the announcement that “Whatever doesn’t kill me makes me stronger.”

In fact, I now sometimes wonder why I ever thought it profound. It is usually attributed to Friedrich Nietzsche: Was mich nicht umbringt macht mich stärker. In German it reads and sounds more like poetry, which is why it seems probable to me that Nietzsche borrowed it from Goethe, who was writing a century earlier. But does the rhyme suggest a reason? Perhaps it does, or can, in matters of the emotions. I can remember thinking, of testing moments involving love and hate, that I had, so to speak, come out of them ahead, with some strength accrued from the experience that I couldn’t have acquired any other way. And then once or twice, walking away from a car wreck or a close encounter with mayhem while doing foreign reporting, I experienced a rather fatuous feeling of having been toughened by the encounter. But really, that’s to say no more than “There but for the grace of god go I,” which in turn is to say no more than “The grace of god has happily embraced me and skipped that unfortunate other man.”

Or take an example from an altogether different and more temperate philosopher, nearer to our own time. The late Professor Sidney Hook was a famous materialist and pragmatist, who wrote sophisticated treatises that synthesized the work of John Dewey and Karl Marx. He too was an unrelenting atheist. Toward the end of his long life he became seriously ill and began to reflect on the paradox that—based as he was in the medical mecca of Stanford, California—he was able to avail himself of a historically unprecedented level of care, while at the same time being exposed to a degree of suffering that previous generations might not have been able to afford. Reasoning on this after one especially horrible experience from which he had eventually recovered, he decided that he would after all rather have died:

I lay at the point of death. A congestive heart failure was treated for diagnostic purposes by an angiogram that triggered a stroke. Violent and painful hiccups, uninterrupted for several days and nights, prevented the ingestion of food. My left side and one of my vocal cords became paralyzed. Some form of pleurisy set in, and I felt I was drowning in a sea of slime. In one of my lucid intervals during those days of agony, I asked my physician to discontinue all life-supporting services or show me how to do it.

The physician denied this plea, rather loftily assuring Hook that “someday I would appreciate the unwisdom of my request.” But the stoic philosopher, from the vantage point of continued life, still insisted that he wished he had been permitted to expire. He gave three reasons. Another agonizing stroke could hit him, forcing him to suffer it all over again. His family was being put through a hellish experience. Medical resources were being pointlessly expended. In the course of his essay, he used a potent phrase to describe the position of others who suffer like this, referring to them as lying on “mattress graves.”

If being restored to life doesn’t count as something that doesn’t kill you, then what does? And yet there seems no meaningful sense in which it made Sidney Hook “stronger.” Indeed, if anything, it seems to have concentrated his attention on the way in which each debilitation builds on its predecessor and becomes one cumulative misery with only one possible outcome. After all, if it were otherwise, then each attack, each stroke, each vile hiccup, each slime assault, would collectively build one up and strengthen resistance. And this is plainly absurd. So we are left with something quite unusual in the annals of unsentimental approaches to extinction: not the wish to die with dignity but the desire to have died.

[div class=attrib]Read the entire article here.[end-div]

[div class=attrib]Image: Christopher Hitchens, 2010. Courtesy of Wikipedia.[end-div]

A popular stereotype suggests that we become increasingly conservative in our values as we age. Thus, one would expect that older voters would be more likely to vote for Republican candidates. However, a recent social study debunks this view.

[div class=attrib]From the New Scientist:[end-div]

The social standing of atheists seems to be on the rise. Back in December we

Seventeenth century polymath Blaise Pascal had it right when he remarked, “Distraction is the only thing that consoles us for our miseries, and yet it is itself the greatest of our miseries.”

Let’s face it, taking money out of politics in the United States, especially since the 2010 Supreme Court Decision (

Having just posted

The holidays approach, which for many means spending a more than usual amount of time with extended family and distant relatives. So, why talk face-to-face when you could text Great Uncle Aloysius instead?

For adults living in North America, the answer is that they would probably prefer a rapist teacher as a babysitter over an atheistic one. Startling as that may seem, the conclusion is backed by some real science, excerpted below.

In recent years narcissism has been getting a bad rap. So much so that Narcissistic Personality Disorder (NPD) was slated for removal from the 2013 edition of the Diagnostic and Statistical Manual of Mental Disorders, the DSM-V. The DSM-V is the professional reference guide published by the American Psychiatric Association (APA). Psychiatrists and clinical psychologists had decided that they needed only five fundamental types of personality disorder: anti-social, avoidant, borderline, obsessive-compulsive and schizotypal. Hence no need for NPD.

Six degrees of separation is a commonly held urban myth that, on average, everyone on Earth is six connections or fewer away from any other person. That is, through a chain of friend-of-a-friend (of a friend, etc.) relationships you can find yourself linked to the President, the Chinese Premier, a farmer on the steppes of Mongolia, Nelson Mandela, the editor of theDiagonal, and any one of the other 7 billion people on the planet.
