To date, the fastest speed ever traveled by humans is just under 25,000 miles per hour. This milestone was reached by the reentry capsule from the Apollo 10 moon mission, which hit 24,961 mph as it hurtled through Earth’s upper atmosphere. Yet this pales in comparison to the speed of light, which clocks in at 186,282 miles per second in a vacuum. A quick visit to the calculator puts Apollo 10 at 6.93 miles per second, or 0.0037 percent of the speed of light!
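
For anyone who would rather not reach for the calculator, the arithmetic above can be sketched in a few lines of Python. The figures are the ones quoted in the paragraph; the constant and function names are purely illustrative.

```python
# Figures quoted in the paragraph above.
APOLLO_10_MPH = 24_961       # peak reentry speed, miles per hour
LIGHT_SPEED_MPS = 186_282    # speed of light in a vacuum, miles per second
SECONDS_PER_HOUR = 3_600


def mph_to_miles_per_second(mph):
    """Convert miles per hour to miles per second."""
    return mph / SECONDS_PER_HOUR


def percent_of_light_speed(miles_per_second):
    """Express a speed in miles per second as a percentage of light speed."""
    return 100 * miles_per_second / LIGHT_SPEED_MPS


apollo_mps = mph_to_miles_per_second(APOLLO_10_MPH)
apollo_pct = percent_of_light_speed(apollo_mps)

print(f"{apollo_mps:.2f} miles per second")                  # 6.93 miles per second
print(f"{apollo_pct:.4f} percent of the speed of light")     # 0.0037 percent of the speed of light
```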

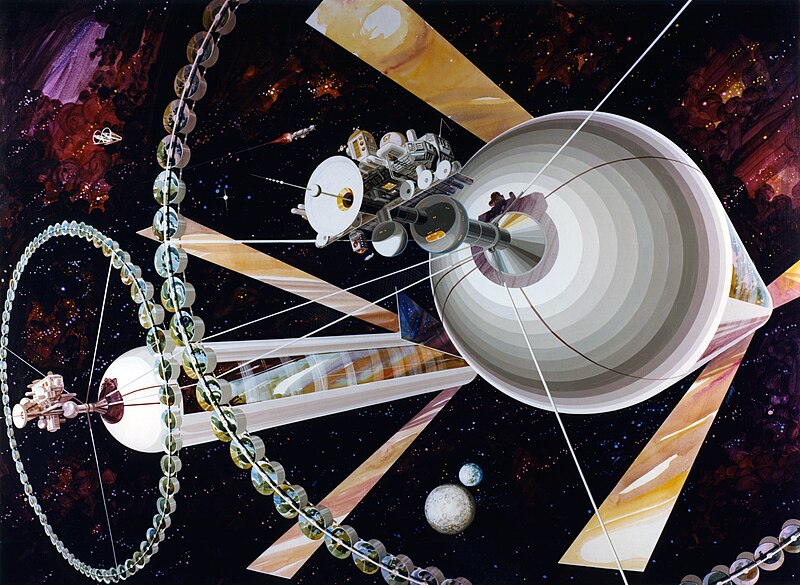

Despite our very pedestrian speeds, many dream of a future where humans might reach the stars, powered by some kind of “warp drive” (yes, Star Trek comes to mind). A handful of researchers at NASA are actively pondering this today. However, our limited technology, combined with our incomplete understanding of the workings of the universe, suggests that an Alcubierre-like approach is still centuries away from our grasp.

From the New York Times:

Beyond the security gate at the Johnson Space Center’s 1960s-era campus here, inside a two-story glass and concrete building with winding corridors, there is a floating laboratory.

Harold G. White, a physicist and advanced propulsion engineer at NASA, beckoned toward a table full of equipment there on a recent afternoon: a laser, a camera, some small mirrors, a ring made of ceramic capacitors and a few other objects.

He and other NASA engineers have been designing and redesigning these instruments, with the goal of using them to slightly warp the trajectory of a photon, changing the distance it travels in a certain area, and then observing the change with a device called an interferometer. So sensitive is their measuring equipment that it was picking up myriad earthly vibrations, including people walking nearby. So they recently moved into this lab, which floats atop a system of underground pneumatic piers, freeing it from seismic disturbances.

The team is trying to determine whether faster-than-light travel — warp drive — might someday be possible.

Warp drive. Like on “Star Trek.”

“Space has been expanding since the Big Bang 13.7 billion years ago,” said Dr. White, 43, who runs the research project. “And we know that when you look at some of the cosmology models, there were early periods of the universe where there was explosive inflation, where two points would’ve went receding away from each other at very rapid speeds.”

“Nature can do it,” he said. “So the question is, can we do it?”

Einstein famously postulated that, as Dr. White put it, “thou shalt not exceed the speed of light,” essentially setting a galactic speed limit. But in 1994, a Mexican physicist, Miguel Alcubierre, theorized that faster-than-light speeds were possible in a way that did not contradict Einstein, though Dr. Alcubierre did not suggest anyone could actually construct the engine that could accomplish that.

His theory involved harnessing the expansion and contraction of space itself. Under Dr. Alcubierre’s hypothesis, a ship still couldn’t exceed light speed in a local region of space. But a theoretical propulsion system he sketched out manipulated space-time by generating a so-called “warp bubble” that would expand space on one side of a spacecraft and contract it on another.

“In this way, the spaceship will be pushed away from the Earth and pulled towards a distant star by space-time itself,” Dr. Alcubierre wrote. Dr. White has likened it to stepping onto a moving walkway at an airport.

But Dr. Alcubierre’s paper was purely theoretical, and suggested insurmountable hurdles. Among other things, it depended on large amounts of a little understood or observed type of “exotic matter” that violates typical physical laws.

Dr. White believes that advances he and others have made render warp speed less implausible. Among other things, he has redesigned the theoretical warp-traveling spacecraft — and in particular a ring around it that is key to its propulsion system — in a way that he believes will greatly reduce the energy requirements.

Read the entire article here.

Camera aficionados will find themselves lamenting the demise of the film advance. Now that the world has moved on from film to digital, you will no longer hear that distinctive mechanical sound as you wind on the film and hope the teeth on the spool engage the plastic of the film.

By most accounts the internet is home to around 650 million websites, of which around 200 million are active. About 8,000 new websites go live every hour of every day.

It sounds fanciful, and maybe it is. But Musk is not the only one working on ultra-fast land-based transportation systems. And if anyone can turn an idea like this into reality, it might just be the man who has spent the past decade revolutionizing electric cars and space transport. Don’t be surprised if the biggest obstacles to the Hyperloop turn out to be bureaucratic rather than technological. After all, we’ve known how to build bullet trains for half a century, and look how far that has gotten us. Still, a nation can dream, and as long as we’re dreaming, why not dream about something way cooler than what Japan and China are already working on?

There is no doubting that technology’s grasp finds us at increasingly younger ages. No longer is it just our teens constantly mesmerized by status updates on their mobiles, and not just our “in-betweeners” addicted to “facetiming” with their BFFs. Now our technologies are fast becoming the tools of choice for our kindergarteners and pre-K kids. Some parents lament.

Technology is altering the lives of us all. Often it is a positive influence, offering its users tremendous benefits from time-saving to life-extension. However, the relationship of technology to our employment is more complex and usually detrimental.

Soon, courtesy of Amazon, Google and other retail giants, and of course lubricated by the likes of the ubiquitous UPS and FedEx trucks, you may be able to dispense with the weekly or even daily trip to the grocery store. Amazon is expanding a trial of its same-day grocery delivery service, and others are following suit in select local and regional tests.

Paradoxically the law and common sense often seem to be at odds. Justice may still be blind, at least in most open democracies, but there seems to be no question as to the stupidity of much of our law.

Really, it was only a matter of time. First, digital cameras killed off their film-dependent predecessors and then sounded the death knell for Kodak. Now social media and the #hashtag are doing the same to the professional photographer.

History will probably show that humans are the likely cause of the mass disappearance and death of honey bees around the world.

“There are three kinds of lies: lies, damned lies, and statistics”, goes the adage popularized by author Mark Twain.

“… But You Can Never Leave”. So goes one of the most memorable of lyrical phrases from The Eagles (Hotel California).

Good customer service once meant that a store or service employee would know you by name. This person would know your previous purchasing habits and your preferences; this person would know the names of your kids and your dog. Great customer service once meant that an employee could use this knowledge to anticipate your needs or personalize a specific deal. Well, this type of service still exists — in some places — but many businesses have outsourced it to offshore call center personnel or to machines, or both. Service may seem personal, but it’s not — service is customized to suit your profile, but it’s not personal in the same sense that once held true.

Ubiquitous connectivity for, and between, individuals and businesses is widely held to be beneficial for all concerned. We can connect rapidly and reliably with family, friends and colleagues from almost anywhere to anywhere via a wide array of internet-enabled devices. Yet, as these devices become more powerful and interconnected, and enabled with location-based awareness, such as GPS (Global Positioning System) services, we are likely to face an increasingly acute dilemma: connectedness or privacy?

It’s official: teens can’t stay off social media for more than 15 minutes. It’s no secret that many kids aged between 8 and 18 spend most of their time texting, tweeting and checking their real-time social status. The profound psychological and sociological consequences of this behavior will only start to become apparent ten to fifteen years from now. In the meantime, researchers are finding a general degradation in kids’ memory skills from using social media and multi-tasking while studying.

The collective IQ of Google, the company, inched up a few notches in January of 2013 when they hired

By all accounts, serial entrepreneur, inventor and futurist Ray Kurzweil is Google’s most famous employee, eclipsing even co-founders Larry Page and Sergey Brin. As an inventor he can lay claim to some impressive firsts, such as the flatbed scanner, optical character recognition and the music synthesizer. As a futurist, for which he is now more recognized in the public consciousness, he ponders longevity, immortality and the human brain.

Martin Cooper. You may not know that name, but you and a fair proportion of the world’s 7 billion inhabitants have surely held or dropped or prodded or cursed his offspring.

Robert Hof argues that the time is ripe for Steve Jobs’ corporate legacy to reinvent the TV. Apple transformed the personal computer industry, the mobile phone market and the music business. Clearly the company has all the components in place to assemble another innovation.