Following the recent horrendous mass murders in Mali, Paris, and Lebanon (and elsewhere), there has been a visible outpouring of grief on a worldwide scale. Many of us, while removed from direct involvement and having no direct connection to the victims and their families, still feel sadness, pain and loss.

The empathy expressed by strangers or distant acquaintances for those even remotely connected with the violence is tangible and genuine. For instance, we foreigners may seek out a long-lost French colleague to express our concern and condolences for Mali / France and all Bamakoans / Parisians. There is genuine concern and a sense of connection at a personal level, however frail that connection may be.

But what is going on when Amazon, Apple, eBay, Uber and other corporations wave their digital banners of solidarity — expressing grief — on the home pages of their websites?

Jessica Reed over at the Guardian makes some interesting observations. She is absolutely right to admonish those businesses that would seek to profit from such barbaric acts. In fact, we should boycott any found to be doing so. Some are taking real and positive action, such as enabling free communication or providing free transportation and products. However, she is also correct to warn us of the growing, insidious tendency to anthropomorphize and project sentience onto corporations.

Brands and companies increasingly love us, they sympathize, and now they grieve with us. But there is a vast difference between being hugged in sympathy by the boss of your local deli and the faceless, impersonal digital flag-waving of a dotcom home page.

Who knows where this will lead us decades from now: perhaps if there is money to be made, big corporations will terrorize us as well.

From Jessica Reed:

The pain is shared by all of us, but a golden rule should apply: don’t capitalise on grief, don’t profit from it. Perhaps this is why big companies imposing their sympathy on the rest of us leaves a bitter taste in my mouth: it is hard for me to see these gestures as anything but profiteering.

Companies are now posing as entities capable of compassion, never mind that they cannot possibly speak for all of its employees. This also brings us a step closer to endowing them with a human trait: the capacity to express emotions. They think they’re sentient.

If this sounds crazy, it’s because it is.

In the US, the debate about corporate personhood is ongoing. The supreme court already ruled that corporations are indeed people in some contexts: they have been granted the right to spend money on political issues, for example, as well as the right to refuse to cover birth control in their employee health plans on religious grounds.

Armed with these rulings, brands continue to colonise our lives, accompanying us from the cradle to the grave. They see you grow up, they see you die. They’re benevolent. They’re family.

Looking for someone to prove me wrong, I asked Ed Zitron, a PR chief executive, about these kinds of tactics. Zitron points out that tech companies are, in some cases, performing a useful service – as in Facebook’s “safety check”, T-Mobile and Verizon’s free communication with France, and Airbnb’s decision to compensate hosts for letting people stay longer for free. Those are tangible gestures – the equivalent of bringing grief-stricken neighbours meals to sustain them, rather than sending a hastily-written card.

Anything else, he says, is essentially good-old free publicity: “an empty gesture, a non-movement, a sanguine pretend-help that does nothing other than promote themselves”.

It’s hard to disagree with him and illustrates how far brands have further infiltrated our lives since the publication of Naomi Klein’s No Logo, which documented advertising as an industry not only interested in selling products, but also a dream and a message. We can now add “grief surfing” to the list.

A few years back, Jon Stewart mocked this sorry state of affairs:

If only there were a way to prove that corporations are not people, show their inability to love, to show that they lack awareness of their own mortality, to see what they do when you walk in on them masturbating …

Turns out we can’t – companies will love you, in sickness and in health, for better and for worse, whether you want it or not.

Read the entire article here.

Image: Screen grab from Amazon.fr, November 17, 2015.

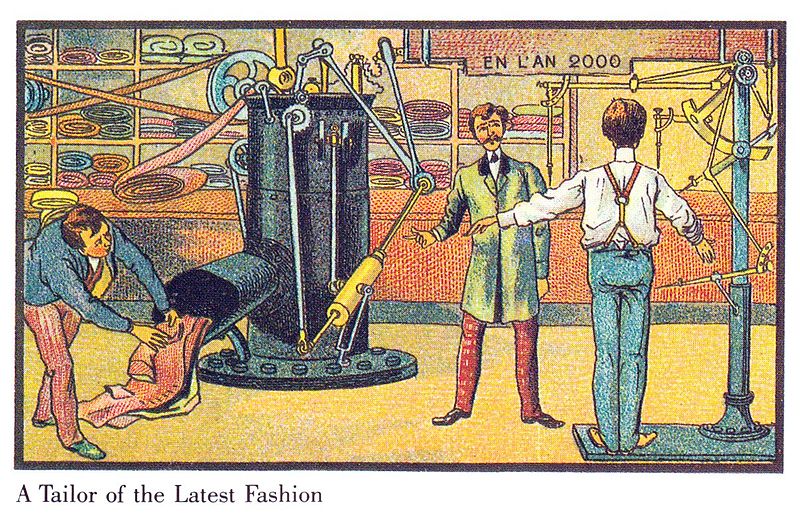

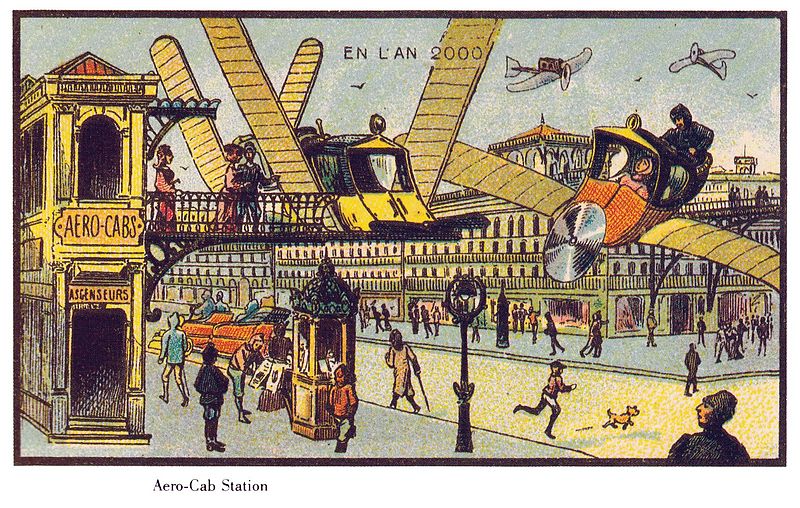

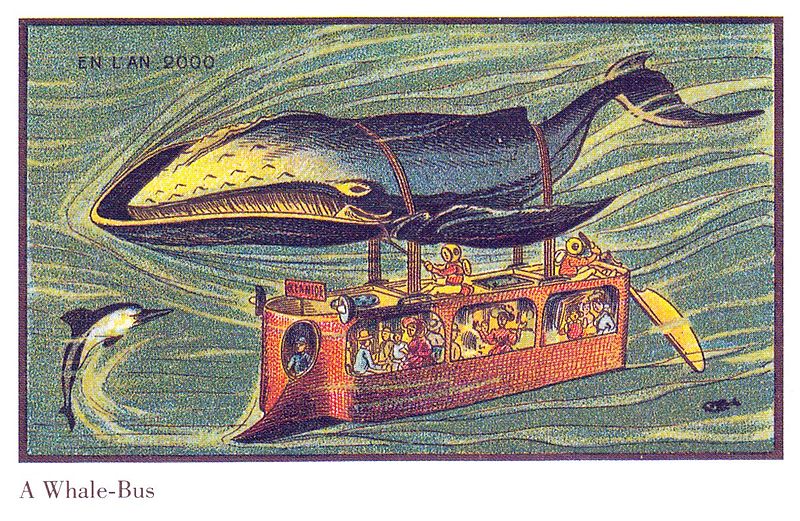

Just over a hundred years ago, at the turn of the 20th century, Jean-Marc Côté and some of his fellow French artists were commissioned to imagine what the world would look like in 2000. Their colorful sketches and paintings portrayed some interesting inventions, though all seem grounded in familiar principles and incremental innovations: mechanical helpers, ubiquitous propellers and wings. Interestingly, none of these artist-futurists imagined a world beyond Victorian dress, gender inequality and war. But these are gems nonetheless.

Some of their works found their way into cigar boxes and cigarette cases; others were exhibited at the 1900 World Exhibition in Paris. My three favorites: a Tailor of the Latest Fashion, the Aero-cab Station and the Whale Bus. See the full complement of these remarkable futuristic visions at the Public Domain Review, and check out the House Rolling Through the Countryside and At School.

Images courtesy of the Public Domain Review, a project of the Open Knowledge Foundation. Public Domain.