A mere four years ago Uber was being used mostly by Silicon Valley engineers to reserve local limo rides. Now, the Uber app is in the hands of millions of people and is being used to book car transportation in sixty cities on six continents. Google recently invested $258 million in the company, which values Uber at around $3.5 billion. Those who have used the service, drivers and passengers alike, swear by it; the service is convenient and the app is simple and engaging. But that doesn’t seem to justify the enormous valuation. So, what’s going on?

From Wired:

When Uber cofounder and CEO Travis Kalanick was in sixth grade, he learned to code on a Commodore 64. His favorite things to program were videogames. But in the mid-’80s, getting the machine to do what he wanted still felt a lot like manual labor. “Back then you would have to do the graphics pixel by pixel,” Kalanick says. “But it was cool because you were like, oh my God, it’s moving across the screen! My monster is moving across the screen!” These days, Kalanick, 37, has lost none of his fascination with watching pixels on the move.

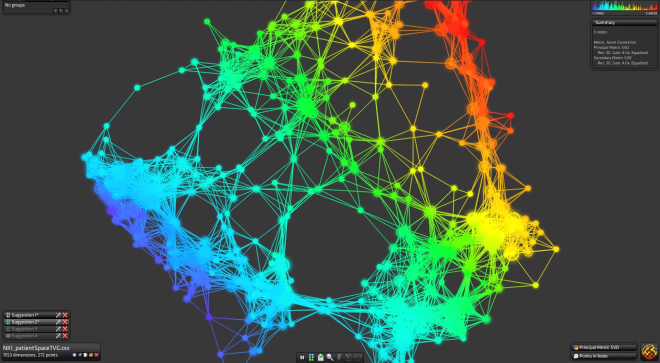

In Uber’s San Francisco headquarters, a software tool called God View shows all the vehicles on the Uber system moving at once. On a laptop web browser, tiny cars on a map show every Uber driver currently on the city’s streets. Tiny eyeballs on the same map show the location of every customer currently looking at the Uber app on their smartphone. In a way, the company anointed by Silicon Valley’s elite as the best hope for transforming global transportation couldn’t have a simpler task: It just has to bring those cars and those eyeballs together — the faster and cheaper, the better.

“Uber should feel magical to the customer,” Kalanick says one morning in November. “They just push the button and the car comes. But there’s a lot going on under the hood to make that happen.”

A little less than four years ago, when Uber was barely more than a private luxury car service for Silicon Valley’s elite techies, Kalanick sat watching the cars crisscrossing San Francisco on God View and had a Matrix-y moment when he “started seeing the math.” He was going to make the monster move — not just across the screen but across cities around the globe. Since then, Uber has expanded to some 60 cities on six continents and grown to at least 400 employees. Millions of people have used Uber to get a ride, and revenue has increased at a rate of nearly 20 percent every month over the past year.

The company’s speedy ascent has taken place in parallel with a surge of interest in the so-called sharing economy — using technology to connect consumers with goods and services that would otherwise go unused. Kalanick had the vision to see potential profit in the empty seats of limos and taxis sitting idle as drivers wait for customers to call.

But Kalanick doesn’t put on the airs of a visionary. In business he’s a brawler. Reaching Uber’s goals has meant digging in against the established bureaucracy in many cities, where giving rides for money is heavily regulated. Uber has won enough of those fights to threaten the market share of the entrenched players. It not only offers a more efficient way to hail a ride but gives drivers a whole new way to see where demand is bubbling up. In the process, Uber seems capable of opening up sections of cities that taxis and car services never bothered with before.

In San Francisco, Uber has become its own noun — you “get an Uber.” But to make it a verb — to get to the point where everyone Ubers the same way they Google — the company must outperform on transportation the same way Google does on search.

No less than Google itself believes Uber has this potential. In a massive funding round in August led by the search giant’s venture capital arm, Uber received $258 million. The investment reportedly valued Uber at around $3.5 billion and pushed the company to the forefront of speculation about the next big tech IPO — and Kalanick as the next great tech leader.

The deal set Silicon Valley buzzing about what else Uber could become. A delivery service powered by Google’s self-driving cars? The new on-the-ground army for ferrying all things Amazon? Jeff Bezos also is an Uber investor, and Kalanick cites him as an entrepreneurial inspiration. “Amazon was just books and then some CDs,” Kalanick says. “And then they’re like, you know what, let’s do frickin’ ladders!” Then came the Kindle and Amazon Web Services — examples, Kalanick says, of how an entrepreneur’s “creative pragmatism” can defy expectations. He clearly enjoys daring the world to think of Uber as merely another way to get a ride.

“We feel like we’re still realizing what the potential is,” he says. “We don’t know yet where that stops.”

From the back of an Uber-summoned Mercedes GL450 SUV, Kalanick banters with the driver about which make and model will replace the discontinued Lincoln Town Car as the default limo of choice.

Mercedes S-Class? Too expensive, Kalanick says. Cadillac XTS? Too small.

So what is it?

“OK, I’m glad you asked,” Kalanick says. “This is going to blow you away, dude. Are you ready? Have you seen the 2013 Ford Explorer?” Spacious, like a Lexus crossover, but way cheaper.

As Uber becomes a dominant presence in urban transportation, it’s easy to imagine the company playing a role in making this prophecy self-fulfilling. It’s just one more sign of how far Uber has come since Kalanick helped create the company in 2009. In the beginning, it was just a way for him and his cofounder, StumbleUpon creator Garrett Camp, and their friends to get around in style.

They could certainly afford it. At age 21, Kalanick, born and raised in Los Angeles, had started a Napster-like peer-to-peer file-sharing search engine called Scour that got him sued for a quarter-trillion dollars by major media companies. Scour filed for bankruptcy, but Kalanick cofounded Red Swoosh to serve digital media over the Internet for the same companies that had sued him. Akamai bought the company in 2007 in a stock deal worth $19 million.

By the time he reached his thirties, Kalanick was a seasoned veteran in the startup trenches. But part of him wondered if he still had the drive to build another company. His breakthrough came when he was watching, of all things, a Woody Allen movie. The film was Vicky Cristina Barcelona, which Allen made in 2008, when he was in his seventies. “I’m like, that dude is old! And he is still bringing it! He’s still making really beautiful art. And I’m like, all right, I’ve got a chance, man. I can do it too.”

Kalanick charged into Uber and quickly collided with the muscular resistance of the taxi and limo industry. It wasn’t long before San Francisco’s transportation agency sent the company a cease-and-desist letter, calling Uber an unlicensed taxi service. Kalanick and Uber neither ceased nor desisted, arguing vehemently that the company merely made the software that connected drivers and riders. The company kept offering rides and building its stature among tech types (a constituency city politicians have been loath to alienate) as the cool way to get around.

Uber has since faced the wrath of government and industry in other cities, notably New York, Chicago, Boston, and Washington, DC.

One councilmember opposed to Uber in the nation’s capital was self-described friend of the taxi industry Marion Barry (yes, that Marion Barry). Kalanick, in DC to lobby on Uber’s behalf, told The Washington Post he had an offer for the former mayor: “I will personally chauffeur him myself in his silver Jaguar to work every day of the week, if he can just make this happen.” Though that ride never happened, the council ultimately passed a legal framework that Uber called “an innovative model for city transportation legislation across the country.”

Though Kalanick clearly relishes a fight, he lights up more when talking about Uber as an engineering problem. To fulfill its promise—a ride within five minutes of the tap of a smartphone button—Uber must constantly optimize the algorithms that govern, among other things, how many of its cars are on the road, where they go, and how much a ride costs. While Uber offers standard local rates for its various options, times of peak demand send prices up, which Uber calls surge pricing. Some critics call it price-gouging, but Kalanick says the economics are far less insidious. To meet increased demand, drivers need extra incentive to get out on the road. Since they aren’t employees, the marketplace has to motivate them. “Most things are dynamically priced,” Kalanick points out, from airline tickets to happy hour cocktails.

Kalanick employs a data-science team of PhDs from fields like nuclear physics, astrophysics, and computational biology to grapple with the number of variables involved in keeping Uber reliable. They stay busy perfecting algorithms that are dependable and flexible enough to be ported to hundreds of cities worldwide. When we met, Uber had just gone live in Bogotá, Colombia, as well as Shanghai, Dubai, and Bangalore.

And it’s no longer just black cars and yellow cabs. A newer option, UberX, offers lower-priced rides from drivers piloting their personal vehicles. According to Uber, only certain late-model cars are allowed, and drivers undergo the same background screening as others in the service. In an Uber-fied version of the future, far fewer people may own cars but everybody would have access to them. “You know, I hadn’t driven for a year, and then I drove over the weekend,” Kalanick says. “I had to jump-start my car to get going. It was a little awkward. So I think that’s a sign.”

Back at Uber headquarters, burly drivers crowd the lobby while nearby, coders sit elbow to elbow. Like other San Francisco startups on the cusp of something bigger, Uber is preparing to move to a larger space. Its new digs will be in the same building as Square, the mobile payments company led by Twitter mastermind Jack Dorsey. Twitter’s offices are across the street. The symbolism is hard to miss: Uber is joining the coterie of companies that define San Francisco’s latest tech boom.

Still, part of that image depends on Uber’s outsize potential to expand what it does. The logistical numbers it crunches to make it easier for people to get around would seem a natural fit for a transition into a delivery service. Uber coyly fuels that perception with publicity stunts like ferrying ice cream and barbecue to customers through its app. It’s easy to imagine such promotions quietly doubling as proofs of concept. News of Google’s massive investment prompted visions of a push-button delivery service powered by Google’s self-driving cars.

Kalanick acknowledges that the most recent round of investment is intended to fund Uber’s growth, but that’s as far as he’ll go. “In a lot of ways, it’s not the money that allows you to do new things. It’s the growth and the ability to find things that people want and to use your creativity to target those,” he says. “There are a whole hell of a lot of other things that we can do and intend on doing.”

But the calculus of delivery may not even be the hardest part. If Uber were to expand into delivery, its competition—for now other ride-sharing startups such as Lyft, Sidecar, and Hailo—would include Amazon, eBay, and Walmart too.

One way to skirt rivalry with such giants is to offer itself as the back-end technology that can power same-day online retail. In early fall, Google launched its Shopping Express service in San Francisco. The program lets customers shop online at local stores through a Google-powered app; Google sends a courier with their deliveries the same day.

David Krane, the Google Ventures partner who led the investment deal, says there’s nothing happening between Uber and Shopping Express. He also says self-driving delivery vehicles are nowhere near ready to be looked at seriously as part of Uber. “Those meetings will happen when the technology is ready for such discussion,” he says. “That is many moons away.”

Read the entire article here.

Image courtesy of Uber.