Not far from London’s beautiful Hampstead Heath lies The Bishops Avenue. From the 1930s until the mid-1970s this mile-long street was the archetypal symbol of new wealth; nouveau riche millionaires made it the most sought-after, and best-known, residential address in the nation (of course, “old money” still preferred its stately mansions and castles). Since then The Bishops Avenue has changed, with many properties now in the hands of billionaires, hedge fund investors and oil-rich plutocrats.

From the Telegraph:

You can tell when a property is out of your price bracket if the estate agent’s particulars come not on a sheet of A4 but are presented in a 50-page hardback coffee-table book, with a separate section for the staff quarters.

Other giveaway signs, in case you were in any doubt, are the fact the lift is leather-lined, there are 62 internal CCTV cameras, a private cinema, an indoor swimming pool, sauna, steam room, and a series of dressing rooms – “for both summer and winter”, the estate agent informs me – which are larger than many central London flats.

But then any property on The Bishops Avenue in north London is out of most people’s price bracket – such as number 62, otherwise known as Jersey House, which is on the market for £38 million. I am being shown around by Grant Alexson, from Knight Frank estate agents, both of us in our socks to ensure that we do not grubby the miles of carpets or marble floors in the bathrooms (all of which have televisions set into the walls).

My hopes of picking up a knock-down bargain had been raised after the news this week that one property on The Bishops Avenue, Dryades, had been repossessed. The owners, the family of the former Pakistan privatisation minister Waqar Ahmed Khan, were unable to settle a row with their lender, Deutsche Bank.

It is not the only property in the hands of the receivers on this mile-long stretch. One was tied up in a Lehman Brothers property portfolio and remains boarded up. Meanwhile, the Saudi royal family, which bought 10 properties during the First Gulf War as boltholes in case Saddam Hussein invaded, has offloaded the entire package for a reported £80 million in recent weeks. And the most expensive property on the market, Heath Hall, had £35 million knocked off the asking price (taking it down to a mere £65 million).

This has all thrown the spotlight once again on this strange road, which has been nicknamed “Millionaires’ Row” since the 1930s – when a million meant something. Now, it is called “Billionaires’ Row”. It was designed, from its earliest days, to be home to the very wealthy. One of the first inhabitants was George Sainsbury, son of the supermarket founder; another was William Lyle, who used his sugar fortune to build a vast mansion in the Arts and Crafts style. Stars such as Gracie Fields also lived here.

But between the wars, the road became the butt of Music Hall comedians who joked about it being full of “des-reses” for the nouveaux riches such as Billy Butlin. Evelyn Waugh, the master of social nuance, made sure his swaggering newspaper baron Lord Copper of Scoop resided here. It was the 1970s, however, that saw the road vault from being home to millionaires to a pleasure ground for international plutocrats, who used their shipping or oil wealth to snap up properties, knock them down and build monstrous mansions in “Hollywood Tudor” style. Worse were the pastiches of Classical temples, the most notorious of which was built by the Turkish industrialist Halis Toprak, who decided the bath big enough to fit 20 people was not enough of a statement. So he slapped “Toprak Mansion” on the portico (causing locals to dub it “Top Whack Mansion”). It was sold a couple of years ago to the Kazakhstani billionairess Horelma Peramam, who renamed it Royal Mansion.

Perhaps the most famous of recent inhabitants was Lakshmi Mittal, the steel magnate, and for a long time Britain’s richest man. But he sold Summer Palace, for £38 million in 2011 to move to the much grander Kensington Palace Gardens, in the heart of London. The cast list became even more varied with the arrival of Salman Rushdie who hid behind bullet-proof glass and tycoon Asil Nadir, whose address is now HM Belmarsh Prison.

Of course, you can be hard-pressed to discover who owns these properties or how much anyone paid. These are not run-of-the-mill transactions between families moving home. Official Land Registry records reveal a complex web of deals between offshore companies. Miss Peramam holds Royal Mansion in the name of Hartwood Resources Company, registered in the British Virgin Islands, and the records suggest she paid closer to £40 million than the £50 million reported.

Alexson says the complexity of the deals is not just about avoiding stamp duty (which is now at 7 per cent for properties over £2 million). “Discretion first, tax second,” he argues. “Look, some of the Middle Eastern families own £500 billion. Stamp duty is not an issue for them.” Still, new tax rules this year, which increase stamp duty to 15 per cent if the property is bought through an offshore vehicle, have had an effect, according to Alexson, who says that the last five houses he sold have been bought by an individual, not a company.

But there is little sign of these individuals on the road itself. Walking down the main stretch of the Avenue from the beautiful Hampstead Heath to the booming A1, which bisects the road, more than 10 of these 39 houses are either boarded up or in a state of severe disrepair. Behind the high gates and walls, moss and weeds climb over the balustrades. Many others are clearly uninhabited, except for a crew of builders and a security guard. (Barnet council defends all the building work it has sanctioned, with Alexson pointing out that the new developments are invariably rectifying the worst atrocities of the 1980s.)

Read the entire article here.

Image: Toprak Mansion (now known as Royal Mansion), The Bishops Avenue. Courtesy of Daily Mail.

What better way to get around your post-apocalyptic neighborhood after the end-of-times than on a trusty bicycle? Let’s face it, a simple human-powered, ride-share bike is likely to fare much better in a dystopian landscape than a gas-guzzling truck, an electric vehicle or even a fuel-efficient moped.

The beef was grown in a lab by a pioneer in this arena, Mark Post of Maastricht University in the Netherlands. My colleague Henry Fountain has reported the details in a fascinating news article. Here’s an excerpt followed by my thoughts on next steps in what I see as an important area of research and development:

According to the three people who ate it, the burger was dry and a bit lacking in flavor. One taster, Josh Schonwald, a Chicago-based author of a book on the future of food, said “the bite feels like a conventional hamburger” but that the meat tasted “like an animal-protein cake.”

But taste and texture were largely beside the point: The event, arranged by a public relations firm and broadcast live on the Web, was meant to make a case that so-called in-vitro, or cultured, meat deserves additional financing and research...

Dr. Post, one of a handful of scientists working in the field, said there was still much research to be done and that it would probably take 10 years or more before cultured meat was commercially viable. Reducing costs is one major issue: he estimated that if production could be scaled up, cultured beef made as this one burger was made would cost more than $30 a pound. The two-year project to make the one burger, plus extra tissue for testing, cost $325,000. On Monday it was revealed that Sergey Brin, one of the founders of Google, paid for the project. Dr. Post said Mr. Brin got involved because “he basically shares the same concerns about the sustainability of meat production and animal welfare.”

The enormous potential environmental benefits of shifting meat production, where feasible, from farms to factories were estimated in “Environmental Impacts of Cultured Meat Production,” a 2011 study in Environmental Science and Technology.

![](http://cdn.theatlantic.com/newsroom/img/posts/RTX12B04.jpg)

If you live somewhere rather toasty you know how painful your electricity bills can be during the summer months. So, wouldn’t it be good to have a system automatically find you the cheapest electricity when you need it most? Welcome to the artificially intelligent smarter smart grid.

New research models show just how precarious our planet’s climate really is. Runaway greenhouse warming would make a predicted 2-6 foot rise in average sea levels over the next 50-100 years seem like a puddle at the local splash pool.

It’s summer, which means lots of people driving every which way for family vacations.

The world of fiction is populated with hundreds of different genres — most of which were invented by clever marketeers anxious to ensure vampire novels (teen / horror) don’t live next to classic works (literary) on real or imagined (think Amazon) book shelves. So, it should come as no surprise to see a new category recently emerge: cli-fi.

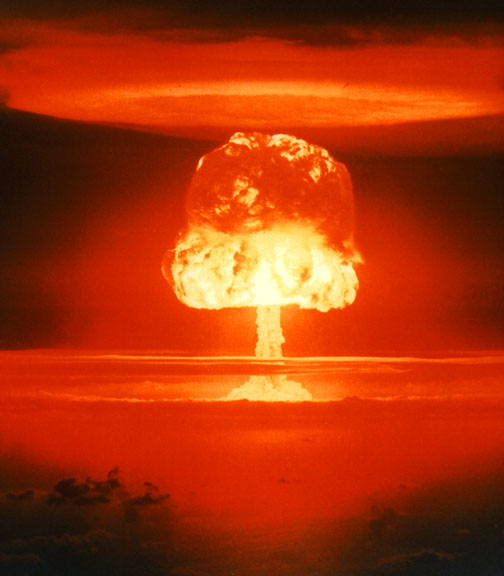

The Cold War between the former U.S.S.R. and the United States brought us the perfect acronym for the ultimate human “game” of brinkmanship: MAD, for mutually assured destruction.

It’s safe to suggest that most of us above a certain age (let’s say 30) wish to stay young. It is even safer to suggest that, in the absence of a solution to this first wish, many of us wish to age gracefully and happily. Yet most of us, especially in the West, age in a less dignified manner, accompanied by colorful medicines, lengthy tubes and unpronounceable procedures. We are collectively living longer, but the quality of those extra years leaves much to be desired.

For centuries biologists, zoologists and ecologists have been mapping the wildlife that surrounds us in the great outdoors. Now a group led by microbiologist Noah Fierer at the University of Colorado Boulder is pursuing flora and fauna in one of the last unexplored eco-systems — the home. (Not for the faint of heart).

Yesterday, May 10, 2013, scientists published new measurements of atmospheric carbon dioxide (CO2). For the first time in human history, CO2 levels reached a daily average of 400 parts per million (ppm). This is particularly troubling since CO2 has long been known as a major heat-trapping component of the atmosphere. The sobering milestone was recorded at the Mauna Loa Observatory in Hawaii, where monitoring has been underway since the late 1950s.
