For those of us seeking to live another 100 years or more, the news and hype of the last decade belonged to resveratrol. The molecule is believed to improve the functioning of specific biochemical pathways in the cell, which may improve cell repair and hinder the aging process. Resveratrol is found, in trace amounts, in grape skin (and hence wine), blueberries and raspberries. While proof remains scarce, this has not stopped the public from consuming large quantities of wine and berries.

Ironically, replicating the benefits found in mouse studies would require ingesting so much resveratrol that the wine alone would probably cause irreversible liver damage before any health benefits appeared. Oh well.

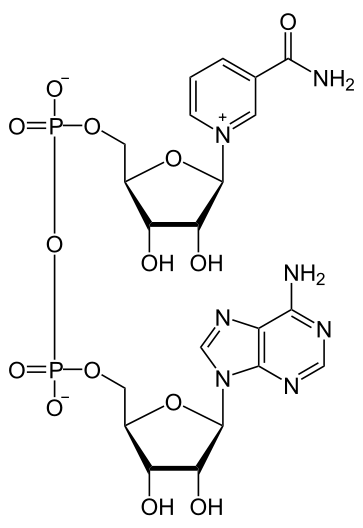

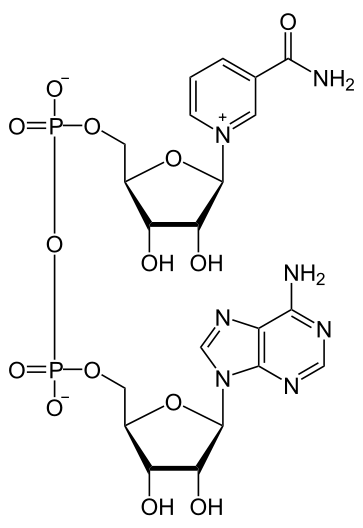

So, on to the next big thing, since aging cannot wait. It’s called NAD, or nicotinamide adenine dinucleotide. NAD performs several critical roles in the cell, one of which is energy metabolism. As we age, our cells show diminishing levels of NAD, and this is possibly linked to mitochondrial deterioration. Mitochondria are the cells’ energy factories, so keeping our mitochondria humming along is critical. Thus, hordes of researchers are now experimenting with NAD and related substances to see if they hold promise in postponing cellular demise.

From Scientific American:

Whenever I see my 10-year-old daughter brimming over with so much energy that she jumps up in the middle of supper to run around the table, I think to myself, “those young mitochondria.”

Mitochondria are our cells’ energy dynamos. Descended from bacteria that colonized other cells about 2 billion years ago, they get flaky as we age. A prominent theory of aging holds that decaying of mitochondria is a key driver of aging. While it’s not clear why our mitochondria fade as we age, evidence suggests that it leads to everything from heart failure to neurodegeneration, as well as the complete absence of zipping around the supper table.

Recent research suggests it may be possible to reverse mitochondrial decay with dietary supplements that increase cellular levels of a molecule called NAD (nicotinamide adenine dinucleotide). But caution is due: While there’s promising test-tube data and animal research regarding NAD boosters, no human clinical results on them have been published.

NAD is a linchpin of energy metabolism, among other roles, and its diminishing level with age has been implicated in mitochondrial deterioration. Supplements containing nicotinamide riboside, or NR, a precursor to NAD that’s found in trace amounts in milk, might be able to boost NAD levels. In support of that idea, half a dozen Nobel laureates and other prominent scientists are working with two small companies offering NR supplements.

The NAD story took off toward the end of 2013 with a high-profile paper by Harvard’s David Sinclair and colleagues. Sinclair, recall, achieved fame in the mid-2000s for research on yeast and mice that suggested the red wine ingredient resveratrol mimics anti-aging effects of calorie restriction. This time his lab made headlines by reporting that the mitochondria in muscles of elderly mice were restored to a youthful state after just a week of injections with NMN (nicotinamide mononucleotide), a molecule that naturally occurs in cells and, like NR, boosts levels of NAD.

It should be noted, however, that muscle strength was not improved in the NMN-treated mice; the researchers speculated that one week of treatment wasn’t enough to do that, despite signs that their age-related mitochondrial deterioration was reversed.

NMN isn’t available as a consumer product. But Sinclair’s report sparked excitement about NR, which was already on the market as a supplement called Niagen. Niagen’s maker, ChromaDex, a publicly traded Irvine, Calif., company, sells it to various retailers, which market it under their own brand names. In the wake of Sinclair’s paper, Niagen was hailed in the media as a potential blockbuster.

In early February, Elysium Health, a startup cofounded by Sinclair’s former mentor, MIT biologist Lenny Guarente, jumped into the NAD game by unveiling another supplement with NR. Dubbed Basis, it’s only offered online by the company. Elysium is taking no chances when it comes to scientific credibility. Its website lists a dream team of advising scientists, including five Nobel laureates and other big names such as the Mayo Clinic’s Jim Kirkland, a leader in geroscience, and biotech pioneer Lee Hood. I can’t remember a startup with more stars in its firmament.

A few days later, ChromaDex reasserted its first-comer status in the NAD game by announcing that it had conducted a clinical trial demonstrating that a single dose of NR resulted in statistically significant increases in NAD in humans, the first evidence that supplements could really boost NAD levels in people. Details of the study won’t be out until it’s reported in a peer-reviewed journal, the company said. (ChromaDex also brandishes Nobel credentials: Roger Kornberg, a Stanford professor who won the Chemistry prize in 2006, chairs its scientific advisory board. He’s the son of Nobel laureate Arthur Kornberg, who, ChromaDex proudly notes, was among the first scientists to study NR some 60 years ago.)

The NAD findings tie into the ongoing story about enzymes called sirtuins, which Guarente, Sinclair and other researchers have implicated as key players in conferring the longevity and health benefits of calorie restriction. Resveratrol, the wine ingredient, is thought to rev up one of the sirtuins, SIRT1, which appears to help protect mice on high doses of resveratrol from the ill effects of high-fat diets. A slew of other health benefits have been attributed to SIRT1 activation in hundreds of studies, including several small human trials.

Here’s the NAD connection: In 2000, Guarente’s lab reported that NAD fuels the activity of sirtuins, including SIRT1: the more NAD there is in cells, the more SIRT1 does beneficial things. One of those things is to induce formation of new mitochondria. NAD can also activate another sirtuin, SIRT3, which is thought to keep mitochondria running smoothly.

Read the entire article here.

Image: Structure of nicotinamide adenine dinucleotide, oxidized (NAD+). Courtesy of Wikipedia. Public Domain.

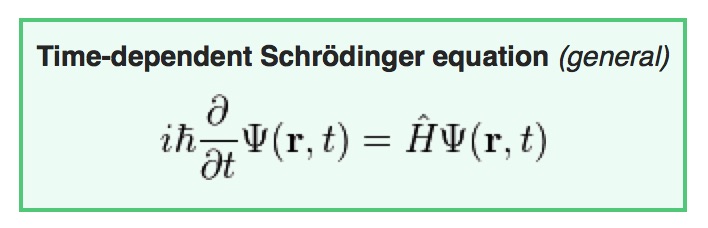

Once in every while I have to delve into the esoteric world of

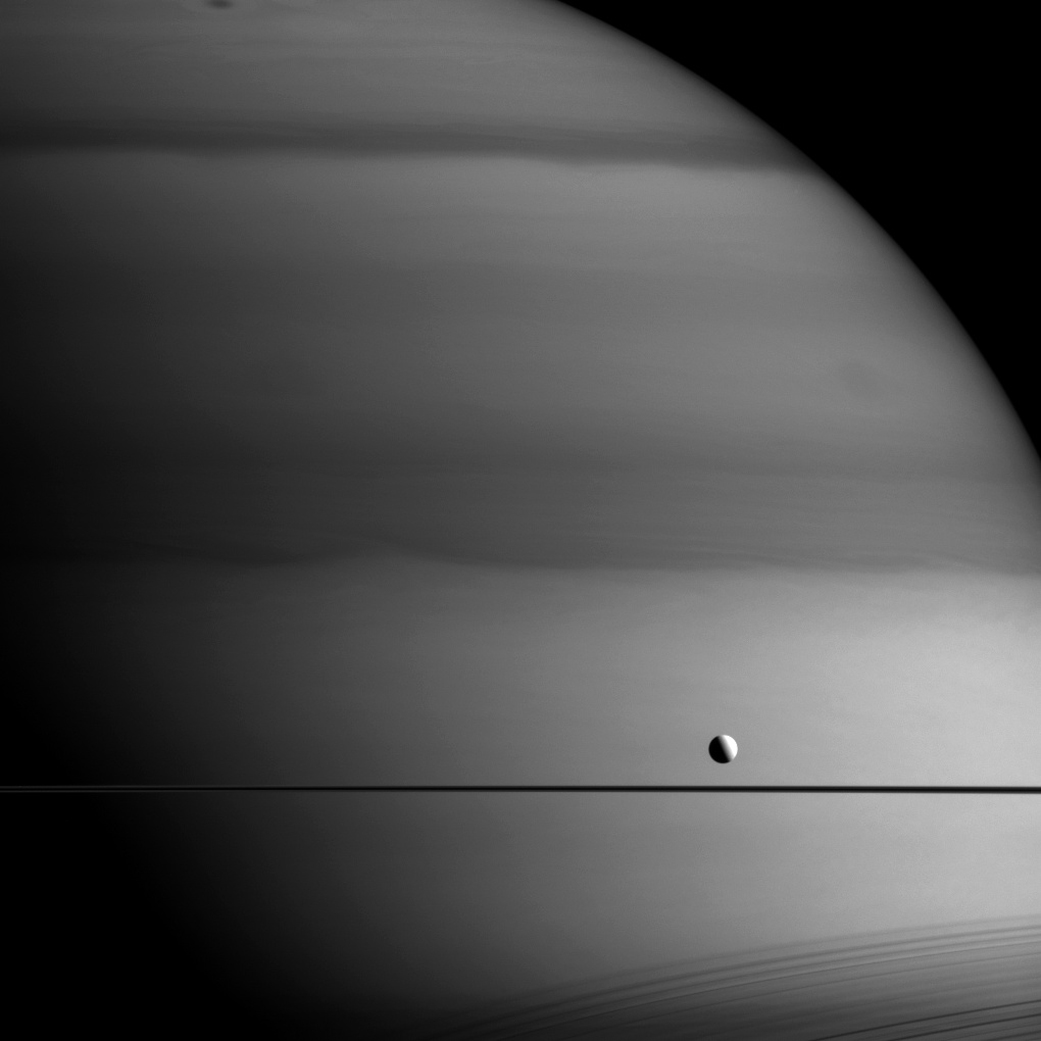

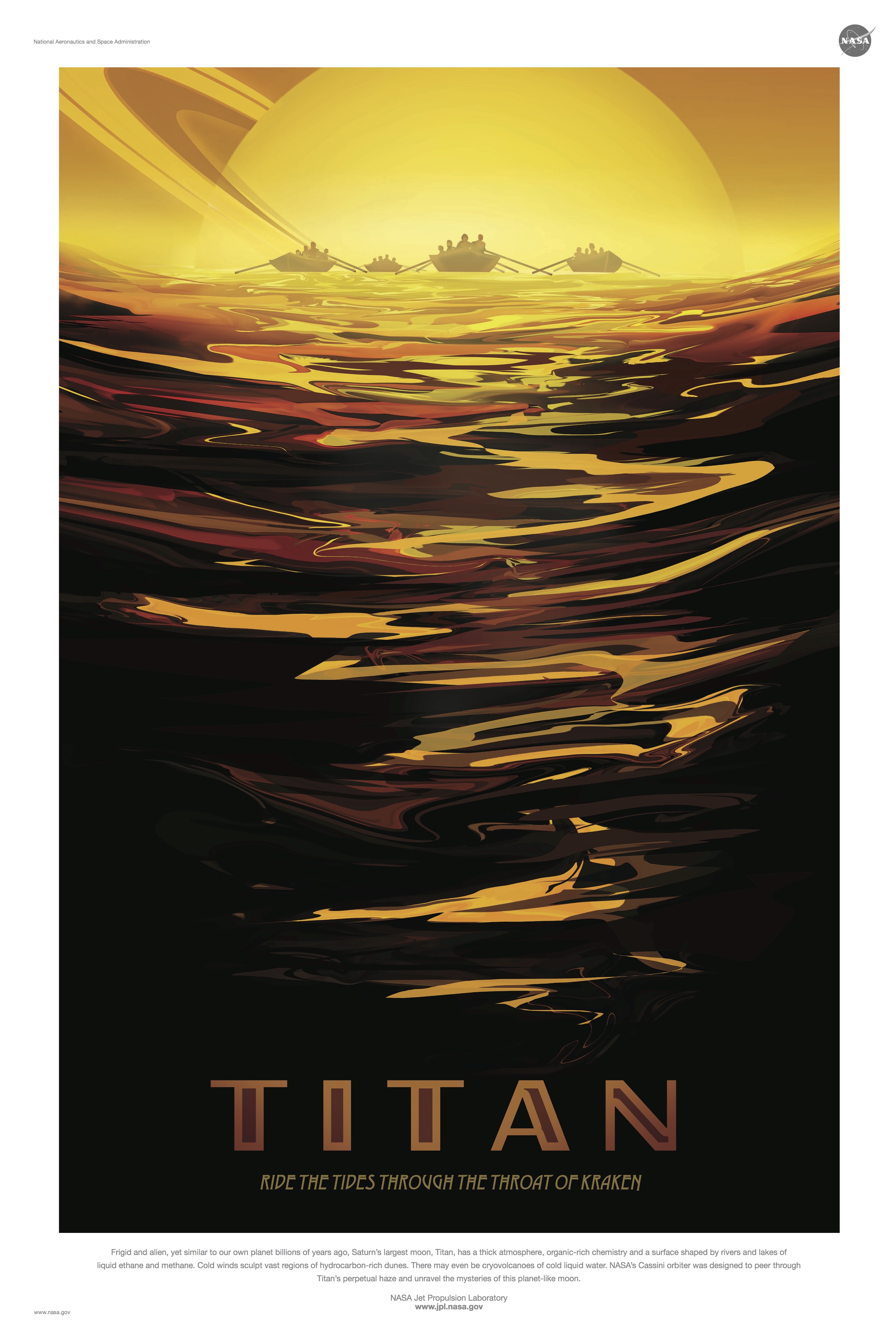

NASA is advertising its upcoming space tourism trip to Saturn’s largest moon Titan with this gorgeous retro poster.
