Alliterations aside, this is a great story of how passion, persistence and persuasiveness can make a real impact. This is especially significant when you look at the triumphant climax to NASA’s unlikely New Horizons mission to Pluto. Over 20 years in the making and fraught with budget cuts and political infighting (NASA is known for its bureaucracy), the mission reached its zenith last week. While thanks go to the many hundreds of engineers and scientists involved from its inception, the mission would not have succeeded without the vision and determination of one person: Alan Stern.

In a music track called “Over the Sea” by the 1980s (and 90s) band Information Society, there is a sample of Star Trek’s Captain Kirk saying,

“In every revolution there is one man with a vision.”

How appropriate.

From Smithsonian

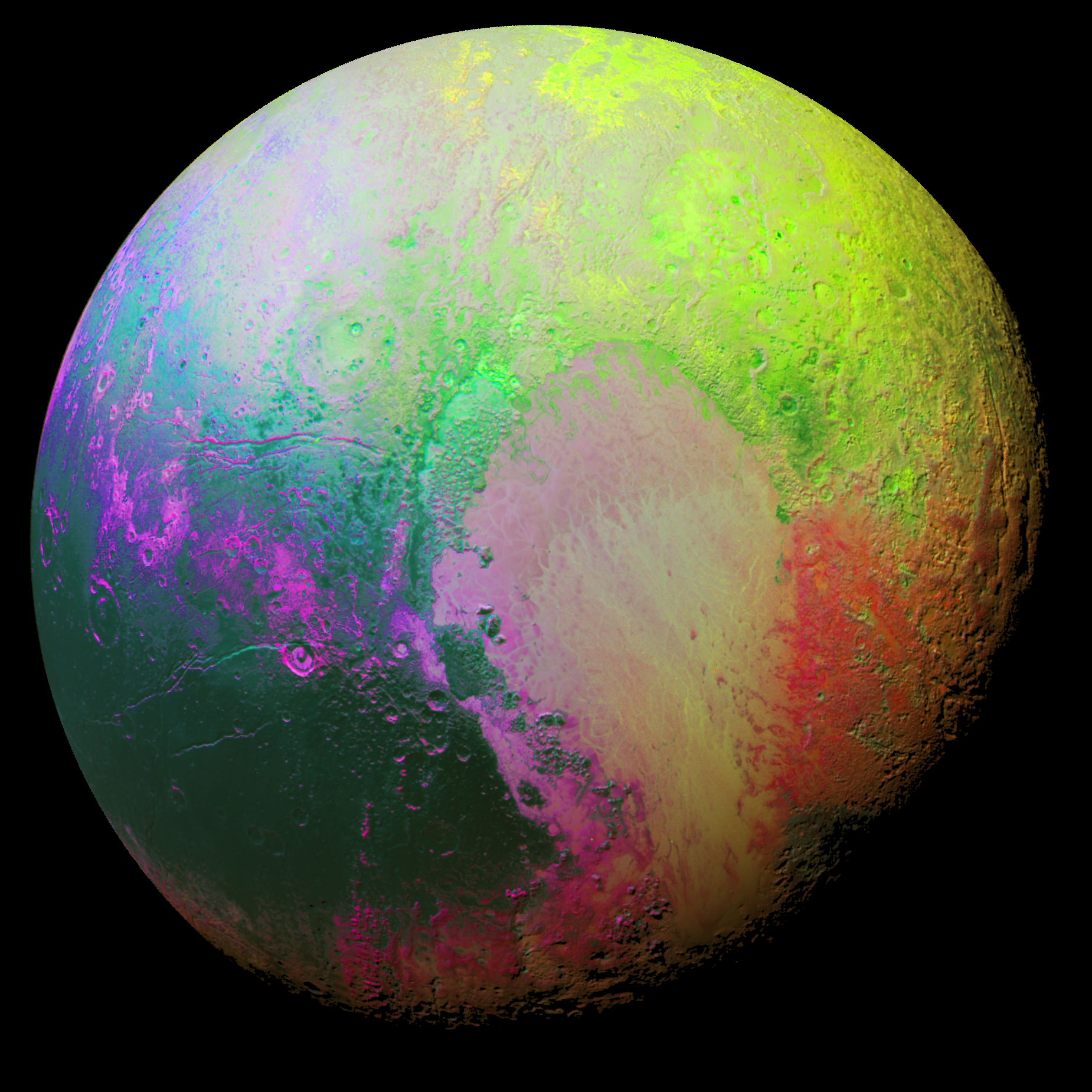

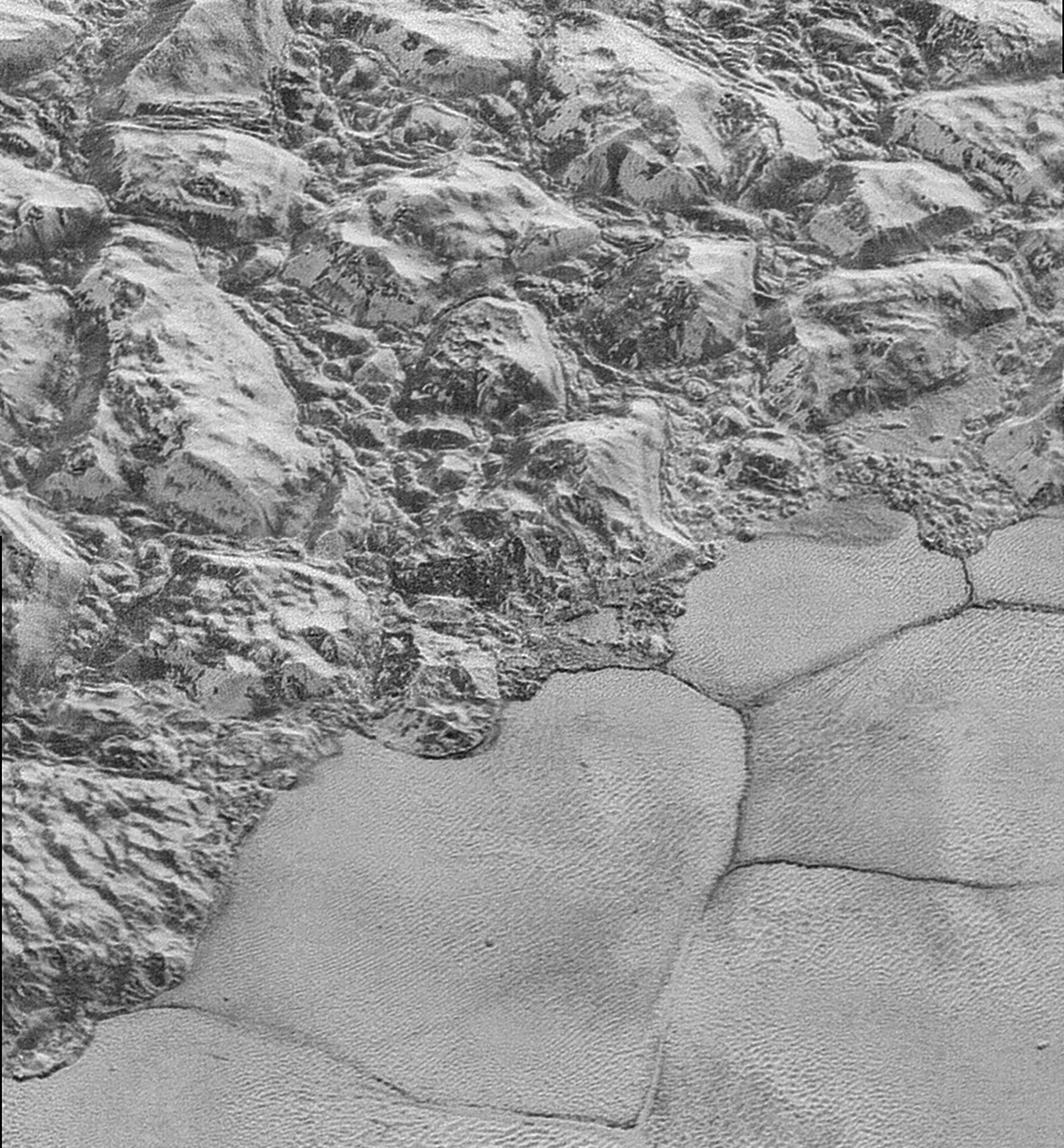

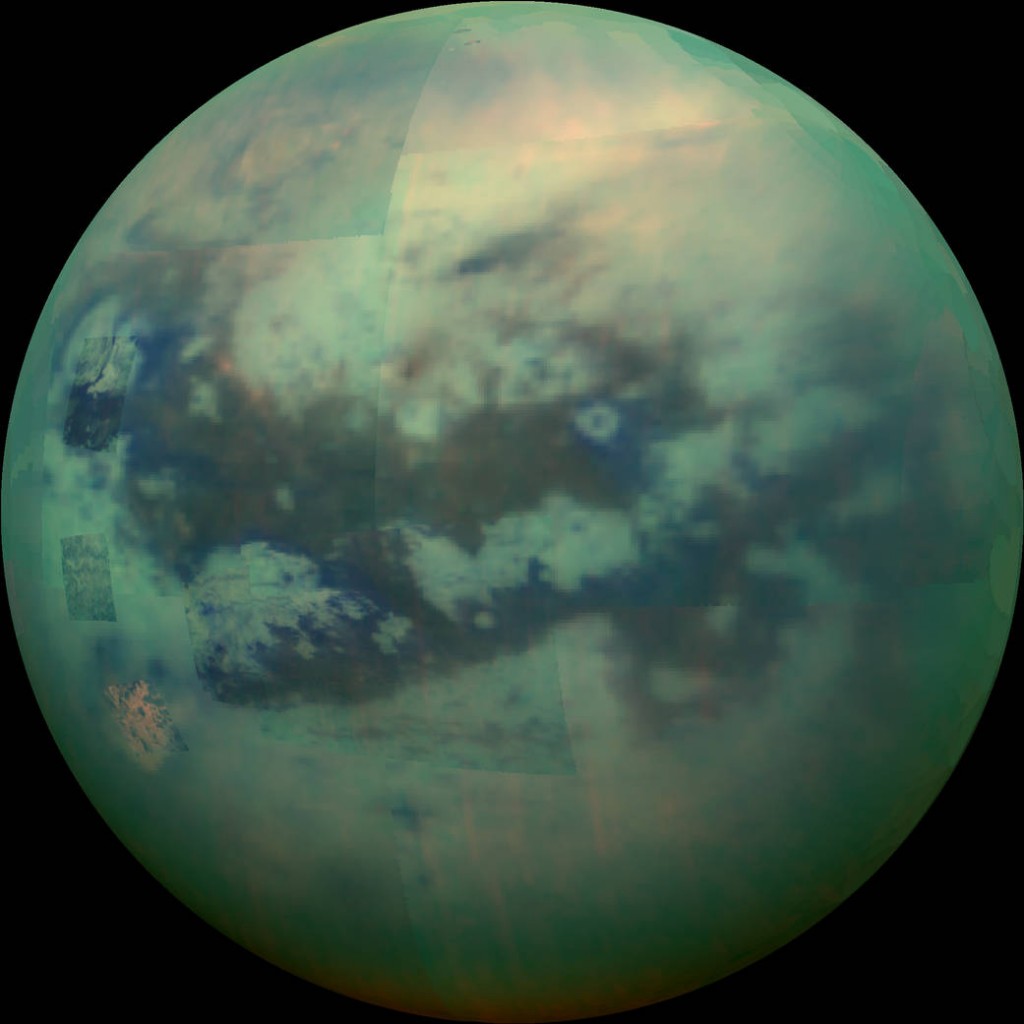

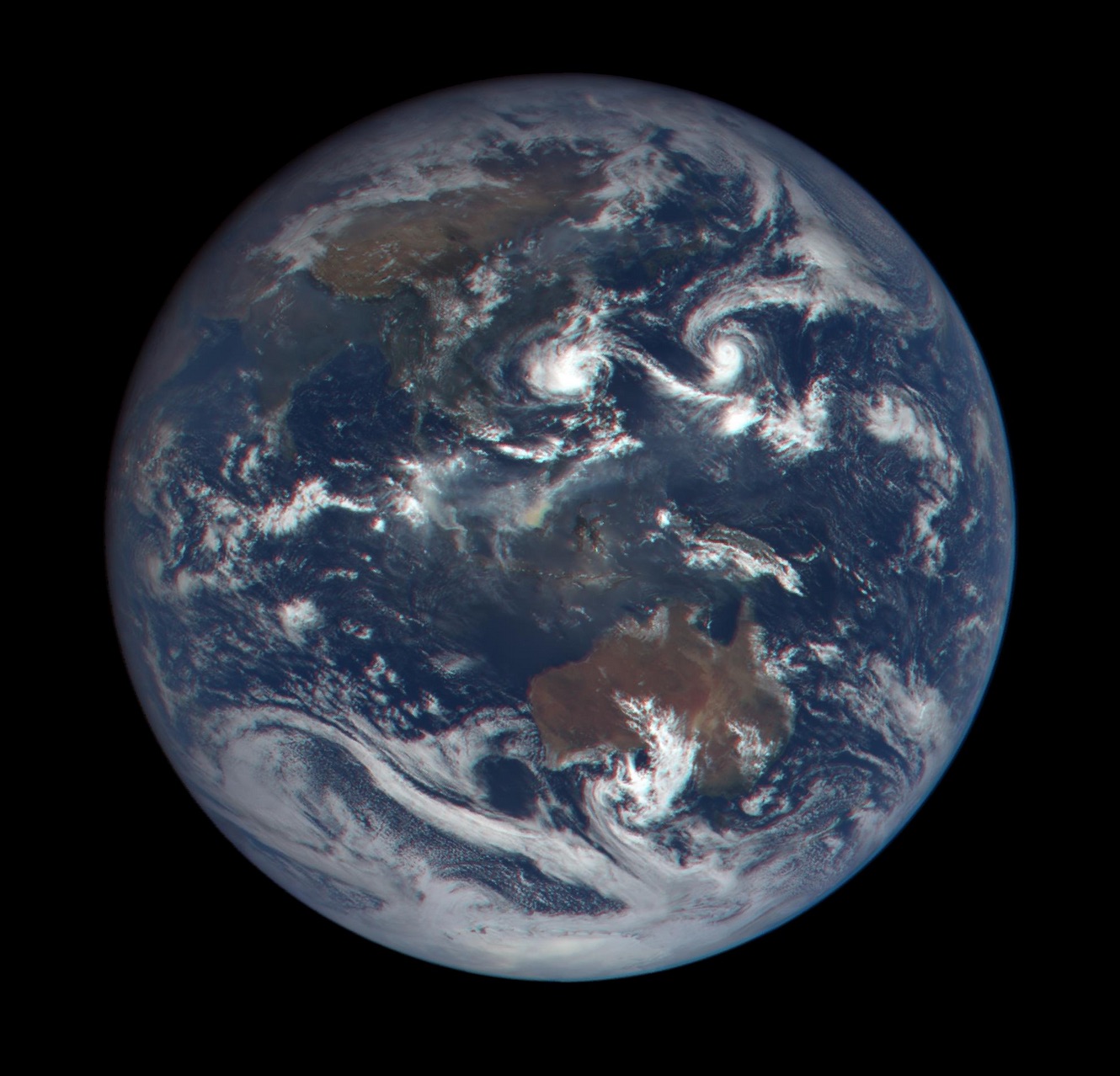

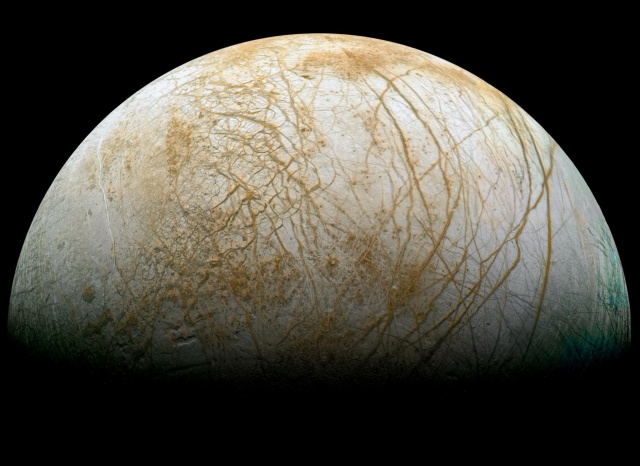

On July 14 at approximately 8 a.m. Eastern time, a half-ton NASA spacecraft that has been racing across the solar system for nine and a half years will finally catch up with tiny Pluto, at three billion miles from the Sun the most distant object that anyone or anything from Earth has ever visited. Invisible to the naked eye, Pluto wasn’t even discovered until 1930, and has been regarded as our solar system’s oddball ever since, completely different from the rocky planets close to the Sun, Earth included, and equally unlike the outer gas giants. This quirky and mysterious little world will swing into dramatic view as the New Horizons spacecraft makes its closest approach, just 6,000 miles away, and onboard cameras snap thousands of photographs. Other instruments will gauge Pluto’s topography, surface and atmospheric chemistry, temperature, magnetic field and more. New Horizons will also take a hard look at Pluto’s five known moons, including Charon, the largest. It might even find other moons, and maybe a ring or two.

It was barely 20 years ago when scientists first learned that Pluto, far from alone at the edge of the solar system, was just one in a vast swarm of small frozen bodies in wide, wide orbit around the Sun, like a ring of debris left at the outskirts of a construction zone. That insight, among others, has propelled the New Horizons mission. Understand Pluto and how it fits in with those remnant bodies, scientists say, and you can better understand the formation and evolution of the solar system itself.

If all goes well, “encounter day,” as the New Horizons team calls it, will be a cork-popping celebration of tremendous scientific and engineering prowess—it’s no small feat to fling a collection of precision instruments through the frigid void at speeds up to 47,000 miles an hour to rendezvous nearly a decade later with an icy sphere about half as wide as the United States is broad. The day will also be a sweet vindication for the leader of the mission, Alan Stern. A 57-year-old astronomer, aeronautical engineer, would-be astronaut and self-described “rabble-rouser,” Stern has spent the better part of his career fighting to get Pluto the attention he thinks it deserves. He began pushing NASA to approve a Pluto mission nearly a quarter of a century ago, then watched in frustration as the agency gave the green light to one Pluto probe after another, only to later cancel them. “It was incredibly frustrating,” he says, “like watching Lucy yank the football away from Charlie Brown, over and over.” Finally, Stern recruited other scientists and influential senators to join his lobbying effort, and because underdog Pluto has long been a favorite of children, proponents of the mission savvily enlisted kids to write to Congress, urging that funding for the spacecraft be approved.

New Horizons mission control is headquartered at Johns Hopkins University’s Applied Physics Laboratory near Baltimore, where Stern and several dozen other Plutonians will be installed for weeks around the big July event, but I caught up with Stern late last year in Boulder at the Southwest Research Institute, where he is an associate vice president for research and development. A picture window in his impressive office looks out onto the Rockies, where he often goes to hike and unwind. Trim and athletic at 5-foot-4, he’s also a runner, a sport he pursues with the exactitude of, well, a rocket scientist. He has calculated his stride rate, and says (only half-joking) that he’d be world-class if only his legs were longer. It wouldn’t be an overstatement to say that he is a polarizing figure in the planetary science community; his single-minded pursuit of Pluto has annoyed some colleagues. So has his passionate defense of Pluto in the years since astronomy officials famously demoted it to a “dwarf planet,” giving it the bum’s rush out of the exclusive solar system club, now limited to the eight biggies.

The timing of that insult, which is how Stern and other jilted Pluto-lovers see it, could not have been more dramatic, coming in August 2006, just months after New Horizons had rocketed into space from Cape Canaveral. What makes Pluto’s demotion even more painfully ironic to Stern is that some of the groundbreaking scientific discoveries that he had predicted greatly strengthened his opponents’ arguments, all while opening the door to a new age of planetary science. In fact, Stern himself used the term “dwarf planet” as early as the 1990s.

The wealthy astronomer Percival Lowell, widely known for insisting there were artificial canals on Mars, first started searching for Pluto at his private observatory in Arizona in 1905. Careful study of planetary orbits had suggested that Neptune was not the only object out there exerting a gravitational tug on Uranus, and Lowell set out to find what he dubbed “Planet X.” He died without success, but a young man named Clyde Tombaugh, who had a passion for astronomy though no college education, arrived at the observatory and picked up the search in 1929. After 7,000 hours staring at some 90 million star images, he caught sight of a new planet on his photographic plates in February 1930. The name Pluto, the Roman god of the underworld, was suggested by an 11-year-old British girl named Venetia Burney, who had been discussing the discovery with her grandfather. The name was unanimously adopted by the Lowell Observatory staff in part because the first two letters are Percival Lowell’s initials.

Pluto’s solitary nature baffled scientists for decades. Shouldn’t there be other, similar objects out beyond Neptune? Why did the solar system appear to run out of material so abruptly? “It seemed just weird that the outer solar system would be so empty, while the inner solar system was filled with planets and asteroids,” recalls David Jewitt, a planetary scientist at UCLA. Throughout the decades various astronomers proposed that there were smaller bodies out there, yet unseen. Comets that periodically sweep in to light up the night sky, they speculated, probably hailed from a belt or disk of debris at the solar system’s outer reaches.

Stern, in a paper published in 1991 in the journal Icarus, argued not only that the belt existed, but also that it contained things as big as Pluto. They were simply too far away, and too dim, to be easily seen. His reasoning: Neptune’s moon Triton is a near-twin of Pluto, and probably orbited the Sun before it was captured by Neptune’s gravity. Uranus has a drastically tilted axis of rotation, probably due to a collision eons ago with a Pluto-size object. That made three Pluto-like objects at least, which suggested to Stern there had to be more. The number of planets in the solar system would someday need to be revised upward, he thought. There were probably hundreds, with the majority, including Pluto, best assigned to a subcategory of “dwarf planets.”

Just a year later, the first object (other than Pluto and Charon) was discovered in that faraway region, called the Kuiper Belt after the Dutch-born astronomer Gerard Kuiper. Found by Jewitt and his colleague, Jane Luu, it’s only about 100 miles across, while Pluto spans 1,430 miles. A decade later, Caltech astronomers Mike Brown and Chad Trujillo discovered an object about half the size of Pluto, large enough to be spherical, which they named Quaoar (pronounced “kwa-war” and named for the creator god in the mythology of the pre-Columbian Tongva people native to the Los Angeles basin). It was followed in quick succession by Haumea, and in 2005, Brown’s group found Eris, about the same size as Pluto and also spherical.

Planetary scientists have spotted many hundreds of smaller Kuiper Belt Objects; there could be as many as ten billion that are a mile across or more. Stern will take a more accurate census of their sizes with the cameras on New Horizons. His simple idea is to map and measure Pluto’s and Charon’s craters, which are signs of collisions with other Kuiper Belt Objects and thus serve as a representative sample. When Pluto is closest to the Sun, frozen surface material evaporates into a temporary atmosphere, some of which escapes into space. This “escape erosion” can erase older craters, so Pluto will provide a recent census. Charon, without this erosion, will offer a record that spans cosmic history. In one leading theory, the original, much denser Kuiper Belt would have formed dozens of planets as big or bigger than Earth, but the orbital changes of Jupiter and Saturn flung most of the building blocks away before that could happen, nipping planet formation in the bud.

By the time New Horizons launched at Cape Canaveral on January 19, 2006, it had become difficult to argue that Pluto was materially different from many of its Kuiper Belt neighbors. Curiously, no strict definition of “planet” existed at the time, so some scientists argued that there should be a size cutoff, to avoid making the list of planets too long. If you called Pluto and the other relatively small bodies something else, you’d be left with a nice tidy eight planets—Mercury through Neptune. In 2000, Neil deGrasse Tyson, director of the Hayden Planetarium in New York City, had famously chosen the latter option, leaving Pluto out of a solar system exhibit.

Then, with New Horizons less than 15 percent of the way to Pluto, members of the International Astronomical Union, responsible for naming and classifying celestial objects, voted at a meeting in Prague to make that arrangement official. Pluto and the others were now to be known as dwarf planets, which, in contrast to Stern’s original meaning, were not planets. They were an entirely different sort of beast. Because he discovered Eris, Caltech’s Brown is sometimes blamed for the demotion. He has said he would have been fine with either outcome, but he did title his 2010 memoir How I Killed Pluto and Why It Had It Coming.

“It’s embarrassing,” recalls Stern, who wasn’t in Prague for the vote. “It’s wrong scientifically and it’s wrong pedagogically.” He said the same sort of things publicly at the time, in language that’s unusually blunt in the world of science. Among the dumbest arguments for demoting Pluto and the others, Stern noted, was the idea that having 20 or more planets would be somehow inconvenient. Also ridiculous, he says, is the notion that a dwarf planet isn’t really a planet. “Is a dwarf evergreen not an evergreen?” he asks.

Stern’s barely concealed contempt for what he considers foolishness of the bureaucratic and scientific varieties hasn’t always endeared him to colleagues. One astronomer I asked about Stern replied, “My mother taught me that if you can’t say anything nice about someone, don’t say anything.” Another said, “His last name is ‘Stern.’ That tells you all you need to know.”

DeGrasse Tyson, for his part, offers measured praise: “When it comes to everything from rousing public sentiment in support of astronomy to advocating space science missions to defending Pluto, Alan Stern is always there.”

Stern also inspires less reserved admiration. “Alan is incredibly creative and incredibly energetic,” says Richard Binzel, an MIT planetary scientist who has known Stern since their graduate-school days. “I don’t know where he gets it.”

Read the entire article here.

Image: New Horizons Principal Investigator Alan Stern of Southwest Research Institute (SwRI), Boulder, CO, celebrates with New Horizons Flight Controllers after they received confirmation from the spacecraft that it had successfully completed the flyby of Pluto, Tuesday, July 14, 2015 in the Mission Operations Center (MOC) of the Johns Hopkins University Applied Physics Laboratory (APL), Laurel, Maryland. Public domain.